Updating terraform providers, adding support and samples for TBFM and STDDS, improved documentation and more tests


Parent

fc92e28b4f

Commit

9a1f0627ec

|

|

@ -57,7 +57,7 @@ jobs:

|

||||||

- name: Setup Terraform

|

- name: Setup Terraform

|

||||||

uses: hashicorp/setup-terraform@v1

|

uses: hashicorp/setup-terraform@v1

|

||||||

with:

|

with:

|

||||||

terraform_version: 1.0.8

|

terraform_version: 1.5.7

|

||||||

cli_config_credentials_token: ${{ secrets.TF_API_TOKEN }}

|

cli_config_credentials_token: ${{ secrets.TF_API_TOKEN }}

|

||||||

|

|

||||||

# Initialize a new or existing Terraform working directory by creating initial files, loading any remote state, downloading modules, etc.

|

# Initialize a new or existing Terraform working directory by creating initial files, loading any remote state, downloading modules, etc.

|

||||||

|

|

@ -149,7 +149,7 @@ jobs:

|

||||||

- name: Setup Terraform

|

- name: Setup Terraform

|

||||||

uses: hashicorp/setup-terraform@v1

|

uses: hashicorp/setup-terraform@v1

|

||||||

with:

|

with:

|

||||||

terraform_version: 1.0.8

|

terraform_version: 1.5.7

|

||||||

cli_config_credentials_token: ${{ secrets.TF_API_TOKEN }}

|

cli_config_credentials_token: ${{ secrets.TF_API_TOKEN }}

|

||||||

|

|

||||||

# Initialize a new or existing Terraform working directory by creating initial files, loading any remote state, downloading modules, etc.

|

# Initialize a new or existing Terraform working directory by creating initial files, loading any remote state, downloading modules, etc.

|

||||||

|

|

|

||||||

|

|

@ -0,0 +1 @@

|

||||||

|

internalSetup.md

|

||||||

|

|

@ -2,6 +2,7 @@

|

||||||

.chef/*.pem

|

.chef/*.pem

|

||||||

.chef/syntaxcache/

|

.chef/syntaxcache/

|

||||||

.chef/encrypted_data_bag_secret

|

.chef/encrypted_data_bag_secret

|

||||||

|

**/.kitchen/*

|

||||||

|

|

||||||

# Ruby

|

# Ruby

|

||||||

.rake_test_cache

|

.rake_test_cache

|

||||||

|

|

|

||||||

|

|

@ -1,13 +1,13 @@

|

||||||

default['kafkaServer']['kafkaRepo'] = 'https://archive.apache.org/dist/kafka/'

|

default['kafkaServer']['kafkaRepo'] = 'https://archive.apache.org/dist/kafka/'

|

||||||

default['kafkaServer']['kafkaVersion'] = '2.3.0'

|

default['kafkaServer']['kafkaVersion'] = '2.3.0'

|

||||||

default['kafkaServer']['solaceCoonector'] = '2.1.0'

|

default['kafkaServer']['solaceCoonector'] = '3.0.0'

|

||||||

|

|

||||||

# TFMS configuration

|

# TFMS configuration

|

||||||

default['kafkaServer']['SWIMEndpointPort'] = 55443

|

default['kafkaServer']['SWIMEndpointPort'] = 55443

|

||||||

default['kafkaServer']['SWIMVPN'] = 'TFMS'

|

|

||||||

|

|

||||||

## TFMS Secrets moved to a data bag

|

## TFMS Secrets moved to a data bag

|

||||||

# default['kafkaServer']['SWIMEndpoint']=''

|

# default['kafkaServer']['SWIMEndpoint']=''

|

||||||

# default['kafkaServer']['SWIMUserNaMe']=''

|

# default['kafkaServer']['SWIMUserNaMe']=''

|

||||||

# default['kafkaServer']['Password']=''

|

# default['kafkaServer']['Password']=''

|

||||||

# default['kafkaServer']['SWIMQueue']=''

|

# default['kafkaServer']['SWIMQueue']=''

|

||||||

|

# default['kafkaServer']['SWIMVPN'] = 'TFMS'

|

||||||

|

|

|

||||||

|

|

@ -4,7 +4,8 @@

|

||||||

#

|

#

|

||||||

# Copyright:: 2019, The Authors, All Rights Reserved.

|

# Copyright:: 2019, The Authors, All Rights Reserved.

|

||||||

|

|

||||||

package %w(java-1.8.0-openjdk-devel git tmux) do

|

# package %w(java-1.8.0-openjdk-devel git tmux) do

|

||||||

|

package %w(java-11-openjdk-devel git tmux) do

|

||||||

action :install

|

action :install

|

||||||

end

|

end

|

||||||

|

|

||||||

|

|

@ -95,6 +96,8 @@ template 'Configure Solace Connector in Stand Alone mode' do

|

||||||

end

|

end

|

||||||

|

|

||||||

TFMSConfig = data_bag_item('connConfig', 'TFMS')

|

TFMSConfig = data_bag_item('connConfig', 'TFMS')

|

||||||

|

TBFMConfig = data_bag_item('connConfig', 'TBFM')

|

||||||

|

STDDSConfig = data_bag_item('connConfig', 'STDDS')

|

||||||

|

|

||||||

template 'Configure TFMS Source Connector' do

|

template 'Configure TFMS Source Connector' do

|

||||||

source 'connect-solace-source.properties.erb'

|

source 'connect-solace-source.properties.erb'

|

||||||

|

|

@ -105,7 +108,36 @@ template 'Configure TFMS Source Connector' do

|

||||||

SWIMEndpoint: TFMSConfig['endPointJMS'],

|

SWIMEndpoint: TFMSConfig['endPointJMS'],

|

||||||

SWIMUserNaMe: TFMSConfig['userName'],

|

SWIMUserNaMe: TFMSConfig['userName'],

|

||||||

Password: TFMSConfig['secret'],

|

Password: TFMSConfig['secret'],

|

||||||

SWIMQueue: TFMSConfig['queueName']

|

SWIMQueue: TFMSConfig['queueName'],

|

||||||

|

SWIMVPN: TFMSConfig['vpn']

|

||||||

|

)

|

||||||

|

end

|

||||||

|

|

||||||

|

template 'Configure TBFM Source Connector' do

|

||||||

|

source 'connect-solace-source.properties.erb'

|

||||||

|

path '/opt/kafka/config/connect-solace-tbfm-source.properties'

|

||||||

|

variables(

|

||||||

|

connectorName: 'solaceConnectorTBFM',

|

||||||

|

kafkaTopic: 'tbfm',

|

||||||

|

SWIMEndpoint: TBFMConfig['endPointJMS'],

|

||||||

|

SWIMUserNaMe: TBFMConfig['userName'],

|

||||||

|

Password: TBFMConfig['secret'],

|

||||||

|

SWIMQueue: TBFMConfig['queueName'],

|

||||||

|

SWIMVPN: TBFMConfig['vpn']

|

||||||

|

)

|

||||||

|

end

|

||||||

|

|

||||||

|

template 'Configure STDDS Source Connector' do

|

||||||

|

source 'connect-solace-source.properties.erb'

|

||||||

|

path '/opt/kafka/config/connect-solace-stdds-source.properties'

|

||||||

|

variables(

|

||||||

|

connectorName: 'solaceConnectorSTDDS',

|

||||||

|

kafkaTopic: 'stdds',

|

||||||

|

SWIMEndpoint: STDDSConfig['endPointJMS'],

|

||||||

|

SWIMUserNaMe: STDDSConfig['userName'],

|

||||||

|

Password: STDDSConfig['secret'],

|

||||||

|

SWIMQueue: STDDSConfig['queueName'],

|

||||||

|

SWIMVPN: STDDSConfig['vpn']

|

||||||

)

|

)

|

||||||

end

|

end

|

||||||

|

|

||||||

|

|

@ -146,6 +178,18 @@ execute 'Create TFMS topic' do

|

||||||

not_if { ::Dir.exist?('/tmp/kafka-logs/tfms-0') }

|

not_if { ::Dir.exist?('/tmp/kafka-logs/tfms-0') }

|

||||||

end

|

end

|

||||||

|

|

||||||

|

execute 'Create TBFM topic' do

|

||||||

|

command '/opt/kafka/bin/kafka-topics.sh --create --bootstrap-server localhost:9092 --replication-factor 1 --partitions 1 --topic tbfm'

|

||||||

|

action :run

|

||||||

|

not_if { ::Dir.exist?('/tmp/kafka-logs/tbfm-0') }

|

||||||

|

end

|

||||||

|

|

||||||

|

execute 'Create STDDS topic' do

|

||||||

|

command '/opt/kafka/bin/kafka-topics.sh --create --bootstrap-server localhost:9092 --replication-factor 1 --partitions 1 --topic stdds'

|

||||||

|

action :run

|

||||||

|

not_if { ::Dir.exist?('/tmp/kafka-logs/stdds-0') }

|

||||||

|

end

|

||||||

|

|

||||||

file 'clean' do

|

file 'clean' do

|

||||||

path local_path

|

path local_path

|

||||||

action :delete

|

action :delete

|

||||||

|

|

|

||||||

|

|

@ -13,7 +13,11 @@ describe 'KafkaServer::Install' do

|

||||||

end

|

end

|

||||||

|

|

||||||

before do

|

before do

|

||||||

stub_data_bag_item('connConfig', 'TFMS').and_return(id: 'TFMS', userName: '', queueName: '', endPointJMS: '', connectionFactory: '', secret: '')

|

stub_data_bag_item('connConfig', 'TFMS').and_return(id: 'TFMS', userName: '', queueName: '', endPointJMS: '', connectionFactory: '', secret: '', vpn: '')

|

||||||

|

|

||||||

|

stub_data_bag_item('connConfig', 'TBFM').and_return(id: 'TBFM', userName: '', queueName: '', endPointJMS: '', connectionFactory: '', secret: '', vpn: '')

|

||||||

|

|

||||||

|

stub_data_bag_item('connConfig', 'STDDS').and_return(id: 'STDDS', userName: '', queueName: '', endPointJMS: '', connectionFactory: '', secret: '', vpn: '')

|

||||||

end

|

end

|

||||||

|

|

||||||

it 'converges successfully' do

|

it 'converges successfully' do

|

||||||

|

|

@ -21,7 +25,7 @@ describe 'KafkaServer::Install' do

|

||||||

end

|

end

|

||||||

|

|

||||||

it 'installs java, git and tmux' do

|

it 'installs java, git and tmux' do

|

||||||

expect(chef_run).to install_package 'java-1.8.0-openjdk-devel, git, tmux'

|

expect(chef_run).to install_package 'java-11-openjdk-devel, git, tmux'

|

||||||

end

|

end

|

||||||

|

|

||||||

it 'creates kafkaAdmin group' do

|

it 'creates kafkaAdmin group' do

|

||||||

|

|

@ -61,10 +65,36 @@ describe 'KafkaServer::Install' do

|

||||||

)

|

)

|

||||||

end

|

end

|

||||||

|

|

||||||

it 'creates porper permissions' do

|

it 'creates proper permissions' do

|

||||||

expect(chef_run).to run_execute('chown -R kafkaAdmin:kafkaAdmin /opt/kafka*')

|

expect(chef_run).to run_execute('chown -R kafkaAdmin:kafkaAdmin /opt/kafka*')

|

||||||

end

|

end

|

||||||

|

|

||||||

|

it 'gets solace connector' do

|

||||||

|

expect(chef_run).to create_remote_file('/tmp/solace-connector.zip').with(

|

||||||

|

source: 'https://solaceproducts.github.io/pubsubplus-connector-kafka-source/downloads/pubsubplus-connector-kafka-source-3.0.0.zip'

|

||||||

|

)

|

||||||

|

end

|

||||||

|

|

||||||

|

it 'copies solace connector to kafka libs' do

|

||||||

|

expect(chef_run).to run_execute('mv /tmp/solace-connector/pubsubplus-connector-kafka-source-3.0.0/lib/*.jar /opt/kafka/libs/')

|

||||||

|

end

|

||||||

|

|

||||||

|

it 'creates Solace Connector in Stand Alone mode config file' do

|

||||||

|

expect(chef_run).to create_template('/opt/kafka/config/connect-standalone.properties')

|

||||||

|

end

|

||||||

|

|

||||||

|

it 'creates TFMS config file' do

|

||||||

|

expect(chef_run).to create_template('/opt/kafka/config/connect-solace-tfms-source.properties')

|

||||||

|

end

|

||||||

|

|

||||||

|

it 'creates TBFM config file' do

|

||||||

|

expect(chef_run).to create_template('/opt/kafka/config/connect-solace-tbfm-source.properties')

|

||||||

|

end

|

||||||

|

|

||||||

|

it 'creates STDDS config file' do

|

||||||

|

expect(chef_run).to create_template('/opt/kafka/config/connect-solace-stdds-source.properties')

|

||||||

|

end

|

||||||

|

|

||||||

it 'creates zookeeper.service' do

|

it 'creates zookeeper.service' do

|

||||||

expect(chef_run).to create_template('/etc/systemd/system/zookeeper.service').with(

|

expect(chef_run).to create_template('/etc/systemd/system/zookeeper.service').with(

|

||||||

source: 'zookeeper.service.erb',

|

source: 'zookeeper.service.erb',

|

||||||

|

|

|

||||||

|

|

@ -12,8 +12,15 @@ describe 'KafkaServer::default' do

|

||||||

# https://github.com/chefspec/fauxhai/blob/master/PLATFORMS.md

|

# https://github.com/chefspec/fauxhai/blob/master/PLATFORMS.md

|

||||||

platform 'centos', '7'

|

platform 'centos', '7'

|

||||||

|

|

||||||

|

before do

|

||||||

|

stub_data_bag_item('connConfig', 'TFMS').and_return(id: 'TFMS', userName: '', queueName: '', endPointJMS: '', connectionFactory: '', secret: '', vpn: '')

|

||||||

|

|

||||||

|

stub_data_bag_item('connConfig', 'TBFM').and_return(id: 'TBFM', userName: '', queueName: '', endPointJMS: '', connectionFactory: '', secret: '', vpn: '')

|

||||||

|

|

||||||

|

stub_data_bag_item('connConfig', 'STDDS').and_return(id: 'STDDS', userName: '', queueName: '', endPointJMS: '', connectionFactory: '', secret: '', vpn: '')

|

||||||

|

end

|

||||||

|

|

||||||

it 'converges successfully' do

|

it 'converges successfully' do

|

||||||

stub_data_bag_item('connConfig', 'TFMS').and_return(id: 'TFMS', userName: '', queueName: '', endPointJMS: '', connectionFactory: '', secret: '')

|

|

||||||

expect { chef_run }.to_not raise_error

|

expect { chef_run }.to_not raise_error

|

||||||

end

|

end

|

||||||

end

|

end

|

||||||

|

|

|

||||||

|

|

@ -8,7 +8,7 @@ kafka.topic=<%= @kafkaTopic %>

|

||||||

sol.host=<%= @SWIMEndpoint %>:<%= node[:kafkaServer][:SWIMEndpointPort] %>

|

sol.host=<%= @SWIMEndpoint %>:<%= node[:kafkaServer][:SWIMEndpointPort] %>

|

||||||

sol.username=<%= @SWIMUserNaMe %>

|

sol.username=<%= @SWIMUserNaMe %>

|

||||||

sol.password=<%= @Password %>

|

sol.password=<%= @Password %>

|

||||||

sol.vpn_name=<%= node[:kafkaServer][:SWIMVPN] %>

|

sol.vpn_name=<%= @SWIMVPN %>

|

||||||

sol.queue=<%= @SWIMQueue %>

|

sol.queue=<%= @SWIMQueue %>

|

||||||

sol.message_callback_on_reactor=false

|

sol.message_callback_on_reactor=false

|

||||||

# sol.topics=soltest

|

# sol.topics=soltest

|

||||||

|

|

|

||||||

|

|
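Review note: the template hunk above replaces the node-level `SWIMVPN` attribute with a per-connector `@SWIMVPN` variable, so each data source can use its own VPN. The following is a minimal Python sketch of that substitution, not the cookbook's actual rendering path. It assumes a data bag item shaped like the ones added in this commit; `render_source_properties` and the sample values are hypothetical, and the port default mirrors `SWIMEndpointPort` from the attributes file.

```python
def render_source_properties(item, kafka_topic, port=55443):
    """Return the connector properties visible in the hunk above as one string.

    `item` is a dict with the data bag keys used by the recipe:
    endPointJMS, userName, secret, vpn, queueName.
    """
    lines = [
        f"kafka.topic={kafka_topic}",
        f"sol.host={item['endPointJMS']}:{port}",
        f"sol.username={item['userName']}",
        f"sol.password={item['secret']}",
        # Previously read from node[:kafkaServer][:SWIMVPN]; now per data source.
        f"sol.vpn_name={item['vpn']}",
        f"sol.queue={item['queueName']}",
    ]
    return "\n".join(lines)


# Hypothetical values, for illustration only.
sample_item = {
    "id": "TBFM",
    "userName": "example-user",
    "queueName": "example.queue",
    "endPointJMS": "tcps://swim.example.invalid",
    "secret": "example-secret",
    "vpn": "TBFM",
}

print(render_source_properties(sample_item, "tbfm"))
```

Because the VPN now comes from the data bag item, an item without a `vpn` key would render an empty `sol.vpn_name` in the ERB template, which is why the spec stubs in this commit all gain `vpn: ''`.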

@ -0,0 +1,9 @@

|

||||||

|

{

|

||||||

|

"id": "STDDS",

|

||||||

|

"userName": "",

|

||||||

|

"queueName": "",

|

||||||

|

"endPointJMS": "",

|

||||||

|

"connectionFactory": "",

|

||||||

|

"secret": "",

|

||||||

|

"vpn": ""

|

||||||

|

}

|

||||||

|

|

@ -0,0 +1,9 @@

|

||||||

|

{

|

||||||

|

"id": "TBFM",

|

||||||

|

"userName": "",

|

||||||

|

"queueName": "",

|

||||||

|

"endPointJMS": "",

|

||||||

|

"connectionFactory": "",

|

||||||

|

"secret": "",

|

||||||

|

"vpn": ""

|

||||||

|

}

|

||||||

|

|

@ -4,5 +4,6 @@

|

||||||

"queueName": "",

|

"queueName": "",

|

||||||

"endPointJMS": "",

|

"endPointJMS": "",

|

||||||

"connectionFactory": "",

|

"connectionFactory": "",

|

||||||

"secret": ""

|

"secret": "",

|

||||||

|

"vpn": ""

|

||||||

}

|

}

|

||||||

|

|
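Review note: all three `connConfig` items (TFMS, TBFM, STDDS) now share the same seven keys, and the recipe reads `vpn` from each of them. A small check like the sketch below could catch an item that has not been updated yet. It is an assumption-laden illustration: `missing_keys` and `REQUIRED_KEYS` are hypothetical names, and the key list is taken only from the JSON shown in this diff.

```python
import json

# Keys present in every data bag item shown in this commit.
REQUIRED_KEYS = {
    "id",
    "userName",
    "queueName",
    "endPointJMS",
    "connectionFactory",
    "secret",
    "vpn",
}


def missing_keys(raw_json):
    """Return the sorted list of required keys absent from a data bag item."""
    item = json.loads(raw_json)
    return sorted(REQUIRED_KEYS - set(item))


# The pre-commit TFMS item had no "vpn" key.
old_tfms = (
    '{"id": "TFMS", "userName": "", "queueName": "", '
    '"endPointJMS": "", "connectionFactory": "", "secret": ""}'
)
print(missing_keys(old_tfms))
```

The check only looks at key presence, not at values, since the committed items intentionally ship with empty strings.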

@ -3,7 +3,7 @@

|

||||||

# The InSpec reference, with examples and extensive documentation, can be

|

# The InSpec reference, with examples and extensive documentation, can be

|

||||||

# found at https://www.inspec.io/docs/reference/resources/

|

# found at https://www.inspec.io/docs/reference/resources/

|

||||||

|

|

||||||

%w(java-1.8.0-openjdk-devel git tmux).each do |package|

|

%w(java-11-openjdk-devel git tmux).each do |package|

|

||||||

describe package(package) do

|

describe package(package) do

|

||||||

it { should be_installed }

|

it { should be_installed }

|

||||||

end

|

end

|

||||||

|

|

@ -36,7 +36,7 @@ describe service('kafka') do

|

||||||

it { should be_running }

|

it { should be_running }

|

||||||

end

|

end

|

||||||

|

|

||||||

describe file('/opt/kafka/libs/pubsubplus-connector-kafka-source-2.1.0.jar') do

|

describe file('/opt/kafka/libs/pubsubplus-connector-kafka-source-3.0.0.jar') do

|

||||||

it { should exist }

|

it { should exist }

|

||||||

end

|

end

|

||||||

|

|

||||||

|

|

@ -47,3 +47,11 @@ end

|

||||||

describe file('/opt/kafka/config/connect-solace-tfms-source.properties') do

|

describe file('/opt/kafka/config/connect-solace-tfms-source.properties') do

|

||||||

it { should exist }

|

it { should exist }

|

||||||

end

|

end

|

||||||

|

|

||||||

|

describe file('/opt/kafka/config/connect-solace-tbfm-source.properties') do

|

||||||

|

it { should exist }

|

||||||

|

end

|

||||||

|

|

||||||

|

describe file('/opt/kafka/config/connect-solace-stdds-source.properties') do

|

||||||

|

it { should exist }

|

||||||

|

end

|

||||||

|

|

|

||||||

|

|

@ -0,0 +1,9 @@

|

||||||

|

{

|

||||||

|

"id": "STDDS",

|

||||||

|

"userName": "",

|

||||||

|

"queueName": "",

|

||||||

|

"endPointJMS": "",

|

||||||

|

"connectionFactory": "",

|

||||||

|

"secret": "",

|

||||||

|

"vpn": ""

|

||||||

|

}

|

||||||

|

|

@ -0,0 +1,9 @@

|

||||||

|

{

|

||||||

|

"id": "TBFM",

|

||||||

|

"userName": "",

|

||||||

|

"queueName": "",

|

||||||

|

"endPointJMS": "",

|

||||||

|

"connectionFactory": "",

|

||||||

|

"secret": "",

|

||||||

|

"vpn": ""

|

||||||

|

}

|

||||||

|

|

@ -4,5 +4,6 @@

|

||||||

"queueName": "",

|

"queueName": "",

|

||||||

"endPointJMS": "",

|

"endPointJMS": "",

|

||||||

"connectionFactory": "",

|

"connectionFactory": "",

|

||||||

"secret": ""

|

"secret": "",

|

||||||

|

"vpn": ""

|

||||||

}

|

}

|

||||||

|

|

@ -2,9 +2,21 @@

|

||||||

# Manual edits may be lost in future updates.

|

# Manual edits may be lost in future updates.

|

||||||

|

|

||||||

provider "registry.terraform.io/hashicorp/azurerm" {

|

provider "registry.terraform.io/hashicorp/azurerm" {

|

||||||

version = "2.80.0"

|

version = "3.74.0"

|

||||||

constraints = "2.80.0"

|

constraints = "3.74.0"

|

||||||

hashes = [

|

hashes = [

|

||||||

"h1:hdeAgSZUaq54ornF5yquG/c91U+Ou6cMaQTQj3TVLRc=",

|

"h1:ETVZfmulZQ435+lgFCkZRpfVOLyAxfDOwbPXFg3aLLQ=",

|

||||||

|

"zh:0424c70152f949da1ec52ba96d20e5fd32fd22d9bd9203ce045d5f6aab3d20fc",

|

||||||

|

"zh:16dbf581d10f8e7937185bcdcceb4f91d08c919e452fb8da7580071288c8c397",

|

||||||

|

"zh:3019103bc2c3b4e185f5c65696c349697644c968f5c085af5505fed6d01c4241",

|

||||||

|

"zh:49bb56ebaed6653fdb913c2b2bb74fc8b5399e7258d1e89084f72c44ea1130dd",

|

||||||

|

"zh:85547666517f899d88620bd23a000a8f43c7dc93587c350eb1ea17bcb3e645c7",

|

||||||

|

"zh:8bed8b646ff1822d8764de68b56b71e5dd971a4b77eba80d47f400a530800bea",

|

||||||

|

"zh:8bfa6c70c004ba05ebce47f74f49ce872c28a68a18bb71b281a9681bcbbdbfa1",

|

||||||

|

"zh:a2ae9e38fda0695fb8aa810e4f1ce4b104bfda651a87923b307bb1728680d8b6",

|

||||||

|

"zh:beac1efe32f99072c892095f5ff46e40d6852b66679a03bc3acbe1b90fb1f653",

|

||||||

|

"zh:d8a6ca20e49ebe7ea5688d91233d571e2c2ccc3e41000c39a7d7031df209ea8e",

|

||||||

|

"zh:f569b65999264a9416862bca5cd2a6177d94ccb0424f3a4ef424428912b9cb3c",

|

||||||

|

"zh:f937b5fdf49b072c0347408d0a1c5a5d822dae1a23252915930e5a82d1d8ce8b",

|

||||||

]

|

]

|

||||||

}

|

}

|

||||||

|

|

|

||||||

|

|

@ -41,9 +41,9 @@ More information on this topic [here](https://docs.microsoft.com/en-us/azure/vir

|

||||||

|

|

||||||

This terraform script has been tested using the following versions:

|

This terraform script has been tested using the following versions:

|

||||||

|

|

||||||

- Terraform =>1.0.8

|

- Terraform =>1.5.7

|

||||||

- Azure provider 2.80.0

|

- Azure provider 3.74.0

|

||||||

- Azure CLI 2.29.0

|

- Azure CLI 2.52.0

|

||||||

|

|

||||||

## VM Authentication

|

## VM Authentication

|

||||||

|

|

||||||

|

|

@ -72,6 +72,13 @@ This terraform script uses the [cloud-init](https://cloudinit.readthedocs.io/en/

|

||||||

|

|

||||||

## Useful Apache Kafka commands

|

## Useful Apache Kafka commands

|

||||||

|

|

||||||

|

If all goes well, systemd should report running state on both service's status

|

||||||

|

|

||||||

|

```ssh

|

||||||

|

sudo systemctl status zookeeper.service

|

||||||

|

sudo systemctl status kafka.service

|

||||||

|

```

|

||||||

|

|

||||||

Create a topic

|

Create a topic

|

||||||

|

|

||||||

```ssh

|

```ssh

|

||||||

|

|

|

||||||

|

|

@ -6,9 +6,12 @@ terraform {

|

||||||

name = "AfG-Infra"

|

name = "AfG-Infra"

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

required_version = "= 1.0.8"

|

required_version = "= 1.5.7"

|

||||||

required_providers {

|

required_providers {

|

||||||

azurerm = "=2.80.0"

|

azurerm = {

|

||||||

|

source = "hashicorp/azurerm"

|

||||||

|

version = "= 3.74.0"

|

||||||

|

}

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

|

|

|

||||||

|

|

@ -6,7 +6,7 @@ output "generic_RG" {

|

||||||

output "subnets" {

|

output "subnets" {

|

||||||

value = {

|

value = {

|

||||||

for subnet in azurerm_subnet.subnets :

|

for subnet in azurerm_subnet.subnets :

|

||||||

subnet.name => subnet.address_prefix

|

subnet.name => subnet.address_prefixes

|

||||||

}

|

}

|

||||||

description = "subnets created."

|

description = "subnets created."

|

||||||

}

|

}

|

||||||

|

|

@ -14,7 +14,7 @@ output "subnets" {

|

||||||

output "dataBricksSubnets" {

|

output "dataBricksSubnets" {

|

||||||

value = {

|

value = {

|

||||||

for subnet in azurerm_subnet.dbSubnets :

|

for subnet in azurerm_subnet.dbSubnets :

|

||||||

subnet.name => subnet.address_prefix

|

subnet.name => subnet.address_prefixes

|

||||||

}

|

}

|

||||||

description = "Databricks dedicated subnets."

|

description = "Databricks dedicated subnets."

|

||||||

}

|

}

|

||||||

|

|

|

||||||

|

|

@ -68,7 +68,7 @@ resource "azurerm_linux_virtual_machine" "kafkaServer" {

|

||||||

node_name: "${var.suffix}-KafkaServer"

|

node_name: "${var.suffix}-KafkaServer"

|

||||||

run_list: "recipe[KafkaServer]"

|

run_list: "recipe[KafkaServer]"

|

||||||

validation_name: "chambras-validator"

|

validation_name: "chambras-validator"

|

||||||

validation_cert: "${file("${var.validatorCertPath}")}"

|

validation_cert: "${file(var.validatorCertPath)}"

|

||||||

|

|

||||||

output: {all: '| tee -a /var/log/cloud-init-output.log'}

|

output: {all: '| tee -a /var/log/cloud-init-output.log'}

|

||||||

runcmd:

|

runcmd:

|

||||||

|

|

|

||||||

|

|

@ -1,27 +1,36 @@

|

||||||

# This file is maintained automatically by "terraform init".

|

# This file is maintained automatically by "terraform init".

|

||||||

# Manual edits may be lost in future updates.

|

# Manual edits may be lost in future updates.

|

||||||

|

|

||||||

provider "registry.terraform.io/databrickslabs/databricks" {

|

provider "registry.terraform.io/databricks/databricks" {

|

||||||

version = "0.3.5"

|

version = "1.26.0"

|

||||||

constraints = "0.3.5"

|

constraints = "1.26.0"

|

||||||

hashes = [

|

hashes = [

|

||||||

"h1:abDOTQTOQIB8DJY9c+Sx5I13WG15yv/zQHBBqkrdDLA=",

|

"h1:nreSKmyONucOjKQlERZWmn9W4MvWDykKENWQqEanAnQ=",

|

||||||

"zh:0a74e3e6666206c63e6bc45de46d79848e8b530072a3a0d11f01a16445d82e9f",

|

"zh:01651d78dfaf51de205b1af0bd967ddfdec0f7fba534aa4676f82ad432bc1f40",

|

||||||

"zh:0b50cb2074363fc41642c3caededefc840bb63b1db28310b406376102582a652",

|

"zh:237803262c10388d632d02013df5b7cd4f5fc0f72f5b95983649cdc6d0c44426",

|

||||||

"zh:256e3b4a3af52852bad55af085efe95ea0ae9fd5383def4a475c12bfe97f93e6",

|

"zh:26cbb4a9f76266ae71daaec137f497061ea8d67ffa8d63a8e3ee626e14d95da5",

|

||||||

"zh:48a86ca88df1e79518cf2fcd831f7cab25eb4524c5b108471b6d8f634333df8e",

|

"zh:43816b9119214469e0e3f220953428caeb3cdefa673a4d0a0062ed145db1932b",

|

||||||

"zh:6bdf337c9e950fb9be7c5c907c8c20c340b64abb6426e7f4fe21d39842db7296",

|

"zh:9fa3015df65cb320c053e7ba3dc987a03b34e2d990bd4843b4c0f021f74554c5",

|

||||||

"zh:80b7983152b95c0a02438f418cf77dc384c08ea0fe89bdb9ca3a440db97fe805",

|

"zh:b286e0bd6f2bbabc2da491468eee716a462dcd1ae70befa886b3c1896ff57544",

|

||||||

"zh:8515aa8c7595307c24257fffffa623a7a32ff8930c6405530b8caa28de0db25f",

|

|

||||||

"zh:c221cd2b62b6ac00159cf741f5994c09b455e6ed4ec72fdec8961f66f3c1a4d9",

|

|

||||||

"zh:e4e8aeaac861c462f6daf9c54274796b2f47c9b23effa25e6222e370be0c926f",

|

|

||||||

]

|

]

|

||||||

}

|

}

|

||||||

|

|

||||||

provider "registry.terraform.io/hashicorp/azurerm" {

|

provider "registry.terraform.io/hashicorp/azurerm" {

|

||||||

version = "2.80.0"

|

version = "3.74.0"

|

||||||

constraints = "2.80.0"

|

constraints = "3.74.0"

|

||||||

hashes = [

|

hashes = [

|

||||||

"h1:hdeAgSZUaq54ornF5yquG/c91U+Ou6cMaQTQj3TVLRc=",

|

"h1:ETVZfmulZQ435+lgFCkZRpfVOLyAxfDOwbPXFg3aLLQ=",

|

||||||

|

"zh:0424c70152f949da1ec52ba96d20e5fd32fd22d9bd9203ce045d5f6aab3d20fc",

|

||||||

|

"zh:16dbf581d10f8e7937185bcdcceb4f91d08c919e452fb8da7580071288c8c397",

|

||||||

|

"zh:3019103bc2c3b4e185f5c65696c349697644c968f5c085af5505fed6d01c4241",

|

||||||

|

"zh:49bb56ebaed6653fdb913c2b2bb74fc8b5399e7258d1e89084f72c44ea1130dd",

|

||||||

|

"zh:85547666517f899d88620bd23a000a8f43c7dc93587c350eb1ea17bcb3e645c7",

|

||||||

|

"zh:8bed8b646ff1822d8764de68b56b71e5dd971a4b77eba80d47f400a530800bea",

|

||||||

|

"zh:8bfa6c70c004ba05ebce47f74f49ce872c28a68a18bb71b281a9681bcbbdbfa1",

|

||||||

|

"zh:a2ae9e38fda0695fb8aa810e4f1ce4b104bfda651a87923b307bb1728680d8b6",

|

||||||

|

"zh:beac1efe32f99072c892095f5ff46e40d6852b66679a03bc3acbe1b90fb1f653",

|

||||||

|

"zh:d8a6ca20e49ebe7ea5688d91233d571e2c2ccc3e41000c39a7d7031df209ea8e",

|

||||||

|

"zh:f569b65999264a9416862bca5cd2a6177d94ccb0424f3a4ef424428912b9cb3c",

|

||||||

|

"zh:f937b5fdf49b072c0347408d0a1c5a5d822dae1a23252915930e5a82d1d8ce8b",

|

||||||

]

|

]

|

||||||

}

|

}

|

||||||

|

|

|

||||||

|

|

@ -31,10 +31,10 @@ More information on this topic [here](https://docs.microsoft.com/en-us/azure/vir

|

||||||

|

|

||||||

This terraform script has been tested using the following versions:

|

This terraform script has been tested using the following versions:

|

||||||

|

|

||||||

- Terraform =>1.0.8

|

- Terraform =>1.5.7

|

||||||

- Azure provider 2.80.0

|

- Azure provider 3.74.0

|

||||||

- Databricks provider 0.3.5

|

- Databricks provider 1.26.0

|

||||||

- Azure CLI 2.29.0

|

- Azure CLI 2.52.0

|

||||||

|

|

||||||

## Usage

|

## Usage

|

||||||

|

|

||||||

|

|

|

||||||

|

|

@ -6,13 +6,16 @@ terraform {

|

||||||

name = "AfG-Databricks"

|

name = "AfG-Databricks"

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

required_version = "= 1.0.8"

|

required_version = "= 1.5.7"

|

||||||

required_providers {

|

required_providers {

|

||||||

databricks = {

|

databricks = {

|

||||||

source = "databrickslabs/databricks"

|

source = "databricks/databricks"

|

||||||

version = "0.3.5"

|

version = "= 1.26.0"

|

||||||

|

}

|

||||||

|

azurerm = {

|

||||||

|

source = "hashicorp/azurerm"

|

||||||

|

version = "= 3.74.0"

|

||||||

}

|

}

|

||||||

azurerm = "=2.80.0"

|

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

|

|

|

||||||

|

|

@ -1,5 +1,15 @@

|

||||||

# Create initial Databricks notebook

|

# Create initial Databricks notebook

|

||||||

resource "databricks_notebook" "ddl" {

|

resource "databricks_notebook" "tfms" {

|

||||||

source = "../../Notebooks/tfms.py"

|

source = "../../Notebooks/tfms.py"

|

||||||

path = "/Shared/TFMS"

|

path = "/Shared/TFMS"

|

||||||

}

|

}

|

||||||

|

|

||||||

|

resource "databricks_notebook" "stdds" {

|

||||||

|

source = "../../Notebooks/stdds.py"

|

||||||

|

path = "/Shared/STDDS"

|

||||||

|

}

|

||||||

|

|

||||||

|

resource "databricks_notebook" "tbfm" {

|

||||||

|

source = "../../Notebooks/tbfm.py"

|

||||||

|

path = "/Shared/TBFM"

|

||||||

|

}

|

||||||

|

|

@ -0,0 +1,29 @@

|

||||||

|

# COMMAND ----------

|

||||||

|

|

||||||

|

# simpler way without sql library, getting all values

|

||||||

|

# getting all values

|

||||||

|

df = spark \

|

||||||

|

.read \

|

||||||

|

.format("kafka") \

|

||||||

|

.option("kafka.bootstrap.servers", "10.70.3.4:9092") \

|

||||||

|

.option("subscribe", "stdds") \

|

||||||

|

.option("startingOffsets", """{"stdds":{"0":0}}""") \

|

||||||

|

.option("endingOffsets", """{"stdds":{"0":4}}""") \

|

||||||

|

.load()

|

||||||

|

|

||||||

|

df.printSchema()

|

||||||

|

|

||||||

|

# COMMAND ----------

|

||||||

|

|

||||||

|

# getting specific values

|

||||||

|

df = spark \

|

||||||

|

.read \

|

||||||

|

.format("kafka") \

|

||||||

|

.option("kafka.bootstrap.servers", "10.70.3.4:9092") \

|

||||||

|

.option("subscribe", "stdds") \

|

||||||

|

.option("startingOffsets", """{"stdds":{"0":0}}""") \

|

||||||

|

.option("endingOffsets", """{"stdds":{"0":4}}""") \

|

||||||

|

.load() \

|

||||||

|

.selectExpr("CAST (value as STRING)")

|

||||||

|

|

||||||

|

display(df)

|

||||||

|

|

@ -0,0 +1,29 @@

|

||||||

|

# COMMAND ----------

|

||||||

|

|

||||||

|

# simpler way without sql library, getting all values

|

||||||

|

# getting all values

|

||||||

|

df = spark \

|

||||||

|

.read \

|

||||||

|

.format("kafka") \

|

||||||

|

.option("kafka.bootstrap.servers", "10.70.3.4:9092") \

|

||||||

|

.option("subscribe", "tbfm") \

|

||||||

|

.option("startingOffsets", """{"tbfm":{"0":0}}""") \

|

||||||

|

.option("endingOffsets", """{"tbfm":{"0":4}}""") \

|

||||||

|

.load()

|

||||||

|

|

||||||

|

df.printSchema()

|

||||||

|

|

||||||

|

# COMMAND ----------

|

||||||

|

|

||||||

|

# getting specific values

|

||||||

|

df = spark \

|

||||||

|

.read \

|

||||||

|

.format("kafka") \

|

||||||

|

.option("kafka.bootstrap.servers", "10.70.3.4:9092") \

|

||||||

|

.option("subscribe", "tbfm") \

|

||||||

|

.option("startingOffsets", """{"tbfm":{"0":0}}""") \

|

||||||

|

.option("endingOffsets", """{"tbfm":{"0":4}}""") \

|

||||||

|

.load() \

|

||||||

|

.selectExpr("CAST (value as STRING)")

|

||||||

|

|

||||||

|

display(df)

|
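Review note: the two new notebooks differ only in the topic name embedded in the `subscribe`, `startingOffsets` and `endingOffsets` options. The offsets options take a JSON string keyed by topic and partition, which could be built with a helper such as the sketch below. `offsets_json` is a hypothetical name and is not part of the notebooks in this commit.

```python
import json


def offsets_json(topic, partition_offsets):
    """Build the JSON string used for startingOffsets / endingOffsets.

    `partition_offsets` maps partition number to offset, e.g. {0: 4}.
    Partition numbers become string keys, as JSON requires.
    """
    payload = {topic: {str(p): o for p, o in partition_offsets.items()}}
    # Compact separators reproduce the literal strings used in the notebooks.
    return json.dumps(payload, separators=(",", ":"))


for topic in ("tfms", "tbfm", "stdds"):
    print(topic, offsets_json(topic, {0: 0}), offsets_json(topic, {0: 4}))
```

In a notebook this would be used as `.option("startingOffsets", offsets_json("stdds", {0: 0}))`, keeping the topic name in a single place.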

||||||

29

README.md


|

|

@ -4,8 +4,8 @@

|

||||||

Demo project presented at [Automate for Good 2021](https://chef-hackathon.devpost.com/).

|

Demo project presented at [Automate for Good 2021](https://chef-hackathon.devpost.com/).

|

||||||

It shows how to integrate Chef Infra, Chef InSpec, Test Kitchen, Terraform, Terraform Cloud, and GitHub Actions in order to fully automate and create Data Analytics environments.

|

It shows how to integrate Chef Infra, Chef InSpec, Test Kitchen, Terraform, Terraform Cloud, and GitHub Actions in order to fully automate and create Data Analytics environments.

|

||||||

|

|

||||||

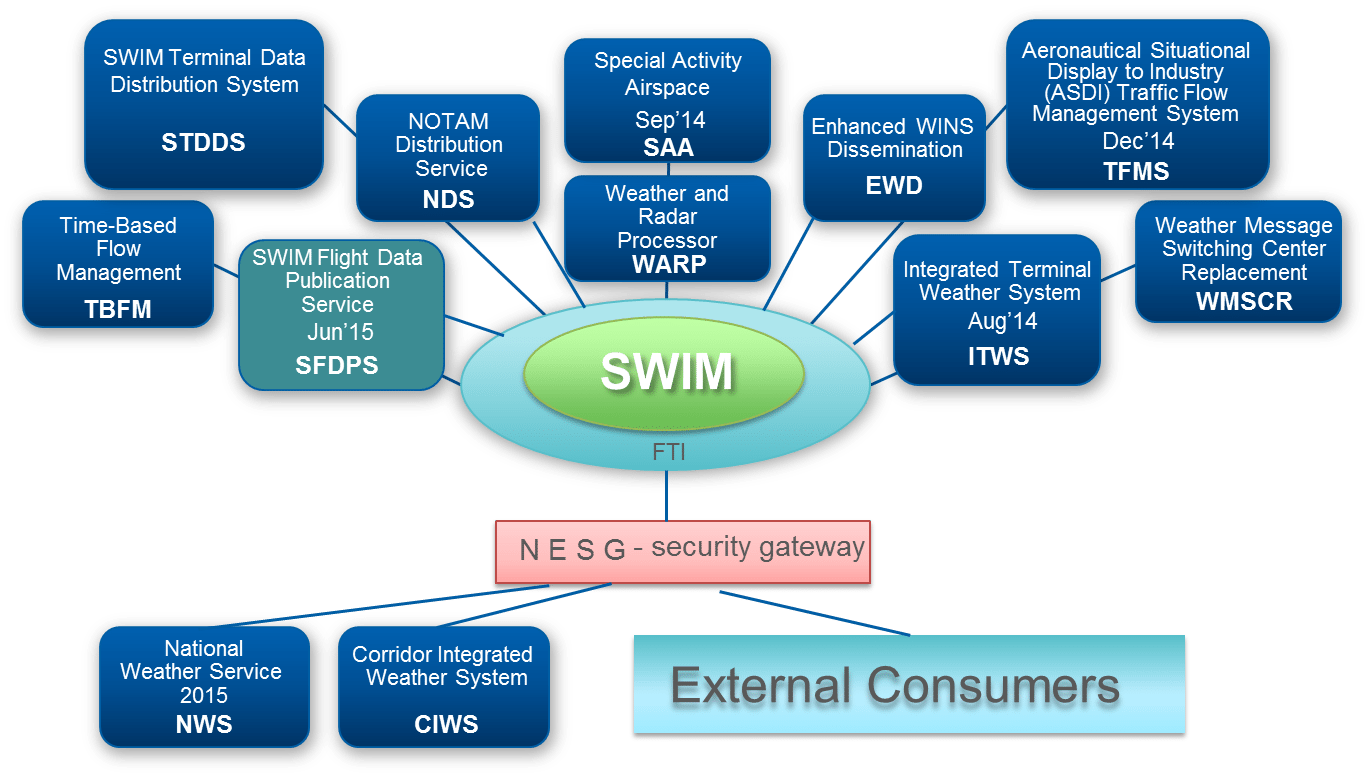

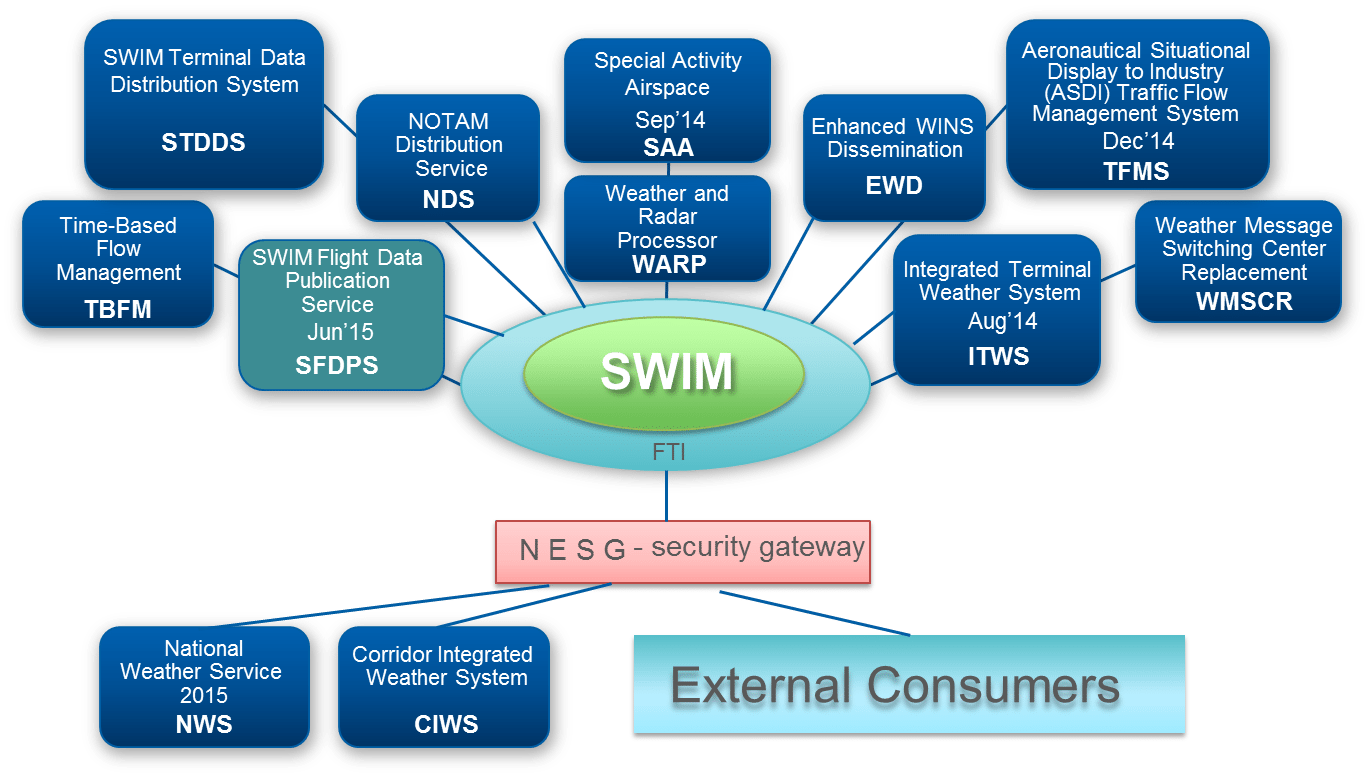

This specific demo uses FAA's System Wide Information System (SWIM) and connects to TFMS ( Traffic Flow Management System ) using a Kafka server.

|

This specific demo uses FAA's System Wide Information System (SWIM) and connects to Traffic Flow Management System (TFMS), Time-Based Flow Management (TBFM) and SWIM Terminal Data Distribution System (STDDS) using a Kafka server.

|

||||||

More information about SWIM and TFMS can be found [here.](https://www.faa.gov/air_traffic/technology/swim/)

|

More information about SWIM and its data sources can be found [here.](https://www.faa.gov/air_traffic/technology/swim/)

|

||||||

|

|

||||||

It also uses a Databricks cluster in order to analyze the data.

|

It also uses a Databricks cluster in order to analyze the data.

|

||||||

|

|

||||||

|

|

@ -15,15 +15,13 @@ This project has the following folders which make them easy to reuse, add or rem

|

||||||

|

|

||||||

```ssh

|

```ssh

|

||||||

.

|

.

|

||||||

├── .devcontainer

|

|

||||||

├── .github

|

├── .github

|

||||||

│ └── workflows

|

│ └── workflows

|

||||||

├── LICENSE

|

|

||||||

├── README.md

|

|

||||||

├── Chef

|

├── Chef

|

||||||

│ ├── .chef

|

│ ├── .chef

|

||||||

│ ├── cookbooks

|

│ ├── cookbooks

|

||||||

│ └── data_bags

|

│ └── data_bags

|

||||||

|

├── Diagrams

|

||||||

├── Infrastructure

|

├── Infrastructure

|

||||||

│ ├── terraform-azure

|

│ ├── terraform-azure

|

||||||

│ └── terraform-databricks

|

│ └── terraform-databricks

|

||||||

|

|

@ -32,7 +30,7 @@ This project has the following folders which make them easy to reuse, add or rem

|

||||||

|

|

||||||

## SWIM Architecture

|

## SWIM Architecture

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

## Architecture

|

## Architecture

|

||||||

|

|

||||||

|

|

@ -50,10 +48,10 @@ It uses GitHub Actions in order to orchestrate the CI/CD pipeline.

|

||||||

|

|

||||||

This project requires the following versions:

|

This project requires the following versions:

|

||||||

|

|

||||||

- **Terraform** =>1.0.8

|

- **Terraform** =>1.5.7

|

||||||

- **Azure provider** 2.80.0

|

- **Azure provider** 3.74.0

|

||||||

- **Databricks provider** 0.3.5

|

- **Databricks provider** 1.26.0

|

||||||

- **Azure CLI** 2.29.0

|

- **Azure CLI** 2.52.0

|

||||||

- **ChefDK** 4.13.3

|

- **ChefDK** 4.13.3

|

||||||

|

|

||||||

It also uses GitHub Secrets to store all required keys and secrets. The following GitHub Secrets need to be created ahead of time:

|

It also uses GitHub Secrets to store all required keys and secrets. The following GitHub Secrets need to be created ahead of time:

|

||||||

|

|

@ -69,20 +67,19 @@ It also uses GitHub Secrets to store all required keys and secrets. The followin

|

||||||

|

|

||||||

## GitHub Workflows

|

## GitHub Workflows

|

||||||

|

|

||||||

There are 2 GitHub Actions Workflows that are used to automate the Infrastructure which will host the Data Analytics environment using Terraform and the post-provisioning configurations required to connect to FAA's System Wide Information System (SWIM) and connects to TFMS ( Traffic Flow Management System ) datasource using **Chef Infra**.

|

There are 2 GitHub Actions Workflows that are used in this project:

|

||||||

|

|

||||||

|

1. To automate the Infrastructure which will host the Data Analytics environment using Terraform.

|

||||||

|

2. The post-provisioning configurations required to connect to FAA's System Wide Information System (SWIM) and connects to Traffic Flow Management System (TFMS), Time-Based Flow Management (TBFM) and SWIM Terminal Data Distribution System (STDDS) data sources using **Chef Infra**.

|

||||||

|

|

||||||

- **Chef-ApacheKafka** - Performs Static code analysis using **Cookstyle**, unit testing using **Chef InSpec**, and Integration tests using **Test Kitchen** to make sure the cookbook is properly tested before uploading it to the Chef Server.

|

- **Chef-ApacheKafka** - Performs Static code analysis using **Cookstyle**, unit testing using **Chef InSpec**, and Integration tests using **Test Kitchen** to make sure the cookbook is properly tested before uploading it to the Chef Server.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

- **Terraform-Azure** - Performs Terraform deployment using Terraform Cloud as remote state. It also creates a Databricks cluster and deploys a starter python notebook to test the connectivity to the Kafka server and retrieves the messages. All the infrastructure is created with proper naming convention and tagging.

|

- **Terraform-Azure** - Performs Terraform deployment using Terraform Cloud as remote state. It also creates a Databricks cluster and deploys a starter python notebook to test the connectivity to the Kafka server and retrieves the messages for each data source (TFMS, TBFM and STDDS). All the infrastructure is created with proper naming convention and tagging.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

## devcontainer

|

|

||||||

|

|

||||||

This repository also comes with a devcontainer which can be used to develop and test the project using Docker containers or GitHub Codespaces.

|

|

||||||

|

|

||||||

## Caution

|

## Caution

|

||||||

|

|

||||||

Be aware that by running this project your account will get billed.

|

Be aware that by running this project your account will get billed.

|

||||||

|

|

|

||||||
