Trim non-monitor files; change build definitions; clean references

Parent: 8e92f22eb2
Commit: ca00141008

@@ -1,3 +1,3 @@
@echo off
powershell -ExecutionPolicy ByPass -NoProfile -command "& """%~dp0eng\build.ps1""" -restore %*"
powershell -ExecutionPolicy ByPass -NoProfile -command "& """%~dp0eng\common\build.ps1""" -restore -build %*"
exit /b %ErrorLevel%

CMakeLists.txt (663 lines removed)

@@ -1,663 +0,0 @@
# Copyright (c) .NET Foundation and contributors. All rights reserved.
# Licensed under the MIT license. See LICENSE file in the project root for full license information.

# Verify minimum required version
cmake_minimum_required(VERSION 2.8.12)

if(CMAKE_VERSION VERSION_EQUAL 3.0 OR CMAKE_VERSION VERSION_GREATER 3.0)
cmake_policy(SET CMP0042 NEW)
endif()

# Set the project name
project(diagnostics)

# Include cmake functions
include(functions.cmake)

if (WIN32)
message(STATUS "VS_PLATFORM_TOOLSET is ${CMAKE_VS_PLATFORM_TOOLSET}")
message(STATUS "VS_PLATFORM_NAME is ${CMAKE_VS_PLATFORM_NAME}")
endif (WIN32)

set(ROOT_DIR ${CMAKE_CURRENT_SOURCE_DIR})

# Where the version source file for xplat is generated
set(VERSION_FILE_PATH "${CMAKE_BINARY_DIR}/version.cpp")

# Where _version.h for Windows is generated
if (WIN32)
include_directories("${CMAKE_BINARY_DIR}")
endif (WIN32)

set(CORECLR_SET_RPATH ON)
if(CORECLR_SET_RPATH)
# Enable @rpath support for shared libraries.
set(MACOSX_RPATH ON)
endif(CORECLR_SET_RPATH)

OPTION(CLR_CMAKE_ENABLE_CODE_COVERAGE "Enable code coverage" OFF)
OPTION(CLR_CMAKE_WARNINGS_ARE_ERRORS "Warnings are errors" ON)

# Ensure other tools are present
if (WIN32)
if(CLR_CMAKE_HOST_ARCH STREQUAL arm)

# Confirm that Windows SDK is present
if(NOT DEFINED CMAKE_VS_WINDOWS_TARGET_PLATFORM_VERSION OR CMAKE_VS_WINDOWS_TARGET_PLATFORM_VERSION STREQUAL "" )
message(FATAL_ERROR "Windows SDK is required for the Arm32 build.")
else()
message("Using Windows SDK version ${CMAKE_VS_WINDOWS_TARGET_PLATFORM_VERSION}")
endif()

# Explicitly specify the assembler to be used for Arm32 compile
if($ENV{__VSVersion} STREQUAL "vs2015")
file(TO_CMAKE_PATH "$ENV{VCINSTALLDIR}\\bin\\x86_arm\\armasm.exe" CMAKE_ASM_COMPILER)
else()
file(TO_CMAKE_PATH "$ENV{VCToolsInstallDir}\\bin\\HostX86\\arm\\armasm.exe" CMAKE_ASM_COMPILER)
endif()

set(CMAKE_ASM_MASM_COMPILER ${CMAKE_ASM_COMPILER})
message("CMAKE_ASM_MASM_COMPILER explicitly set to: ${CMAKE_ASM_MASM_COMPILER}")

# Enable generic assembly compilation to avoid CMake generate VS proj files that explicitly
# use ml[64].exe as the assembler.
enable_language(ASM)
else()
enable_language(ASM_MASM)
endif()

# Ensure that MC is present
find_program(MC mc)
if (MC STREQUAL "MC-NOTFOUND")
message(FATAL_ERROR "MC not found")
endif()

if (CLR_CMAKE_HOST_ARCH STREQUAL arm64)
# CMAKE_CXX_COMPILER will default to the compiler installed with
# Visual studio. Overwrite it to the compiler on the path.
# TODO, remove when cmake generator supports Arm64 as a target.
find_program(PATH_CXX_COMPILER cl)
set(CMAKE_CXX_COMPILER ${PATH_CXX_COMPILER})
message("Overwriting the CMAKE_CXX_COMPILER.")
message(CMAKE_CXX_COMPILER found:${CMAKE_CXX_COMPILER})
endif()

else (WIN32)
enable_language(ASM)

# Ensure that awk is present
find_program(AWK awk)
if (AWK STREQUAL "AWK-NOTFOUND")
message(FATAL_ERROR "AWK not found")
endif()

# Try to locate the paxctl tool. Failure to find it is not fatal,
# but the generated executables won't work on a system where PAX is set
# to prevent applications to create executable memory mappings.
find_program(PAXCTL paxctl)

if (CMAKE_SYSTEM_NAME STREQUAL Darwin)

# Ensure that dsymutil and strip are present
find_program(DSYMUTIL dsymutil)
if (DSYMUTIL STREQUAL "DSYMUTIL-NOTFOUND")
message(FATAL_ERROR "dsymutil not found")
endif()

find_program(STRIP strip)
if (STRIP STREQUAL "STRIP-NOTFOUND")
message(FATAL_ERROR "strip not found")
endif()

else (CMAKE_SYSTEM_NAME STREQUAL Darwin)

# Ensure that objcopy is present
if (CLR_UNIX_CROSS_BUILD AND NOT DEFINED CLR_CROSS_COMPONENTS_BUILD)
if (CMAKE_SYSTEM_PROCESSOR STREQUAL armv7l OR CMAKE_SYSTEM_PROCESSOR STREQUAL aarch64 OR CMAKE_SYSTEM_PROCESSOR STREQUAL arm OR CMAKE_SYSTEM_PROCESSOR STREQUAL mips64)
find_program(OBJCOPY ${TOOLCHAIN}-objcopy)
elseif(CMAKE_SYSTEM_PROCESSOR STREQUAL i686)
find_program(OBJCOPY objcopy)
else()
clr_unknown_arch()
endif()
else()
find_program(OBJCOPY objcopy)
endif()

if (OBJCOPY STREQUAL "OBJCOPY-NOTFOUND")
message(FATAL_ERROR "objcopy not found")
endif()

endif (CMAKE_SYSTEM_NAME STREQUAL Darwin)
endif(WIN32)

#----------------------------------------
# Detect and set platform variable names
# - for non-windows build platform & architecture is detected using inbuilt CMAKE variables and cross target component configure
# - for windows we use the passed in parameter to CMAKE to determine build arch
#----------------------------------------
if(CMAKE_SYSTEM_NAME STREQUAL Linux)
set(CLR_CMAKE_PLATFORM_UNIX 1)
if(CLR_CROSS_COMPONENTS_BUILD)
# CMAKE_HOST_SYSTEM_PROCESSOR returns the value of `uname -p` on host.
if(CMAKE_HOST_SYSTEM_PROCESSOR STREQUAL x86_64 OR CMAKE_HOST_SYSTEM_PROCESSOR STREQUAL amd64)
if(CLR_CMAKE_TARGET_ARCH STREQUAL "arm")
set(CLR_CMAKE_PLATFORM_UNIX_X86 1)
else()
set(CLR_CMAKE_PLATFORM_UNIX_AMD64 1)
endif()
elseif(CMAKE_HOST_SYSTEM_PROCESSOR STREQUAL i686)
set(CLR_CMAKE_PLATFORM_UNIX_X86 1)
else()
clr_unknown_arch()
endif()
else()
# CMAKE_SYSTEM_PROCESSOR returns the value of `uname -p` on target.
# For the AMD/Intel 64bit architecture two different strings are common.
# Linux and Darwin identify it as "x86_64" while FreeBSD and netbsd uses the
# "amd64" string. Accept either of the two here.
if(CMAKE_SYSTEM_PROCESSOR STREQUAL x86_64 OR CMAKE_SYSTEM_PROCESSOR STREQUAL amd64)
set(CLR_CMAKE_PLATFORM_UNIX_AMD64 1)
elseif(CMAKE_SYSTEM_PROCESSOR STREQUAL armv7l)
set(CLR_CMAKE_PLATFORM_UNIX_ARM 1)
elseif(CMAKE_SYSTEM_PROCESSOR STREQUAL arm)
set(CLR_CMAKE_PLATFORM_UNIX_ARM 1)
elseif(CMAKE_SYSTEM_PROCESSOR STREQUAL aarch64)
set(CLR_CMAKE_PLATFORM_UNIX_ARM64 1)
elseif(CMAKE_SYSTEM_PROCESSOR STREQUAL mips64)
set(CLR_CMAKE_PLATFORM_UNIX_MIPS64 1)
elseif(CMAKE_SYSTEM_PROCESSOR STREQUAL i686)
set(CLR_CMAKE_PLATFORM_UNIX_X86 1)
else()
clr_unknown_arch()
endif()
endif()
set(CLR_CMAKE_PLATFORM_LINUX 1)

# Detect Linux ID
if(DEFINED CLR_CMAKE_LINUX_ID)
if(CLR_CMAKE_LINUX_ID STREQUAL ubuntu)
set(CLR_CMAKE_TARGET_UBUNTU_LINUX 1)
elseif(CLR_CMAKE_LINUX_ID STREQUAL tizen)
set(CLR_CMAKE_TARGET_TIZEN_LINUX 1)
elseif(CLR_CMAKE_LINUX_ID STREQUAL alpine)
set(CLR_CMAKE_PLATFORM_ALPINE_LINUX 1)
endif()
if(CLR_CMAKE_LINUX_ID STREQUAL ubuntu)
set(CLR_CMAKE_PLATFORM_UBUNTU_LINUX 1)
endif()
endif(DEFINED CLR_CMAKE_LINUX_ID)
endif(CMAKE_SYSTEM_NAME STREQUAL Linux)

if(CMAKE_SYSTEM_NAME STREQUAL Darwin)
set(CLR_CMAKE_PLATFORM_UNIX 1)
if(CMAKE_OSX_ARCHITECTURES MATCHES "x86_64")
set(CLR_CMAKE_PLATFORM_UNIX_AMD64 1)
elseif(CMAKE_OSX_ARCHITECTURES MATCHES "arm64")
set(CLR_CMAKE_PLATFORM_UNIX_ARM64 1)
else()
message(FATAL_ERROR "CMAKE_OSX_ARCHITECTURES:'${CMAKE_OSX_ARCHITECTURES}'")
clr_unknown_arch()
endif()
set(CLR_CMAKE_PLATFORM_DARWIN 1)
if(CMAKE_VERSION VERSION_LESS "3.4.0")
set(CMAKE_ASM_COMPILE_OBJECT "${CMAKE_C_COMPILER} <FLAGS> <DEFINES> -o <OBJECT> -c <SOURCE>")
else()
set(CMAKE_ASM_COMPILE_OBJECT "${CMAKE_C_COMPILER} <FLAGS> <DEFINES> <INCLUDES> -o <OBJECT> -c <SOURCE>")
endif(CMAKE_VERSION VERSION_LESS "3.4.0")
endif(CMAKE_SYSTEM_NAME STREQUAL Darwin)

if(CMAKE_SYSTEM_NAME STREQUAL FreeBSD)
set(CLR_CMAKE_PLATFORM_UNIX 1)
set(CLR_CMAKE_PLATFORM_UNIX_AMD64 1)
set(CLR_CMAKE_PLATFORM_FREEBSD 1)
endif(CMAKE_SYSTEM_NAME STREQUAL FreeBSD)

if(CMAKE_SYSTEM_NAME STREQUAL OpenBSD)
set(CLR_CMAKE_PLATFORM_UNIX 1)
set(CLR_CMAKE_PLATFORM_UNIX_AMD64 1)
set(CLR_CMAKE_PLATFORM_OPENBSD 1)
endif(CMAKE_SYSTEM_NAME STREQUAL OpenBSD)

if(CMAKE_SYSTEM_NAME STREQUAL NetBSD)
set(CLR_CMAKE_PLATFORM_UNIX 1)
set(CLR_CMAKE_PLATFORM_UNIX_AMD64 1)
set(CLR_CMAKE_PLATFORM_NETBSD 1)
endif(CMAKE_SYSTEM_NAME STREQUAL NetBSD)

if(CMAKE_SYSTEM_NAME STREQUAL SunOS)
set(CLR_CMAKE_PLATFORM_UNIX 1)
EXECUTE_PROCESS(
COMMAND isainfo -n
OUTPUT_VARIABLE SUNOS_NATIVE_INSTRUCTION_SET
)
if(SUNOS_NATIVE_INSTRUCTION_SET MATCHES "amd64")
set(CLR_CMAKE_PLATFORM_UNIX_AMD64 1)
set(CMAKE_SYSTEM_PROCESSOR "amd64")
else()
clr_unknown_arch()
endif()
set(CLR_CMAKE_PLATFORM_SUNOS 1)
endif(CMAKE_SYSTEM_NAME STREQUAL SunOS)

#--------------------------------------------
# This repo builds two set of binaries
# 1. binaries which execute on target arch machine
# - for such binaries host architecture & target architecture are same
# - eg. coreclr.dll
# 2. binaries which execute on host machine but target another architecture
# - host architecture is different from target architecture
# - eg. crossgen.exe - runs on x64 machine and generates nis targeting arm64
# - for complete list of such binaries refer to file crosscomponents.cmake
#-------------------------------------------------------------
# Set HOST architecture variables
if(CLR_CMAKE_PLATFORM_UNIX_ARM)
set(CLR_CMAKE_PLATFORM_ARCH_ARM 1)
set(CLR_CMAKE_HOST_ARCH "arm")
elseif(CLR_CMAKE_PLATFORM_UNIX_ARM64)
set(CLR_CMAKE_PLATFORM_ARCH_ARM64 1)
set(CLR_CMAKE_HOST_ARCH "arm64")
elseif(CLR_CMAKE_PLATFORM_UNIX_AMD64)
set(CLR_CMAKE_PLATFORM_ARCH_AMD64 1)
set(CLR_CMAKE_HOST_ARCH "x64")
elseif(CLR_CMAKE_PLATFORM_UNIX_X86)
set(CLR_CMAKE_PLATFORM_ARCH_I386 1)
set(CLR_CMAKE_HOST_ARCH "x86")
elseif(CLR_CMAKE_PLATFORM_UNIX_MIPS64)
set(CLR_CMAKE_PLATFORM_ARCH_MIPS64 1)
set(CLR_CMAKE_HOST_ARCH "mips64")
elseif(WIN32)
# CLR_CMAKE_HOST_ARCH is passed in as param to cmake
if (CLR_CMAKE_HOST_ARCH STREQUAL x64)
set(CLR_CMAKE_PLATFORM_ARCH_AMD64 1)
elseif(CLR_CMAKE_HOST_ARCH STREQUAL x86)
set(CLR_CMAKE_PLATFORM_ARCH_I386 1)
elseif(CLR_CMAKE_HOST_ARCH STREQUAL arm)
set(CLR_CMAKE_PLATFORM_ARCH_ARM 1)
elseif(CLR_CMAKE_HOST_ARCH STREQUAL arm64)
set(CLR_CMAKE_PLATFORM_ARCH_ARM64 1)
else()
clr_unknown_arch()
endif()
endif()

# Set TARGET architecture variables
# Target arch will be a cmake param (optional) for both windows as well as non-windows build
# if target arch is not specified then host & target are same
if(NOT DEFINED CLR_CMAKE_TARGET_ARCH OR CLR_CMAKE_TARGET_ARCH STREQUAL "" )
set(CLR_CMAKE_TARGET_ARCH ${CLR_CMAKE_HOST_ARCH})
endif()

# Set target architecture variables
if (CLR_CMAKE_TARGET_ARCH STREQUAL x64)
set(CLR_CMAKE_TARGET_ARCH_AMD64 1)
elseif(CLR_CMAKE_TARGET_ARCH STREQUAL x86)
set(CLR_CMAKE_TARGET_ARCH_I386 1)
elseif(CLR_CMAKE_TARGET_ARCH STREQUAL arm64)
set(CLR_CMAKE_TARGET_ARCH_ARM64 1)
elseif(CLR_CMAKE_TARGET_ARCH STREQUAL arm)
set(CLR_CMAKE_TARGET_ARCH_ARM 1)
elseif(CLR_CMAKE_TARGET_ARCH STREQUAL mips64)
set(CLR_CMAKE_TARGET_ARCH_MIPS64 1)
else()
clr_unknown_arch()
endif()

# check if host & target arch combination are valid
if(NOT(CLR_CMAKE_TARGET_ARCH STREQUAL CLR_CMAKE_HOST_ARCH))
if(NOT((CLR_CMAKE_PLATFORM_ARCH_AMD64 AND CLR_CMAKE_TARGET_ARCH_ARM64) OR (CLR_CMAKE_PLATFORM_ARCH_I386 AND CLR_CMAKE_TARGET_ARCH_ARM)))
message(FATAL_ERROR "Invalid host and target arch combination")
endif()
endif()

#-----------------------------------------------------
# Initialize Cmake compiler flags and other variables
#-----------------------------------------------------

if (CMAKE_CONFIGURATION_TYPES) # multi-configuration generator?
set(CMAKE_CONFIGURATION_TYPES "Debug;Checked;Release;RelWithDebInfo" CACHE STRING "" FORCE)
endif (CMAKE_CONFIGURATION_TYPES)

set(CMAKE_C_FLAGS_CHECKED ${CLR_C_FLAGS_CHECKED_INIT} CACHE STRING "Flags used by the compiler during checked builds.")
set(CMAKE_CXX_FLAGS_CHECKED ${CLR_CXX_FLAGS_CHECKED_INIT} CACHE STRING "Flags used by the compiler during checked builds.")
set(CMAKE_EXE_LINKER_FLAGS_CHECKED "")
set(CMAKE_SHARED_LINKER_FLAGS_CHECKED "")

set(CMAKE_CXX_STANDARD_LIBRARIES "") # do not link against standard win32 libs i.e. kernel32, uuid, user32, etc.

if (WIN32)
# For multi-configuration toolset (as Visual Studio)
# set the different configuration defines.
foreach (Config DEBUG CHECKED RELEASE RELWITHDEBINFO)
foreach (Definition IN LISTS CLR_DEFINES_${Config}_INIT)
set_property(DIRECTORY APPEND PROPERTY COMPILE_DEFINITIONS $<$<CONFIG:${Config}>:${Definition}>)
endforeach (Definition)
endforeach (Config)

set(CMAKE_SHARED_LINKER_FLAGS "${CMAKE_SHARED_LINKER_FLAGS} /GUARD:CF")
set(CMAKE_EXE_LINKER_FLAGS "${CMAKE_EXE_LINKER_FLAGS} /GUARD:CF")

# Linker flags
#
set(CMAKE_SHARED_LINKER_FLAGS "${CMAKE_SHARED_LINKER_FLAGS} /MANIFEST:NO") #Do not create Side-by-Side Assembly Manifest

if (CLR_CMAKE_PLATFORM_ARCH_ARM)
set(CMAKE_SHARED_LINKER_FLAGS "${CMAKE_SHARED_LINKER_FLAGS} /SUBSYSTEM:WINDOWS,6.02") #windows subsystem - arm minimum is 6.02
elseif(CLR_CMAKE_PLATFORM_ARCH_ARM64)
set(CMAKE_SHARED_LINKER_FLAGS "${CMAKE_SHARED_LINKER_FLAGS} /SUBSYSTEM:WINDOWS,6.03") #windows subsystem - arm64 minimum is 6.03
else ()
set(CMAKE_SHARED_LINKER_FLAGS "${CMAKE_SHARED_LINKER_FLAGS} /SUBSYSTEM:WINDOWS,6.01") #windows subsystem
endif ()

set(CMAKE_SHARED_LINKER_FLAGS "${CMAKE_SHARED_LINKER_FLAGS} /LARGEADDRESSAWARE") # can handle addresses larger than 2 gigabytes
set(CMAKE_SHARED_LINKER_FLAGS "${CMAKE_SHARED_LINKER_FLAGS} /NXCOMPAT") #Compatible with Data Execution Prevention
set(CMAKE_SHARED_LINKER_FLAGS "${CMAKE_SHARED_LINKER_FLAGS} /DYNAMICBASE") #Use address space layout randomization
set(CMAKE_SHARED_LINKER_FLAGS "${CMAKE_SHARED_LINKER_FLAGS} /PDBCOMPRESS") #shrink pdb size
set(CMAKE_SHARED_LINKER_FLAGS "${CMAKE_SHARED_LINKER_FLAGS} /DEBUG")
set(CMAKE_SHARED_LINKER_FLAGS "${CMAKE_SHARED_LINKER_FLAGS} /IGNORE:4197,4013,4254,4070,4221")

set(CMAKE_STATIC_LINKER_FLAGS "${CMAKE_STATIC_LINKER_FLAGS} /IGNORE:4221")

set(CMAKE_EXE_LINKER_FLAGS "${CMAKE_EXE_LINKER_FLAGS} /DEBUG /PDBCOMPRESS")
set(CMAKE_EXE_LINKER_FLAGS "${CMAKE_EXE_LINKER_FLAGS} /STACK:1572864")

# Temporarily disable incremental link due to incremental linking CFG bug crashing crossgen.
# See https://github.com/dotnet/coreclr/issues/12592
# This has been fixed in VS 2017 Update 5 but we're keeping this around until everyone is off
# the versions that have the bug. The bug manifests itself as a bad crash.
set(NO_INCREMENTAL_LINKER_FLAGS "/INCREMENTAL:NO")

# Debug build specific flags
set(CMAKE_SHARED_LINKER_FLAGS_DEBUG "/NOVCFEATURE ${NO_INCREMENTAL_LINKER_FLAGS}")
set(CMAKE_EXE_LINKER_FLAGS_DEBUG "${NO_INCREMENTAL_LINKER_FLAGS}")

# Checked build specific flags
set(CMAKE_SHARED_LINKER_FLAGS_CHECKED "${CMAKE_SHARED_LINKER_FLAGS_CHECKED} /OPT:REF /OPT:NOICF /NOVCFEATURE ${NO_INCREMENTAL_LINKER_FLAGS}")
set(CMAKE_STATIC_LINKER_FLAGS_CHECKED "${CMAKE_STATIC_LINKER_FLAGS_CHECKED}")
set(CMAKE_EXE_LINKER_FLAGS_CHECKED "${CMAKE_EXE_LINKER_FLAGS_CHECKED} /OPT:REF /OPT:NOICF ${NO_INCREMENTAL_LINKER_FLAGS}")

# Release build specific flags
set(CMAKE_SHARED_LINKER_FLAGS_RELEASE "${CMAKE_SHARED_LINKER_FLAGS_RELEASE} /LTCG /OPT:REF /OPT:ICF ${NO_INCREMENTAL_LINKER_FLAGS}")
set(CMAKE_STATIC_LINKER_FLAGS_RELEASE "${CMAKE_STATIC_LINKER_FLAGS_RELEASE} /LTCG")
set(CMAKE_EXE_LINKER_FLAGS_RELEASE "${CMAKE_EXE_LINKER_FLAGS_RELEASE} /LTCG /OPT:REF /OPT:ICF ${NO_INCREMENTAL_LINKER_FLAGS}")

# ReleaseWithDebugInfo build specific flags
set(CMAKE_SHARED_LINKER_FLAGS_RELWITHDEBINFO "${CMAKE_SHARED_LINKER_FLAGS_RELWITHDEBINFO} /LTCG /OPT:REF /OPT:ICF ${NO_INCREMENTAL_LINKER_FLAGS}")
set(CMAKE_STATIC_LINKER_FLAGS_RELWITHDEBINFO "${CMAKE_STATIC_LINKER_FLAGS_RELWITHDEBINFO} /LTCG")
set(CMAKE_EXE_LINKER_FLAGS_RELWITHDEBINFO "${CMAKE_EXE_LINKER_FLAGS_RELWITHDEBINFO} /LTCG /OPT:REF /OPT:ICF ${NO_INCREMENTAL_LINKER_FLAGS}")

# Temporary until cmake has VS generators for arm64
if(CLR_CMAKE_PLATFORM_ARCH_ARM64)
set(CMAKE_SHARED_LINKER_FLAGS "${CMAKE_SHARED_LINKER_FLAGS} /machine:arm64")
set(CMAKE_STATIC_LINKER_FLAGS "${CMAKE_STATIC_LINKER_FLAGS} /machine:arm64")
set(CMAKE_EXE_LINKER_FLAGS "${CMAKE_EXE_LINKER_FLAGS} /machine:arm64")
endif(CLR_CMAKE_PLATFORM_ARCH_ARM64)

# Force uCRT to be dynamically linked for Release build
set(CMAKE_SHARED_LINKER_FLAGS_RELEASE "${CMAKE_SHARED_LINKER_FLAGS_RELEASE} /NODEFAULTLIB:libucrt.lib /DEFAULTLIB:ucrt.lib")
set(CMAKE_EXE_LINKER_FLAGS_RELEASE "${CMAKE_EXE_LINKER_FLAGS_RELEASE} /NODEFAULTLIB:libucrt.lib /DEFAULTLIB:ucrt.lib")
set(CMAKE_SHARED_LINKER_FLAGS_RELWITHDEBINFO "${CMAKE_SHARED_LINKER_FLAGS_RELWITHDEBINFO} /NODEFAULTLIB:libucrt.lib /DEFAULTLIB:ucrt.lib")
set(CMAKE_EXE_LINKER_FLAGS_RELWITHDEBINFO "${CMAKE_EXE_LINKER_FLAGS_RELWITHDEBINFO} /NODEFAULTLIB:libucrt.lib /DEFAULTLIB:ucrt.lib")

elseif (CLR_CMAKE_PLATFORM_UNIX)
# Set the values to display when interactively configuring CMAKE_BUILD_TYPE
set_property(CACHE CMAKE_BUILD_TYPE PROPERTY STRINGS "DEBUG;CHECKED;RELEASE;RELWITHDEBINFO")

# Use uppercase CMAKE_BUILD_TYPE for the string comparisons below
string(TOUPPER ${CMAKE_BUILD_TYPE} UPPERCASE_CMAKE_BUILD_TYPE)

# For single-configuration toolset
# set the different configuration defines.
if (UPPERCASE_CMAKE_BUILD_TYPE STREQUAL DEBUG)
# First DEBUG
set_property(DIRECTORY PROPERTY COMPILE_DEFINITIONS ${CLR_DEFINES_DEBUG_INIT})
elseif (UPPERCASE_CMAKE_BUILD_TYPE STREQUAL CHECKED)
# Then CHECKED
set_property(DIRECTORY PROPERTY COMPILE_DEFINITIONS ${CLR_DEFINES_CHECKED_INIT})
elseif (UPPERCASE_CMAKE_BUILD_TYPE STREQUAL RELEASE)
# Then RELEASE
set_property(DIRECTORY PROPERTY COMPILE_DEFINITIONS ${CLR_DEFINES_RELEASE_INIT})
elseif (UPPERCASE_CMAKE_BUILD_TYPE STREQUAL RELWITHDEBINFO)
# And then RELWITHDEBINFO
set_property(DIRECTORY APPEND PROPERTY COMPILE_DEFINITIONS ${CLR_DEFINES_RELWITHDEBINFO_INIT})
else ()
message(FATAL_ERROR "Unknown build type! Set CMAKE_BUILD_TYPE to DEBUG, CHECKED, RELEASE, or RELWITHDEBINFO!")
endif ()

# set the CLANG sanitizer flags for debug build
if(UPPERCASE_CMAKE_BUILD_TYPE STREQUAL DEBUG OR UPPERCASE_CMAKE_BUILD_TYPE STREQUAL CHECKED)
# obtain settings from running enablesanitizers.sh
string(FIND "$ENV{DEBUG_SANITIZERS}" "asan" __ASAN_POS)
string(FIND "$ENV{DEBUG_SANITIZERS}" "ubsan" __UBSAN_POS)
if ((${__ASAN_POS} GREATER -1) OR (${__UBSAN_POS} GREATER -1))
set(CLR_SANITIZE_CXX_FLAGS "${CLR_SANITIZE_CXX_FLAGS} -fsanitize-blacklist=${CMAKE_CURRENT_SOURCE_DIR}/sanitizerblacklist.txt -fsanitize=")
set(CLR_SANITIZE_LINK_FLAGS "${CLR_SANITIZE_LINK_FLAGS} -fsanitize=")
if (${__ASAN_POS} GREATER -1)
set(CLR_SANITIZE_CXX_FLAGS "${CLR_SANITIZE_CXX_FLAGS}address,")
set(CLR_SANITIZE_LINK_FLAGS "${CLR_SANITIZE_LINK_FLAGS}address,")
add_definitions(-DHAS_ASAN)
message("Address Sanitizer (asan) enabled")
endif ()
if (${__UBSAN_POS} GREATER -1)
# all sanitizier flags are enabled except alignment (due to heavy use of __unaligned modifier)
set(CLR_SANITIZE_CXX_FLAGS "${CLR_SANITIZE_CXX_FLAGS}bool,bounds,enum,float-cast-overflow,float-divide-by-zero,function,integer,nonnull-attribute,null,object-size,return,returns-nonnull-attribute,shift,unreachable,vla-bound,vptr")
set(CLR_SANITIZE_LINK_FLAGS "${CLR_SANITIZE_LINK_FLAGS}undefined")
message("Undefined Behavior Sanitizer (ubsan) enabled")
endif ()

# -fdata-sections -ffunction-sections: each function has own section instead of one per .o file (needed for --gc-sections)
# -fPIC: enable Position Independent Code normally just for shared libraries but required when linking with address sanitizer
# -O1: optimization level used instead of -O0 to avoid compile error "invalid operand for inline asm constraint"
set(CMAKE_CXX_FLAGS_DEBUG "${CMAKE_CXX_FLAGS_DEBUG} ${CLR_SANITIZE_CXX_FLAGS} -fdata-sections -ffunction-sections -fPIC -O1")
set(CMAKE_CXX_FLAGS_CHECKED "${CMAKE_CXX_FLAGS_CHECKED} ${CLR_SANITIZE_CXX_FLAGS} -fdata-sections -ffunction-sections -fPIC -O1")

set(CMAKE_EXE_LINKER_FLAGS_DEBUG "${CMAKE_EXE_LINKER_FLAGS_DEBUG} ${CLR_SANITIZE_LINK_FLAGS}")
set(CMAKE_EXE_LINKER_FLAGS_CHECKED "${CMAKE_EXE_LINKER_FLAGS_CHECKED} ${CLR_SANITIZE_LINK_FLAGS}")

# -Wl and --gc-sections: drop unused sections\functions (similar to Windows /Gy function-level-linking)
set(CMAKE_SHARED_LINKER_FLAGS_DEBUG "${CMAKE_SHARED_LINKER_FLAGS_DEBUG} ${CLR_SANITIZE_LINK_FLAGS} -Wl,--gc-sections")
set(CMAKE_SHARED_LINKER_FLAGS_CHECKED "${CMAKE_SHARED_LINKER_FLAGS_CHECKED} ${CLR_SANITIZE_LINK_FLAGS} -Wl,--gc-sections")
endif ()
endif(UPPERCASE_CMAKE_BUILD_TYPE STREQUAL DEBUG OR UPPERCASE_CMAKE_BUILD_TYPE STREQUAL CHECKED)
endif(WIN32)

# CLR_ADDITIONAL_LINKER_FLAGS - used for passing additional arguments to linker
# CLR_ADDITIONAL_COMPILER_OPTIONS - used for passing additional arguments to compiler

if(CLR_CMAKE_PLATFORM_UNIX)
set(CMAKE_SHARED_LINKER_FLAGS "${CMAKE_SHARED_LINKER_FLAGS} ${CLR_ADDITIONAL_LINKER_FLAGS}")
set(CMAKE_EXE_LINKER_FLAGS "${CMAKE_EXE_LINKER_FLAGS} ${CLR_ADDITIONAL_LINKER_FLAGS}" )
add_compile_options(${CLR_ADDITIONAL_COMPILER_OPTIONS})
endif(CLR_CMAKE_PLATFORM_UNIX)

if(CLR_CMAKE_PLATFORM_LINUX)
set(CMAKE_ASM_FLAGS "${CMAKE_ASM_FLAGS} -Wa,--noexecstack")
set(CMAKE_SHARED_LINKER_FLAGS "${CMAKE_SHARED_LINKER_FLAGS} -Wl,--build-id=sha1")
set(CMAKE_EXE_LINKER_FLAGS "${CMAKE_EXE_LINKER_FLAGS} -Wl,--build-id=sha1")
endif(CLR_CMAKE_PLATFORM_LINUX)

#------------------------------------
# Definitions (for platform)
#-----------------------------------

if (CLR_CMAKE_PLATFORM_ARCH_AMD64)
add_definitions(-D_AMD64_)
add_definitions(-D_WIN64)
add_definitions(-DAMD64)
add_definitions(-DBIT64=1)
elseif (CLR_CMAKE_PLATFORM_ARCH_I386)
add_definitions(-D_X86_)
elseif (CLR_CMAKE_PLATFORM_ARCH_ARM)
add_definitions(-D_ARM_)
add_definitions(-DARM)
elseif (CLR_CMAKE_PLATFORM_ARCH_ARM64)
add_definitions(-D_ARM64_)
add_definitions(-DARM64)
add_definitions(-D_WIN64)
add_definitions(-DBIT64=1)
elseif (CLR_CMAKE_PLATFORM_ARCH_MIPS64)
add_definitions(-D_MIPS64_)
add_definitions(-DMIPS64)
add_definitions(-D_WIN64)
add_definitions(-DBIT64=1)
else ()
clr_unknown_arch()
endif ()

if (CLR_CMAKE_PLATFORM_UNIX)
if(CLR_CMAKE_PLATFORM_LINUX)
if(CLR_CMAKE_PLATFORM_UNIX_AMD64)
message("Detected Linux x86_64")
add_definitions(-DLINUX64)
elseif(CLR_CMAKE_PLATFORM_UNIX_ARM)
message("Detected Linux ARM")
add_definitions(-DLINUX32)
elseif(CLR_CMAKE_PLATFORM_UNIX_ARM64)
message("Detected Linux ARM64")
add_definitions(-DLINUX64)
elseif(CLR_CMAKE_PLATFORM_UNIX_X86)
message("Detected Linux i686")
add_definitions(-DLINUX32)
elseif(CLR_CMAKE_PLATFORM_UNIX_MIPS64)
message("Detected Linux MIPS64")
add_definitions(-DLINUX64)
else()
clr_unknown_arch()
endif()
endif(CLR_CMAKE_PLATFORM_LINUX)
endif(CLR_CMAKE_PLATFORM_UNIX)

if (CLR_CMAKE_PLATFORM_UNIX)
add_definitions(-DPLATFORM_UNIX=1)

if(CLR_CMAKE_PLATFORM_DARWIN)
message("Detected OSX x86_64")
endif(CLR_CMAKE_PLATFORM_DARWIN)

if(CLR_CMAKE_PLATFORM_FREEBSD)
message("Detected FreeBSD amd64")
endif(CLR_CMAKE_PLATFORM_FREEBSD)

if(CLR_CMAKE_PLATFORM_NETBSD)
message("Detected NetBSD amd64")
endif(CLR_CMAKE_PLATFORM_NETBSD)
endif(CLR_CMAKE_PLATFORM_UNIX)

if (WIN32)
# Define the CRT lib references that link into Desktop imports
set(STATIC_MT_CRT_LIB "libcmt$<$<OR:$<CONFIG:Debug>,$<CONFIG:Checked>>:d>.lib")
set(STATIC_MT_VCRT_LIB "libvcruntime$<$<OR:$<CONFIG:Debug>,$<CONFIG:Checked>>:d>.lib")
set(STATIC_MT_CPP_LIB "libcpmt$<$<OR:$<CONFIG:Debug>,$<CONFIG:Checked>>:d>.lib")
endif(WIN32)

# Architecture specific files folder name
if (CLR_CMAKE_TARGET_ARCH_AMD64)
set(ARCH_SOURCES_DIR amd64)
elseif (CLR_CMAKE_TARGET_ARCH_ARM64)
set(ARCH_SOURCES_DIR arm64)
elseif (CLR_CMAKE_TARGET_ARCH_ARM)
set(ARCH_SOURCES_DIR arm)
elseif (CLR_CMAKE_TARGET_ARCH_I386)
set(ARCH_SOURCES_DIR i386)
elseif (CLR_CMAKE_TARGET_ARCH_MIPS64)
set(ARCH_SOURCES_DIR mips64)
else ()
clr_unknown_arch()
endif ()

if (CLR_CMAKE_TARGET_ARCH_AMD64)
if (CLR_CMAKE_PLATFORM_UNIX)
add_definitions(-DDBG_TARGET_AMD64_UNIX)
endif()
add_definitions(-D_TARGET_64BIT_=1)
add_definitions(-D_TARGET_AMD64_=1)
add_definitions(-DDBG_TARGET_64BIT=1)
add_definitions(-DDBG_TARGET_AMD64=1)
add_definitions(-DDBG_TARGET_WIN64=1)
elseif (CLR_CMAKE_TARGET_ARCH_ARM64)
if (CLR_CMAKE_PLATFORM_UNIX)
add_definitions(-DDBG_TARGET_ARM64_UNIX)
endif()
add_definitions(-D_TARGET_ARM64_=1)
add_definitions(-D_TARGET_64BIT_=1)
add_definitions(-DDBG_TARGET_64BIT=1)
add_definitions(-DDBG_TARGET_ARM64=1)
add_definitions(-DDBG_TARGET_WIN64=1)
add_definitions(-DFEATURE_MULTIREG_RETURN)
elseif (CLR_CMAKE_TARGET_ARCH_ARM)
if (CLR_CMAKE_PLATFORM_UNIX)
add_definitions(-DDBG_TARGET_ARM_UNIX)
elseif (WIN32 AND NOT DEFINED CLR_CROSS_COMPONENTS_BUILD)
# Set this to ensure we can use Arm SDK for Desktop binary linkage when doing native (Arm32) build
add_definitions(-D_ARM_WINAPI_PARTITION_DESKTOP_SDK_AVAILABLE=1)
add_definitions(-D_ARM_WORKAROUND_)
endif (CLR_CMAKE_PLATFORM_UNIX)
add_definitions(-D_TARGET_ARM_=1)
add_definitions(-DDBG_TARGET_32BIT=1)
add_definitions(-DDBG_TARGET_ARM=1)
elseif (CLR_CMAKE_TARGET_ARCH_I386)
add_definitions(-D_TARGET_X86_=1)
add_definitions(-DDBG_TARGET_32BIT=1)
add_definitions(-DDBG_TARGET_X86=1)
elseif (CLR_CMAKE_TARGET_ARCH_MIPS64)
add_definitions(-DDBG_TARGET_MIPS64_UNIX)
add_definitions(-D_TARGET_MIPS64_=1)
add_definitions(-D_TARGET_64BIT_=1)
add_definitions(-DDBG_TARGET_64BIT=1)
add_definitions(-DDBG_TARGET_MIPS64=1)
add_definitions(-DDBG_TARGET_WIN64=1)
add_definitions(-DFEATURE_MULTIREG_RETURN)
else ()
clr_unknown_arch()
endif (CLR_CMAKE_TARGET_ARCH_AMD64)

if(WIN32)
add_definitions(-DWIN32)
add_definitions(-D_WIN32)
add_definitions(-DWINVER=0x0602)
add_definitions(-D_WIN32_WINNT=0x0602)
add_definitions(-DWIN32_LEAN_AND_MEAN=1)
add_definitions(-D_CRT_SECURE_NO_WARNINGS)
endif(WIN32)

#--------------------------------------
# FEATURE Defines
#--------------------------------------

add_definitions(-DFEATURE_CORESYSTEM)

if(CLR_CMAKE_PLATFORM_UNIX)
add_definitions(-DFEATURE_PAL)
add_definitions(-DFEATURE_PAL_ANSI)
endif(CLR_CMAKE_PLATFORM_UNIX)

if(WIN32)
add_definitions(-DFEATURE_COMINTEROP)
endif(WIN32)

if(NOT CMAKE_SYSTEM_NAME STREQUAL NetBSD)
add_definitions(-DFEATURE_HIJACK)
endif(NOT CMAKE_SYSTEM_NAME STREQUAL NetBSD)

if(FEATURE_EVENT_TRACE)
add_definitions(-DFEATURE_EVENT_TRACE=1)
add_definitions(-DFEATURE_PERFTRACING=1)
endif(FEATURE_EVENT_TRACE)

if(CLR_CMAKE_PLATFORM_UNIX_AMD64)
add_definitions(-DFEATURE_MULTIREG_RETURN)
endif (CLR_CMAKE_PLATFORM_UNIX_AMD64)

if(CLR_CMAKE_PLATFORM_UNIX AND CLR_CMAKE_TARGET_ARCH_AMD64)
add_definitions(-DUNIX_AMD64_ABI)
endif(CLR_CMAKE_PLATFORM_UNIX AND CLR_CMAKE_TARGET_ARCH_AMD64)

#--------------------------------------
# Compile Options
#--------------------------------------
include(compileoptions.cmake)

#-----------------------------------------
# Native Projects
#-----------------------------------------
add_subdirectory(src)

@ -6,15 +6,9 @@
<packageSources>
<clear />
<!-- Feeds used in Maestro/Arcade publishing -->
<add key="dotnet6" value="https://dnceng.pkgs.visualstudio.com/public/_packaging/dotnet6/nuget/v3/index.json" />
<add key="dotnet6-transport" value="https://dnceng.pkgs.visualstudio.com/public/_packaging/dotnet6-transport/nuget/v3/index.json" />
<add key="dotnet5" value="https://dnceng.pkgs.visualstudio.com/public/_packaging/dotnet5/nuget/v3/index.json" />
<add key="dotnet5-transport" value="https://dnceng.pkgs.visualstudio.com/public/_packaging/dotnet5-transport/nuget/v3/index.json" />
<add key="dotnet-tools" value="https://pkgs.dev.azure.com/dnceng/public/_packaging/dotnet-tools/nuget/v3/index.json" />
<add key="dotnet-eng" value="https://pkgs.dev.azure.com/dnceng/public/_packaging/dotnet-eng/nuget/v3/index.json" />
<add key="dotnet-diagnostics-tests" value="https://pkgs.dev.azure.com/dnceng/public/_packaging/dotnet-diagnostics-tests/nuget/v3/index.json" />
<!-- Legacy feeds -->
<add key="dotnet-core" value="https://dotnetfeed.blob.core.windows.net/dotnet-core/index.json" />
<!-- Standard feeds -->
<add key="dotnet-public" value="https://pkgs.dev.azure.com/dnceng/public/_packaging/dotnet-public/nuget/v3/index.json" />
</packageSources>

|

|||

58

README.md

58

README.md

|

|

@ -1,60 +1,34 @@

|

|||

.NET Core Diagnostics Repo

|

||||

==========================

|

||||

# .NET Core Diagnostics Repo

|

||||

|

||||

This repository contains the source code for various .NET Core runtime diagnostic tools. It currently contains SOS, the managed portion of SOS, the lldb SOS plugin and various global diagnostic tools. The goals of this repo is to build SOS and the lldb SOS plugin for the portable (glibc based) Linux platform (Centos 7) and the platforms not supported by the portable (musl based) build (Centos 6, Alpine, and macOS) and to test across various indexes in a very large matrix: OSs/distros (Centos 6/7, Ubuntu, Alpine, Fedora, Debian, RHEL 7.2), architectures (x64, x86, arm, arm64), lldb versions (3.9 to 9.0) and .NET Core versions (2.1, 3.1, 5.0.x).

|

||||

This repository contains the source code for dotnet-monitor, a diagnostic tool

|

||||

|

||||

Another goal to make it easier to obtain a version of lldb (currently 3.9) with scripts and documentation for platforms/distros like Centos, Alpine, Fedora, etc. that by default provide really old versions.

|

||||

|

||||

This repo will also allow out of band development of new SOS and lldb plugin features like symbol server support for the .NET Core runtime and solve the source build problem having SOS.NETCore (managed portion of SOS) in the runtime repo.

|

||||

|

||||

See the [GitHub Release tab](https://github.com/dotnet/diagnostics/releases) for notes on SOS and diagnostic tools releases.

|

||||

|

||||

--------------------------

|

||||

## Building the Repository

|

||||

|

||||

The build depends on Git, CMake, Python and of course a C++ compiler. Once these prerequisites are installed

|

||||

the build is simply a matter of invoking the 'build' script (`build.cmd` or `build.sh`) at the base of the

|

||||

repository.

|

||||

See [building instructions](documentation/building.md/windows-instructions.md) in our documentation directory.

|

||||

|

||||

The details of installing the components differ depending on the operating system. See the following

|

||||

pages based on your OS. There is no cross-building across OS (only for ARM, which is built on x64).

|

||||

You have to be on the particular platform to build that platform.

|

||||

## Reporting security issues and security bugs

|

||||

|

||||

To install the platform's prerequisites and build:

|

||||

Security issues and bugs should be reported privately, via email, to the Microsoft Security Response Center (MSRC) <secure@microsoft.com>. You should receive a response within 24 hours. If for some reason you do not, please follow up via email to ensure we received your original message. Further information, including the MSRC PGP key, can be found in the [Security TechCenter](https://www.microsoft.com/msrc/faqs-report-an-issue).

|

||||

|

||||

* [Windows Instructions](documentation/building/windows-instructions.md)

|

||||

* [Linux Instructions](documentation/building/linux-instructions.md)

|

||||

* [MacOS Instructions](documentation/building/osx-instructions.md)

|

||||

* [FreeBSD Instructions](documentation/building/freebsd-instructions.md)

|

||||

* [NetBSD Instructions](documentation/building/netbsd-instructions.md)

|

||||

* [Testing on private runtime builds](documentation/privatebuildtesting.md)

|

||||

|

||||

## SOS and Other Diagnostic Tools

|

||||

|

||||

* [SOS](documentation/sos.md) - About the SOS debugger extension.

|

||||

* [dotnet-dump](documentation/dotnet-dump-instructions.md) - Dump collection and analysis utility.

|

||||

* [dotnet-gcdump](documentation/dotnet-gcdump-instructions.md) - Heap analysis tool that collects gcdumps of live .NET processes.

|

||||

* [dotnet-trace](documentation/dotnet-trace-instructions.md) - Enable the collection of events for a running .NET Core Application to a local trace file.

|

||||

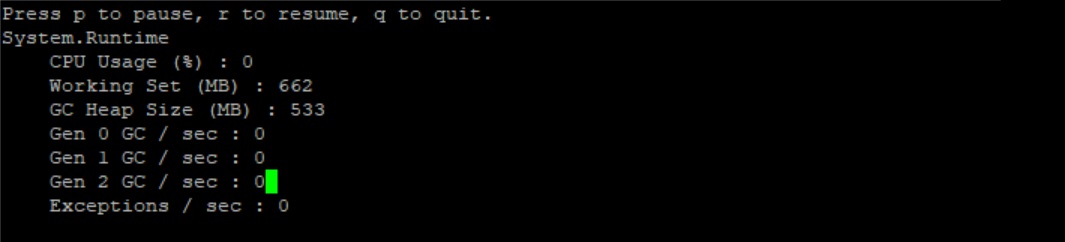

* [dotnet-counters](documentation/dotnet-counters-instructions.md) - Monitor performance counters of a .NET Core application in real time.

|

||||

Also see info about related [Microsoft .NET Core and ASP.NET Core Bug Bounty Program](https://www.microsoft.com/msrc/bounty-dot-net-core).

|

||||

|

||||

## Useful Links

|

||||

|

||||

* [FAQ](documentation/FAQ.md) - Frequently asked questions.

|

||||

* [The LLDB Debugger](http://lldb.llvm.org/index.html) - More information about lldb.

|

||||

* [SOS](https://msdn.microsoft.com/en-us/library/bb190764(v=vs.110).aspx) - More information about SOS.

|

||||

* [Debugging CoreCLR](https://github.com/dotnet/runtime/blob/master/docs/workflow/debugging/coreclr/debugging.md) - Instructions for debugging .NET Core and the CoreCLR runtime.

|

||||

* [dotnet/runtime](https://github.com/dotnet/runtime) - Source for the .NET Core runtime.

|

||||

* [Official Build Instructions](documentation/building/official-build-instructions.md) - Internal official build instructions.

|

||||

See [Introducing dotnet-monitor](https://devblogs.microsoft.com/dotnet/introducing-dotnet-monitor/)

|

||||

|

||||

[//]: # (Begin current test results)

|

||||

## .NET Foundation

|

||||

|

||||

## Build Status

|

||||

.NET Monitor is a [.NET Foundation](https://www.dotnetfoundation.org/projects) project.

|

||||

|

||||

[](https://dnceng.visualstudio.com/public/_build/latest?definitionId=72&branchName=master)

|

||||

There are many .NET related projects on GitHub.

|

||||

|

||||

[//]: # (End current test results)

|

||||

- [.NET home repo](https://github.com/Microsoft/dotnet) - links to 100s of .NET projects, from Microsoft and the community.

|

||||

- [ASP.NET Core home](https://docs.microsoft.com/aspnet/core/?view=aspnetcore-3.1) - the best place to start learning about ASP.NET Core.

|

||||

|

||||

This project has adopted the code of conduct defined by the [Contributor Covenant](http://contributor-covenant.org/) to clarify expected behavior in our community. For more information, see the [.NET Foundation Code of Conduct](http://www.dotnetfoundation.org/code-of-conduct).

|

||||

|

||||

General .NET OSS discussions: [.NET Foundation forums](https://forums.dotnetfoundation.org)

|

||||

|

||||

## License

|

||||

|

||||

The diagnostics repository is licensed under the [MIT license](LICENSE.TXT).

|

||||

.NET monitor is licensed under the [MIT](LICENSE.TXT) license.

|

||||

|

|

|

|||

|

|

@ -1,3 +1,3 @@
@echo off
powershell -ExecutionPolicy ByPass -NoProfile -command "& """%~dp0eng\build.ps1""" -restore -skipmanaged -skipnative %*"
powershell -ExecutionPolicy ByPass -NoProfile -command "& """%~dp0eng\common\build.ps1""" -restore %*"
exit /b %ErrorLevel%

2
Test.cmd

@ -1,3 +1,3 @@
@echo off
powershell -ExecutionPolicy ByPass -NoProfile -command "& """%~dp0eng\build.ps1""" -test -skipmanaged -skipnative %*"
powershell -ExecutionPolicy ByPass -NoProfile -command "& """%~dp0eng\common\build.ps1""" -test %*"
exit /b %ErrorLevel%

2
build.sh

@ -13,4 +13,4 @@ while [[ -h $source ]]; do
done

scriptroot="$( cd -P "$( dirname "$source" )" && pwd )"
"$scriptroot/eng/build.sh" --restore $@
"$scriptroot/eng/common/build.sh" --restore -build $@

@ -1,156 +0,0 @@
# Copyright (c) .NET Foundation and contributors. All rights reserved.
# Licensed under the MIT license. See LICENSE file in the project root for full license information.

if (CLR_CMAKE_PLATFORM_UNIX)
# Disable frame pointer optimizations so profilers can get better call stacks
add_compile_options(-fno-omit-frame-pointer)

# The -fms-extensions enable the stuff like __if_exists, __declspec(uuid()), etc.
add_compile_options(-fms-extensions )
#-fms-compatibility Enable full Microsoft Visual C++ compatibility
#-fms-extensions Accept some non-standard constructs supported by the Microsoft compiler

# Make signed arithmetic overflow of addition, subtraction, and multiplication wrap around
# using twos-complement representation (this is normally undefined according to the C++ spec).
add_compile_options(-fwrapv)

if(CLR_CMAKE_PLATFORM_DARWIN)
# We cannot enable "stack-protector-strong" on OS X due to a bug in clang compiler (current version 7.0.2)
add_compile_options(-fstack-protector)
if(CMAKE_OSX_ARCHITECTURES MATCHES "x86_64")
add_compile_options(-arch x86_64)
elseif(CMAKE_OSX_ARCHITECTURES MATCHES "arm64")
add_compile_options(-arch arm64)
else()
clr_unknown_arch()
endif()
else()
add_compile_options(-fstack-protector-strong)
endif(CLR_CMAKE_PLATFORM_DARWIN)

add_definitions(-DDISABLE_CONTRACTS)
# The -ferror-limit is helpful during the porting, it makes sure the compiler doesn't stop
# after hitting just about 20 errors.
add_compile_options(-ferror-limit=4096)

if (CLR_CMAKE_WARNINGS_ARE_ERRORS)
# All warnings that are not explicitly disabled are reported as errors
add_compile_options(-Werror)
endif(CLR_CMAKE_WARNINGS_ARE_ERRORS)

# Disabled warnings
add_compile_options(-Wno-unused-private-field)
add_compile_options(-Wno-unused-variable)
# Explicit constructor calls are not supported by clang (this->ClassName::ClassName())
add_compile_options(-Wno-microsoft)
# This warning is caused by comparing 'this' to NULL
add_compile_options(-Wno-tautological-compare)
# There are constants of type BOOL used in a condition. But BOOL is defined as int
# and so the compiler thinks that there is a mistake.
add_compile_options(-Wno-constant-logical-operand)
# We use pshpack1/2/4/8.h and poppack.h headers to set and restore packing. However
# clang 6.0 complains when the packing change lifetime is not contained within
# a header file.
add_compile_options(-Wno-pragma-pack)

add_compile_options(-Wno-unknown-warning-option)

#These seem to indicate real issues
add_compile_options(-Wno-invalid-offsetof)
# The following warning indicates that an attribute __attribute__((__ms_struct__)) was applied
# to a struct or a class that has virtual members or a base class. In that case, clang
# may not generate the same object layout as MSVC.
add_compile_options(-Wno-incompatible-ms-struct)

# Some architectures (e.g., ARM) assume char type is unsigned while CoreCLR assumes char is signed
# as x64 does. It has been causing issues in ARM (https://github.com/dotnet/coreclr/issues/4746)
add_compile_options(-fsigned-char)
endif(CLR_CMAKE_PLATFORM_UNIX)

if(CLR_CMAKE_PLATFORM_UNIX_ARM)
# Because we don't use CMAKE_C_COMPILER/CMAKE_CXX_COMPILER to use clang
# we have to set the triple by adding a compiler argument
add_compile_options(-mthumb)
add_compile_options(-mfpu=vfpv3)
add_compile_options(-march=armv7-a)
if(ARM_SOFTFP)
add_definitions(-DARM_SOFTFP)
add_compile_options(-mfloat-abi=softfp)
endif(ARM_SOFTFP)
endif(CLR_CMAKE_PLATFORM_UNIX_ARM)

if (WIN32)
# Compile options for targeting windows

# The following options are set by the razzle build
add_compile_options(/TP) # compile all files as C++
add_compile_options(/d2Zi+) # make optimized builds debugging easier
add_compile_options(/nologo) # Suppress Startup Banner
add_compile_options(/W3) # set warning level to 3
add_compile_options(/WX) # treat warnings as errors
add_compile_options(/Oi) # enable intrinsics
add_compile_options(/Oy-) # disable suppressing of the creation of frame pointers on the call stack for quicker function calls
add_compile_options(/U_MT) # undefine the predefined _MT macro
add_compile_options(/GF) # enable read-only string pooling
add_compile_options(/Gm-) # disable minimal rebuild
add_compile_options(/EHa) # enable C++ EH (w/ SEH exceptions)
add_compile_options(/Zp8) # pack structs on 8-byte boundary
add_compile_options(/Gy) # separate functions for linker
add_compile_options(/Zc:wchar_t-) # C++ language conformance: wchar_t is NOT the native type, but a typedef
add_compile_options(/Zc:forScope) # C++ language conformance: enforce Standard C++ for scoping rules
add_compile_options(/GR-) # disable C++ RTTI
add_compile_options(/FC) # use full pathnames in diagnostics
add_compile_options(/MP) # Build with Multiple Processes (number of processes equal to the number of processors)
add_compile_options(/GS) # Buffer Security Check
add_compile_options(/Zm200) # Specify Precompiled Header Memory Allocation Limit of 150MB
add_compile_options(/wd4960 /wd4961 /wd4603 /wd4627 /wd4838 /wd4456 /wd4457 /wd4458 /wd4459 /wd4091 /we4640)
add_compile_options(/Zi) # enable debugging information
add_compile_options(/ZH:SHA_256) # use SHA256 for generating hashes of compiler processed source files.
add_compile_options(/source-charset:utf-8) # Force MSVC to compile source as UTF-8.

if (CLR_CMAKE_PLATFORM_ARCH_I386)
add_compile_options(/Gz)
endif (CLR_CMAKE_PLATFORM_ARCH_I386)

add_compile_options($<$<OR:$<CONFIG:Release>,$<CONFIG:Relwithdebinfo>>:/GL>)
add_compile_options($<$<OR:$<OR:$<CONFIG:Release>,$<CONFIG:Relwithdebinfo>>,$<CONFIG:Checked>>:/O1>)

if (CLR_CMAKE_PLATFORM_ARCH_AMD64)
# The generator expression in the following command means that the /homeparams option is added only for debug builds
add_compile_options($<$<CONFIG:Debug>:/homeparams>) # Force parameters passed in registers to be written to the stack
endif (CLR_CMAKE_PLATFORM_ARCH_AMD64)

# enable control-flow-guard support for native components for non-Arm64 builds
add_compile_options(/guard:cf)

# Statically linked CRT (libcmt[d].lib, libvcruntime[d].lib and libucrt[d].lib) by default. This is done to avoid
# linking in VCRUNTIME140.DLL for a simplified xcopy experience by reducing the dependency on VC REDIST.
#
# For Release builds, we shall dynamically link into uCRT [ucrtbase.dll] (which is pushed down as a Windows Update on downlevel OS) but
# wont do the same for debug/checked builds since ucrtbased.dll is not redistributable and Debug/Checked builds are not
# production-time scenarios.
add_compile_options($<$<OR:$<CONFIG:Release>,$<CONFIG:Relwithdebinfo>>:/MT>)
add_compile_options($<$<OR:$<CONFIG:Debug>,$<CONFIG:Checked>>:/MTd>)

set(CMAKE_ASM_MASM_FLAGS "${CMAKE_ASM_MASM_FLAGS} /ZH:SHA_256")

endif (WIN32)

if(CLR_CMAKE_ENABLE_CODE_COVERAGE)

if(CLR_CMAKE_PLATFORM_UNIX)
string(TOUPPER ${CMAKE_BUILD_TYPE} UPPERCASE_CMAKE_BUILD_TYPE)
if(NOT UPPERCASE_CMAKE_BUILD_TYPE STREQUAL DEBUG)
message( WARNING "Code coverage results with an optimised (non-Debug) build may be misleading" )
endif(NOT UPPERCASE_CMAKE_BUILD_TYPE STREQUAL DEBUG)

add_compile_options(-fprofile-arcs)
add_compile_options(-ftest-coverage)
set(CLANG_COVERAGE_LINK_FLAGS "--coverage")
set(CMAKE_SHARED_LINKER_FLAGS "${CMAKE_SHARED_LINKER_FLAGS} ${CLANG_COVERAGE_LINK_FLAGS}")
set(CMAKE_EXE_LINKER_FLAGS "${CMAKE_EXE_LINKER_FLAGS} ${CLANG_COVERAGE_LINK_FLAGS}")
else()
message(FATAL_ERROR "Code coverage builds not supported on current platform")
endif(CLR_CMAKE_PLATFORM_UNIX)

endif(CLR_CMAKE_ENABLE_CODE_COVERAGE)

@ -1,27 +0,0 @@
# Contains the crossgen build specific definitions. Included by the leaf crossgen cmake files.

add_definitions(
-DCROSSGEN_COMPILE
-DCROSS_COMPILE
-DFEATURE_NATIVE_IMAGE_GENERATION
-DSELF_NO_HOST)

remove_definitions(
-DFEATURE_CODE_VERSIONING
-DEnC_SUPPORTED
-DFEATURE_EVENT_TRACE=1
-DFEATURE_LOADER_OPTIMIZATION
-DFEATURE_MULTICOREJIT
-DFEATURE_PERFMAP
-DFEATURE_REJIT
-DFEATURE_TIERED_COMPILATION
-DFEATURE_VERSIONING_LOG
)

if(FEATURE_READYTORUN)
add_definitions(-DFEATURE_READYTORUN_COMPILER)
endif(FEATURE_READYTORUN)

if(CLR_CMAKE_PLATFORM_LINUX)
add_definitions(-DFEATURE_PERFMAP)
endif(CLR_CMAKE_PLATFORM_LINUX)

735
debuggees.sln

@ -1,735 +0,0 @@

Microsoft Visual Studio Solution File, Format Version 12.00
# Visual Studio Version 16
VisualStudioVersion = 16.0.29019.234
MinimumVisualStudioVersion = 10.0.40219.1
Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "TestDebuggee", "src\SOS\lldbplugin.tests\TestDebuggee\TestDebuggee.csproj", "{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}"
EndProject
Project("{2150E333-8FDC-42A3-9474-1A3956D46DE8}") = "SOS", "SOS", "{41638A4C-0DAF-47ED-A774-ECBBAC0315D7}"
EndProject
Project("{2150E333-8FDC-42A3-9474-1A3956D46DE8}") = "src", "src", "{19FAB78C-3351-4911-8F0C-8C6056401740}"
EndProject
Project("{2150E333-8FDC-42A3-9474-1A3956D46DE8}") = "Debuggees", "Debuggees", "{C3072949-6D24-451B-A308-2F3621F858B0}"
EndProject
Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "WebApp3", "src\SOS\SOS.UnitTests\Debuggees\WebApp3\WebApp3.csproj", "{252E5845-8D4C-4306-9D8F-ED2E2F7005F6}"
EndProject
Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "WebApp", "src\SOS\SOS.UnitTests\Debuggees\WebApp\WebApp.csproj", "{E7FEA82E-0E16-4868-B122-4B0BC0014E7F}"
EndProject
Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "SimpleThrow", "src\SOS\SOS.UnitTests\Debuggees\SimpleThrow\SimpleThrow.csproj", "{179EF543-E30A-4428-ABA0-2E2621860173}"
EndProject
Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "DivZero", "src\SOS\SOS.UnitTests\Debuggees\DivZero\DivZero.csproj", "{447AC053-2E0A-4119-BD11-30A4A8E3F765}"
EndProject
Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "GCWhere", "src\SOS\SOS.UnitTests\Debuggees\GCWhere\GCWhere.csproj", "{664F46A9-3C99-489B-AAB9-4CD3A430C425}"
EndProject
Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "NestedExceptionTest", "src\SOS\SOS.UnitTests\Debuggees\NestedExceptionTest\NestedExceptionTest.csproj", "{0CB805C8-0B76-4B1D-8AAF-48535B180448}"
EndProject
Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "Overflow", "src\SOS\SOS.UnitTests\Debuggees\Overflow\Overflow.csproj", "{20251748-AA7B-45BE-ADAA-C9375F5CC80F}"
EndProject
Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "ReflectionTest", "src\SOS\SOS.UnitTests\Debuggees\ReflectionTest\ReflectionTest.csproj", "{DDDA69DF-2C4C-477A-B6C9-B4FE73C6E288}"
EndProject
Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "TaskNestedException", "src\SOS\SOS.UnitTests\Debuggees\TaskNestedException\TaskNestedException\TaskNestedException.csproj", "{73EA5188-1E4F-42D8-B63E-F1B878A4EB63}"
EndProject
Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "RandomUserLibrary", "src\SOS\SOS.UnitTests\Debuggees\TaskNestedException\RandomUserLibrary\RandomUserLibrary.csproj", "{B50D14DB-8EE5-47BD-B412-62FA5C693CC7}"
EndProject
Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "SymbolTestApp", "src\SOS\SOS.UnitTests\Debuggees\SymbolTestApp\SymbolTestApp\SymbolTestApp.csproj", "{112FE2A7-3FD2-4496-8A14-171898AD5CF5}"
EndProject
Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "SymbolTestDll", "src\SOS\SOS.UnitTests\Debuggees\SymbolTestApp\SymbolTestDll\SymbolTestDll.csproj", "{8C27904A-47C0-44C7-B191-88FF34580CBE}"
EndProject
Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "LineNums", "src\SOS\SOS.UnitTests\Debuggees\LineNums\LineNums.csproj", "{84881FB8-37E1-4D9B-B27E-9831C30DCC04}"
EndProject
Project("{9A19103F-16F7-4668-BE54-9A1E7A4F7556}") = "GCPOH", "src\SOS\SOS.UnitTests\Debuggees\GCPOH\GCPOH.csproj", "{0A34CA51-8B8C-41A1-BE24-AB2C574EA144}"
EndProject
Project("{FAE04EC0-301F-11D3-BF4B-00C04F79EFBC}") = "DotnetDumpCommands", "src\SOS\SOS.UnitTests\Debuggees\DotnetDumpCommands\DotnetDumpCommands.csproj", "{F9A69812-DC52-428D-9DB1-8B831A8FF776}"
EndProject
Global
GlobalSection(SolutionConfigurationPlatforms) = preSolution
Checked|Any CPU = Checked|Any CPU
Checked|ARM = Checked|ARM
Checked|ARM64 = Checked|ARM64
Checked|x64 = Checked|x64
Checked|x86 = Checked|x86
Debug|Any CPU = Debug|Any CPU
Debug|ARM = Debug|ARM
Debug|ARM64 = Debug|ARM64
Debug|x64 = Debug|x64
Debug|x86 = Debug|x86
Release|Any CPU = Release|Any CPU
Release|ARM = Release|ARM
Release|ARM64 = Release|ARM64
Release|x64 = Release|x64
Release|x86 = Release|x86
RelWithDebInfo|Any CPU = RelWithDebInfo|Any CPU
RelWithDebInfo|ARM = RelWithDebInfo|ARM
RelWithDebInfo|ARM64 = RelWithDebInfo|ARM64
RelWithDebInfo|x64 = RelWithDebInfo|x64
RelWithDebInfo|x86 = RelWithDebInfo|x86
EndGlobalSection
GlobalSection(ProjectConfigurationPlatforms) = postSolution
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Checked|Any CPU.ActiveCfg = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Checked|Any CPU.Build.0 = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Checked|ARM.ActiveCfg = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Checked|ARM.Build.0 = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Checked|ARM64.ActiveCfg = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Checked|ARM64.Build.0 = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Checked|x64.ActiveCfg = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Checked|x64.Build.0 = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Checked|x86.ActiveCfg = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Checked|x86.Build.0 = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Debug|Any CPU.ActiveCfg = Debug|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Debug|Any CPU.Build.0 = Debug|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Debug|ARM.ActiveCfg = Debug|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Debug|ARM.Build.0 = Debug|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Debug|ARM64.ActiveCfg = Debug|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Debug|ARM64.Build.0 = Debug|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Debug|x64.ActiveCfg = Debug|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Debug|x64.Build.0 = Debug|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Debug|x86.ActiveCfg = Debug|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Debug|x86.Build.0 = Debug|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Release|Any CPU.ActiveCfg = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Release|Any CPU.Build.0 = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Release|ARM.ActiveCfg = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Release|ARM.Build.0 = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Release|ARM64.ActiveCfg = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Release|ARM64.Build.0 = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Release|x64.ActiveCfg = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Release|x64.Build.0 = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Release|x86.ActiveCfg = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.Release|x86.Build.0 = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.RelWithDebInfo|Any CPU.ActiveCfg = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.RelWithDebInfo|Any CPU.Build.0 = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.RelWithDebInfo|ARM.ActiveCfg = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.RelWithDebInfo|ARM.Build.0 = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.RelWithDebInfo|ARM64.ActiveCfg = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.RelWithDebInfo|ARM64.Build.0 = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.RelWithDebInfo|x64.ActiveCfg = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.RelWithDebInfo|x64.Build.0 = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.RelWithDebInfo|x86.ActiveCfg = Release|Any CPU
{6C43BE85-F8C3-4D76-8050-F25CE953A7FD}.RelWithDebInfo|x86.Build.0 = Release|Any CPU
{252E5845-8D4C-4306-9D8F-ED2E2F7005F6}.Checked|Any CPU.ActiveCfg = Debug|Any CPU
{252E5845-8D4C-4306-9D8F-ED2E2F7005F6}.Checked|Any CPU.Build.0 = Debug|Any CPU
{252E5845-8D4C-4306-9D8F-ED2E2F7005F6}.Checked|ARM.ActiveCfg = Debug|Any CPU
{252E5845-8D4C-4306-9D8F-ED2E2F7005F6}.Checked|ARM.Build.0 = Debug|Any CPU
{252E5845-8D4C-4306-9D8F-ED2E2F7005F6}.Checked|ARM64.ActiveCfg = Debug|Any CPU
{252E5845-8D4C-4306-9D8F-ED2E2F7005F6}.Checked|ARM64.Build.0 = Debug|Any CPU
{252E5845-8D4C-4306-9D8F-ED2E2F7005F6}.Checked|x64.ActiveCfg = Debug|Any CPU
{252E5845-8D4C-4306-9D8F-ED2E2F7005F6}.Checked|x64.Build.0 = Debug|Any CPU
{252E5845-8D4C-4306-9D8F-ED2E2F7005F6}.Checked|x86.ActiveCfg = Debug|Any CPU
{252E5845-8D4C-4306-9D8F-ED2E2F7005F6}.Checked|x86.Build.0 = Debug|Any CPU
{252E5845-8D4C-4306-9D8F-ED2E2F7005F6}.Debug|Any CPU.ActiveCfg = Debug|Any CPU
{252E5845-8D4C-4306-9D8F-ED2E2F7005F6}.Debug|Any CPU.Build.0 = Debug|Any CPU
{252E5845-8D4C-4306-9D8F-ED2E2F7005F6}.Debug|ARM.ActiveCfg = Debug|Any CPU
{252E5845-8D4C-4306-9D8F-ED2E2F7005F6}.Debug|ARM.Build.0 = Debug|Any CPU
{252E5845-8D4C-4306-9D8F-ED2E2F7005F6}.Debug|ARM64.ActiveCfg = Debug|Any CPU
{252E5845-8D4C-4306-9D8F-ED2E2F7005F6}.Debug|ARM64.Build.0 = Debug|Any CPU
{252E5845-8D4C-4306-9D8F-ED2E2F7005F6}.Debug|x64.ActiveCfg = Debug|Any CPU