Before this commit, iclasses were "shady", or not protected by write

barriers. Because of that, the GC needs to spend more time marking these

objects than otherwise.

Applications that make heavy use of modules should see a reduction in GC

time as they have a significant number of live iclasses on the heap.

- Put logic for iclass method table ownership into a function

- Remove calls to WB_UNPROTECT and insert write barriers for iclasses

This commit relies on the following invariant: for any non-origin

iclass `I`, `RCLASS_M_TBL(I) == RCLASS_M_TBL(RBasic(I)->klass)`. This

invariant did not hold prior to 98286e9 for classes and modules that

have prepended modules.

[Feature #16984]
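
Pure Ruby cannot inspect `RCLASS_M_TBL`, but one observable behavior is consistent with an iclass sharing its module's method table: a method added to a module after inclusion is immediately visible through the including class. A minimal sketch, where `Greeter` and `Person` are hypothetical names:

```ruby
module Greeter
  def greet
    "hello"
  end
end

class Person
  include Greeter # the VM places an iclass for Greeter in Person's ancestry
end

person = Person.new
before = person.respond_to?(:shout)

# Reopen the module *after* it has been included.
module Greeter
  def shout
    greet.upcase + "!"
  end
end

after = person.shout

puts before # false
puts after  # HELLO!
```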

To optimize the sweep phase, there is a bit operation that sets mark

bits for the out-of-range bits in the last bit_t.

However, if there are no out-of-range bits, it sets every bit of the

last bit_t as a mark bit, which breaks the assumption (unmarked objects

will be swept).

GC_DEBUG=1 makes sizeof(RVALUE)=64 on my machine and this condition

happens.

It took me one Saturday to debug this.
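
The failure mode can be modelled with plain integer arithmetic. This is a simplified sketch, not the code in gc.c: it assumes a 64-bit bitmap word, and `tail_mask_unguarded` / `tail_mask_guarded` are hypothetical helper names. `used` is the number of bits in the last word that correspond to real slots.

```ruby
WORD_BITS = 64                   # assumed width of one bitmap word (bit_t)
WORD_MASK = (1 << WORD_BITS) - 1 # Ruby integers are unbounded, so mask explicitly

# Mask that pre-marks the unused high bits of the last word, with no guard.
def tail_mask_unguarded(used)
  ~((1 << used) - 1) & WORD_MASK
end

# Guarded version: if the slots end exactly on a word boundary (used == 0),
# there are no out-of-range bits, so nothing may be pre-marked.
def tail_mask_guarded(used)
  used.zero? ? 0 : tail_mask_unguarded(used)
end

puts format("%016x", tail_mask_unguarded(25)) # fffffffffe000000
puts format("%016x", tail_mask_unguarded(0))  # ffffffffffffffff
puts format("%016x", tail_mask_guarded(0))    # 0000000000000000
```

With `used == 0` the unguarded mask covers the whole word, so 64 slots would be treated as marked and never swept.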

imemo_callcache and imemo_callinfo were not handled by the `objspace`

module and were showing up as "unknown" in the dump. Extract the code for

naming imemos and use that in both the GC and the `objspace` module.

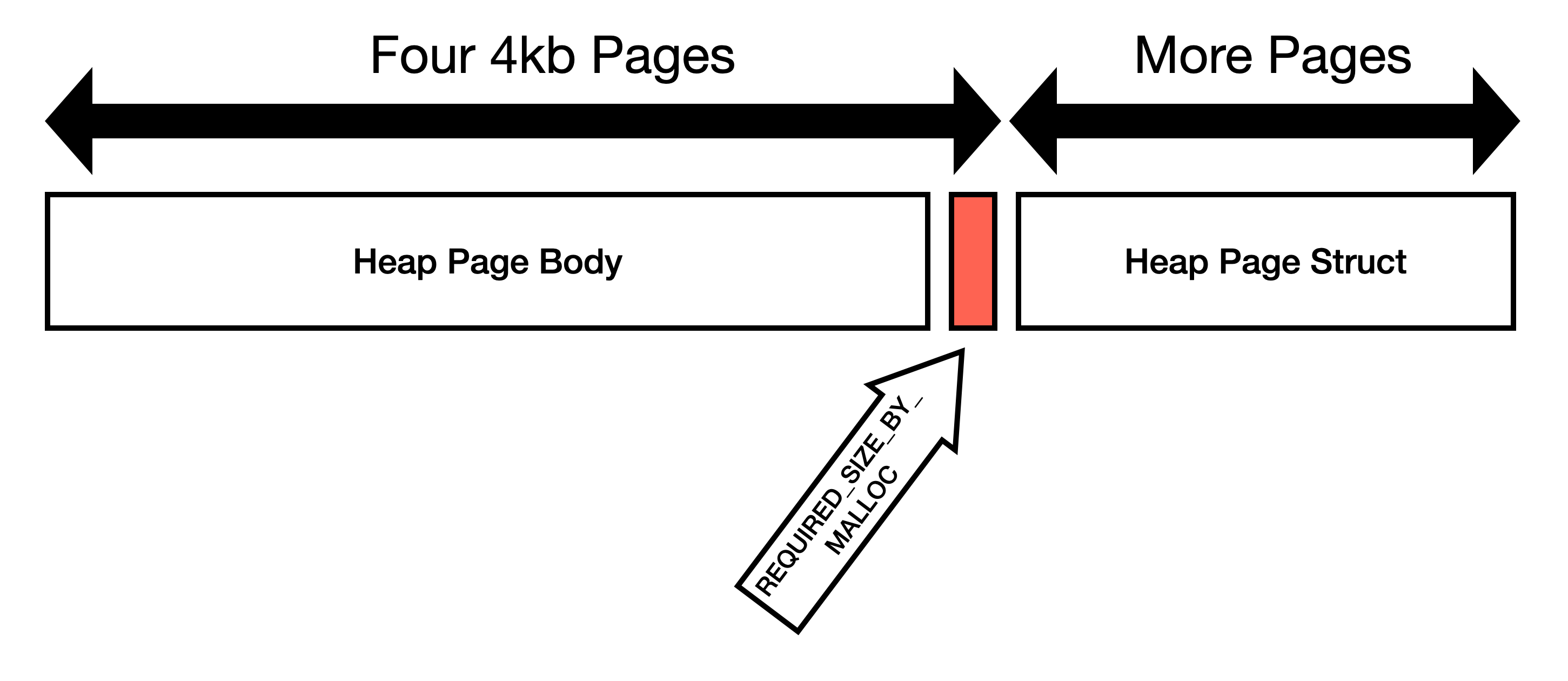

This commit expands heap pages to be exactly 16KiB and eliminates the

`REQUIRED_SIZE_BY_MALLOC` constant.

I believe the goal of `REQUIRED_SIZE_BY_MALLOC` was to make the heap

pages consume some multiple of OS page size. 16KiB is convenient because

OS page size is typically 4KiB, so one Ruby page is four OS pages.

Do not guess how malloc works
=============================

We should not try to guess how `malloc` works and instead request (and

use) four OS pages.

Here is my reasoning:

1. Not all mallocs will store metadata in the same region as user requested

memory. jemalloc specifically states[1]:

> Information about the states of the runs is stored as a page map at the beginning of each chunk.

2. We're using `posix_memalign` to request memory. This means that the

first address must be divisible by the alignment. Our allocation is

page aligned, so if malloc is storing metadata *before* the page,

then we've already crossed page boundaries.

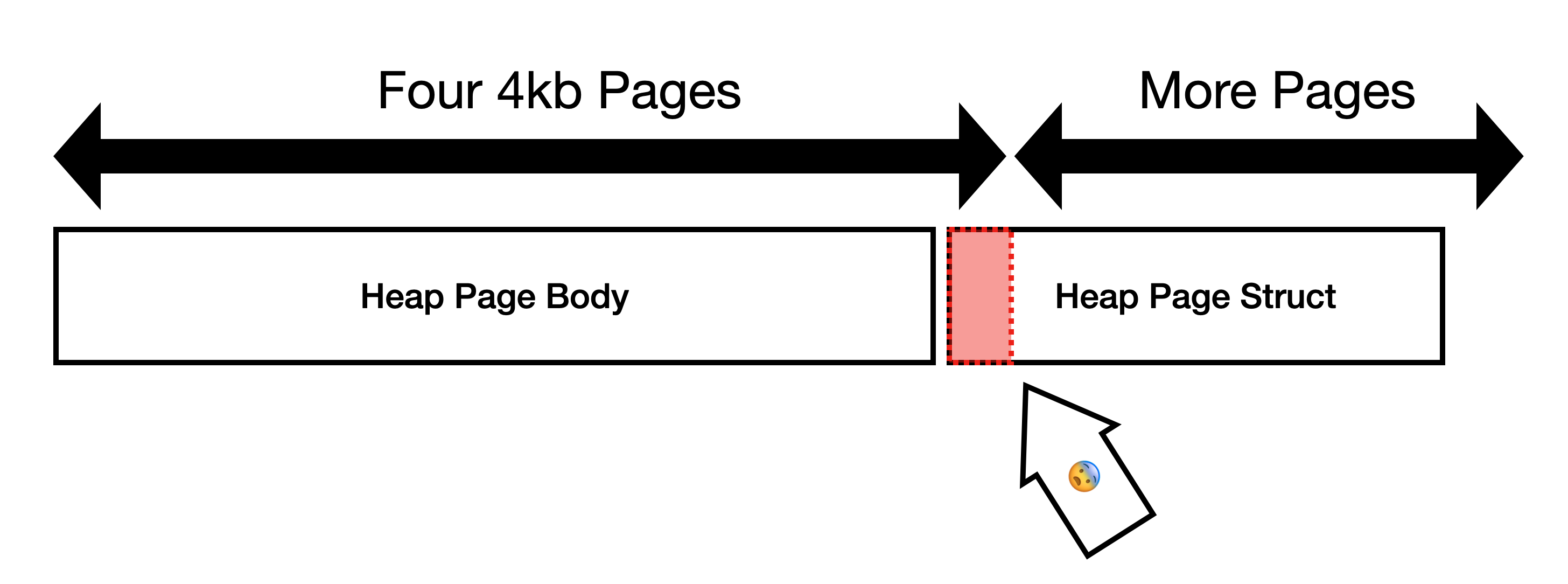

3. Some allocators like glibc will use the memory at the end of the

page. I am able to demonstrate that glibc will return pointers

within the page boundary that contains `heap_page_body`[2]. We

*expected* the allocation to look like this:

But since `heap_page` is allocated immediately after

`heap_page_body`[3], instead the layout looks like this:

This is not optimal because `heap_page` gets allocated immediately

after `heap_page_body`. We frequently write to `heap_page`, so the

bottom OS page of `heap_page_body` is very likely to be copied.

One more object per page
========================

In jemalloc, allocation requests are rounded to the nearest boundary,

which in this case is 16KiB[4], so `REQUIRED_SIZE_BY_MALLOC` space is

just wasted on jemalloc.

On glibc, the space is not wasted, but instead it is very likely to

cause page faults.

Instead of wasting space or causing page faults, let's just use the space

to store one more Ruby object. Using the space to store one more Ruby

object will prevent page faults, stop wasting space, decrease memory

usage, decrease GC time, etc.
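
The "one more object" claim can be checked with a little arithmetic. The sketch below is an illustration under assumptions that are not stated in this message: a 64-bit build with 40-byte slots, an 8-byte per-page header, and a former reserve of five `size_t` words.

```ruby
HEAP_PAGE_ALIGN = 16 * 1024 # 16KiB Ruby heap page
OS_PAGE_SIZE    = 4 * 1024  # typical OS page size

# Assumed values for a 64-bit build (not taken from the text above):
MALLOC_RESERVE = 5 * 8 # assumed old REQUIRED_SIZE_BY_MALLOC
PAGE_HEADER    = 8     # assumed per-page header size
SLOT_SIZE      = 40    # assumed sizeof(RVALUE)

# Number of whole slots that fit in a page body of the given size.
def slots_per_page(page_bytes)
  (page_bytes - PAGE_HEADER) / SLOT_SIZE
end

os_pages  = HEAP_PAGE_ALIGN / OS_PAGE_SIZE
old_slots = slots_per_page(HEAP_PAGE_ALIGN - MALLOC_RESERVE)
new_slots = slots_per_page(HEAP_PAGE_ALIGN)

puts os_pages  # 4
puts old_slots # 408
puts new_slots # 409
```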

1. https://people.freebsd.org/~jasone/jemalloc/bsdcan2006/jemalloc.pdf

2. 33390d15e7

3. 289a28e68f/gc.c (L1757-L1763)

4. https://people.freebsd.org/~jasone/jemalloc/bsdcan2006/jemalloc.pdf page 4

Co-authored-by: John Hawthorn <john@hawthorn.email>

This commit converts RMoved slots to a doubly linked list. I want to

convert this to a doubly linked list because the read barrier (currently

in development) must remove nodes from the moved list sometimes.

Removing nodes from the list is much easier if the list is doubly

linked. In addition, we can reuse the list manipulation routines.
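
To illustrate why removal is easier, here is a minimal Ruby model of a doubly linked list. `MovedNode` and `MovedList` are hypothetical stand-ins, not the C structures: with a back pointer, unlinking a node in the middle needs no walk from the head.

```ruby
# Hypothetical stand-in for a moved slot; prev/next_node model the list links.
MovedNode = Struct.new(:id, :prev, :next_node)

class MovedList
  attr_reader :head

  def initialize
    @head = nil
  end

  # Insert at the front of the list.
  def push(node)
    node.prev = nil
    node.next_node = @head
    @head.prev = node if @head
    @head = node
  end

  # O(1) unlink: the node already knows both of its neighbours.
  def remove(node)
    if node.prev
      node.prev.next_node = node.next_node
    else
      @head = node.next_node
    end
    node.next_node.prev = node.prev if node.next_node
    node.prev = node.next_node = nil
  end

  def ids
    out = []
    n = @head
    while n
      out << n.id
      n = n.next_node
    end
    out
  end
end

list = MovedList.new
a, b, c = %w[a b c].map { |id| MovedNode.new(id) }
[a, b, c].each { |n| list.push(n) }
list.remove(b)
p list.ids # ["c", "a"]
```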

Ensure that the argument is an Integer, or implicitly convert it to

one, before dereferencing it as a Bignum. This addresses a regression

in b99833baec.

Reported by u75615 at https://hackerone.com/reports/898614

This reverts commit 02b216e5a7.

This reverts commit 9b8825b6f9.

I found that combining sweep and move is not safe. I don't think that

we can do compaction concurrently with _anything_ unless there is a read

barrier installed.

Here is a simple example. A class object is freed, and during its free

step, it tries to remove itself from its parent's subclass list.

However, during the sweep step, the parent class was moved and the

"currently being freed" class didn't have references updated yet. So we

get a segv like this:

```
(lldb) bt
* thread #1, name = 'ruby', stop reason = signal SIGSEGV
  * frame #0: 0x0000560763e344cb ruby`rb_st_lookup at st.c:320:43
    frame #1: 0x0000560763e344cb ruby`rb_st_lookup(tab=0x2f7469672f6e6f72, key=3809, value=0x0000560765bf2270) at st.c:1010
    frame #2: 0x0000560763e8f16a ruby`rb_search_class_path at variable.c:99:9
    frame #3: 0x0000560763e8f141 ruby`rb_search_class_path at variable.c:145
    frame #4: 0x0000560763e8f141 ruby`rb_search_class_path(klass=94589785585880) at variable.c:191
    frame #5: 0x0000560763ec744e ruby`rb_vm_bugreport at vm_dump.c:996:17
    frame #6: 0x0000560763f5b958 ruby`rb_bug_for_fatal_signal at error.c:675:5
    frame #7: 0x0000560763e27dad ruby`sigsegv(sig=<unavailable>, info=<unavailable>, ctx=<unavailable>) at signal.c:955:5
    frame #8: 0x00007f8b891d33c0 libpthread.so.0`___lldb_unnamed_symbol1$$libpthread.so.0 + 1
    frame #9: 0x0000560763efa8bb ruby`rb_class_remove_from_super_subclasses(klass=94589790314280) at class.c:93:56
    frame #10: 0x0000560763d10cb7 ruby`gc_sweep_step at gc.c:2674:2
    frame #11: 0x0000560763d1187b ruby`gc_sweep at gc.c:4540:2
    frame #12: 0x0000560763d101f0 ruby`gc_start at gc.c:6797:6
    frame #13: 0x0000560763d15153 ruby`rb_gc_compact at gc.c:7479:12
    frame #14: 0x0000560763eb4eb8 ruby`vm_exec_core at vm_insnhelper.c:5183:13
    frame #15: 0x0000560763ea9bae ruby`rb_vm_exec at vm.c:1953:22
    frame #16: 0x0000560763eac08d ruby`rb_yield at vm.c:1132:9
    frame #17: 0x0000560763edb4f2 ruby`rb_ary_collect at array.c:3186:9
    frame #18: 0x0000560763e9ee15 ruby`vm_call_cfunc_with_frame at vm_insnhelper.c:2575:12
    frame #19: 0x0000560763eb2e66 ruby`vm_exec_core at vm_insnhelper.c:4177:11
    frame #20: 0x0000560763ea9bae ruby`rb_vm_exec at vm.c:1953:22
    frame #21: 0x0000560763eac08d ruby`rb_yield at vm.c:1132:9
    frame #22: 0x0000560763edb4f2 ruby`rb_ary_collect at array.c:3186:9
    frame #23: 0x0000560763e9ee15 ruby`vm_call_cfunc_with_frame at vm_insnhelper.c:2575:12
    frame #24: 0x0000560763eb2e66 ruby`vm_exec_core at vm_insnhelper.c:4177:11
    frame #25: 0x0000560763ea9bae ruby`rb_vm_exec at vm.c:1953:22
    frame #26: 0x0000560763ceee01 ruby`rb_ec_exec_node(ec=0x0000560765afa530, n=0x0000560765b088e0) at eval.c:296:2
    frame #27: 0x0000560763cf3b7b ruby`ruby_run_node(n=0x0000560765b088e0) at eval.c:354:12
    frame #28: 0x0000560763cee4a3 ruby`main(argc=<unavailable>, argv=<unavailable>) at main.c:50:9
    frame #29: 0x00007f8b88e560b3 libc.so.6`__libc_start_main + 243
    frame #30: 0x0000560763cee4ee ruby`_start + 46
(lldb) f 9
frame #9: 0x0000560763efa8bb ruby`rb_class_remove_from_super_subclasses(klass=94589790314280) at class.c:93:56
   90
   91       *RCLASS_EXT(klass)->parent_subclasses = entry->next;
   92       if (entry->next) {
-> 93           RCLASS_EXT(entry->next->klass)->parent_subclasses = RCLASS_EXT(klass)->parent_subclasses;
   94       }
   95       xfree(entry);
   96   }
(lldb) command script import -r misc/lldb_cruby.py
lldb scripts for ruby has been installed.
(lldb) rp entry->next->klass
(struct RMoved) $1 = (flags = 30, destination = 94589792806680, next = 94589784369160)
(lldb)
```

We don't need to resolve symbols when freeing cc tables, so this commit

just changes the id table iterator to look at values rather than keys

and values.

This commit combines the sweep step with moving objects. With this

commit, we can do:

```ruby
GC.start(compact: true)
```

This code will do the following 3 steps:

1. Fully mark the heap

2. Sweep + Move objects

3. Update references

By default, this will compact in the order that heap pages were allocated.

In other words, objects will be packed towards older heap pages (as

opposed to heap pages with more pinned objects like `GC.compact` does).
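
The "move, then update references" part of these steps can be pictured with a toy model. This is only a sketch and not how gc.c is written: the heap is a flat array of slots in page-allocation order, every non-`nil` slot is assumed to have survived marking, and `Obj` and `compact!` are hypothetical names.

```ruby
# refs holds the slot indexes this object points at.
Obj = Struct.new(:name, :refs)

# Pack live objects toward the front (older pages) of the heap,
# then rewrite every reference through the forwarding table.
def compact!(heap)
  forwarding = {} # old slot index => new slot index
  free = 0
  scan = heap.length - 1

  loop do
    free += 1 while free < scan && heap[free]     # next free slot from the front
    scan -= 1 while scan > free && heap[scan].nil? # next live object from the back
    break if free >= scan

    heap[free] = heap[scan] # move the object
    heap[scan] = nil
    forwarding[scan] = free
  end

  # Update references that still point at the old locations.
  heap.each do |obj|
    next unless obj
    obj.refs.map! { |i| forwarding.fetch(i, i) }
  end

  forwarding
end

a = Obj.new("A", [4])
b = Obj.new("B", [])
c = Obj.new("C", [2])
heap = [a, nil, b, nil, c]

forwarding = compact!(heap)
p heap.map { |o| o && o.name } # ["A", "C", "B", nil, nil]
p forwarding                   # {4=>1} (printed as {4 => 1} on newer Rubies)
p a.refs                       # [1]
```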

If a module has an origin, and that module is included in another

module or class, previously the iclass created for the module had

an origin pointer to the module's origin instead of the iclass's

origin.

Setting the origin pointer correctly requires using a stack, since

the origin iclass is not created until after the iclass itself.

Use a hidden Ruby array to implement that stack.

Correctly assigning the origin pointers in the iclass caused a

use-after-free in GC. If a module with an origin is included

in a class, the iclass shares a method table with the module

and the iclass origin shares a method table with module origin.

Mark iclass origin with a flag that notes that even though the

iclass is an origin, it shares a method table, so the method table

should not be garbage collected. The shared method table will be

garbage collected when the module origin is garbage collected.

I've tested that this does not introduce a memory leak.

This change caused a VM assertion failure, which was traced to callable

method entries using the incorrect defined_class. Update

rb_vm_check_redefinition_opt_method and find_defined_class_by_owner

to treat iclass origins differently from class origins to avoid this

issue.

This also includes a fix for Module#included_modules to skip

iclasses with origins.

Fixes [Bug #16736]
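
For readers unfamiliar with the setup, the sketch below builds the shape described above: a module that has an origin (because something is prepended to it) is included in a class. `Loud`, `Speaker`, and `Robot` are hypothetical names, and the output shown is what a Ruby containing this fix is expected to print.

```ruby
module Loud
  def speak
    super.upcase
  end
end

module Speaker
  prepend Loud # prepending gives Speaker an origin

  def speak
    "beep"
  end
end

class Robot
  include Speaker # creates iclasses for Loud and Speaker in Robot's ancestry
end

p Robot.new.speak                         # "BEEP"
p Robot.ancestors.take(3)                 # [Robot, Loud, Speaker]
p Robot.included_modules.count(Speaker)   # 1
```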

Ruby's GC is incremental, meaning that during the mark phase (and also

the sweep phase) programs are allowed to run. This means that programs

can allocate objects before the mark or sweep phase has actually

completed. Those objects may not have had a chance to be marked, so we

can't know if they are movable or not. Something that references the

newly created object might have called the pinning function during the

mark phase, but since the mark phase hasn't run we can't know if there

is a "pinning" relationship.

To be conservative, we must only allow objects that are both marked and

not pinned to move.
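
The rule can be written as a single predicate. A minimal sketch, where `Slot` and `movable?` are hypothetical names rather than anything in gc.c:

```ruby
# marked: the mark phase has visited this object
# pinned: something asked the GC not to move it
Slot = Struct.new(:marked, :pinned)

def movable?(slot)
  slot.marked && !slot.pinned
end

fresh  = Slot.new(false, false) # allocated mid-cycle, never marked
pinned = Slot.new(true, true)   # marked, but pinned during marking
normal = Slot.new(true, false)  # marked and not pinned

p movable?(fresh)  # false
p movable?(pinned) # false
p movable?(normal) # true
```

The `fresh` case is the one this message is about: the object is not pinned, but since it was never marked we cannot know whether it should have been.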

This patch allows global variables that have been assigned in Ruby to

move. I added a new function for the GC to call that will update

global references and introduced a new callback in the global variable

struct for updating references.

Only pure Ruby global variables are supported right now; other

references will be pinned.

No objects should ever reference a `T_MOVED` slot. If they do, it's

absolutely a bug. If we kill the process when `T_MOVED` is pushed on

the mark stack it will make it easier to identify which object holds a

reference that hasn't been updated.
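
The idea is a fail-fast check at the point where objects enter the mark stack. The sketch below models it in Ruby with a hypothetical `MarkStack` class and uses an exception where the VM would abort the process:

```ruby
class MarkStack
  def initialize
    @items = []
  end

  # Refuse T_MOVED slots immediately, so the failure points at the
  # object currently being marked rather than at a later crash.
  def push(obj)
    if obj[:type] == :T_MOVED
      raise "BUG: T_MOVED slot pushed on mark stack: #{obj.inspect}"
    end
    @items << obj
  end

  def size
    @items.size
  end
end

stack = MarkStack.new
stack.push({ type: :T_STRING })

begin
  stack.push({ type: :T_MOVED })
rescue RuntimeError => e
  puts e.message
end
```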

We only need to loop `T_MASK` times once. Also, not every value between

0 and `T_MASK` is an actual Ruby type. Before this change, some

integers were being added to the result hash even though they aren't

actual types. This patch omits considered / moved entries that total 0,

cleaning up the result hash and eliminating these "fake types".
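
A rough Ruby model of the new behavior: one pass over the type tags, skipping any tag whose count is zero. `compact_stats` and the partial `TYPE_NAMES` table are illustrative only; the counts would come from the GC.

```ruby
T_MASK = 0x1f

# Partial, illustrative mapping of type tags to names.
TYPE_NAMES = { 0x01 => :T_OBJECT, 0x05 => :T_STRING, 0x07 => :T_ARRAY }.freeze

def compact_stats(considered, moved)
  result = { considered: {}, moved: {} }
  (0..T_MASK).each do |type| # single pass fills both hashes
    name = TYPE_NAMES.fetch(type, type)
    result[:considered][name] = considered[type] if considered[type] > 0
    result[:moved][name] = moved[type] if moved[type] > 0
  end
  result
end

considered = Array.new(T_MASK + 1, 0)
moved      = Array.new(T_MASK + 1, 0)
considered[0x05] = 10
considered[0x07] = 3
moved[0x05] = 4

stats = compact_stats(considered, moved)
p stats[:considered] # contains only T_STRING and T_ARRAY
p stats[:moved]      # contains only T_STRING
```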

This compile-time option has been broken for years (at least since

commit 49369ef173, according to git

bisect). Let's delete code that no longer works.