Noticed that struct rb_builtin_function is a purely compile-time
constant. MJIT can eliminate some runtime calculations by statically
generating a dedicated C code generator for each builtin function.
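Here is a minimal sketch of the idea. It is not MJIT's actual code. It assumes a hypothetical `builtin_desc` struct standing in for `struct rb_builtin_function`, and a hypothetical shape for the emitted line (`ec`, `self`, `stack[i]`). Because the descriptor is a compile-time constant, a per-builtin generator can bake the callee name and argument count into the generated C source instead of reading them at run time.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical stand-in for struct rb_builtin_function: every field is
 * known at compile time, so the generated code never needs to read it. */
struct builtin_desc {
    const char *func_name; /* C function implementing the builtin */
    int argc;              /* fixed number of arguments */
};

/* Emit a call specialized for one builtin into buf. The function name and
 * the argument count become literals in the generated source, so the
 * JIT-ed code does not branch on argc or load a function pointer.
 * Returns the snprintf-style length (>= size means truncated). */
int
generate_builtin_call(char *buf, size_t size, const struct builtin_desc *bf)
{
    int n = snprintf(buf, size, "val = %s(ec, self", bf->func_name);
    for (int i = 0; i < bf->argc && n >= 0 && (size_t)n < size; i++) {
        n += snprintf(buf + n, size - n, ", stack[%d]", i);
    }
    if (n >= 0 && (size_t)n < size) {
        n += snprintf(buf + n, size - n, ");");
    }
    return n;
}
```

For a two-argument builtin named `builtin_hypothetical_fill`, this produces `val = builtin_hypothetical_fill(ec, self, stack[0], stack[1]);`.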

* Enhanced RDoc for Array#fill

* Update array.c

There's one more at 5072. I'll get it.

Co-authored-by: Eric Hodel <drbrain@segment7.net>

* Update array.c

Co-authored-by: Eric Hodel <drbrain@segment7.net>

imemo_callcache and imemo_callinfo were not handled by the `objspace`
module and were showing up as "unknown" in the dump. Extract the code for
naming imemos and use that in both the GC and the `objspace` module.
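A minimal sketch of the shared-helper approach, assuming a hypothetical `imemo_kind` enum and `imemo_kind_name()` function (Ruby's real enum and helper names may differ). Keeping the type-to-name mapping in a single switch means a newly added type only has to be named once for both the GC and `objspace` to report it.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical subset of imemo types; the real enum lives in Ruby's internals. */
enum imemo_kind {
    IMEMO_ENV,
    IMEMO_CREF,
    IMEMO_MENT,
    IMEMO_CALLINFO,
    IMEMO_CALLCACHE,
};

/* Single source of truth for imemo names. If both the GC debug output and
 * the objspace dumper call this, naming a new type here fixes both. */
const char *
imemo_kind_name(enum imemo_kind kind)
{
    switch (kind) {
      case IMEMO_ENV:       return "env";
      case IMEMO_CREF:      return "cref";
      case IMEMO_MENT:      return "ment";
      case IMEMO_CALLINFO:  return "callinfo";
      case IMEMO_CALLCACHE: return "callcache";
    }
    return "unknown"; /* only reached for values outside the enum */
}
```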

which is checked by the first guard. When the cc inlined by the JIT and
the operand cd->cc differ, the JIT-ed code might wrongly dispatch via
cd->cc even though the class check was done against the other, inlined cc.

This fixes a SEGV on railsbench.
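The hazard can be modeled with hypothetical, heavily simplified `call_cache` and `call_data` structs, where an integer `method_id` stands in for the method to call. This is an illustration under those assumptions, not the actual MJIT code: the buggy shape guards on the inlined cc but dispatches through `cd->cc`, while the fixed shape dispatches through the same cc it guarded on.

```c
#include <assert.h>

/* Hypothetical, simplified call cache. */
struct call_cache {
    const void *klass; /* class this cc was filled for */
    int method_id;     /* stands in for the method to dispatch to */
};

/* Hypothetical call data whose cc may be swapped after JIT compilation. */
struct call_data {
    const struct call_cache *cc;
};

/* Returns the method_id that would be dispatched, or -1 when the class
 * guard fails (the JIT-ed code would bail out to the interpreter). */
int
jit_dispatch_buggy(const struct call_cache *inlined_cc,
                   const struct call_data *cd, const void *recv_klass)
{
    if (recv_klass != inlined_cc->klass) return -1;
    return cd->cc->method_id; /* BUG: not the cc the guard checked */
}

int
jit_dispatch_fixed(const struct call_cache *inlined_cc,
                   const struct call_data *cd, const void *recv_klass)
{
    (void)cd; /* the operand's cc is deliberately not used for dispatch */
    if (recv_klass != inlined_cc->klass) return -1;
    return inlined_cc->method_id; /* same cc the guard validated */
}
```

With `cd->cc` swapped to a cc for another class, a receiver that passes the guard is sent to the other class's method by the buggy version; in the real VM, that kind of mismatch can plausibly lead to a crash.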

Struct assignment using a compound literal is more readable than before,
to me at least. It seems compilers reorder the assignments anyway.

Neither a speedup nor a slowdown is observed on my machine.
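For readers unfamiliar with the style, here is a sketch contrasting the two forms on a hypothetical `frame_info` struct (not a struct from this change).

```c
#include <assert.h>

/* Hypothetical struct; only the assignment style matters here. */
struct frame_info {
    const char *label;
    int lineno;
    int flags;
};

/* Member-by-member assignment. */
void
set_info_members(struct frame_info *fi, const char *label, int lineno)
{
    fi->label = label;
    fi->lineno = lineno;
    fi->flags = 0;
}

/* The same update as a single compound-literal assignment (C99).
 * Members not named in the literal are zero-initialized. */
void
set_info_literal(struct frame_info *fi, const char *label, int lineno)
{
    *fi = (struct frame_info) {
        .label = label,
        .lineno = lineno,
    };
}
```

One difference to keep in mind: members omitted from the compound literal are zero-initialized, so the literal form also resets fields that a member-by-member version might have left untouched.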

Update and format the Kernel#load documentation to separate the
three cases (absolute path, explicit relative path, other), and
also document that it raises LoadError on failure.

Fixes [Bug #16988]
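The three documented cases can be summarized as a simplified classifier. This is a hypothetical sketch (`classify_load_path` is not a Ruby API): it only inspects the prefix of POSIX-style paths and does not model how the file is actually located or loaded.

```c
#include <assert.h>
#include <string.h>

/* The three cases the Kernel#load documentation distinguishes. */
enum load_path_kind {
    LOAD_ABSOLUTE,          /* e.g. "/usr/lib/foo.rb": loaded directly */
    LOAD_EXPLICIT_RELATIVE, /* "./foo.rb" or "../foo.rb": relative to the current directory */
    LOAD_SEARCH,            /* anything else: looked up via $LOAD_PATH */
};

/* Simplified, POSIX-only classification; ignores "~" and Windows drive
 * letters, which a real implementation may need to handle. */
enum load_path_kind
classify_load_path(const char *path)
{
    if (path[0] == '/') return LOAD_ABSOLUTE;
    if (strncmp(path, "./", 2) == 0 || strncmp(path, "../", 3) == 0)
        return LOAD_EXPLICIT_RELATIVE;
    return LOAD_SEARCH;
}
```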

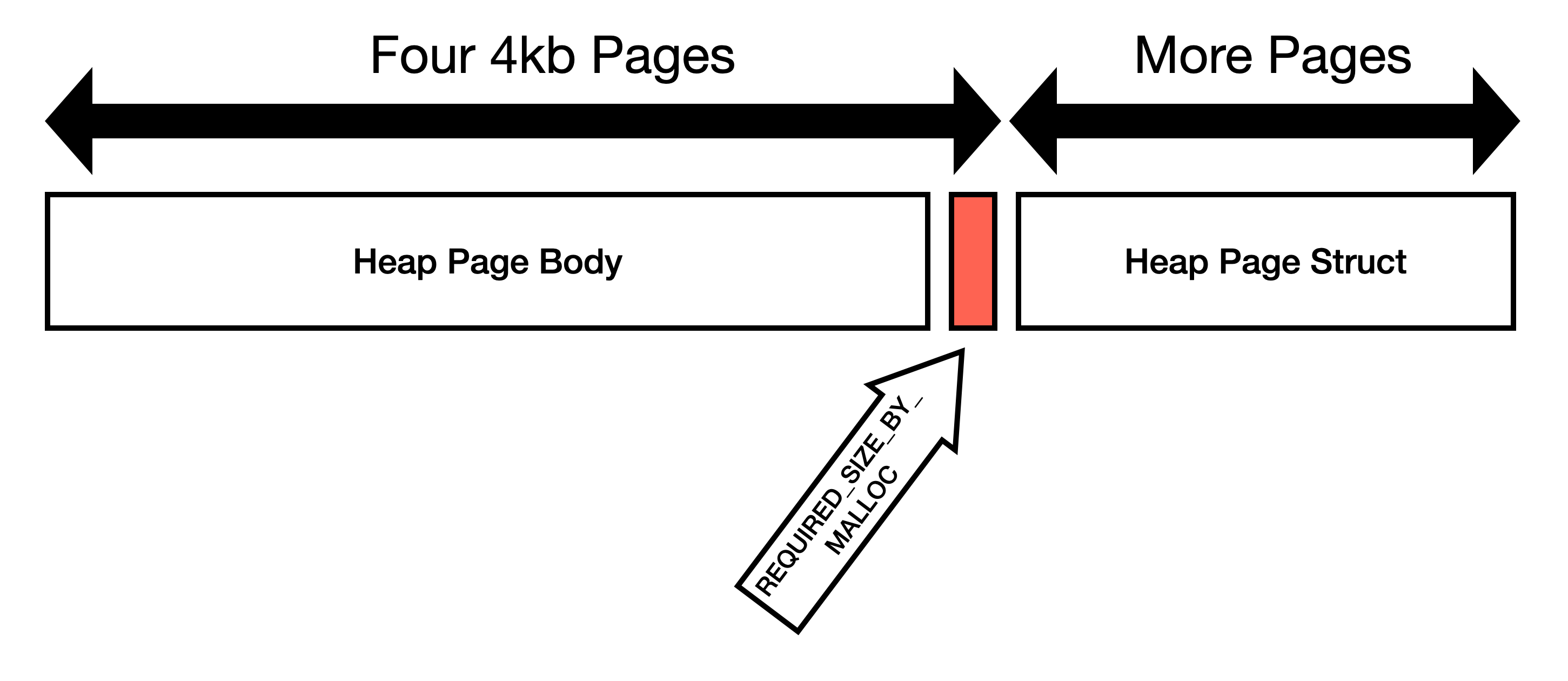

This commit expands heap pages to be exactly 16KiB and eliminates the
`REQUIRED_SIZE_BY_MALLOC` constant.

I believe the goal of `REQUIRED_SIZE_BY_MALLOC` was to make the heap
pages consume some multiple of the OS page size. 16KiB is convenient
because the OS page size is typically 4KiB, so one Ruby page is four OS
pages.
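The page arithmetic can be checked with a small sketch. `HEAP_PAGE_BYTES` and the two helpers are hypothetical names; `sysconf(_SC_PAGESIZE)` is the standard POSIX way to query the OS page size.

```c
#define _POSIX_C_SOURCE 200112L
#include <assert.h>
#include <stddef.h>
#include <unistd.h>

#define HEAP_PAGE_BYTES (16 * 1024) /* 16KiB Ruby heap page (hypothetical name) */

/* Number of OS pages one heap page spans, or 0 if the heap page is not a
 * whole multiple of the OS page size. */
size_t
os_pages_per_heap_page(size_t os_page_size)
{
    if (os_page_size == 0 || HEAP_PAGE_BYTES % os_page_size != 0) return 0;
    return HEAP_PAGE_BYTES / os_page_size;
}

/* Page size of the running system (POSIX), or 0 if it cannot be queried. */
size_t
current_os_page_size(void)
{
    long sz = sysconf(_SC_PAGESIZE);
    return sz > 0 ? (size_t)sz : 0;
}
```

With 4KiB OS pages the helper returns 4. On a system with 64KiB pages, a 16KiB heap page would be smaller than one OS page, so the four-pages property only holds where the typical 4KiB size applies.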

Do not guess how malloc works
=============================

We should not try to guess how `malloc` works and should instead request
(and use) four OS pages.
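A minimal sketch of requesting exactly four OS pages with `posix_memalign`, assuming 4KiB OS pages. `HEAP_PAGE_ALIGN_BYTES`, `alloc_heap_page_body()` and `is_aligned()` are hypothetical names, not the identifiers used in gc.c.

```c
#define _POSIX_C_SOURCE 200112L
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

#define HEAP_PAGE_ALIGN_BYTES (16 * 1024) /* hypothetical name: 16KiB */

/* Request one 16KiB region aligned to 16KiB, i.e. four 4KiB OS pages,
 * with no bytes shaved off for guessed allocator metadata.
 * Returns NULL on failure. */
void *
alloc_heap_page_body(void)
{
    void *body = NULL;
    if (posix_memalign(&body, HEAP_PAGE_ALIGN_BYTES, HEAP_PAGE_ALIGN_BYTES) != 0)
        return NULL;
    return body;
}

/* Nonzero when p is a multiple of alignment. */
int
is_aligned(const void *p, size_t alignment)
{
    return (uintptr_t)p % alignment == 0;
}
```

The returned region is released with `free()`, like any other `posix_memalign` allocation.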

Here is my reasoning:

1. Not all mallocs store metadata in the same region as user-requested
   memory. jemalloc specifically states[1]:

   > Information about the states of the runs is stored as a page map at the beginning of each chunk.

2. We're using `posix_memalign` to request memory. This means that the
   first address must be divisible by the alignment. Our allocation is
   page aligned, so if malloc is storing metadata *before* the page,
   then we've already crossed page boundaries.

3. Some allocators like glibc will use the memory at the end of the

page. I am able to demonstrate that glibc will return pointers

within the page boundary that contains `heap_page_body`[2]. We

*expected* the allocation to look like this:

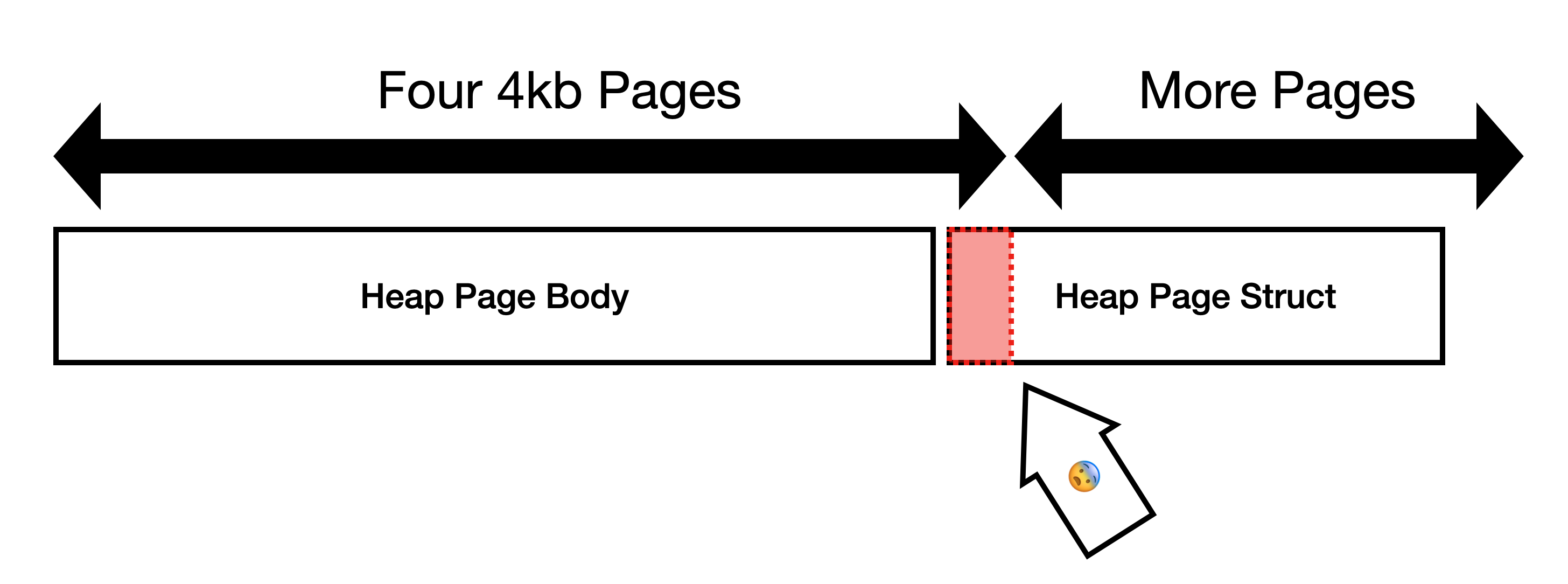

But since `heap_page` is allocated immediately after

`heap_page_body`[3], instead the layout looks like this:

This is not optimal because `heap_page` gets allocated immediately

after `heap_page_body`. We frequently write to `heap_page`, so the

bottom OS page of `heap_page_body` is very likely to be copied.
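The glibc observation in point 3 can be probed roughly as follows. This is a hypothetical sketch: it assumes the old `REQUIRED_SIZE_BY_MALLOC` was 40 bytes (five `size_t`s on a 64-bit build) and uses a 64-byte `malloc` as a stand-in for `struct heap_page`. The outcome depends on the allocator and its state, so `probe_old_layout()` reports what happened rather than guaranteeing a result.

```c
#define _POSIX_C_SOURCE 200112L
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>
#include <unistd.h>

/* Index of the OS page containing addr. */
static uintptr_t
os_page_index(uintptr_t addr, size_t page_size)
{
    return addr / page_size;
}

/* Nonzero when `other` lies in the same OS page as the last byte of the
 * len-byte region at `region`, i.e. writes to `other` dirty that page. */
int
shares_last_os_page(const void *region, size_t len, const void *other, size_t page_size)
{
    uintptr_t last = (uintptr_t)region + len - 1;
    return os_page_index(last, page_size) == os_page_index((uintptr_t)other, page_size);
}

/* Allocate a body 40 bytes short of 16KiB (assumed old layout), then a
 * small struct, and report whether the struct landed in the body's last
 * OS page. Returns -1 on failure, otherwise 0 or 1. */
int
probe_old_layout(void)
{
    long ps = sysconf(_SC_PAGESIZE);
    void *body = NULL;
    if (ps <= 0 || posix_memalign(&body, 16384, 16384 - 40) != 0) return -1;
    void *page = malloc(64); /* hypothetical stand-in for struct heap_page */
    if (page == NULL) { free(body); return -1; }
    int shared = shares_last_os_page(body, 16384 - 40, page, (size_t)ps);
    free(page);
    free(body);
    return shared;
}
```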

One more object per page
========================

In jemalloc, allocation requests are rounded up to the nearest boundary,
which in this case is 16KiB[4], so the `REQUIRED_SIZE_BY_MALLOC` space is
simply wasted on jemalloc.
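A rough model of the jemalloc point. It assumes the request is rounded up to a multiple of a 4KiB page (a simplification; jemalloc's size classes differ between versions) and that `REQUIRED_SIZE_BY_MALLOC` was 40 bytes. `round_up()` and `wasted_bytes()` are hypothetical helpers.

```c
#include <assert.h>
#include <stddef.h>

/* Round size up to the next multiple of quantum (quantum must be > 0). */
size_t
round_up(size_t size, size_t quantum)
{
    return (size + quantum - 1) / quantum * quantum;
}

/* Bytes the allocator hands out but the caller never uses, under the
 * simplified "round up to quantum" model above. */
size_t
wasted_bytes(size_t request, size_t quantum)
{
    return round_up(request, quantum) - request;
}
```

Under those assumptions a request of 16344 bytes is served from 16384 bytes, leaving 40 bytes unused, while a request of exactly 16384 bytes wastes nothing.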

On glibc, the space is not wasted, but instead it is very likely to
cause page faults.

Instead of wasting space or causing page faults, let's just use the space
to store one more Ruby object. Doing so will prevent page faults, stop
wasting space, decrease memory usage, decrease GC time, etc.
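The "one more object" claim can be checked with back-of-the-envelope arithmetic. All constants here are assumptions for a typical 64-bit build (a 40-byte object slot, an 8-byte page header, and a 40-byte `REQUIRED_SIZE_BY_MALLOC`), and `objects_per_page()` is a hypothetical helper.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed 64-bit values, for illustration only. */
#define PAGE_BYTES         16384u /* 16KiB heap page */
#define OLD_MALLOC_RESERVE 40u    /* assumed REQUIRED_SIZE_BY_MALLOC: 5 * sizeof(size_t) */
#define PAGE_HEADER_BYTES  8u     /* assumed per-page header: one pointer */
#define SLOT_BYTES         40u    /* assumed size of one Ruby object slot */

/* Whole object slots that fit after the page header. */
size_t
objects_per_page(size_t page_bytes)
{
    return (page_bytes - PAGE_HEADER_BYTES) / SLOT_BYTES;
}
```

Under these assumptions the old layout fits 408 objects (16336 usable bytes) and the new one fits 409 (16376 usable bytes).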

1. https://people.freebsd.org/~jasone/jemalloc/bsdcan2006/jemalloc.pdf
2. 33390d15e7
3. 289a28e68f/gc.c (L1757-L1763)
4. https://people.freebsd.org/~jasone/jemalloc/bsdcan2006/jemalloc.pdf, page 4

Co-authored-by: John Hawthorn <john@hawthorn.email>