
Decentralized & Collaborative AI Simulation

Tools to run simulations for AI models in smart contracts.

Example:

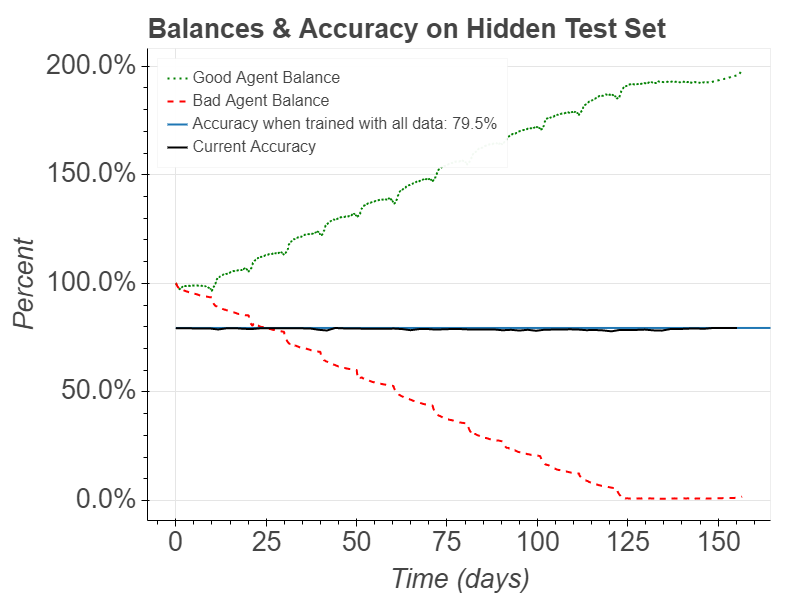

Even when a bad actor submits incorrect data, an honest contributor profits while the model's accuracy remains stable.

For this simulation, a perceptron was trained on the IMDB reviews dataset for sentiment classification. The model was initially trained on 2000 of the 25000 training data samples (8%). The model has 1000 binary features, each indicating the presence of one of the 1000 most frequent words in the dataset. For simplicity, the graph shows just one honest contributor and one malicious contributor, but these two effectively represent many contributors submitting the remaining 92% of the training data over time. The simulation uses the Deposit, Refund, and Take (DRT) incentive mechanism, where contributors have 1 day to claim a refund and any contributor can take the remaining deposit from a contribution after 9 days. The malicious contributor, "Bad Agent", is willing to spend about twice as much on deposits as the honest contributor, "Good Agent", but submits data only about one sixth as often. Despite the malicious efforts, the model's accuracy is maintained and the honest contributor profits.
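To make the feature description concrete, here is a minimal sketch of turning text into binary word-presence features. It assumes simple lowercase whitespace tokenization; the function names `build_vocabulary` and `to_binary_features` are hypothetical, and the simulation's actual preprocessing may differ.

```python
from collections import Counter


def build_vocabulary(documents, num_features=1000):
    """Return the `num_features` most frequent words across all documents."""
    # Assumption: lowercase whitespace tokenization, for illustration only.
    counts = Counter(word for doc in documents for word in doc.lower().split())
    return [word for word, _ in counts.most_common(num_features)]


def to_binary_features(document, vocabulary):
    """Map a document to a 0/1 vector: 1 where the vocabulary word is present."""
    present = set(document.lower().split())
    return [1 if word in present else 0 for word in vocabulary]


# Tiny example with 3 features instead of 1000.
docs = ["a great great movie", "a terrible movie", "great acting"]
vocab = build_vocabulary(docs, num_features=3)
features = to_binary_features("great movie tonight", vocab)
```

Each feature records only whether a word appears, not how often, so repeated words in a review do not change its vector.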

Setup

Run:

conda create --name decai-simulation python=3.7 bokeh ipython mkl mkl-service numpy phantomjs scikit-learn scipy six tensorflow

conda activate decai-simulation

pip install -e .

Running Simulations

Run:

bokeh serve decai/simulation/simulate_imdb_perceptron.py

Then open your browser to the address printed by the command above. It should be something like: http://localhost:5006/simulate_imdb_perceptron.

Customizing Simulations

To try out your own models or incentive mechanisms, you'll need to implement the interfaces. You can proceed by just copying the examples. Here are the details if you need them:

Suppose you want to use a neural network for the classifier:

- Implement the Classifier interface in a class NeuralNetworkClassifier. The easiest way is to copy an existing classifier like the Perceptron.
- Create a Module called NeuralNetworkModule which binds Classifier to your new class just like in PerceptronModule.
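The two steps above can be sketched roughly as follows. This is a self-contained illustration of the pattern, not the project's code: the `Classifier` methods shown here (`predict`, `update`) and the dict-based binding are assumptions, and the placeholder model is a single linear unit rather than a real neural network. Check the Perceptron source for the actual interface and module base class.

```python
from abc import ABC, abstractmethod


class Classifier(ABC):
    """Hypothetical stand-in for the project's Classifier interface."""

    @abstractmethod
    def predict(self, features):
        """Return the predicted label (0 or 1) for a feature vector."""

    @abstractmethod
    def update(self, features, label):
        """Update the model with one labeled sample."""


class NeuralNetworkClassifier(Classifier):
    """Placeholder model: one linear unit standing in for a real network."""

    def __init__(self, num_features, learning_rate=1.0):
        self.weights = [0.0] * num_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, features):
        score = self.bias + sum(w * x for w, x in zip(self.weights, features))
        return 1 if score > 0 else 0

    def update(self, features, label):
        # Perceptron-style rule: adjust weights only when the prediction is wrong.
        error = label - self.predict(features)
        self.weights = [w + self.learning_rate * error * x
                        for w, x in zip(self.weights, features)]
        self.bias += self.learning_rate * error


class NeuralNetworkModule:
    """Hypothetical module: binds the Classifier interface to the new class."""

    def configure(self, bindings):
        # Assumption: a plain dict stands in for the project's binder.
        bindings[Classifier] = lambda: NeuralNetworkClassifier(num_features=3)


bindings = {}
NeuralNetworkModule().configure(bindings)
model = bindings[Classifier]()
model.update([1, 0, 1], 1)
prediction = model.predict([1, 0, 1])
```

Code that asks for a Classifier never names the concrete class, which is why swapping PerceptronModule for NeuralNetworkModule is enough to change the model.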

Setting up a custom incentive mechanism is similar:

- Implement IncentiveMechanism. You can use Stakeable as an example.
- Bind your implementation in a module.
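As a rough illustration of what a custom incentive mechanism might look like, the sketch below models only the refund and take timing. Everything here is hypothetical: the method names `can_refund` and `can_take`, the class `SimpleDepositMechanism`, and the reading of the 1 day and 9 day values as minimum waiting periods measured from submission are all assumptions. Refer to Stakeable for the real interface and rules.

```python
from abc import ABC, abstractmethod

DAY_S = 24 * 60 * 60  # seconds in one day


class IncentiveMechanism(ABC):
    """Hypothetical stand-in for the project's IncentiveMechanism interface."""

    @abstractmethod
    def can_refund(self, submitted_at_s, now_s):
        """Return True if the contributor may claim a refund now."""

    @abstractmethod
    def can_take(self, submitted_at_s, now_s):
        """Return True if any contributor may take the remaining deposit now."""


class SimpleDepositMechanism(IncentiveMechanism):
    """Timing-only sketch; it does not track deposits or model agreement."""

    def __init__(self, refund_wait_s=1 * DAY_S, take_wait_s=9 * DAY_S):
        # Assumption: both values are waiting periods counted from submission.
        self.refund_wait_s = refund_wait_s
        self.take_wait_s = take_wait_s

    def can_refund(self, submitted_at_s, now_s):
        return now_s - submitted_at_s >= self.refund_wait_s

    def can_take(self, submitted_at_s, now_s):
        return now_s - submitted_at_s >= self.take_wait_s


mechanism = SimpleDepositMechanism()
refund_ok = mechanism.can_refund(submitted_at_s=0, now_s=2 * DAY_S)
take_ok = mechanism.can_take(submitted_at_s=0, now_s=2 * DAY_S)
```

A full mechanism would also decide how much deposit to require and how much to return, which this sketch leaves out.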

Now set up the main entry point to run the simulation: copy decai/simulation/simulate_imdb_perceptron.py to a new file, e.g. decai/simulation/simulate_imdb_neural_network.py.

In simulate_imdb_neural_network.py, you can set up the agents that will act in the simulation.

Then set the modules you created: for example, replace PerceptronModule with NeuralNetworkModule.

Run bokeh serve decai/simulation/simulate_imdb_neural_network.py and open your browser to the displayed URL to try it out.

Testing

Set up the testing environment:

pip install pytest

Run tests:

pytest