Fixed notebook markdowns for nbsphinx compatibility

This commit is contained in:

Родитель

908274c8c7

Коммит

919949bf1a

Различия файлов скрыты, потому что одна или несколько строк слишком длинны

|

|

@ -4,7 +4,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 1,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -14,6 +16,8 @@

|

|||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true,

|

||||

"nbpresent": {

|

||||

"id": "29b9bd1d-766f-4422-ad96-de0accc1ce58"

|

||||

}

|

||||

|

|

@ -27,14 +31,17 @@

|

|||

"\n",

|

||||

"**Problem** (recap from CNTK 101):\n",

|

||||

"\n",

|

||||

"A cancer hospital has provided data and wants us to determine if a patient has a fatal [malignant][] cancer vs. a benign growth. This is known as a classification problem. To help classify each patient, we are given their age and the size of the tumor. Intuitively, one can imagine that younger patients and/or patient with small tumor size are less likely to have malignant cancer. The data set simulates this application where the each observation is a patient represented as a dot where red color indicates malignant and blue indicates a benign disease. Note: This is a toy example for learning, in real life there are large number of features from different tests/examination sources and doctors' experience that play into the diagnosis/treatment decision for a patient.\n",

|

||||

"[malignant]: https://en.wikipedia.org/wiki/Malignancy"

|

||||

"A cancer hospital has provided data and wants us to determine if a patient has a fatal [malignant](https://en.wikipedia.org/wiki/Malignancy) cancer vs. a benign growth. This is known as a classification problem. To help classify each patient, we are given their age and the size of the tumor. Intuitively, one can imagine that younger patients and/or patient with small tumor size are less likely to have malignant cancer. The data set simulates this application where the each observation is a patient represented as a dot where red color indicates malignant and blue indicates a benign disease. Note: This is a toy example for learning, in real life there are large number of features from different tests/examination sources and doctors' experience that play into the diagnosis/treatment decision for a patient."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 2,

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"collapsed": false,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [

|

||||

{

|

||||

"data": {

|

||||

|

|

@ -57,7 +64,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"**Goal**:\n",

|

||||

"Our goal is to learn a classifier that classifies any patient into either benign or malignant category given two features (age, tumor size). \n",

|

||||

|

|

@ -78,7 +88,11 @@

|

|||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 3,

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"collapsed": false,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [

|

||||

{

|

||||

"data": {

|

||||

|

|

@ -101,7 +115,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"A feedforward neural network is an artificial neural network where connections between the units **do not** form a cycle.\n",

|

||||

"The feedforward neural network was the first and simplest type of artificial neural network devised. In this network, the information moves in only one direction, forward, from the input nodes, through the hidden nodes (if any) and to the output nodes. There are no cycles or loops in the network\n",

|

||||

|

|

@ -114,6 +131,8 @@

|

|||

"execution_count": 4,

|

||||

"metadata": {

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true,

|

||||

"nbpresent": {

|

||||

"id": "138d1a78-02e2-4bd6-a20e-07b83f303563"

|

||||

}

|

||||

|

|

@ -137,7 +156,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"## Data Generation\n",

|

||||

"This section can be *skipped* (next section titled <a href='#Model Creation'>Model Creation</a>) if you have gone through CNTK 101. \n",

|

||||

|

|

@ -151,7 +173,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 6,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -165,7 +189,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"### Input and Labels\n",

|

||||

"\n",

|

||||

|

|

@ -176,7 +203,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 7,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -199,7 +228,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 8,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -212,7 +243,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"Let us visualize the input data. \n",

|

||||

"\n",

|

||||

|

|

@ -222,7 +256,11 @@

|

|||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 9,

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"collapsed": false,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [

|

||||

{

|

||||

"data": {

|

||||

|

|

@ -251,9 +289,11 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"<a id='#Model Creation'></a>\n",

|

||||

"## Model Creation\n",

|

||||

"\n",

|

||||

"Our feed forward network will be relatively simple with 2 hidden layers (`num_hidden_layers`) with each layer having 50 hidden nodes (`hidden_layers_dim`)."

|

||||

|

|

@ -262,7 +302,11 @@

|

|||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 10,

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"collapsed": false,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [

|

||||

{

|

||||

"data": {

|

||||

|

|

@ -285,7 +329,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"The number of green nodes (refer to picture above) in each hidden layer is set to 50 in the example and the number of hidden layers (refer to the number of layers of green nodes) is 2. Fill in the following values:\n",

|

||||

"- num_hidden_layers\n",

|

||||

|

|

@ -298,7 +345,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 11,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -308,7 +357,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"Network input and output: \n",

|

||||

"- **input** variable (a key CNTK concept): \n",

|

||||

|

|

@ -322,7 +374,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 12,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -337,7 +391,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"## Feed forward network setup\n",

|

||||

"Let us define the feedforward network one step at a time. The first layer takes an input feature vector ($\\bf{x}$) with dimensions `input_dim`, say $m$, and emits the output a.k.a. *evidence* (first hidden layer $\\bf{z_1}$ with dimension `hidden_layer_dim`, say $n$). Each feature in the input layer is connected with a node in the output layer by the weight which is represented by a matrix $\\bf{W}$ with dimensions ($m \\times n$). The first step is to compute the evidence for the entire feature set. Note: we use **bold** notations to denote matrix / vectors: \n",

|

||||

|

|

@ -355,7 +412,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 13,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -370,10 +429,12 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"The next step is to convert the *evidence* (the output of the linear layer) through a non-linear function a.k.a. *activation functions* of your choice that would squash the evidence to activations using a choice of functions ([found here][]). **Sigmoid** or **Tanh** are historically popular. We will use **sigmoid** function in this tutorial. The output of the sigmoid function often is the input to the next layer or the output of the final layer. \n",

|

||||

"[found here]: https://docs.microsoft.com/en-us/cognitive-toolkit/Brainscript-Activation-Functions \n",

|

||||

"The next step is to convert the *evidence* (the output of the linear layer) through a non-linear function a.k.a. *activation functions* of your choice that would squash the evidence to activations using a choice of functions ([found here](https://cntk.ai/pythondocs/cntk.layers.layers.html#cntk.layers.layers.Activation)). **Sigmoid** or **Tanh** are historically popular. We will use **sigmoid** function in this tutorial. The output of the sigmoid function often is the input to the next layer or the output of the final layer. \n",

|

||||

"\n",

|

||||

"**Question**: Try different activation functions by passing different them to `nonlinearity` value and get familiarized with using them."

|

||||

]

|

||||

|

|

@ -382,7 +443,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 14,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -394,7 +457,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"Now that we have created one hidden layer, we need to iterate through the layers to create a fully connected classifier. Output of the first layer $\\bf{h_1}$ becomes the input to the next layer.\n",

|

||||

"\n",

|

||||

|

|

@ -415,7 +481,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 15,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -432,7 +500,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"The network output `z` will be used to represent the output of a network across."

|

||||

]

|

||||

|

|

@ -441,7 +512,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 16,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -452,9 +525,12 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"While the aforementioned network helps us better understand how to implement a network using CNTK primitives, it is much more convenient and faster to use the [layers library](https://www.cntk.ai/pythondocs/layerref.html). It provides predefined commonly used “layers” (lego like blocks), which simplifies the design of networks that consist of standard layers layered on top of each other. For instance, ``dense_layer`` is already easily accessible through the [`Dense`](https://www.cntk.ai/pythondocs/layerref.html#dense) layer function to compose our deep model. We can pass the input variable (`input`) to this model to get the network output. \n",

|

||||

"While the aforementioned network helps us better understand how to implement a network using CNTK primitives, it is much more convenient and faster to use the [layers library](https://www.cntk.ai/pythondocs/layerref.html). It provides predefined commonly used “layers” (lego like blocks), which simplifies the design of networks that consist of standard layers layered on top of each other. For instance, ``dense_layer`` is already easily accessible through the [Dense](https://www.cntk.ai/pythondocs/layerref.html#dense) layer function to compose our deep model. We can pass the input variable (`input`) to this model to get the network output. \n",

|

||||

"\n",

|

||||

"**Suggested task**: Please go through the model defined above and the output of the `create_model` function and convince yourself that the implementation below encapsulates the code above."

|

||||

]

|

||||

|

|

@ -463,7 +539,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 17,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -481,7 +559,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"### Learning model parameters\n",

|

||||

"\n",

|

||||

|

|

@ -489,16 +570,15 @@

|

|||

"\n",

|

||||

"$$ \\textbf{p} = \\mathrm{softmax}(\\bf{z_{final~layer}})$$ \n",

|

||||

"\n",

|

||||

"One can see the `softmax` function as an activation function that maps the accumulated evidences to a probability distribution over the classes (Details of the [softmax function][]). Other choices of activation function can be [found here][].\n",

|

||||

"\n",

|

||||

"[softmax function]: https://www.cntk.ai/pythondocs/cntk.ops.html#cntk.ops.softmax\n",

|

||||

"\n",

|

||||

"[found here]: https://docs.microsoft.com/en-us/cognitive-toolkit/Brainscript-Activation-Functions"

|

||||

"One can see the `softmax` function as an activation function that maps the accumulated evidences to a probability distribution over the classes (Details of the [softmax function](https://www.cntk.ai/pythondocs/cntk.ops.html#cntk.ops.softmax)). Other choices of activation function can be [found here](https://cntk.ai/pythondocs/cntk.layers.layers.html#cntk.layers.layers.Activation)."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"## Training\n",

|

||||

"\n",

|

||||

|

|

@ -509,17 +589,16 @@

|

|||

"\n",

|

||||

"$$ H(p) = - \\sum_{j=1}^C y_j \\log (p_j) $$ \n",

|

||||

"\n",

|

||||

"where $p$ is our predicted probability from `softmax` function and $y$ represents the label. This label provided with the data for training is also called the ground-truth label. In the two-class example, the `label` variable has dimensions of two (equal to the `num_output_classes` or $C$). Generally speaking, if the task in hand requires classification into $C$ different classes, the label variable will have $C$ elements with 0 everywhere except for the class represented by the data point where it will be 1. Understanding the [details][] of this cross-entropy function is highly recommended.\n",

|

||||

"\n",

|

||||

"[`cross-entropy`]: http://cntk.ai/pythondocs/cntk.ops.html#cntk.ops.cross_entropy_with_softmax\n",

|

||||

"[details]: http://colah.github.io/posts/2015-09-Visual-Information/"

|

||||

"where $p$ is our predicted probability from `softmax` function and $y$ represents the label. This label provided with the data for training is also called the ground-truth label. In the two-class example, the `label` variable has dimensions of two (equal to the `num_output_classes` or $C$). Generally speaking, if the task in hand requires classification into $C$ different classes, the label variable will have $C$ elements with 0 everywhere except for the class represented by the data point where it will be 1. Understanding the [details](http://colah.github.io/posts/2015-09-Visual-Information/) of this cross-entropy function is highly recommended."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 18,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -528,9 +607,12 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"#### Evaluation\n",

|

||||

"### Evaluation\n",

|

||||

"\n",

|

||||

"In order to evaluate the classification, one can compare the output of the network which for each observation emits a vector of evidences (can be converted into probabilities using `softmax` functions) with dimension equal to number of classes."

|

||||

]

|

||||

|

|

@ -539,7 +621,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 19,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -548,29 +632,30 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"### Configure training\n",

|

||||

"\n",

|

||||

"The trainer strives to reduce the `loss` function by different optimization approaches, [Stochastic Gradient Descent][] (`sgd`) being one of the most popular one. Typically, one would start with random initialization of the model parameters. The `sgd` optimizer would calculate the `loss` or error between the predicted label against the corresponding ground-truth label and using [gradient-decent][] generate a new set model parameters in a single iteration. \n",

|

||||

"The trainer strives to reduce the `loss` function by different optimization approaches, [Stochastic Gradient Descent](https://en.wikipedia.org/wiki/Stochastic_gradient_descent) (`sgd`) being one of the most popular one. Typically, one would start with random initialization of the model parameters. The `sgd` optimizer would calculate the `loss` or error between the predicted label against the corresponding ground-truth label and using [gradient-decent](http://www.statisticsviews.com/details/feature/5722691/Getting-to-the-Bottom-of-Regression-with-Gradient-Descent.html) generate a new set model parameters in a single iteration. \n",

|

||||

"\n",

|

||||

"The aforementioned model parameter update using a single observation at a time is attractive since it does not require the entire data set (all observation) to be loaded in memory and also requires gradient computation over fewer datapoints, thus allowing for training on large data sets. However, the updates generated using a single observation sample at a time can vary wildly between iterations. An intermediate ground is to load a small set of observations and use an average of the `loss` or error from that set to update the model parameters. This subset is called a *minibatch*.\n",

|

||||

"\n",

|

||||

"With minibatches we often sample observation from the larger training dataset. We repeat the process of model parameters update using different combination of training samples and over a period of time minimize the `loss` (and the error). When the incremental error rates are no longer changing significantly or after a preset number of maximum minibatches to train, we claim that our model is trained.\n",

|

||||

"\n",

|

||||

"One of the key parameter for optimization is called the `learning_rate`. For now, we can think of it as a scaling factor that modulates how much we change the parameters in any iteration. We will be covering more details in later tutorial. \n",

|

||||

"With this information, we are ready to create our trainer.\n",

|

||||

"\n",

|

||||

"[optimization]: https://en.wikipedia.org/wiki/Category:Convex_optimization\n",

|

||||

"[Stochastic Gradient Descent]: https://en.wikipedia.org/wiki/Stochastic_gradient_descent\n",

|

||||

"[gradient-decent]: http://www.statisticsviews.com/details/feature/5722691/Getting-to-the-Bottom-of-Regression-with-Gradient-Descent.html"

|

||||

"One of the key parameter for [optimization](https://en.wikipedia.org/wiki/Category:Convex_optimization) is called the `learning_rate`. For now, we can think of it as a scaling factor that modulates how much we change the parameters in any iteration. We will be covering more details in later tutorial. \n",

|

||||

"With this information, we are ready to create our trainer. "

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 20,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -583,7 +668,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"First lets create some helper functions that will be needed to visualize different functions associated with training."

|

||||

]

|

||||

|

|

@ -592,7 +680,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 21,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -620,9 +710,11 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"<a id='#Run the trainer'></a>\n",

|

||||

"### Run the trainer\n",

|

||||

"\n",

|

||||

"We are now ready to train our fully connected neural net. We want to decide what data we need to feed into the training engine.\n",

|

||||

|

|

@ -636,7 +728,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 22,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -650,7 +744,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 23,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -675,7 +771,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"Let us plot the errors over the different training minibatches. Note that as we iterate the training loss decreases though we do see some intermediate bumps. The bumps indicate that during that iteration the model came across observations that it predicted incorrectly. This can happen with observations that are novel during model training.\n",

|

||||

"\n",

|

||||

|

|

@ -687,7 +786,11 @@

|

|||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 24,

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"collapsed": false,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [

|

||||

{

|

||||

"data": {

|

||||

|

|

@ -737,9 +840,12 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"## Evaluation / Testing \n",

|

||||

"### Run evaluation / testing \n",

|

||||

"\n",

|

||||

"Now that we have trained the network, let us evaluate the trained network on data that hasn't been used for training. This is often called **testing**. Let us create some new data set and evaluate the average error and loss on this set. This is done using `trainer.test_minibatch`."

|

||||

]

|

||||

|

|

@ -747,7 +853,11 @@

|

|||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 25,

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"collapsed": false,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [

|

||||

{

|

||||

"data": {

|

||||

|

|

@ -770,18 +880,24 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"Note, this error is very comparable to our training error indicating that our model has good \"out of sample\" error a.k.a. generalization error. This implies that our model can very effectively deal with previously unseen observations (during the training process). This is key to avoid the phenomenon of overfitting."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"We have so far been dealing with aggregate measures of error. Lets now get the probabilities associated with individual data points. For each observation, the `eval` function returns the probability distribution across all the classes. If you used the default parameters in this tutorial, then it would be a vector of 2 elements per observation. First let us route the network output through a softmax function.\n",

|

||||

"\n",

|

||||

"#### Why do we need to route the network output `netout` via `softmax`?\n",

|

||||

"**Why do we need to route the network output `netout` via `softmax`?**\n",

|

||||

"\n",

|

||||

"The way we have configured the network includes the output of all the activation nodes (e.g., the green layer in Figure 4). The output nodes (the orange layer in Figure 4), converts the activations into a probability. A simple and effective way is to route the activations via a softmax function."

|

||||

]

|

||||

|

|

@ -789,7 +905,11 @@

|

|||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 26,

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"collapsed": false,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [

|

||||

{

|

||||

"data": {

|

||||

|

|

@ -814,7 +934,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 27,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -823,7 +945,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"Let us test on previously unseen data."

|

||||

]

|

||||

|

|

@ -832,7 +957,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 28,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -842,7 +969,11 @@

|

|||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 29,

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"collapsed": false,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [

|

||||

{

|

||||

"name": "stdout",

|

||||

|

|

@ -861,7 +992,9 @@

|

|||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"**Exploration Suggestion**\n",

|

||||

|

|

@ -874,15 +1007,26 @@

|

|||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"#### Code link\n",

|

||||

"**Code link**\n",

|

||||

"\n",

|

||||

"If you want to try running the tutorial from python command prompt. Please run the [FeedForwardNet.py][] example.\n",

|

||||

"\n",

|

||||

"[FeedForwardNet.py]: https://github.com/Microsoft/CNTK/blob/release/2.1/Tutorials/NumpyInterop/FeedForwardNet.py"

|

||||

"If you want to try running the tutorial from python command prompt. Please run the [FeedForwardNet.py](https://github.com/Microsoft/CNTK/blob/release/2.1/Tutorials/NumpyInterop/FeedForwardNet.py) example."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": []

|

||||

}

|

||||

],

|

||||

"metadata": {

|

||||

|

|

@ -902,7 +1046,7 @@

|

|||

"name": "python",

|

||||

"nbconvert_exporter": "python",

|

||||

"pygments_lexer": "ipython3",

|

||||

"version": "3.5.2"

|

||||

"version": "3.5.3"

|

||||

}

|

||||

},

|

||||

"nbformat": 4,

|

||||

|

|

|

|||

|

|

@ -3,7 +3,9 @@

|

|||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"# CNTK 103 Part A: MNIST Data Loader\n",

|

||||

|

|

@ -11,18 +13,18 @@

|

|||

"This tutorial is targeted to individuals who are new to CNTK and to machine learning. We assume you have completed or are familiar with CNTK 101 and 102. In this tutorial, we will download and pre-process the MNIST digit images to be used for building different models to recognize handwritten digits. We will extend CNTK 101 and 102 to be applied to this data set. Additionally, we will introduce a convolutional network to achieve superior performance. This is the first example, where we will train and evaluate a neural network based model on real world data. \n",

|

||||

"\n",

|

||||

"CNTK 103 tutorial is divided into multiple parts:\n",

|

||||

"- Part A: Familiarize with the [MNIST][] database that will be used later in the tutorial\n",

|

||||

"- Subsequent parts in this 103 series would be using the MNIST data with different types of networks.\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"[MNIST]: http://yann.lecun.com/exdb/mnist/\n",

|

||||

"\n"

|

||||

"- Part A: Familiarize with the [MNIST](http://yann.lecun.com/exdb/mnist/) database that will be used later in the tutorial\n",

|

||||

"- Subsequent parts in this 103 series would be using the MNIST data with different types of networks."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 1,

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"# Import the relevant modules to be used later\n",

|

||||

|

|

@ -47,7 +49,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"## Data download\n",

|

||||

"\n",

|

||||

|

|

@ -58,7 +63,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 2,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -120,7 +127,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"# Download the data\n",

|

||||

"\n",

|

||||

|

|

@ -130,7 +140,11 @@

|

|||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 3,

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"collapsed": false,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [

|

||||

{

|

||||

"name": "stdout",

|

||||

|

|

@ -169,7 +183,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"# Visualize the data"

|

||||

]

|

||||

|

|

@ -177,7 +194,11 @@

|

|||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 4,

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"collapsed": false,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [

|

||||

{

|

||||

"name": "stdout",

|

||||

|

|

@ -207,25 +228,30 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"# Save the images\n",

|

||||

"\n",

|

||||

"Save the images in a local directory. While saving the data we flatten the images to a vector (28x28 image pixels becomes an array of length 784 data points).\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"The labels are encoded as [1-hot][] encoding (label of 3 with 10 digits becomes `0001000000`, where the first index corresponds to digit `0` and the last one corresponds to digit `9`.\n",

|

||||

"The labels are encoded as [1-hot]( https://en.wikipedia.org/wiki/One-hot) encoding (label of 3 with 10 digits becomes `0001000000`, where the first index corresponds to digit `0` and the last one corresponds to digit `9`.\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"[1-hot]: https://en.wikipedia.org/wiki/One-hot"

|

||||

""

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 5,

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

"# Save the data files into a format compatible with CNTK text reader\n",

|

||||

|

|

@ -251,7 +277,11 @@

|

|||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 6,

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"collapsed": false,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [

|

||||

{

|

||||

"name": "stdout",

|

||||

|

|

@ -282,7 +312,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"**Suggested Explorations**\n",

|

||||

"\n",

|

||||

|

|

@ -291,18 +324,17 @@

|

|||

"There are several ways data alterations can be performed. CNTK readers automate a lot of these actions for you. However, to get a feel for how these transforms can impact training and test accuracies, we strongly encourage individuals to try one or more of data perturbation.\n",

|

||||

"\n",

|

||||

"- Shuffle the training data (rows to create a different). Hint: Use `permute_indices = np.random.permutation(train.shape[0])`. Then, run Part B of the tutorial with this newly permuted data.\n",

|

||||

"- Adding noise to the data can often improves [generalization error][]. You can augment the training set by adding noise (generated with numpy, hint: use `numpy.random`) to the training images. \n",

|

||||

"- Distort the images with [affine transformation][] (translations or rotations)\n",

|

||||

"\n",

|

||||

"[generalization error]: https://en.wikipedia.org/wiki/Generalization_error\n",

|

||||

"[affine transformation]: https://en.wikipedia.org/wiki/Affine_transformation\n"

|

||||

"- Adding noise to the data can often improves [generalization error](https://en.wikipedia.org/wiki/Generalization_error). You can augment the training set by adding noise (generated with numpy, hint: use `numpy.random`) to the training images. \n",

|

||||

"- Distort the images with [affine transformation](https://en.wikipedia.org/wiki/Affine_transformation) (translations or rotations)"

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": null,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": []

|

||||

|

|

@ -325,7 +357,7 @@

|

|||

"name": "python",

|

||||

"nbconvert_exporter": "python",

|

||||

"pygments_lexer": "ipython3",

|

||||

"version": "3.5.2"

|

||||

"version": "3.5.3"

|

||||

}

|

||||

},

|

||||

"nbformat": 4,

|

||||

|

|

|

|||

|

|

@ -4,7 +4,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 1,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -14,6 +16,8 @@

|

|||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true,

|

||||

"nbpresent": {

|

||||

"id": "29b9bd1d-766f-4422-ad96-de0accc1ce58"

|

||||

}

|

||||

|

|

@ -34,7 +38,11 @@

|

|||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 2,

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"collapsed": false,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [

|

||||

{

|

||||

"data": {

|

||||

|

|

@ -57,7 +65,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"**Goal**:\n",

|

||||

"Our goal is to train a classifier that will identify the digits in the MNIST dataset. \n",

|

||||

|

|

@ -72,19 +83,25 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"## Logistic Regression\n",

|

||||

"[Logistic Regression](https://en.wikipedia.org/wiki/Logistic_regression) (LR) is a fundamental machine learning technique that uses a linear weighted combination of features and generates probability-based predictions of different classes. \n",

|

||||

"\n",

|

||||

"There are two basic forms of LR: **Binary LR** (with a single output that can predict two classes) and **multinomial LR** (with multiple outputs, each of which is used to predict a single class). \n",

|

||||

"\n",

|

||||

""

|

||||

""

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"In **Binary Logistic Regression** (see top of figure above), the input features are each scaled by an associated weight and summed together. The sum is passed through a squashing (aka activation) function and generates an output in [0,1]. This output value (which can be thought of as a probability) is then compared with a threshold (such as 0.5) to produce a binary label (0 or 1). This technique supports only classification problems with two output classes, hence the name binary LR. In the binary LR example shown above, the [sigmoid][] function is used as the squashing function.\n",

|

||||

"\n",

|

||||

|

|

@ -93,7 +110,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"In **Multinomial Linear Regression** (see bottom of figure above), 2 or more output nodes are used, one for each output class to be predicted. Each summation node uses its own set of weights to scale the input features and sum them together. Instead of passing the summed output of the weighted input features through a sigmoid squashing function, the output is often passed through a [softmax][] function (which in addition to squashing, like the sigmoid, the softmax normalizes each nodes' output value using the sum of all unnormalized nodes). (Details in the context of MNIST image to follow)\n",

|

||||

"\n",

|

||||

|

|

@ -107,6 +127,8 @@

|

|||

"execution_count": 3,

|

||||

"metadata": {

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true,

|

||||

"nbpresent": {

|

||||

"id": "138d1a78-02e2-4bd6-a20e-07b83f303563"

|

||||

}

|

||||

|

|

@ -131,7 +153,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"## Initialization"

|

||||

]

|

||||

|

|

@ -140,7 +165,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 5,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -151,7 +178,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"## Data reading\n",

|

||||

"\n",

|

||||

|

|

@ -169,7 +199,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 6,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -188,7 +220,11 @@

|

|||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 7,

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"collapsed": false,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [

|

||||

{

|

||||

"name": "stdout",

|

||||

|

|

@ -219,21 +255,27 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

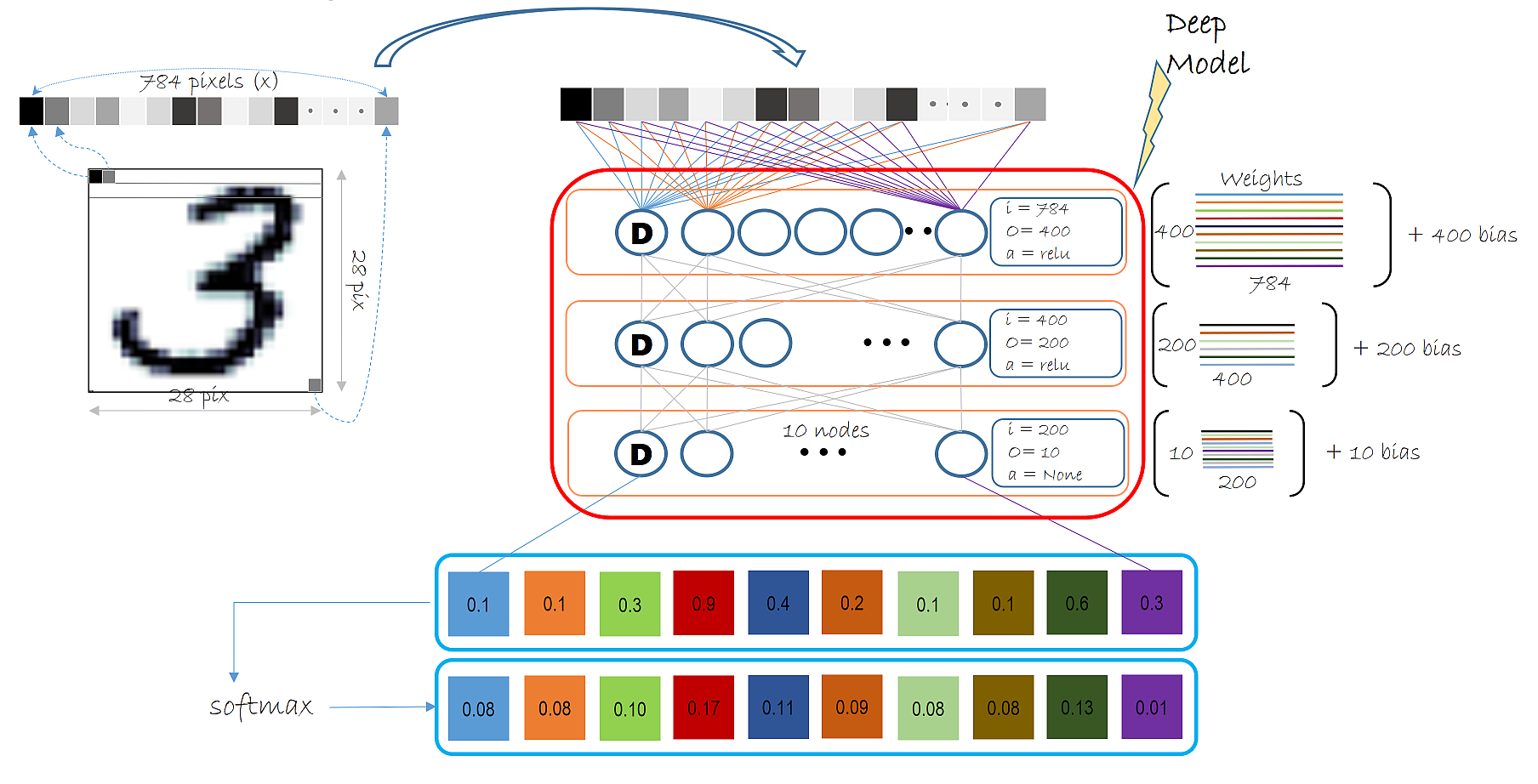

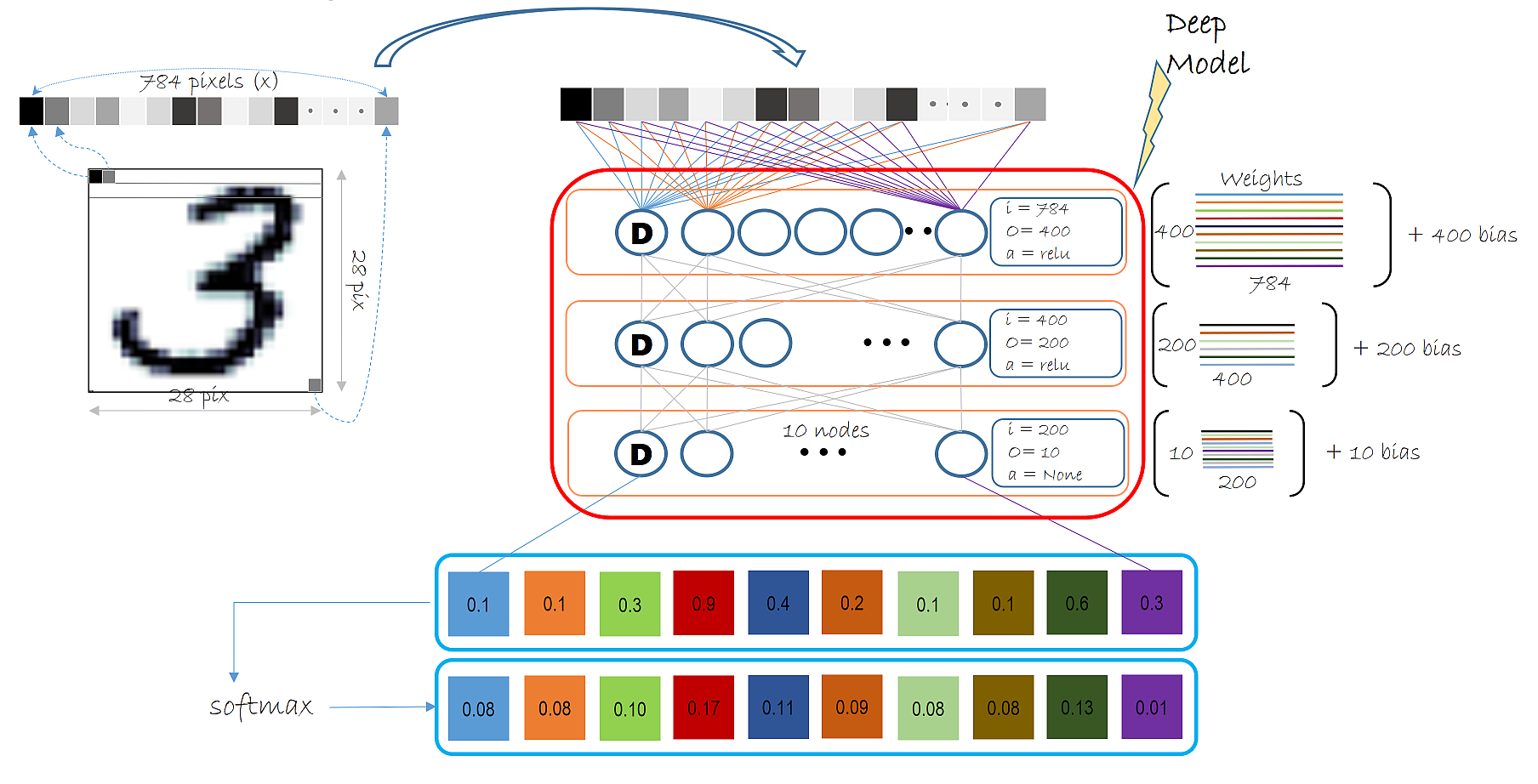

"# Model Creation\n",

|

||||

"## Model Creation\n",

|

||||

"\n",

|

||||

"A logistic regression (LR) network is a simple building block that has been effectively powering many ML \n",

|

||||

"applications in the past decade. The figure below summarizes the model in the context of the MNIST data.\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"\n",

|

||||

"LR is a simple linear model that takes as input, a vector of numbers describing the properties of what we are classifying (also known as a feature vector, $\\bf \\vec{x}$, the pixels in the input MNIST digit image) and emits the *evidence* ($z$). For each of the 10 digits, there is a vector of weights corresponding to the input pixels as show in the figure. These 10 weight vectors define the weight matrix ($\\bf {W}$) with dimension of 10 x 784. Each feature in the input layer is connected with a summation node by a corresponding weight $w$ (individual weight values from the $\\bf{W}$ matrix). Note there are 10 such nodes, 1 corresponding to each digit to be classified. "

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"The first step is to compute the evidence for an observation. \n",

|

||||

"\n",

|

||||

|

|

@ -246,7 +288,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"Network input and output: \n",

|

||||

"- **input** variable (a key CNTK concept): \n",

|

||||

|

|

@ -260,7 +305,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 8,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -270,7 +317,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"## Logistic Regression network setup\n",

|

||||

"\n",

|

||||

|

|

@ -281,7 +331,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 9,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -293,7 +345,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"`z` will be used to represent the output of a network."

|

||||

]

|

||||

|

|

@ -302,7 +357,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 10,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -312,20 +369,22 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"### Learning model parameters\n",

|

||||

"\n",

|

||||

"Same as the previous tutorial, we use the `softmax` function to map the accumulated evidences or activations to a probability distribution over the classes (Details of the [softmax function][] and other [activation][] functions).\n",

|

||||

"\n",

|

||||

"[softmax function]: http://cntk.ai/pythondocs/cntk.ops.html#cntk.ops.softmax\n",

|

||||

"\n",

|

||||

"[activation]: https://docs.microsoft.com/en-us/cognitive-toolkit/Brainscript-Activation-Functions"

|

||||

"Same as the previous tutorial, we use the `softmax` function to map the accumulated evidences or activations to a probability distribution over the classes (Details of the [softmax function](http://cntk.ai/pythondocs/cntk.ops.html#cntk.ops.softmax) and other [activation](https://cntk.ai/pythondocs/cntk.layers.layers.html#cntk.layers.layers.Activation) functions)."

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"## Training\n",

|

||||

"\n",

|

||||

|

|

@ -336,7 +395,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 11,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -345,9 +406,12 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"#### Evaluation\n",

|

||||

"### Evaluation\n",

|

||||

"\n",

|

||||

"In order to evaluate the classification, one can compare the output of the network which for each observation emits a vector of evidences (can be converted into probabilities using `softmax` functions) with dimension equal to number of classes."

|

||||

]

|

||||

|

|

@ -356,7 +420,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 12,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -365,29 +431,30 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"### Configure training\n",

|

||||

"\n",

|

||||

"The trainer strives to reduce the `loss` function by different optimization approaches, [Stochastic Gradient Descent][] (`sgd`) being one of the most popular one. Typically, one would start with random initialization of the model parameters. The `sgd` optimizer would calculate the `loss` or error between the predicted label against the corresponding ground-truth label and using [gradient-decent][] generate a new set model parameters in a single iteration. \n",

|

||||

"The trainer strives to reduce the `loss` function by different optimization approaches, [Stochastic Gradient Descent](https://en.wikipedia.org/wiki/Stochastic_gradient_descent) (`sgd`) being one of the most popular one. Typically, one would start with random initialization of the model parameters. The `sgd` optimizer would calculate the `loss` or error between the predicted label against the corresponding ground-truth label and using [gradient-decent](http://www.statisticsviews.com/details/feature/5722691/Getting-to-the-Bottom-of-Regression-with-Gradient-Descent.html) generate a new set model parameters in a single iteration. \n",

|

||||

"\n",

|

||||

"The aforementioned model parameter update using a single observation at a time is attractive since it does not require the entire data set (all observation) to be loaded in memory and also requires gradient computation over fewer datapoints, thus allowing for training on large data sets. However, the updates generated using a single observation sample at a time can vary wildly between iterations. An intermediate ground is to load a small set of observations and use an average of the `loss` or error from that set to update the model parameters. This subset is called a *minibatch*.\n",

|

||||

"\n",

|

||||

"With minibatches, we often sample observation from the larger training dataset. We repeat the process of model parameters update using different combination of training samples and over a period of time minimize the `loss` (and the error). When the incremental error rates are no longer changing significantly or after a preset number of maximum minibatches to train, we claim that our model is trained.\n",

|

||||

"\n",

|

||||

"One of the key optimization parameter is called the `learning_rate`. For now, we can think of it as a scaling factor that modulates how much we change the parameters in any iteration. We will be covering more details in later tutorial. \n",

|

||||

"With this information, we are ready to create our trainer. \n",

|

||||

"\n",

|

||||

"[optimization]: https://en.wikipedia.org/wiki/Category:Convex_optimization\n",

|

||||

"[Stochastic Gradient Descent]: https://en.wikipedia.org/wiki/Stochastic_gradient_descent\n",

|

||||

"[gradient-decent]: http://www.statisticsviews.com/details/feature/5722691/Getting-to-the-Bottom-of-Regression-with-Gradient-Descent.html"

|

||||

"One of the key [optimization](https://en.wikipedia.org/wiki/Category:Convex_optimization) parameter is called the `learning_rate`. For now, we can think of it as a scaling factor that modulates how much we change the parameters in any iteration. We will be covering more details in later tutorial. \n",

|

||||

"With this information, we are ready to create our trainer. "

|

||||

]

|

||||

},

|

||||

{

|

||||

"cell_type": "code",

|

||||

"execution_count": 13,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"outputs": [],

|

||||

"source": [

|

||||

|

|

@ -400,7 +467,10 @@

|

|||

},

|

||||

{

|

||||

"cell_type": "markdown",

|

||||

"metadata": {},

|

||||

"metadata": {

|

||||

"deletable": true,

|

||||

"editable": true

|

||||

},

|

||||

"source": [

|

||||

"First let us create some helper functions that will be needed to visualize different functions associated with training."

|

||||

]

|

||||

|

|

@ -409,7 +479,9 @@

|

|||

"cell_type": "code",

|

||||

"execution_count": 14,

|

||||

"metadata": {

|

||||

"collapsed": true

|

||||

"collapsed": true,

|

||||

"deletable": true,

|

||||

"editable": true

|