
CNTK

Latest news

2016-07-05. CNTK now supports Deconvolution and Unpooling. See the usage example in Network 4 of the MNIST sample.

2016-06-23. New License Terms for CNTK 1bit-SGD and related components.

Effective immediately, the License Terms for CNTK 1bit-SGD and related components have changed. The new Terms provide more flexibility and enable new usage scenarios, especially in commercial environments. Read the new Terms at the standard location. Please note that while the new Terms are significantly more flexible than the previous ones, they are still more restrictive than the main CNTK License. Consequently, everything described in the Enabling 1bit-SGD section of the Wiki remains valid.

2016-06-20. A post on Intel MKL and CNTK has been published in the Intel IT Peer Network.

2016-06-16. V 1.5 Binary release. NuGet Package with CNTK Model Evaluation Libraries.

A NuGet package has been added to the CNTK v1.5 binaries. See the CNTK Releases page and the NuGet package description.

2016-06-15. CNTK now supports building against a custom Intel® Math Kernel Library (MKL). See the setup instructions for your platform.

See all news.

What is CNTK

CNTK (http://www.cntk.ai/), the Computational Network Toolkit by Microsoft Research, is a unified deep-learning toolkit that describes neural networks as a series of computational steps via a directed graph. In this directed graph, leaf nodes represent input values or network parameters, while other nodes represent matrix operations upon their inputs. CNTK makes it easy to realize and combine popular model types such as feed-forward DNNs, convolutional nets (CNNs), and recurrent networks (RNNs/LSTMs). It implements stochastic gradient descent (SGD, error backpropagation) learning with automatic differentiation and parallelization across multiple GPUs and servers. CNTK has been available under an open-source license since April 2015. We hope that the community will take advantage of CNTK to share ideas more quickly through the exchange of open-source working code.
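To make the "computational network" idea concrete, here is a minimal, dependency-free sketch in plain Python of a directed graph whose leaf nodes hold inputs or parameters and whose interior nodes are operations, trained with SGD via reverse-mode automatic differentiation. This is not CNTK's API: the `Node` class and the `leaf`, `add`, `sub`, `mul`, `square`, `backward`, `sgd_step`, and `train` helpers are hypothetical names chosen for illustration, and scalars stand in for the matrices CNTK actually operates on.

```python
class Node:
    """A vertex in a computational graph (hypothetical sketch, not CNTK's API)."""
    def __init__(self, value, parents=(), local_grads=()):
        self.value = value              # result of this node's computation
        self.parents = parents          # input nodes (empty for leaf nodes)
        self.local_grads = local_grads  # d(self)/d(parent), one per parent
        self.grad = 0.0                 # d(loss)/d(self), filled in by backward()

def leaf(value):
    # Leaf nodes hold input values or trainable parameters.
    return Node(value)

def add(a, b):
    return Node(a.value + b.value, (a, b), (1.0, 1.0))

def sub(a, b):
    return Node(a.value - b.value, (a, b), (1.0, -1.0))

def mul(a, b):
    return Node(a.value * b.value, (a, b), (b.value, a.value))

def square(a):
    return Node(a.value ** 2, (a,), (2.0 * a.value,))

def backward(loss):
    # Order the graph topologically, then apply the chain rule in reverse.
    order, seen = [], set()
    def visit(n):
        if id(n) not in seen:
            seen.add(id(n))
            for p in n.parents:
                visit(p)
            order.append(n)
    visit(loss)
    for n in order:
        n.grad = 0.0
    loss.grad = 1.0
    for n in reversed(order):
        for p, g in zip(n.parents, n.local_grads):
            p.grad += g * n.grad  # accumulate: a node may feed several others

def sgd_step(params, lr):
    # Plain SGD: move each parameter against its gradient.
    for p in params:
        p.value -= lr * p.grad

def train(data, epochs=200, lr=0.05):
    # Fit pred = w * x + b with a squared-error loss, one sample per step.
    w, b = leaf(0.0), leaf(0.0)
    for _ in range(epochs):
        for x_val, y_val in data:
            x, y = leaf(x_val), leaf(y_val)
            loss = square(sub(add(mul(w, x), b), y))
            backward(loss)
            sgd_step([w, b], lr)
    return w.value, b.value

if __name__ == "__main__":
    # Samples drawn from y = 2x + 1; w and b should approach 2 and 1.
    w, b = train([(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)])
    print(round(w, 3), round(b, 3))
```

Each training step rebuilds the graph for one sample, runs the forward computation while building it, and then propagates gradients from the loss back to the leaves. A real toolkit does the same over tensors, batches the work, and distributes it across devices.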

Wiki: Go to the CNTK Wiki for all information on CNTK, including setup and examples.

License: See LICENSE.md in the root of this repository for the full license information.

Tutorial: Microsoft Computational Network Toolkit (CNTK) @ NIPS 2015 Workshops

Blogs:

- Microsoft Computational Network Toolkit offers most efficient distributed deep learning computational performance

- Microsoft researchers win ImageNet computer vision challenge (December 2015)

Performance

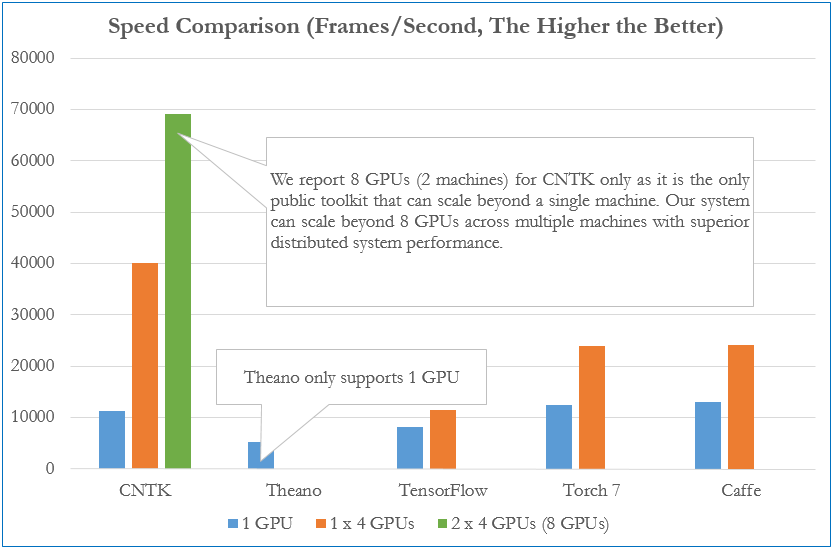

The figure above compares the processing speed (frames processed per second) of CNTK with that of four other well-known toolkits. The configuration uses a fully connected 4-layer neural network (see our benchmark scripts) and an effective minibatch size of 8192. All results were obtained on the same hardware with the respective latest public software versions as of Dec 3, 2015.

Citation

If you use this toolkit or part of it in your research, please cite the work as:

Amit Agarwal, Eldar Akchurin, Chris Basoglu, Guoguo Chen, Scott Cyphers, Jasha Droppo, Adam Eversole, Brian Guenter, Mark Hillebrand, T. Ryan Hoens, Xuedong Huang, Zhiheng Huang, Vladimir Ivanov, Alexey Kamenev, Philipp Kranen, Oleksii Kuchaiev, Wolfgang Manousek, Avner May, Bhaskar Mitra, Olivier Nano, Gaizka Navarro, Alexey Orlov, Hari Parthasarathi, Baolin Peng, Marko Radmilac, Alexey Reznichenko, Frank Seide, Michael L. Seltzer, Malcolm Slaney, Andreas Stolcke, Huaming Wang, Yongqiang Wang, Kaisheng Yao, Dong Yu, Yu Zhang, Geoffrey Zweig (in alphabetical order), "An Introduction to Computational Networks and the Computational Network Toolkit", Microsoft Technical Report MSR-TR-2014-112, 2014.

Disclaimer

CNTK is in active use at Microsoft and constantly evolving. There will be bugs.

Microsoft Open Source Code of Conduct

This project has adopted the Microsoft Open Source Code of Conduct. For more information, see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.