зеркало из https://github.com/microsoft/FERPlus.git

Родитель

e655756d01

Коммит

932556218b

10

README.md

10

README.md

|

|

@ -1,17 +1,17 @@

|

||||||

# FER+

|

#s FER+

|

||||||

The FER+ annotations provide a set of new labels for the standard Emotion FER dataset. In FER+, each image has been labeled by 10 crowd-sourced taggers, which provide better quality ground truth for still image emotion than the original FER labels. Having 10 taggers for each image enables researchers to estimate an emotion probability distribution per face. This allows constructing algorithms that produce statistical distributions or multi-label outputs instead of the conventional single-label output, as described in: https://arxiv.org/abs/1608.01041

|

The FER+ annotations provide a set of new labels for the standard Emotion FER dataset. In FER+, each image has been labeled by 10 crowd-sourced taggers, which provide better quality ground truth for still image emotion than the original FER labels. Having 10 taggers for each image enables researchers to estimate an emotion probability distribution per face. This allows constructing algorithms that produce statistical distributions or multi-label outputs instead of the conventional single-label output, as described in: https://arxiv.org/abs/1608.01041

|

||||||

|

|

||||||

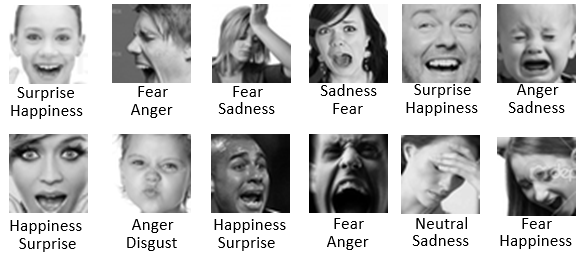

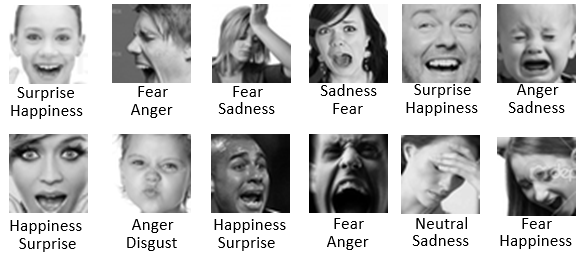

Here are some examples of the FER vs FER+ labels extracted from the abovementioned paper (FER top, FER+ bottom):

|

Here are some examples of the FER vs FER+ labels extracted from the abovementioned paper (FER top, FER+ bottom):

|

||||||

|

|

||||||

|

|

||||||

The new label file is named **_fer2013new.csv_** and contains the same number of rows as the original *fer2013.csv* label file with the same order, so that you infer which emotion tag belong to which image. Since we can't host the actual image content, please find the original FER data set here: https://www.kaggle.com/c/challenges-in-representation-learning-facial-expression-recognition-challenge/data

|

The new label file is named **_fer2013new.csv_** and contains the same number of rows as the original *fer2013.csv* label file with the same order, so that you infer which emotion tag belongs to which image. Since we can't host the actual image content, please find the original FER data set here: https://www.kaggle.com/c/challenges-in-representation-learning-facial-expression-recognition-challenge/data

|

||||||

|

|

||||||

We also provide a simple parsing code in Python to show how to parse the new label and how to convert it to a probability distribution (there are multiple ways to do it, we show an example). The parsing code is in [src/ReadFERPlus.py](https://github.com/Microsoft/FERPlus/tree/master/src)

|

We also provide a simple parsing code in Python to show how to parse the new labels and how to convert labels to a probability distribution (there are multiple ways to do it, we show an example). The parsing code is in [src/ReadFERPlus.py](https://github.com/Microsoft/FERPlus/tree/master/src)

|

||||||

|

|

||||||

The format of the CSV file is as follow: usage, neutral, happiness, surprise, sadness, anger, disgust, fear, contempt, unknown, NF. Columns "usage" is the same as the original FER label to differentiate between training, public test and private test sets. The other columns are the vote count for each emotion with the addition of unknown and NF (Not a Face).

|

The format of the CSV file is as follows: usage, neutral, happiness, surprise, sadness, anger, disgust, fear, contempt, unknown, NF. Columns "usage" is the same as the original FER label to differentiate between training, public test and private test sets. The other columns are the vote count for each emotion with the addition of unknown and NF (Not a Face).

|

||||||

|

|

||||||

# Citation

|

# Citation

|

||||||

If you use the new FER+ label or the sample code or part of it in your research, please cite the below:

|

If you use the new FER+ label or the sample code or part of it in your research, please cite the following:

|

||||||

|

|

||||||

**@inproceedings{BarsoumICMI2016,

|

**@inproceedings{BarsoumICMI2016,

|

||||||

title={Training Deep Networks for Facial Expression Recognition with Crowd-Sourced Label Distribution},

|

title={Training Deep Networks for Facial Expression Recognition with Crowd-Sourced Label Distribution},

|

||||||

|

|

|

||||||

Загрузка…

Ссылка в новой задаче