|

|

||

|---|---|---|

| .ci | ||

| .github | ||

| classification | ||

| contrib | ||

| detection | ||

| media | ||

| similarity | ||

| tests | ||

| tools | ||

| utils_cv | ||

| .flake8 | ||

| .gitignore | ||

| .pre-commit-config.yaml | ||

| CONTRIBUTING.md | ||

| LICENSE | ||

| MANIFEST.in | ||

| README.md | ||

| environment.yml | ||

| pyproject.toml | ||

| setup.py | ||

README.md

Computer Vision

This repository provides examples and best practice guidelines for building computer vision systems. All examples are given as Jupyter notebooks, and use PyTorch as the deep learning library.

Overview

The goal of this repository is to accelerate the development of computer vision applications. Rather than creating implementions from scratch, the focus is on providing examples and links to existing state-of-the-art libraries. In addition, having worked in this space for many years, we aim to answer common questions, point out frequently observed pitfalls, and show how to use the cloud for training and deployment.

Scenarios

The following is a summary of commonly used Computer Vision scenarios that are covered in this repository. For each of these scenarios, we give you the tools to effectively build your own model. This includes tasks such as fine-tuning your own model on your own data, to more complex tasks such as hard-negative mining and even model deployment.

| Scenario | Description |

|---|---|

| Classification | Image Classification is a supervised machine learning technique that allows you to learn and predict the category of a given image. |

| Similarity | Image Similarity is a way to compute a similarity score given a pair of images. Given an image, it allows you to identify the most similar image in a given dataset. |

| Detection | Object Detection is a supervised machine learning technique that allows you to detect the bounding box of an object within an image. |

Getting Started

To get started:

- (Optional) Create an Azure Data Science Virtual Machine with e.g. a V100 GPU (instructions, price table).

- Install Anaconda with Python >= 3.6. Miniconda. This step can be skipped if working on a Data Science Virtual Machine.

- Clone the repository

git clone https://github.com/Microsoft/ComputerVision - Install the conda environment, you'll find the

environment.ymlfile in the root directory. To build the conda environment:If you are on Windows uncomment

- git+https://github.com/philferriere/cocoapi.git#egg=pycocotools&subdirectory=PythonAPIbefore running the following If you are on Linux uncomment- pycocotools>=2.0conda env create -f environment.yml - Activate the conda environment and register it with Jupyter:

If you would like to use JupyterLab, installconda activate cv python -m ipykernel install --user --name cv --display-name "Python (cv)"jupyter-webrtcwidget:jupyter labextension install jupyter-webrtc - Start the Jupyter notebook server

jupyter notebook - At this point, you should be able to run the notebooks in this repo.

As an alternative to the steps above, and if one wants to install only the 'utils_cv' library (without creating a new conda environment), this can be done by running

pip install git+https://github.com/microsoft/ComputerVision.git@master#egg=utils_cv

or by downloading the repo and then running pip install . in the

root directory.

Introduction

Note that for certain computer vision problems, you may not need to build your own models. Instead, pre-built or easily customizable solutions exist which do not require any custom coding or machine learning expertise. We strongly recommend evaluating if these can sufficiently solve your problem. If these solutions are not applicable, or the accuracy of these solutions is not sufficient, then resorting to more complex and time-consuming custom approaches may be necessary.

The following Microsoft services offer simple solutions to address common computer vision tasks:

-

Vision Services are a set of pre-trained REST APIs which can be called for image tagging, face recognition, OCR, video analytics, and more. These APIs work out of the box and require minimal expertise in machine learning, but have limited customization capabilities. See the various demos available to get a feel for the functionality (e.g. Computer Vision).

-

Custom Vision is a SaaS service to train and deploy a model as a REST API given a user-provided training set. All steps including image upload, annotation, and model deployment can be performed using either the UI or a Python SDK. Training image classification or object detection models can be achieved with minimal machine learning expertise. The Custom Vision offers more flexibility than using the pre-trained cognitive services APIs, but requires the user to bring and annotate their own data.

Build Your Own Computer Vision Model

If you need to train your own model, the following services and links provide additional information that is likely useful.

-

Azure Machine Learning service (AzureML) is a service that helps users accelerate the training and deploying of machine learning models. While not specific for computer vision workloads, the AzureML Python SDK can be used for scalable and reliable training and deployment of machine learning solutions to the cloud. We leverage Azure Machine Learning in several of the notebooks within this repository (e.g. deployment to Azure Kubernetes Service)

-

Azure AI Reference architectures provide a set of examples (backed by code) of how to build common AI-oriented workloads that leverage multiple cloud components. While not computer vision specific, these reference architectures cover several machine learning workloads such as model deployment or batch scoring.

Computer Vision Domains

Most applications in computer vision (CV) fall into one of these 4 categories:

- Image classification: Given an input image, predict what object is present in the image. This is typically the easiest CV problem to solve, however classification requires objects to be reasonably large in the image.

- Object Detection: Given an input image, identify and locate which objects are present (using rectangular coordinates). Object detection can find small objects in an image. Compared to image classification, both model training and manually annotating images is more time-consuming in object detection, since both the label and location are required.

- Image Similarity Given an input image, find all similar objects in images from a reference dataset. Here, rather than predicting a label and/or rectangle, the task is to sort through a reference dataset to find objects similar to that found in the query image.

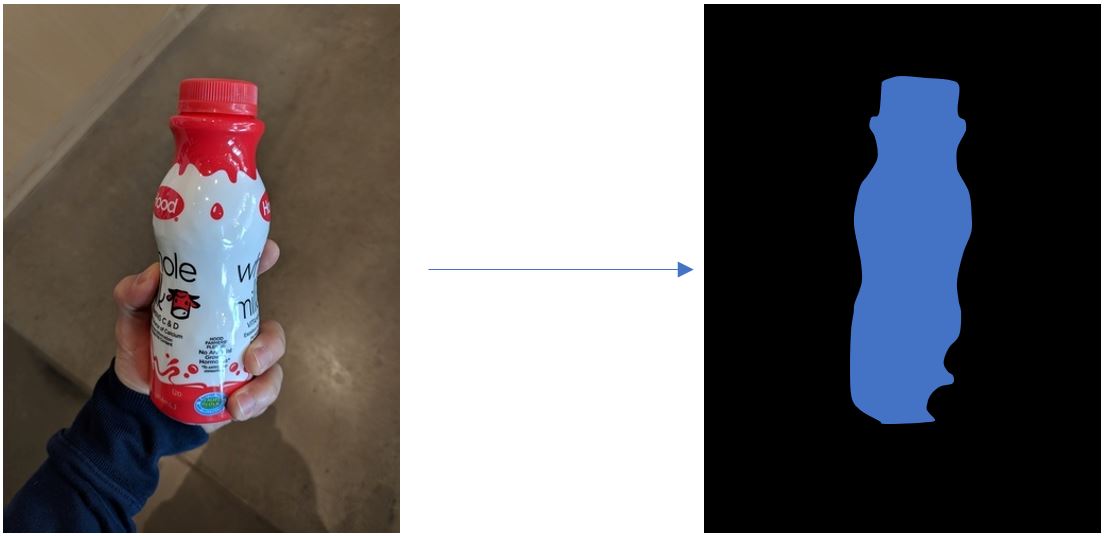

- Image Segmentation Given an input image, assign a label to every pixel (e.g., background, bottle, hand, sky, etc.). In practice, this problem is less common in industry, in large part due to time required to label the ground truth segmentation required in order to train a solution.

Build Status

VM Testing

| Build Type | Branch | Status | Branch | Status | |

|---|---|---|---|---|---|

| Linux GPU | master | staging | |||

| Linux CPU | master | staging | |||

| Windows GPU | master | staging | |||

| Windows CPU | master | staging | |||

| AzureML Notebooks | master | staging |

AzureML Testing

| Build Type | Branch | Status | Branch | Status | |

|---|---|---|---|---|---|

| Linxu GPU | master | staging | |||

| Linux CPU | master | staging | |||

| Notebook unit GPU | master | staging | |||

| Nightly GPU | master | staging |

Contributing

This project welcomes contributions and suggestions. Please see our contribution guidelines.

Data/Telemetry

The Azure Machine Learning image classification notebooks (20_azure_workspace_setup, 21_deployment_on_azure_container_instances, 22_deployment_on_azure_kubernetes_service, 23_aci_aks_web_service_testing, and 24_exploring_hyperparameters_on_azureml) collect browser usage data and send it to Microsoft to help improve our products and services. Read Microsoft's privacy statement to learn more.

To opt out of tracking, please go to the raw .ipynb files and remove the following line of code (the URL will be slightly different depending on the file):

""