diff --git a/CONTRIBUTORS.md b/CONTRIBUTORS.md

index 630e0595..fd3a69a0 100644

--- a/CONTRIBUTORS.md

+++ b/CONTRIBUTORS.md

@@ -28,7 +28,7 @@ list of contributions that are welcomed

How to Contribute

-----------------

-See [Contributor guide](docs/how_to/contribute.md) on tips for contributions.

+See the [Contributor guide](http://tvm.ai/contribute/) for tips on contributing.

Committers

diff --git a/README.md b/README.md

index 77d72c64..604d30ad 100644

--- a/README.md

+++ b/README.md

@@ -4,11 +4,7 @@

[](./LICENSE)

[](http://mode-gpu.cs.washington.edu:8080/job/dmlc/job/tvm/job/master/)

-[Installation](docs/how_to/install.md) |

[Documentation](http://docs.tvm.ai) |

-[Tutorials](http://tutorials.tvm.ai) |

-[Operator Inventory](topi) |

-[FAQ](docs/faq.md) |

[Contributors](CONTRIBUTORS.md) |

[Community](http://tvm.ai/community.html) |

[Release Notes](NEWS.md)

diff --git a/apps/android_deploy/README.md b/apps/android_deploy/README.md

index 9620a2f1..94cc9984 100644

--- a/apps/android_deploy/README.md

+++ b/apps/android_deploy/README.md

@@ -65,15 +65,15 @@ Here's a piece of example for `config.mk`.

```makefile

APP_ABI = arm64-v8a

-

+

APP_PLATFORM = android-17

-

+

# whether enable OpenCL during compile

USE_OPENCL = 1

-

+

# the additional include headers you want to add, e.g., SDK_PATH/adrenosdk/Development/Inc

ADD_C_INCLUDES = /opt/adrenosdk-osx/Development/Inc

-

+

# the additional link libs you want to add, e.g., ANDROID_LIB_PATH/libOpenCL.so

ADD_LDLIBS = libOpenCL.so

```

@@ -99,7 +99,7 @@ If everything goes well, you will find compile tools in `/opt/android-toolchain-

### Place compiled model on Android application assets folder

-Follow instruction to get compiled version model for android target [here.](https://github.com/dmlc/tvm/blob/master/docs/how_to/deploy_android.md#build-model-for-android-target)

+Follow the instructions [here](https://tvm.ai/deploy/android.html) to get a compiled model for the Android target.

Copied these compiled model deploy_lib.so, deploy_graph.json and deploy_param.params to apps/android_deploy/app/src/main/assets/ and modify TVM flavor changes on [java](https://github.com/dmlc/tvm/blob/master/apps/android_deploy/app/src/main/java/ml/dmlc/tvm/android/demo/MainActivity.java#L81)

diff --git a/apps/howto_deploy/README.md b/apps/howto_deploy/README.md

index 570c42ed..fda6251a 100644

--- a/apps/howto_deploy/README.md

+++ b/apps/howto_deploy/README.md

@@ -8,4 +8,4 @@ Type the following command to run the sample code under the current folder(need

./run_example.sh

```

-Checkout [How to Deploy TVM Modules](http://docs.tvm.ai/how_to/deploy.html) for more information.

+Check out [How to Deploy TVM Modules](http://docs.tvm.ai/deploy/cpp_deploy.html) for more information.

diff --git a/docs/api_links.rst b/docs/api_links.rst

index d749a244..909cfe36 100644

--- a/docs/api_links.rst

+++ b/docs/api_links.rst

@@ -1,5 +1,5 @@

-Links to C++/JS API References

-==============================

+Links to C++ and JS API References

+==================================

This page contains links to API references that are build with different doc build system.

diff --git a/docs/contribute/code_guide.rst b/docs/contribute/code_guide.rst

new file mode 100644

index 00000000..dc7d998c

--- /dev/null

+++ b/docs/contribute/code_guide.rst

@@ -0,0 +1,39 @@

+.. _code_guide:

+

+Code Guide and Tips

+===================

+

+This document records tips on the TVM codebase for reviewers and contributors.
+Most of them summarize lessons learned during the contribution process.

+

+

+C++ Code Styles

+---------------

+- Use the Google C/C++ style.

+- Document public-facing functions in doxygen format.
+- Favor concrete type declarations over ``auto`` as long as the type name is short.
+- Favor passing by const reference (e.g. ``const Expr&``) over passing by value.
+  The exception is when the function consumes the value via a copy or move;
+  in such cases, passing by value is better than passing by const reference.

+

+Python Code Styles

+------------------

+- Document functions and classes in `numpydoc `_ format.
+- Check your code style using ``make pylint``.

+

+

+Handle Integer Constant Expression

+----------------------------------

+We often need to handle constant integer expressions in TVM. Before doing so, the first question to ask is whether it is really necessary to get a constant integer. If a symbolic expression also works and lets the logic flow, we should use symbolic expressions as much as possible, so that the generated code works for shapes that are not known ahead of time.

+

+Note that in some cases we cannot know certain information, e.g. the sign of a symbolic variable. It is OK to make assumptions in such cases, while adding precise support when the variable is constant.

+

+If we do have to get a constant integer expression, we should get the constant value using type ``int64_t`` instead of ``int`` to avoid potential integer overflow. We can always reconstruct an integer with the corresponding expression type via ``make_const``. The following code gives an example.

+

+.. code:: c++

+

+  Expr CalculateExpr(Expr value) {
+    int64_t int_value = GetConstInt(value);
+    int_value = CalculateExprInInt64(int_value);
+    return make_const(value.type(), int_value);
+  }
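The overflow risk behind the ``int64_t`` advice can be seen with a small Python sketch (not TVM code). The tensor shape below is made up, and ``ctypes.c_int32`` / ``ctypes.c_int64`` are used only to emulate storing the value in a 32-bit ``int`` versus an ``int64_t``; neither ctypes type checks for overflow, so the 32-bit value silently wraps around.

```python
import ctypes


def num_elements(shape):
    """Exact element count of a shape (Python ints never overflow)."""
    total = 1
    for dim in shape:
        total *= dim
    return total


# Hypothetical shape: batch 64, 3 channels, 4096 x 4096 pixels.
shape = (64, 3, 4096, 4096)
exact = num_elements(shape)

# Emulate storing the result in a C++ `int` (32-bit) vs. an `int64_t`.
as_int32 = ctypes.c_int32(exact).value  # wraps around to a negative number
as_int64 = ctypes.c_int64(exact).value  # holds the exact value

print(exact, as_int32, as_int64)
```

This prints `3221225472 -1073741824 3221225472`: the element count is 3 * 2^30, which exceeds the 32-bit signed maximum but fits comfortably in 64 bits.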

diff --git a/docs/contribute/code_review.rst b/docs/contribute/code_review.rst

new file mode 100644

index 00000000..efbb77c6

--- /dev/null

+++ b/docs/contribute/code_review.rst

@@ -0,0 +1,47 @@

+Perform Code Reviews

+====================

+

+This is a general guideline for code reviewers. First of all, while it is great to add new features to a project, we must be aware that each line of code we introduce also brings **technical debt** that we may eventually have to pay.

+

+Open source code is maintained by a community with diverse backgrounds, which makes it even more important to write clear, documented, and maintainable code. Code review is a shepherding process that spots potential problems and improves the quality of the code. We should not, however, rely on the code review process to get the code into a ready state. Contributors are encouraged to polish their code to a ready state before requesting reviews. This is especially expected of code owner and committer candidates.

+

+Here is a checklist for code reviews; it is also a helpful reference for contributors.

+

+Hold the Highest Standard

+-------------------------

+The first rule for code reviewers is to always keep the highest standard and not approve code just to "be friendly". Good, informative critiques help everyone learn and prevent technical debt at an early stage.

+

+Ensure Test Coverage

+--------------------

+Each new feature should introduce test cases, and each bug fix should include regression tests that prevent the problem from happening again.

+

+Documentation is Mandatory
+--------------------------

+Documentation is often overlooked. New functions, or changes to existing functions, should be reflected directly in the documentation. A new feature is meaningless without documentation that makes it accessible. See more at :ref:`doc_guide`.

+

+Deliberate on User-facing API

+-----------------------------

+A good, minimal, and stable API is critical to the project’s life. A good API makes a huge difference. Always think very carefully about all aspects, including naming, argument definitions, and behavior. One good rule is to stay consistent with the APIs of existing well-known packages when features overlap. For example, tensor operation APIs should always be consistent with numpy.

+

+Minimum Dependency

+------------------

+Always be cautious when introducing dependencies. While it is important to reuse code and not reinvent the wheel, dependencies can increase the burden on users during deployment. A good design principle is to depend on a package only when the user actually uses the feature that needs it.

+

+Ensure Readability

+------------------

+While it is hard to implement a new feature, it is even harder to make others understand and maintain the code you wrote. It is common for a PMC member or committer to be unable to understand certain contributions. In such cases, a reviewer should say "I don’t understand" and ask the contributor to clarify. We highly encourage code comments that explain the code logic along with the code.

+

+Concise Implementation

+----------------------

+Some basic principles apply here: favor vectorized array code over loops, and check whether an existing API already solves the problem.

+

+Document Lessons in Code Reviews

+--------------------------------

+When you find common lessons that can be summarized in the guideline,
+add them to the :ref:`code_guide`.
+It is always good to refer to the guideline document when requesting changes,
+so the lessons can be shared with the whole community.

+

+Respect each other

+------------------

+Code reviewers and contributors are paying the most precious currency in the world: time. We are volunteers in the community who spend our time building good code, helping each other, learning, and having fun hacking.
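The *Minimum Dependency* guideline (depend on a package only when the user actually uses the feature) is often implemented in Python by deferring the import to the function that needs it. A minimal sketch, assuming a pure-Python package; ``load_optional`` and ``to_json_report`` are hypothetical names, and the stdlib ``json`` module stands in for a real optional dependency so the snippet runs anywhere:

```python
import importlib


def load_optional(module_name):
    """Import an optional dependency at call time, with a helpful error if it is missing."""
    try:
        return importlib.import_module(module_name)
    except ImportError as err:
        raise ImportError(
            "This feature requires the optional package '%s'; "
            "install it to use this feature." % module_name
        ) from err


def to_json_report(data):
    # The dependency is only imported when this feature is actually used,
    # so users who never call it do not need the package installed.
    json = load_optional("json")
    return json.dumps(data, sort_keys=True)


print(to_json_report({"b": 1, "a": 2}))
```

With this pattern, importing the package itself never fails because of an optional dependency; the ``ImportError`` surfaces only when the feature that needs it is called.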

diff --git a/docs/contribute/document.rst b/docs/contribute/document.rst

new file mode 100644

index 00000000..ab67fbec

--- /dev/null

+++ b/docs/contribute/document.rst

@@ -0,0 +1,88 @@

+.. _doc_guide:

+

+Write Documentation and Tutorials
+=================================

+

+We use `Sphinx `_ for the main documentation.
+Sphinx supports both reStructuredText and Markdown.
+When possible, we encourage using reStructuredText as it has richer features.
+Note that Python docstrings and tutorials allow you to embed reStructuredText syntax.

+

+

+Document Python

+---------------

+We use `numpydoc `_
+format to document functions and classes.
+The following snippet gives an example docstring.
+We always document all public functions and,
+when necessary, provide a usage example of the features we support (as shown below).

+

+.. code:: python

+

+    def myfunction(arg1, arg2, arg3=3):
+        """Briefly describe my function.
+
+        Parameters
+        ----------
+        arg1 : Type1
+            Description of arg1
+
+        arg2 : Type2
+            Description of arg2
+
+        arg3 : Type3, optional
+            Description of arg3
+
+        Returns
+        -------
+        rv1 : RType1
+            Description of return type one
+
+        Examples
+        --------
+        .. code:: python
+
+            # Example usage of myfunction
+            x = myfunction(1, 2)
+        """
+        return rv1

+

+Be careful to leave blank lines between sections of your documents.
+In the above case, there has to be a blank line before ``Parameters``, ``Returns`` and ``Examples``
+in order for the doc to be built correctly. To add a new function to the doc,
+we need to add the `sphinx.autodoc `_
+rules to `docs/api/python `_.
+You can refer to the existing files under this folder to see how to add functions.

+

+

+Document C++

+------------

+We use the doxygen format to document C++ functions.
+The following snippet shows an example of a C++ docstring.

+

+.. code:: c++

+

+  /*!
+   * \brief Description of my function
+   * \param arg1 Description of arg1
+   * \param arg2 Description of arg2
+   * \returns describe return value
+   */
+  int myfunction(int arg1, int arg2) {
+    // When necessary, also add comments to clarify internal logic
+  }

+

+Besides documenting function usage, we also highly recommend that contributors
+add comments about the code logic to improve readability.

+

+

+Write Tutorials

+---------------

+We use `sphinx-gallery `_ to build Python tutorials.
+You can find the source code under `tutorials `_; it is quite self-explanatory.
+One thing worth noting is that the comment blocks are written in reStructuredText instead of Markdown, so be aware of the syntax.

+

+The tutorial code runs on our build server to generate the document page,
+so there may be restrictions, such as not being able to access a remote Raspberry Pi.
+In such cases, add a flag variable to the tutorial (e.g. ``use_rasp``) that lets users easily switch to the real device by changing one flag.
+Then use the existing environment to demonstrate the usage.
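A minimal sketch of the single-flag pattern for tutorials, assuming a Python tutorial: the build server runs with ``use_rasp = False``, and readers flip the one flag to target a real board. ``get_run_config`` is a hypothetical helper, and the target strings are illustrative values to check against the TVM documentation for your device, not a confirmed API contract.

```python
# Set to True to run on a real Raspberry Pi; the build server keeps it False.
use_rasp = False


def get_run_config(use_rasp):
    """Return an (illustrative) compilation target and whether to use a remote device."""
    if use_rasp:
        # Cross-compile for the ARM board and run through a remote connection.
        return {"target": "llvm -target=armv7l-linux-gnueabihf", "remote": True}
    # Fallback used on the build server: compile and run on the local CPU.
    return {"target": "llvm", "remote": False}


config = get_run_config(use_rasp)
print(config)
```

Keeping the device-specific branch behind one flag means the rest of the tutorial runs unchanged on the build server, and the generated page still shows the real-device code path.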

diff --git a/docs/contribute/git_howto.md b/docs/contribute/git_howto.md

new file mode 100644

index 00000000..53ff89b1

--- /dev/null

+++ b/docs/contribute/git_howto.md

@@ -0,0 +1,57 @@

+# Git Usage Tips

+

+Here are some tips for git workflow.

+

+## How to resolve conflicts with master
+- First, rebase onto the most recent master

+```bash

+# The first two steps can be skipped once you have done them.

+git remote add upstream [url to tvm repo]

+git fetch upstream

+git rebase upstream/master

+```

+- Git may show some conflicts it cannot merge, say `conflicted.py`.
+  - Manually modify the file to resolve the conflict.
+  - After you have resolved the conflict, mark it as resolved by

+```bash

+git add conflicted.py

+```

+- Then you can continue the rebase by

+```bash

+git rebase --continue

+```

+- Finally, push to your fork; you may need to force push here.

+```bash

+git push --force

+```

+

+## How to combine multiple commits into one

+Sometimes we want to combine multiple commits, especially when later commits are only fixes to previous ones,
+to create a PR with a set of meaningful commits. You can do it with the following steps.
+- Before doing so, configure git's default editor if you haven't done so before.

+```bash

+git config core.editor the-editor-you-like

+```

+- Assuming we want to merge the last 3 commits, type the following command

+```bash

+git rebase -i HEAD~3

+```

+- A text editor will pop up. Set the first commit as `pick`, and change the later ones to `squash`.
+- After you save the file, another text editor will pop up asking you to modify the combined commit message.
+- Push the changes to your fork; you need to force push.

+```bash

+git push --force

+```

+

+## Reset to the most recent master

+You can always use git reset to reset your version to the most recent master.

+Note that all your ***local changes will get lost***.

+So only do it when you have no local changes or when your pull request has just been merged.
+```bash
+git reset --hard [commit hash of master]

+git push --force

+```

+

+## What is the consequence of force push

+The previous tips require force push because they rewrite the commit history.
+It is fine to force push to your own fork, as long as the commits changed are only yours.

diff --git a/docs/contribute/index.rst b/docs/contribute/index.rst

new file mode 100644

index 00000000..7b757aac

--- /dev/null

+++ b/docs/contribute/index.rst

@@ -0,0 +1,30 @@

+Contribute to TVM

+=================

+

+TVM has been developed by community members.

+Everyone is welcome to contribute.

+We value all forms of contributions, including, but not limited to:

+

+- Code reviews of existing patches.

+- Documentation and usage examples

+- Community participation in forums and issues.

+- Code readability and developer guide

+

+  - We welcome contributions that add code comments
+    to improve readability
+  - We also welcome contributions to docs that explain the
+    design choices of the internals.

+

+- Test cases to make the codebase more robust

+- Tutorials, blog posts, talks that promote the project.

+

+Here are guidelines for contributing to various aspects of the project:

+

+.. toctree::

+ :maxdepth: 2

+

+ code_review

+ document

+ code_guide

+ pull_request

+ git_howto

diff --git a/docs/contribute/pull_request.rst b/docs/contribute/pull_request.rst

new file mode 100644

index 00000000..80a0448c

--- /dev/null

+++ b/docs/contribute/pull_request.rst

@@ -0,0 +1,26 @@

+Submit a Pull Request

+=====================

+

+This is a quick guide to submitting a pull request; please also refer to the detailed guidelines.

+

+- Before submitting, please rebase your code on the most recent version of master. You can do it by
+
+  .. code:: bash
+
+    git remote add upstream [url to tvm repo]
+    git fetch upstream
+    git rebase upstream/master

+

+- Make sure the code style check passes by typing ``make lint``, and that all existing test cases pass.
+- Add test cases to cover the new features or bug fixes the patch introduces.
+- Document the code you wrote; see more at :ref:`doc_guide`.
+- Send the pull request and fix the problems reported by automatic checks.
+  Request code reviews from other contributors and improve your patch according to the feedback.

+

+  - To get your code reviewed quickly, we encourage you to help review others' code so they can return the favor.
+  - Code review is a shepherding process that helps improve contributors' code quality.
+    We should treat it proactively and improve the code as much as possible before the review.
+    We highly value patches that can get in without extensive reviews.
+  - The detailed guidelines summarize useful lessons.

+

+- The patch can be merged after the reviewers approve the pull request.

diff --git a/docs/deploy/android.md b/docs/deploy/android.md

new file mode 100644

index 00000000..ca431693

--- /dev/null

+++ b/docs/deploy/android.md

@@ -0,0 +1,25 @@

+# Deploy to Android

+

+

+## Build model for Android Target

+

+NNVM compilation of a model for the Android target can follow the same approach as android_rpc.

+

+A reference example can be found at [chainer-nnvm-example](https://github.com/tkat0/chainer-nnvm-example).

+

+The above example directly runs the compiled model on an RPC target. The modification below to [run_mobile.py](https://github.com/tkat0/chainer-nnvm-example/blob/5b97fd4d41aa4dde4b0aceb0be311054fb5de451/run_mobile.py#L64) saves the compilation output, which is required on the Android target.

+

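+```python
+import nnvm.compiler
+from tvm.contrib import ndk
+
+# `graph`, `lib` and `params` are the outputs of nnvm.compiler.build(...) in run_mobile.py
+lib.export_library("deploy_lib.so", ndk.create_shared)
+with open("deploy_graph.json", "w") as fo:
+    fo.write(graph.json())
+with open("deploy_param.params", "wb") as fo:
+    fo.write(nnvm.compiler.save_param_dict(params))
+```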

+

+`deploy_lib.so`, `deploy_graph.json` and `deploy_param.params` go to the Android target.

+

+## TVM Runtime for Android Target

+

+Refer [here](https://github.com/dmlc/tvm/blob/master/apps/android_deploy/README.md#build-and-installation) to build the CPU or OpenCL flavor of the TVM runtime for the Android target.
+For loading and executing a model with the Android Java TVM API, refer to this [java](https://github.com/dmlc/tvm/blob/master/apps/android_deploy/app/src/main/java/ml/dmlc/tvm/android/demo/MainActivity.java) sample source.

diff --git a/docs/deploy/cpp_deploy.md b/docs/deploy/cpp_deploy.md

new file mode 100644

index 00000000..d02d33d1

--- /dev/null

+++ b/docs/deploy/cpp_deploy.md

@@ -0,0 +1,35 @@

+Deploy TVM Module using C++ API

+===============================

+

+We provide an example of how to deploy TVM modules in [apps/howto_deploy](https://github.com/dmlc/tvm/tree/master/apps/howto_deploy).
+
+To run the example, you can use the following command:

+

+```bash

+cd apps/howto_deploy

+./run_example.sh

+```

+

+Get TVM Runtime Library

+-----------------------

+

+The only thing we need is to link against a TVM runtime on the target platform.
+TVM provides a minimal runtime, which takes around 300K to 600K depending on how many modules we use.
+In most cases, we can use the `libtvm_runtime.so` that comes with the build.

+

+If you find it hard to build `libtvm_runtime`, check out [tvm_runtime_pack.cc](https://github.com/dmlc/tvm/tree/master/apps/howto_deploy/tvm_runtime_pack.cc).
+It is an all-in-one example file that gives you the TVM runtime.
+You can compile this file using your build system and include it in your project.

+

+You can also check out [apps](https://github.com/dmlc/tvm/tree/master/apps/) for example applications built with TVM on iOS, Android, and other platforms.

+

+Dynamic Library vs. System Module

+---------------------------------

+TVM provides two ways to use the compiled library.
+You can check out [prepare_test_libs.py](https://github.com/dmlc/tvm/tree/master/apps/howto_deploy/prepare_test_libs.py)
+for how to generate the library and [cpp_deploy.cc](https://github.com/dmlc/tvm/tree/master/apps/howto_deploy/cpp_deploy.cc) for how to use it.

+

+- Store library as a shared library and dynamically load the library into your project.

+- Bundle the compiled library into your project in system module mode.

+

+Dynamic loading is more flexible and can load new modules on the fly. A system module is a more `static` approach. We can use a system module in places where dynamic library loading is banned.

diff --git a/docs/deploy/index.rst b/docs/deploy/index.rst

new file mode 100644

index 00000000..bf607a4b

--- /dev/null

+++ b/docs/deploy/index.rst

@@ -0,0 +1,17 @@

+Deploy and Integration

+======================

+

+This page contains guidelines on how to deploy TVM to various platforms

+as well as how to integrate it with your project.

+

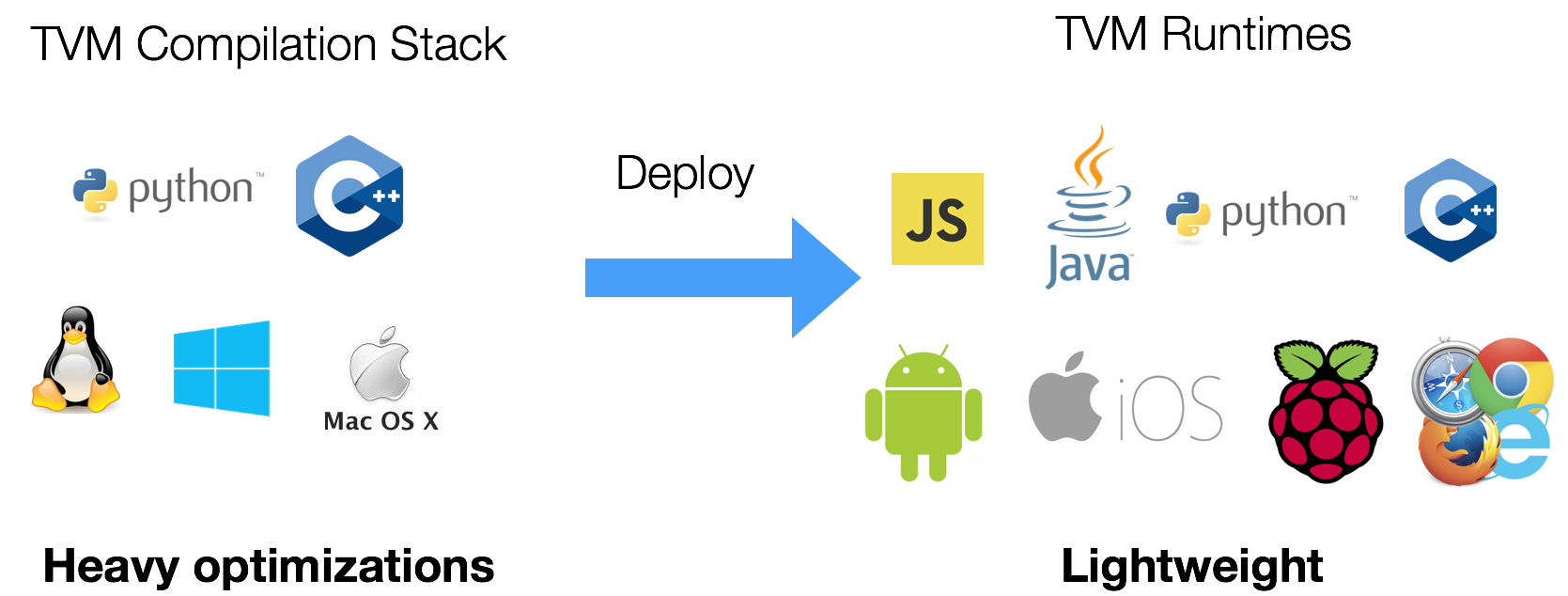

+.. image:: http://www.tvm.ai/images/release/tvm_flexible.png

+

+In order to integrate the compiled module, we do not have to ship the compiler stack. We only need to use a lightweight runtime API that can be integrated into various platforms.

+

+.. toctree::

+ :maxdepth: 2

+

+ cpp_deploy

+ android

+ nnvm

+ integrate

diff --git a/docs/how_to/integrate.md b/docs/deploy/integrate.md

similarity index 93%

rename from docs/how_to/integrate.md

rename to docs/deploy/integrate.md

index 9819d4ad..b6f3b1fa 100644

--- a/docs/how_to/integrate.md

+++ b/docs/deploy/integrate.md

@@ -3,7 +3,6 @@ Integrate TVM into Your Project

TVM's runtime is designed to be lightweight and portable.

There are several ways you can integrate TVM into your project.

-If you are looking for minimum deployment of a compiled module, take a look at [deployment guide](deploy.md)

This article introduces possible ways to integrate TVM

as a JIT compiler to generate functions on your system.

diff --git a/docs/how_to/deploy.md b/docs/deploy/nnvm.md

similarity index 54%

rename from docs/how_to/deploy.md

rename to docs/deploy/nnvm.md

index 69fedaa9..aa6c39fa 100644

--- a/docs/how_to/deploy.md

+++ b/docs/deploy/nnvm.md

@@ -1,71 +1,7 @@

-How to Deploy Compiled Modules

-==============================

-We provide an example on how to deploy TVM modules in [apps/howto_deploy](https://github.com/dmlc/tvm/tree/master/apps/howto_deploy)

-

-To run the example, you can use the following command

-

-```bash

-cd apps/howto_deploy

-./run_example.sh

-```

-

-Get TVM Runtime Library

------------------------

-

-

-

-The only thing we need is to link to a TVM runtime in your target platform.

-TVM provides a minimum runtime, which costs around 300K to 600K depending on how much modules we use.

-In most cases, we can use ```libtvm_runtime.so``` that comes with the build.

-

-If somehow you find it is hard to build ```libtvm_runtime```, checkout [tvm_runtime_pack.cc](https://github.com/dmlc/tvm/tree/master/apps/howto_deploy/tvm_runtime_pack.cc).

-It is an example all in one file that gives you TVM runtime.

-You can compile this file using your build system and include this into your project.

-

-You can also checkout [apps](https://github.com/dmlc/tvm/tree/master/apps/) for example applications build with TVM on iOS, Android and others.

-

-Dynamic Library vs. System Module

----------------------------------

-TVM provides two ways to use the compiled library.

-You can checkout [prepare_test_libs.py](https://github.com/dmlc/tvm/tree/master/apps/howto_deploy/prepare_test_libs.py)

-on how to generate the library and [cpp_deploy.cc](https://github.com/dmlc/tvm/tree/master/apps/howto_deploy/cpp_deploy.cc) on how to use them.

-

-- Store library as a shared library and dynamically load the library into your project.

-- Bundle the compiled library into your project in system module mode.

-

-Dynamic loading is more flexible and can load new modules on the fly. System module is a more ```static``` approach. We can use system module in places where dynamic library loading is banned.

-

-Deploy to Android

------------------

-### Build model for Android Target

-

-NNVM compilation of model for android target could follow same approach like android_rpc.

-

-An reference exampe can be found at [chainer-nnvm-example](https://github.com/tkat0/chainer-nnvm-example)

-

-Above example will directly run the compiled model on RPC target. Below modification at [rum_mobile.py](https://github.com/tkat0/chainer-nnvm-example/blob/5b97fd4d41aa4dde4b0aceb0be311054fb5de451/run_mobile.py#L64) will save the compilation output which is required on android target.

-

-```

-lib.export_library("deploy_lib.so", ndk.create_shared)

-with open("deploy_graph.json", "w") as fo:

- fo.write(graph.json())

-with open("deploy_param.params", "wb") as fo:

- fo.write(nnvm.compiler.save_param_dict(params))

-```

-

-deploy_lib.so, deploy_graph.json, deploy_param.params will go to android target.

-

-### TVM Runtime for Android Target

-

-Refer [here](https://github.com/dmlc/tvm/blob/master/apps/android_deploy/README.md#build-and-installation) to build CPU/OpenCL version flavor TVM runtime for android target.

-From android java TVM API to load model & execute can be refered at this [java](https://github.com/dmlc/tvm/blob/master/apps/android_deploy/app/src/main/java/ml/dmlc/tvm/android/demo/MainActivity.java) sample source.

-

-

-Deploy NNVM Modules

--------------------

+# Deploy NNVM Modules

NNVM compiled modules are fully embedded in TVM runtime as long as ```GRAPH_RUNTIME``` option

-is enabled in tvm runtime. Check out the [TVM documentation](http://docs.tvm.ai/) for

-how to deploy TVM runtime to your system.

+is enabled in the TVM runtime.

+

In a nutshell, we will need three items to deploy a compiled module.

Checkout our tutorials on getting started with NNVM compiler for more details.

diff --git a/docs/dev/index.rst b/docs/dev/index.rst

index 5056630f..3fb05293 100644

--- a/docs/dev/index.rst

+++ b/docs/dev/index.rst

@@ -1,5 +1,5 @@

-TVM Design and Developer Guide

-==============================

+Design and Developer Guide

+==========================

Building a compiler stack for deep learning systems involves many many systems-level design decisions.

In this part of documentation, we share the rationale for the specific choices made when designing TVM.

diff --git a/docs/faq.md b/docs/faq.md

index 92cb886f..54df0ced 100644

--- a/docs/faq.md

+++ b/docs/faq.md

@@ -4,7 +4,7 @@ This document contains frequently asked questions.

How to Install

--------------

-See [Installation](https://github.com/dmlc/tvm/blob/master/docs/how_to/install.md)

+See [Installation](http://tvm.ai/install/)

TVM's relation to Other IR/DSL Projects

---------------------------------------

diff --git a/docs/how_to/contribute.md b/docs/how_to/contribute.md

deleted file mode 100644

index 9818f829..00000000

--- a/docs/how_to/contribute.md

+++ /dev/null

@@ -1,102 +0,0 @@

-# Contribute to TVM

-

-TVM has been developed by community members.

-Everyone is more than welcome to contribute. It is a way to make the project better and more accessible to more users.

-

-- Please add your name to [CONTRIBUTORS.md](https://github.com/dmlc/tvm/blob/master/CONTRIBUTORS.md)

-- Please update [NEWS.md](https://github.com/dmlc/tvm/blob/master/NEWS.md) to add note on your changes to the API or added a new document.

-

-## Guidelines

-* [Submit Pull Request](#submit-pull-request)

-* [Git Workflow Howtos](#git-workflow-howtos)

- - [How to resolve conflict with master](#how-to-resolve-conflict-with-master)

- - [How to combine multiple commits into one](#how-to-combine-multiple-commits-into-one)

- - [What is the consequence of force push](#what-is-the-consequence-of-force-push)

-* [Code Quality](#code-quality)

-* [Testcases](#testcases)

-* [Core Library](#core-library)

-* [Python Package](#python-package)

-

-## Submit Pull Request

-* Before submit, please rebase your code on the most recent version of master, you can do it by

-```bash

-git remote add upstream [url to tvm repo]

-git fetch upstream

-git rebase upstream/master

-```

-* If you have multiple small commits,

- it might be good to merge them together(use git rebase then squash) into more meaningful groups.

-* Send the pull request!

- - Fix the problems reported by automatic checks

- - If you are contributing a new module or new function, add a test.

-

-## Git Workflow Howtos

-### How to resolve conflict with master

-- First rebase to most recent master

-```bash

-# The first two steps can be skipped after you do it once.

-git remote add upstream [url to tvm repo]

-git fetch upstream

-git rebase upstream/master

-```

-- The git may show some conflicts it cannot merge, say ```conflicted.py```.

- - Manually modify the file to resolve the conflict.

- - After you resolved the conflict, mark it as resolved by

-```bash

-git add conflicted.py

-```

-- Then you can continue rebase by

-```bash

-git rebase --continue

-```

-- Finally push to your fork, you may need to force push here.

-```bash

-git push --force

-```

-

-### How to combine multiple commits into one

-Sometimes we want to combine multiple commits, especially when later commits are only fixes to previous ones,

-to create a PR with set of meaningful commits. You can do it by following steps.

-- Before doing so, configure the default editor of git if you haven't done so before.

-```bash

-git config core.editor the-editor-you-like

-```

-- Assume we want to merge last 3 commits, type the following commands

-```bash

-git rebase -i HEAD~3

-```

-- It will pop up an text editor. Set the first commit as ```pick```, and change later ones to ```squash```.

-- After you saved the file, it will pop up another text editor to ask you modify the combined commit message.

-- Push the changes to your fork, you need to force push.

-```bash

-git push --force

-```

-

-### Reset to the most recent master

-You can always use git reset to reset your version to the most recent master.

-Note that all your ***local changes will get lost***.

-So only do it when you do not have local changes or when your pull request just get merged.

-```bash

-git reset --hard [hash tag of master]

-git push --force

-```

-

-### What is the consequence of force push

-The previous two tips requires force push, this is because we altered the path of the commits.

-It is fine to force push to your own fork, as long as the commits changed are only yours.

-

-## Code Quality

-

-Each patch we made can also become a potential technical debt in the future. We value high-quality code that can be understood and maintained by the community. We encourage contributors to submit coverage test cases, write or contribute comments on their code to explain the logic and provide reviews feedbacks to hold these standards.

-

-## Testcases

-- All the testcases are in tests

-

-## Core Library

-- Follow Google C style for C++.

-- We use doxygen to document all the interface code.

-- You can reproduce the linter checks by typing ```make lint```

-

-## Python Package

-- Always add docstring to the new functions in numpydoc format.

-- You can reproduce the linter checks by typing ```make lint```

diff --git a/docs/index.rst b/docs/index.rst

index 28f6b405..f46775d1 100644

--- a/docs/index.rst

+++ b/docs/index.rst

@@ -6,11 +6,10 @@ Get Started

.. toctree::

:maxdepth: 1

- how_to/install

+ install/index

tutorials/index

- how_to/deploy

- how_to/integrate

- how_to/contribute

+ deploy/index

+ contribute/index

faq

API Reference

diff --git a/docs/how_to/install.md b/docs/install/index.rst

similarity index 54%

rename from docs/how_to/install.md

rename to docs/install/index.rst

index c71c823d..45697d53 100644

--- a/docs/how_to/install.md

+++ b/docs/install/index.rst

@@ -1,95 +1,113 @@

-Installation Guide

-==================

+Installation

+============

This page gives instructions on how to build and install the tvm package from

scratch on various systems. It consists of two steps:

1. First build the shared library from the C++ codes (`libtvm.so` for linux/osx and `libtvm.dll` for windows).

2. Setup for the language packages (e.g. Python Package).

-To get started, clone tvm repo from github. It is important to clone the submodules along, with ```--recursive``` option.

-```bash

-git clone --recursive https://github.com/dmlc/tvm

-```

+To get started, clone the tvm repo from GitHub. It is important to clone the submodules along with it, using the ``--recursive`` option.

+

+.. code:: bash

+

+ git clone --recursive https://github.com/dmlc/tvm

+

For windows users who use github tools, you can open the git shell, and type the following command.

-```bash

-git submodule init

-git submodule update

-```

-## Contents

-- [Build the Shared Library](#build-the-shared-library)

-- [Python Package Installation](#python-package-installation)

+.. code:: bash

-## Build the Shared Library

+ git submodule init

+ git submodule update

+

+

+Build the Shared Library

+------------------------

Our goal is to build the shared libraries:

+

- On Linux the target library are `libtvm.so, libtvm_topi.so`

- On OSX the target library are `libtvm.dylib, libtvm_topi.dylib`

- On Windows the target library are `libtvm.dll, libtvm_topi.dll`

-```bash

-sudo apt-get update

-sudo apt-get install -y python python-dev python-setuptools gcc libtinfo-dev zlib1g-dev

-```

-The minimal building requirement is

+.. code:: bash

+

+ sudo apt-get update

+ sudo apt-get install -y python python-dev python-setuptools gcc libtinfo-dev zlib1g-dev

+

+The minimal build requirements are:

+

- A recent c++ compiler supporting C++ 11 (g++-4.8 or higher)

- We highly recommend to build with LLVM to enable all the features.

- It is possible to build without llvm dependency if we only want to use CUDA/OpenCL

-The configuration of tvm can be modified by ```config.mk```

-- First copy ```make/config.mk``` to the project root, on which

+The configuration of tvm can be modified by ``config.mk``.

+

+- First copy ``make/config.mk`` to the project root, on which

any local modification will be ignored by git, then modify the according flags.

- - On macOS, for some versions of XCode, you need to add ```-lc++abi``` in the LDFLAGS or you'll get link errors.

+

+ - On macOS, for some versions of Xcode, you need to add ``-lc++abi`` to LDFLAGS; otherwise you will get link errors.

+

- TVM optionally depends on LLVM. LLVM is required for CPU codegen that needs LLVM.

+

- LLVM 4.0 or higher is needed for build with LLVM. Note that verison of LLVM from default apt may lower than 4.0.

- Since LLVM takes long time to build from source, you can download pre-built version of LLVM from

[LLVM Download Page](http://releases.llvm.org/download.html).

- - Unzip to a certain location, modify ```config.mk``` to add ```LLVM_CONFIG=/path/to/your/llvm/bin/llvm-config```

+

+ - Unzip it to a location of your choice, then modify ``config.mk`` to add ``LLVM_CONFIG=/path/to/your/llvm/bin/llvm-config``

+

- You can also use [LLVM Nightly Ubuntu Build](https://apt.llvm.org/)

- - Note that apt-package append ```llvm-config``` with version number.

- For example, set ```LLVM_CONFIG=llvm-config-4.0``` if you installed 4.0 package

+

+ - Note that the apt package appends the version number to ``llvm-config``.

+ For example, set ``LLVM_CONFIG=llvm-config-4.0`` if you installed the 4.0 package.

We can then build tvm by `make`.

-```bash

-make -j4

-```

+.. code:: bash

+

+ make -j4

After we build tvm, we can proceed to build nnvm using the following script.

-```bash

-cd nnvm

-make -j4

-```

+.. code:: bash

+

+ cd nnvm

+ make -j4

+

This will creates `libnnvm_compiler.so` under the `nnvm/lib` folder.

If everything goes well, we can go to the specific language installation section.

-### Building on Windows

+Building on Windows

+~~~~~~~~~~~~~~~~~~~

TVM support build via MSVC using cmake. The minimum required VS version is **Visual Studio Community 2015 Update 3**.

In order to generate the VS solution file using cmake,

make sure you have a recent version of cmake added to your path and then from the tvm directory:

-```bash

-mkdir build

-cd build

-cmake -G "Visual Studio 14 2015 Win64" -DCMAKE_BUILD_TYPE=Release -DCMAKE_CONFIGURATION_TYPES="Release" ..

-```

+.. code:: bash

+

+ mkdir build

+ cd build

+ cmake -G "Visual Studio 14 2015 Win64" -DCMAKE_BUILD_TYPE=Release -DCMAKE_CONFIGURATION_TYPES="Release" ..

+

This will generate the VS project using the MSVC 14 64 bit generator.

Open the .sln file in the build directory and build with Visual Studio.

In order to build with LLVM in windows, you will need to build LLVM from source.

You need to run build the nnvm by running the same script under the nnvm folder.

-### Building ROCm support

-Currently, ROCm is supported only on linux, so all the instructions are written with linux in mind.

-- Set ```USE_ROCM=1```, set ROCM_PATH to the correct path.

-- You need to first install HIP runtime from ROCm. Make sure the installation system has ROCm installed in it.

-- Install latest stable version of LLVM (v6.0.1), and LLD, make sure ```ld.lld``` is available via command line.

+Building ROCm support

+~~~~~~~~~~~~~~~~~~~~~

-## Python Package Installation

+Currently, ROCm is supported only on Linux, so all the instructions are written with Linux in mind.

+

+- Set ``USE_ROCM=1`` and set ``ROCM_PATH`` to the correct path.

+- First install the HIP runtime from ROCm, and make sure ROCm is installed on the build system.

+- Install the latest stable version of LLVM (v6.0.1) and LLD, and make sure ``ld.lld`` is available on the command line.

+

+Python Package Installation

+---------------------------

The python package is located at python

There are several ways to install the package:

@@ -97,22 +115,31 @@ There are several ways to install the package:

1. Set the environment variable `PYTHONPATH` to tell python where to find

the library. For example, assume we cloned `tvm` on the home directory

`~`. then we can added the following line in `~/.bashrc`.

- It is ***recommended for developers*** who may change the codes.

- The changes will be immediately reflected once you pulled the code and rebuild the project (no need to call ```setup``` again)

+ It is **recommended for developers** who may change the code.

+ The changes will be reflected immediately once you pull the code and rebuild the project (no need to call ``setup`` again).

+

+ .. code:: bash

+

+ export PYTHONPATH=/path/to/tvm/python:/path/to/tvm/topi/python:/path/to/tvm/nnvm/python:${PYTHONPATH}

- ```bash

- export PYTHONPATH=/path/to/tvm/python:/path/to/tvm/topi/python:/path/to/tvm/nnvm/python:${PYTHONPATH}

- ```

2. Install tvm python bindings by `setup.py`:

- ```bash

- # install tvm package for the current user

- # NOTE: if you installed python via homebrew, --user is not needed during installaiton

- # it will be automatically installed to your user directory.

- # providing --user flag may trigger error during installation in such case.

- export MACOSX_DEPLOYMENT_TARGET=10.9 # This is required for mac to avoid symbol conflicts with libstdc++

- cd python; python setup.py install --user; cd ..

- cd topi/python; python setup.py install --user; cd ../..

- cd nnvm/python; python setup.py install --user; cd ../..

- ```

+ .. code:: bash

+

+ # install tvm package for the current user

+ # NOTE: if you installed python via homebrew, --user is not needed during installation;

+ # it will be automatically installed to your user directory.

+ # providing the --user flag may trigger an error during installation in that case.

+ export MACOSX_DEPLOYMENT_TARGET=10.9 # This is required for mac to avoid symbol conflicts with libstdc++

+ cd python; python setup.py install --user; cd ..

+ cd topi/python; python setup.py install --user; cd ../..

+ cd nnvm/python; python setup.py install --user; cd ../..

+

+Install Contrib Libraries

+-------------------------

+

+.. toctree::

+ :maxdepth: 1

+

+ nnpack
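The installation guide requires LLVM 4.0 or higher and notes that apt packages append the version number to `llvm-config`. As a minimal sketch, the Python helper below looks for an `llvm-config` binary on `PATH` and checks the reported version. It assumes `llvm-config --version` prints a dotted version such as `4.0.1` (possibly followed by a suffix like `svn`). The helper names and the list of candidate binary names are illustrative assumptions, not part of TVM.

```python
import re
import shutil
import subprocess

# Assumed, non-exhaustive candidates: plain name first, then apt-style
# versioned names such as llvm-config-4.0.
CANDIDATES = ("llvm-config", "llvm-config-6.0", "llvm-config-5.0", "llvm-config-4.0")


def parse_llvm_version(text):
    """Parse ``llvm-config --version`` output into a (major, minor) tuple."""
    match = re.match(r"\s*(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError("unrecognized llvm-config version: %r" % text)
    return int(match.group(1)), int(match.group(2))


def find_llvm_config(candidates=CANDIDATES):
    """Return the full path of the first candidate found on PATH, or None."""
    for name in candidates:
        path = shutil.which(name)
        if path is not None:
            return path
    return None


def check_llvm(minimum=(4, 0)):
    """Return (path, version, ok) for the first llvm-config found, or None."""
    path = find_llvm_config()
    if path is None:
        return None
    out = subprocess.run([path, "--version"], stdout=subprocess.PIPE,
                         universal_newlines=True, check=True).stdout
    version = parse_llvm_version(out)
    # Tuples compare element-wise, so (3, 9) < (4, 0) <= (6, 0).
    return path, version, version >= minimum


if __name__ == "__main__":
    result = check_llvm()
    if result is None:
        print("no llvm-config found on PATH")
    else:
        path, version, ok = result
        status = "ok" if ok else "too old, need >= 4.0"
        print("%s reports LLVM %d.%d (%s)" % (path, version[0], version[1], status))
```

If the check passes, the printed path is a reasonable value for `LLVM_CONFIG` in `config.mk`.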

diff --git a/docs/how_to/nnpack.md b/docs/install/nnpack.md

similarity index 65%

rename from docs/how_to/nnpack.md

rename to docs/install/nnpack.md

index d271af86..1e9bd22b 100644

--- a/docs/how_to/nnpack.md

+++ b/docs/install/nnpack.md

@@ -1,4 +1,4 @@

-### NNPACK for Multi-Core CPU Support in TVM

+# NNPACK Contrib Installation

[NNPACK](https://github.com/Maratyszcza/NNPACK) is an acceleration package

for neural network computations, which can run on x86-64, ARMv7, or ARM64 architecture CPUs.

@@ -11,7 +11,7 @@ For regular use prefer native tuned TVM implementation.

_TVM_ supports NNPACK for forward propagation (inference only) in convolution, max-pooling, and fully-connected layers.

In this document, we give a high level overview of how to use NNPACK with _TVM_.

-### Conditions

+## Conditions

The underlying implementation of NNPACK utilizes several acceleration methods,

including [fft](https://arxiv.org/abs/1312.5851) and [winograd](https://arxiv.org/abs/1509.09308).

These algorithms work better on some special `batch size`, `kernel size`, and `stride` settings than on other,

@@ -19,48 +19,8 @@ so depending on the context, not all convolution, max-pooling, or fully-connecte

When favorable conditions for running NNPACKS are not met,

NNPACK only supports Linux and OS X systems. Windows is not supported at present.

-The following table explains under which conditions NNPACK will work.

-| operation | conditions |

-|:--------- |:---------- |

-|convolution |2d convolution `and` no-bias=False `and` dilate=(1,1) `and` num_group=1 `and` batch-size = 1 or batch-size > 1 && stride = (1,1);|

-|pooling | max-pooling `and` kernel=(2,2) `and` stride=(2,2) `and` pooling_convention=full |

-|fully-connected| without any restrictions |

-

-### Build/Install LLVM

-LLVM is required for CPU codegen that needs LLVM.

-Since LLVM takes long time to build from source, you can download pre-built version of LLVM from [LLVM Download Page](http://releases.llvm.org/download.html).

-For llvm 4.0 you can do the following step :

-

-```bash

-# Add llvm repository in apt source list

-echo "deb http://apt.llvm.org/xenial/ llvm-toolchain-xenial-4.0 main" >> /etc/apt/sources.list

-

-# Update apt source list

-apt-get update

-# Install clang and full llvm

-apt-get install -y \

- clang-4.0 \

- clang-4.0-doc \

- libclang-common-4.0-dev \

- libclang-4.0-dev \

- libclang1-4.0 \

- libclang1-4.0-dbg \

- libllvm-4.0-ocaml-dev \

- libllvm4.0 \

- libllvm4.0-dbg \

- lldb-4.0 \

- llvm-4.0 \

- llvm-4.0-dev \

- llvm-4.0-doc \

- llvm-4.0-examples \

- llvm-4.0-runtime \

- clang-format-4.0 \

- python-clang-4.0 \

- libfuzzer-4.0-dev

-```

-

-### Build/Install NNPACK

+## Build/Install NNPACK

If the trained model meets some conditions of using NNPACK,

you can build TVM with NNPACK support.

@@ -69,7 +29,7 @@ Follow these simple steps:

Note: The following NNPACK installation instructions have been tested on Ubuntu 16.04.

-#### Build [Ninja](https://ninja-build.org/)

+### Build [Ninja](https://ninja-build.org/)

NNPACK need a recent version of Ninja. So we need to install ninja from source.

```bash

@@ -83,7 +43,7 @@ Set the environment variable PATH to tell bash where to find the ninja executabl

export PATH="${PATH}:~/ninja"

```

-#### Build [NNPACK](https://github.com/Maratyszcza/NNPACK)

+### Build [NNPACK](https://github.com/Maratyszcza/NNPACK)

The new CMAKE version of NNPACK download [Peach](https://github.com/Maratyszcza/PeachPy) and other dependencies alone

@@ -105,7 +65,7 @@ echo "/usr/local/lib" > /etc/ld.so.conf.d/nnpack.conf

sudo ldconfig

```

-### Build TVM with NNPACK support

+## Build TVM with NNPACK support

```bash

git clone --recursive https://github.com/dmlc/tvm

diff --git a/jvm/README.md b/jvm/README.md

index 24387586..46b37767 100644

--- a/jvm/README.md

+++ b/jvm/README.md

@@ -27,7 +27,7 @@ TVM4J contains three modules:

### Build

-First please refer to [Installation Guide](http://docs.tvm.ai/how_to/install.html) and build runtime shared library from the C++ codes (libtvm\_runtime.so for Linux and libtvm\_runtime.dylib for OSX).

+First, refer to the [Installation Guide](http://docs.tvm.ai/install/) and build the runtime shared library from the C++ code (libtvm\_runtime.so for Linux and libtvm\_runtime.dylib for OSX).

Then you can compile tvm4j by

diff --git a/python/tvm/api.py b/python/tvm/api.py

index 71ae62a3..081b67cb 100644

--- a/python/tvm/api.py

+++ b/python/tvm/api.py

@@ -576,13 +576,16 @@ def reduce_axis(dom, name="rv"):

def select(cond, t, f):

- """Construct a select branch

+ """Construct a select branch.

+

Parameters

----------

cond : Expr

The condition

+

t : Expr

The result expression if cond is true.

+

f : Expr

The result expression if cond is false.

@@ -593,6 +596,7 @@ def select(cond, t, f):

"""

return _make.Select(convert(cond), convert(t), convert(f))

+

def comm_reducer(fcombine, fidentity, name="reduce"):

"""Create a commutative reducer for reduction.