Open Platform for AI (PAI)

Introduction

Open Platform for AI (PAI) is a platform for cluster management and resource scheduling. The platform incorporates mature designs with a proven track record in Microsoft's large-scale production environment.

PAI supports AI jobs (e.g., deep learning jobs) running on a GPU cluster. The platform provides runtime environment support with which existing deep learning frameworks, e.g., CNTK and TensorFlow, can onboard PAI without any code changes. This runtime environment support also makes the platform highly extensible: new workloads can use it to run on PAI with just a few extra lines of script and/or Python code.
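To illustrate what "a few extra lines of Python" might look like, the sketch below turns environment variables injected into a job container into a TensorFlow-style cluster specification, so the training code itself stays unchanged. The variable names (`PAI_WORKER_HOSTS`, `PAI_PS_HOSTS`, `PAI_TASK_INDEX`) and the function name are hypothetical placeholders, not the names PAI actually exports; check the job tutorial for the real runtime variables.

```python
import os
from typing import Dict, List, Mapping, Optional


def build_cluster_spec(env: Optional[Mapping[str, str]] = None) -> Dict[str, object]:
    """Build a TensorFlow-style cluster spec from runtime environment variables.

    Assumption: the runtime exposes comma-separated host:port lists per role
    and the index of the current task. All variable names are hypothetical.
    """
    env = os.environ if env is None else env

    def hosts(var: str) -> List[str]:
        # Split a comma-separated host list, dropping empty entries.
        return [h.strip() for h in env.get(var, "").split(",") if h.strip()]

    return {
        "cluster": {
            "worker": hosts("PAI_WORKER_HOSTS"),  # hypothetical variable
            "ps": hosts("PAI_PS_HOSTS"),          # hypothetical variable
        },
        "task_index": int(env.get("PAI_TASK_INDEX", "0")),  # hypothetical variable
    }


if __name__ == "__main__":
    example_env = {
        "PAI_WORKER_HOSTS": "10.0.0.1:2222,10.0.0.2:2222",
        "PAI_PS_HOSTS": "10.0.0.3:2222",
        "PAI_TASK_INDEX": "1",
    }
    print(build_cluster_spec(example_env))
```

Keeping this translation in a small wrapper, rather than in the training script, is what lets existing framework code run as-is.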

PAI supports GPU scheduling, a key requirement of deep learning jobs. For better performance, PAI supports fine-grained, topology-aware job placement: a job can request GPUs at a specific location (e.g., under the same PCI-E switch).
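A minimal sketch of topology-aware GPU selection, assuming a node's topology is given as a mapping from GPU index to the ID of the PCI-E switch it sits under. The function name, the data layout, and the best-fit policy are illustrative assumptions; PAI's actual placement logic lives in its YARN GPU enhancement and may differ.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Set


def pick_gpus_same_switch(
    topology: Dict[int, str], free: Set[int], count: int
) -> Optional[List[int]]:
    """Return `count` free GPUs that share one PCI-E switch, or None.

    topology: GPU index -> PCI-E switch ID (hypothetical representation).
    free: indices of GPUs not currently allocated.
    """
    if count <= 0:
        return []

    # Group the free GPUs by the switch they are attached to.
    by_switch: Dict[str, List[int]] = defaultdict(list)
    for gpu in sorted(free):
        if gpu in topology:
            by_switch[topology[gpu]].append(gpu)

    # Best fit: choose the smallest group that still satisfies the request,
    # keeping larger groups intact for future multi-GPU jobs.
    candidates = [gpus for gpus in by_switch.values() if len(gpus) >= count]
    if not candidates:
        return None
    best = min(candidates, key=len)
    return best[:count]


if __name__ == "__main__":
    # Hypothetical 4-GPU node: GPUs 0-1 under switch "A", GPUs 2-3 under "B".
    node = {0: "A", 1: "A", 2: "B", 3: "B"}
    print(pick_gpus_same_switch(node, free={0, 2, 3}, count=2))  # -> [2, 3]
```

Returning `None` instead of spreading the job across switches reflects the idea of requesting GPUs at a specific location; a real scheduler might instead fall back to a looser placement.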

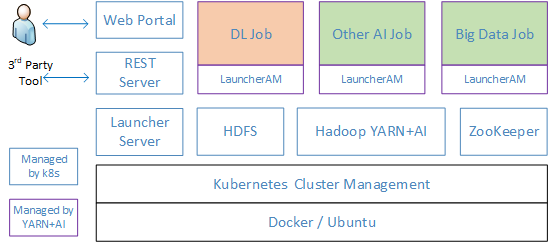

PAI embraces a microservices architecture: every component runs in a container. The system uses Kubernetes to deploy and manage its static components, while the more dynamic deep learning jobs are scheduled and managed by Hadoop YARN with our GPU enhancement. Training data and training results are stored in Hadoop HDFS.

An Open AI Platform for R&D and Education

One key purpose of PAI is to support the highly diverse requirements of academia and industry. PAI is completely open: it is released under the MIT license. PAI is also modular: different modules can be plugged in as appropriate. This makes PAI particularly attractive for evaluating research ideas in areas including, but not limited to, the following:

- Scheduling mechanisms for deep learning workloads

- Deep neural network applications that require evaluation in a realistic platform environment

- New deep learning frameworks

- AutoML

- Compiler techniques for AI

- High-performance networking for AI

- Profiling tools, including network, platform, and AI job profiling

- AI benchmark suites

- New hardware for AI, including FPGAs, ASICs, and neural processors

- AI storage support

- AI platform management

PAI operates in an open model. It was initially designed and developed by Microsoft Research (MSR) and the Microsoft Search Technology Center (STC) platform team. We are glad to have Peking University, Xi'an Jiaotong University, Zhejiang University, and the University of Science and Technology of China join us in developing the platform. Contributions from academia and industry are highly welcome.

System Deployment

Prerequisites

The system runs on a cluster of machines, each equipped with one or more GPUs. Each machine in the cluster should:

- Run Ubuntu 16.04 LTS.

- Have a static IP address assigned.

- Have either no Docker installed or a Docker installation with API version 1.26 or later.

- Have access to a Docker registry service (e.g., Docker Hub) to store the Docker images for the services to be deployed.

The system also requires a dev machine that runs in the same environment and has full access to the cluster. In addition, the system needs an NTP service for clock synchronization.
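A minimal sketch of a pre-deployment check for two of the requirements above: the OS release and the Docker API version. It parses the contents of `/etc/os-release` and a Docker API version string; on a real machine that string could come from `docker version --format '{{.Server.APIVersion}}'`. The helper names are hypothetical and not part of PAI's deployment tooling.

```python
from typing import Dict, Optional

MIN_DOCKER_API = (1, 26)  # minimum Docker API version from the list above


def parse_os_release(text: str) -> Dict[str, str]:
    """Parse KEY=value lines (the /etc/os-release format) into a dict."""
    info: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        info[key] = value.strip().strip('"')
    return info


def is_ubuntu_1604(os_release_text: str) -> bool:
    """True if the os-release contents describe Ubuntu 16.04."""
    info = parse_os_release(os_release_text)
    return info.get("ID") == "ubuntu" and info.get("VERSION_ID") == "16.04"


def docker_ok(api_version: Optional[str]) -> bool:
    """True if Docker is absent (None) or its API version is >= 1.26."""
    if api_version is None:
        return True  # "no Docker installed" is also acceptable
    version = tuple(int(part) for part in api_version.strip().split("."))
    return version >= MIN_DOCKER_API
```

Comparing versions as integer tuples matters here: a plain string comparison would rank "1.9" above "1.26".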

Deployment process

Deploying and using the system consists of the following steps:

- Deploy PAI following our bootup process

- Access the web portal for job submission and cluster management

Job management

After the system services have been deployed, users can access the web portal, a web UI, for job and cluster management. Please refer to the job tutorial for details about job submission.
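As a rough picture of what a job submission carries, the sketch below builds a single-role job description and serializes it to JSON. The field names (`jobName`, `image`, `taskRoles`, `taskNumber`, `gpuNumber`, `command`) and helper names are illustrative assumptions rather than a verified PAI schema; the job tutorial is the authoritative reference.

```python
import json
from typing import Any, Dict, List


def make_job(name: str, image: str, command: str,
             gpus: int = 1, tasks: int = 1) -> Dict[str, Any]:
    """Build a hypothetical single-role job description."""
    if gpus < 0 or tasks < 1:
        raise ValueError("gpus must be >= 0 and tasks >= 1")
    return {
        "jobName": name,
        "image": image,  # Docker image pulled from the registry
        "taskRoles": [
            {
                "name": "main",
                "taskNumber": tasks,  # number of task instances
                "gpuNumber": gpus,    # GPUs per task instance
                "command": command,
            }
        ],
    }


def total_gpus(job: Dict[str, Any]) -> int:
    """GPUs requested across all task instances of all roles."""
    roles: List[Dict[str, Any]] = job["taskRoles"]
    return sum(r["taskNumber"] * r["gpuNumber"] for r in roles)


if __name__ == "__main__":
    job = make_job("mnist-demo", "my-registry/tensorflow:latest",
                   "python train.py", gpus=2, tasks=2)
    print(json.dumps(job, indent=2))
    print(total_gpus(job))
```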

Cluster management

The web portal also provides a web UI for cluster management.

System Architecture

The system architecture is illustrated above. Users submit jobs or monitor cluster status through the web portal, which calls APIs provided by the REST server. Third-party tools can also call the REST server directly for job management. Upon receiving API calls, the REST server coordinates with FrameworkLauncher (Launcher for short) to perform job management. The Launcher server handles requests from the REST server and submits jobs to Hadoop YARN. Jobs scheduled by YARN with the GPU enhancement can use the GPUs in the cluster for deep learning computation. Other types of CPU-based AI workloads or traditional big data jobs can also run on the platform alongside the GPU-based jobs. The platform uses HDFS to store data, and all jobs are assumed to support HDFS. All the static services (blue-lined boxes) are managed by Kubernetes, while jobs (purple-lined boxes) are managed by Hadoop YARN.
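The request path described above can be summarized as a toy model. Every class and method name here is hypothetical; the code only mirrors the roles of the web portal, REST server, Launcher, and YARN and the order in which a job submission flows through them, not their real interfaces.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Yarn:
    """Stands in for Hadoop YARN, which schedules and manages the jobs."""
    running: List[str] = field(default_factory=list)

    def submit(self, job_name: str) -> str:
        self.running.append(job_name)
        return f"yarn:{job_name}"


@dataclass
class Launcher:
    """Stands in for FrameworkLauncher: forwards job requests to YARN."""
    yarn: Yarn

    def launch(self, job_name: str) -> str:
        return self.yarn.submit(job_name)


@dataclass
class RestServer:
    """Stands in for the REST server: entry point for the portal and third-party tools."""
    launcher: Launcher

    def submit_job(self, job_name: str) -> str:
        return self.launcher.launch(job_name)


@dataclass
class WebPortal:
    """Stands in for the web portal: a UI that only talks to the REST server."""
    rest: RestServer

    def submit(self, job_name: str) -> str:
        return self.rest.submit_job(job_name)


if __name__ == "__main__":
    yarn = Yarn()
    rest = RestServer(Launcher(yarn))
    portal = WebPortal(rest)
    portal.submit("job-a")    # a user submitting through the web portal
    rest.submit_job("job-b")  # a third-party tool calling the REST server directly
    print(yarn.running)       # ['job-a', 'job-b']
```

Because the portal holds only a reference to the REST server, any client that reaches the REST server, such as a third-party tool, follows the same path from that point on.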

Contributing

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.microsoft.com.

When you submit a pull request, a CLA-bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., label, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information, see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.