Responsible AI Toolbox

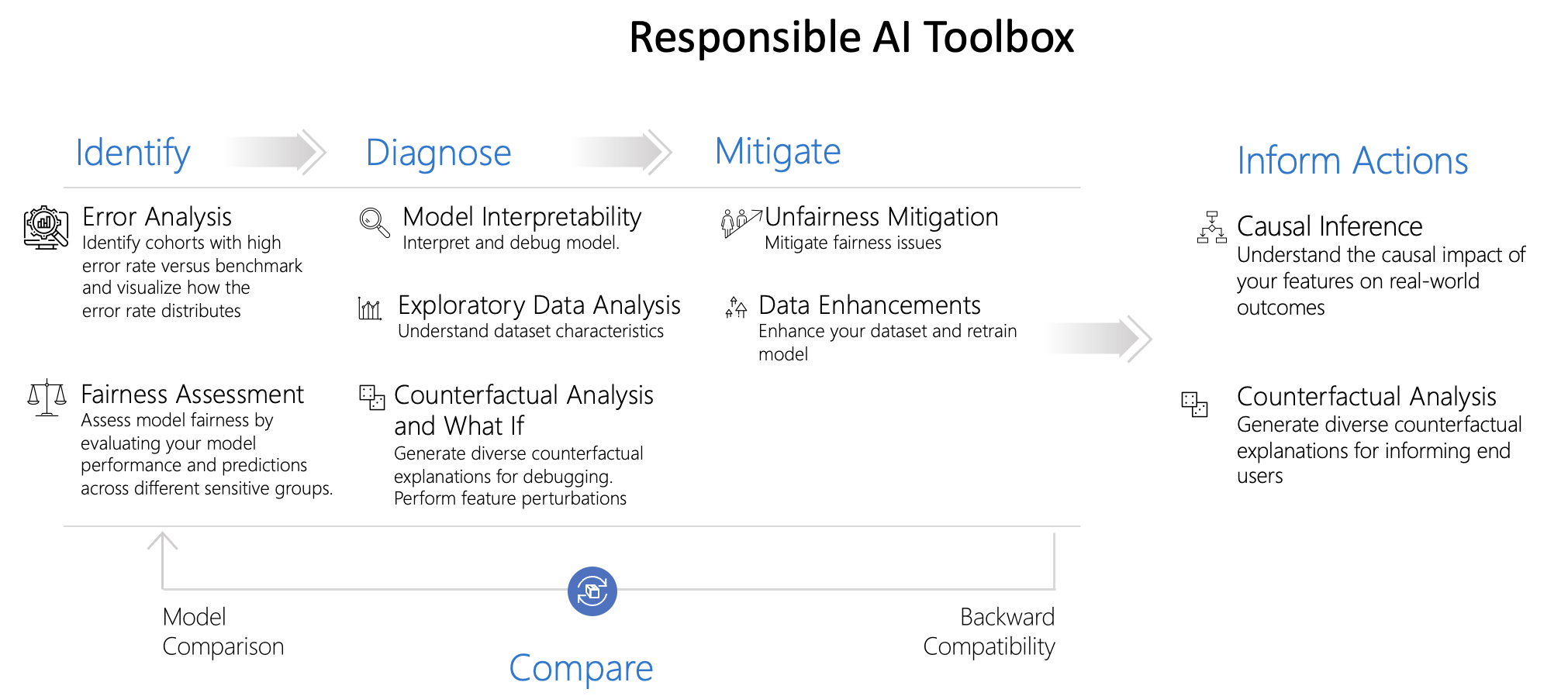

Responsible AI is an approach to assessing, developing, and deploying AI systems in a safe, trustworthy, and ethical manner, and to making responsible decisions and taking responsible actions.

Responsible AI Toolbox is a suite of tools providing a collection of model and data exploration and assessment user interfaces and libraries that enable a better understanding of AI systems. These interfaces and libraries empower developers and stakeholders of AI systems to develop and monitor AI more responsibly, and take better data-driven actions.

The Toolbox consists of four repositories:

| Repository | Tools Covered |
|---|---|
| Responsible-AI-Toolbox Repository (Here) | This repository contains four visualization widgets for model assessment and decision making: 1. Responsible AI dashboard, a single pane of glass bringing together several mature Responsible AI tools from the toolbox for holistic assessment and debugging of models and for making informed business decisions. With this dashboard, you can identify model errors, diagnose why those errors are happening, and mitigate them. Moreover, the causal decision-making capabilities provide actionable insights to your stakeholders and customers. 2. Error Analysis dashboard, for identifying model errors and discovering cohorts of data for which the model underperforms. 3. Interpretability dashboard, for understanding model predictions. This dashboard is powered by InterpretML. 4. Fairness dashboard, for understanding a model's fairness issues using various group-fairness metrics across sensitive features and cohorts. This dashboard is powered by Fairlearn. |
| Responsible-AI-Toolbox-Mitigations Repository | The Responsible AI Mitigations Library helps AI practitioners explore different measurements and mitigation steps that may be most appropriate when the model underperforms for a given data cohort. The library currently has three modules: 1. DataProcessing, which offers mitigation techniques for improving model performance for specific cohorts. 2. DataBalanceAnalysis, which provides metrics for diagnosing errors that originate from data imbalance in either class labels or feature values. 3. Cohort, which provides classes for handling and managing cohorts and allows the creation of custom pipelines for each cohort through an easy and intuitive interface. The module also provides techniques for learning different decoupled estimators (models) for different cohorts and combining them in a way that optimizes different definitions of group fairness. |
| Responsible-AI-Tracker Repository | Responsible AI Toolbox Tracker is a JupyterLab extension for managing, tracking, and comparing results of machine learning experiments for model improvement. Using this extension, users can view models, code, and visualization artifacts within the same framework, enabling fast model iteration and evaluation. Main functionalities include: 1. Managing and linking model improvement artifacts 2. Disaggregated model evaluation and comparisons 3. Integration with the Responsible AI Mitigations library 4. Integration with MLflow |
| Responsible-AI-Toolbox-GenBit Repository | The Responsible AI Gender Bias (GenBit) Library helps AI practitioners measure gender bias in Natural Language Processing (NLP) datasets. The main goal of GenBit is to analyze your text corpora and compute metrics that give insights into the gender bias present in a corpus. |

Introducing Responsible AI dashboard

Responsible AI dashboard is a single pane of glass, enabling you to easily flow through different stages of model debugging and decision-making. This customizable experience can be taken in a multitude of directions, from analyzing the model or data holistically, to conducting a deep dive or comparison on cohorts of interest, to explaining and perturbing model predictions for individual instances, and to informing users on business decisions and actions.

To achieve these capabilities, the dashboard integrates ideas and technologies from several open-source toolkits in the following areas:

- Error Analysis, powered by Error Analysis, which identifies cohorts of data with a higher error rate than the overall benchmark. These discrepancies might occur when the system or model underperforms for specific demographic groups or for input conditions that are infrequently observed in the training data.

- Fairness Assessment, powered by Fairlearn, which identifies which groups of people may be disproportionately negatively impacted by an AI system and in what ways.

- Model Interpretability, powered by InterpretML, which explains black-box models, helping users understand their model's global behavior or the reasons behind individual predictions.

- Counterfactual Analysis, powered by DiCE, which shows feature-perturbed versions of the same data point that would have received a different prediction outcome. For example, Taylor's loan was rejected by the model, but they would have received it if their income had been $10,000 higher.

- Causal Analysis, powered by EconML, which focuses on answering what-if questions to support data-driven decision-making: how would revenue be affected if a corporation pursued a new pricing strategy? Would a new medication improve a patient's condition, all else being equal?

- Data Balance, powered by Responsible AI, which helps users gain an overall understanding of their data, identify features receiving the positive outcome more than others, and visualize feature distributions.
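To make the first two ideas concrete, here is a minimal, hand-rolled Python sketch of the quantities involved: the error rate of each data cohort compared with the overall benchmark error rate (the comparison Error Analysis surfaces), and the per-group selection rate together with the gap between the highest and lowest rates (one kind of group-fairness metric Fairlearn reports). It uses only the standard library. The function names and toy data are hypothetical and are not the API of Error Analysis or Fairlearn; those tools also discover cohorts for you (Error Analysis, for example, with a decision tree) rather than taking cohort labels as input.

```python
from collections import defaultdict


def rate_by_group(groups, flags):
    """Fraction of 1s in `flags` for each group label (hypothetical helper)."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for group, flag in zip(groups, flags):
        totals[group] += 1
        hits[group] += flag
    return {group: hits[group] / totals[group] for group in totals}


def cohort_error_rates(cohorts, y_true, y_pred):
    """Overall error rate, error rate per cohort, and cohorts above the benchmark."""
    errors = [int(t != p) for t, p in zip(y_true, y_pred)]
    if not errors:
        raise ValueError("need at least one prediction")
    overall = sum(errors) / len(errors)
    per_cohort = rate_by_group(cohorts, errors)
    # Cohorts whose error rate exceeds the overall benchmark
    above = {c: r for c, r in per_cohort.items() if r > overall}
    return overall, per_cohort, above


def selection_rate_difference(groups, y_pred, positive=1):
    """Positive-prediction rate per group and the max-min gap between groups."""
    rates = rate_by_group(groups, [int(p == positive) for p in y_pred])
    return rates, max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    cohorts = ["A", "A", "B", "B", "B", "C"]  # hypothetical cohort / group labels
    y_true = [1, 0, 1, 1, 0, 0]
    y_pred = [1, 0, 0, 1, 1, 0]
    overall, per_cohort, above = cohort_error_rates(cohorts, y_true, y_pred)
    print(overall, per_cohort, above)
    print(selection_rate_difference(cohorts, y_pred))
```

In this toy data, cohort "B" is the only one whose error rate exceeds the overall benchmark, which is the kind of cohort the Error Analysis view is meant to surface.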

Responsible AI dashboard is designed to achieve the following goals:

- To help further accelerate engineering processes in machine learning by enabling practitioners to design customizable workflows and tailor Responsible AI dashboards that best fit their model assessment and data-driven decision-making scenarios.

- To help model developers create end-to-end, fluid debugging experiences and navigate seamlessly through error identification and diagnosis by using interactive visualizations that identify errors, inspect the data, generate global and local explanations of models, and potentially inspect problematic examples.

- To help business stakeholders explore causal relationships in the data and make informed decisions in the real world.

This repository contains the Jupyter notebooks with examples to showcase how to use this widget. Get started here.

Installation

Use the following pip command to install the Responsible AI Toolbox.

If you are running in Jupyter, please make sure to restart the Jupyter kernel after installing.

pip install raiwidgets
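As an optional check, a sketch like the following (standard library only, Python 3.8 or later) reports which raiwidgets version pip installed into the current environment, or None if it is absent. The helper name is hypothetical and is not part of the Toolbox, and the check does not replace restarting the Jupyter kernel so that it picks up the newly installed packages.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package="raiwidgets"):
    """Return the installed version of `package`, or None if it is not installed."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_version()
    print(found if found else "raiwidgets not found; run: pip install raiwidgets")
```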

Responsible AI dashboard Customization

The Responsible AI Toolbox’s strength lies in its customizability. It empowers users to design tailored, end-to-end model debugging and decision-making workflows that address their particular needs. Need some inspiration? Here are some examples of how Toolbox components can be put together to analyze scenarios in different ways:

Please note that the model overview (including fairness analysis) and data explorer components are activated by default!

| Responsible AI Dashboard Flow | Use Case |
|---|---|
| Model Overview -> Error Analysis -> Data Explorer | To identify model errors and diagnose them by understanding the underlying data distribution |
| Model Overview -> Fairness Assessment -> Data Explorer | To identify model fairness issues and diagnose them by understanding the underlying data distribution |
| Model Overview -> Error Analysis -> Counterfactuals Analysis and What-If | To diagnose errors in individual instances with counterfactual analysis (the minimum change that leads to a different model prediction) |
| Model Overview -> Data Explorer -> Data Balance | To understand the root cause of errors and fairness issues introduced via data imbalances or lack of representation of a particular data cohort |
| Model Overview -> Interpretability | To diagnose model errors through understanding how the model has made its predictions |
| Data Explorer -> Causal Inference | To distinguish between correlation and causation in the data or decide the best treatments to apply to see a positive outcome |
| Interpretability -> Causal Inference | To learn whether the factors that the model has used for decision-making have any causal effect on the real-world outcome |
| Data Explorer -> Counterfactuals Analysis and What-If | To address customer questions about what they can do next time to get a different outcome from an AI system |
| Data Explorer -> Data Balance | To gain an overall understanding of the data, identify features receiving the positive outcome more than others, and visualize feature distributions |
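The counterfactual flows rely on the idea of the minimum change that flips a prediction. The sketch below illustrates that idea with a brute-force search over a single feature of a hypothetical loan model, echoing the Taylor example: it raises income in fixed steps until the decision changes. It is only an illustration under simplifying assumptions (one feature, an upward-only search, a made-up scoring rule, hypothetical function names); DiCE, which powers the dashboard's counterfactual analysis, searches over multiple features and returns diverse sets of counterfactuals.

```python
def toy_loan_model(applicant):
    """Hypothetical model: approve (1) when a weighted score reaches 40."""
    score = 0.5 * applicant["income_k"] + 2.0 * applicant["years_employed"]
    return int(score >= 40.0)


def single_feature_counterfactual(model, instance, feature, step, max_steps=100):
    """Smallest increase of `feature`, in multiples of `step`, that changes the prediction.

    Returns (counterfactual_instance, change), or (None, None) if no change is
    found within `max_steps` steps.
    """
    original = model(instance)
    for k in range(1, max_steps + 1):
        candidate = dict(instance)  # copy so the original instance is untouched
        candidate[feature] = instance[feature] + k * step
        if model(candidate) != original:
            return candidate, k * step
    return None, None


if __name__ == "__main__":
    taylor = {"income_k": 62, "years_employed": 2}  # income in thousands of dollars
    print(toy_loan_model(taylor))  # 0 = rejected
    cf, change = single_feature_counterfactual(toy_loan_model, taylor, "income_k", step=10)
    print(cf, change)
```

Here the search finds that raising income by 10 (thousand dollars) flips the toy model from rejection to approval, mirroring the "$10,000 higher" example.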

Useful Links

Model Debugging Examples:

- Try the tool: model debugging of a census income prediction model (classification)

- Try the tool: model debugging of a housing price prediction model (classification)

- Try the tool: model debugging of a diabetes progression prediction model (regression)

- Try the tool: model debugging of a fridge image classification model

- Try the tool: model debugging of a fridge multilabel image classification model

- Try the tool: model debugging of a fridge object detection model

Responsible Decision Making Examples:

- Try the tool: make decisions for house improvements

- Try the tool: provide recommendations to patients using diabetes data

Supported Models

This Responsible AI Toolbox API supports models that are trained on datasets in Python numpy.ndarray, pandas.DataFrame, iml.datatypes.DenseData, or scipy.sparse.csr_matrix format.

The explanation functions of Interpret-Community accept both models and pipelines as input, as long as the model or pipeline implements a predict or predict_proba function that conforms to the scikit-learn convention. If your model is not compatible, you can wrap its prediction function in a wrapper that transforms the output into a supported format (scikit-learn-style predict or predict_proba) and pass that wrapper to your selected interpretability techniques.
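A minimal sketch of such a wrapper, assuming a hypothetical model whose native output is a list of per-class probability dictionaries rather than arrays: the class below exposes predict_proba (an array of shape (n_samples, n_classes), with columns ordered as classes_) and predict (the most probable label per row), following the scikit-learn convention. The score function, class labels, and wrapper name are illustrative assumptions, and the sketch requires NumPy.

```python
import numpy as np


def hypothetical_score_fn(rows):
    """Stand-in for a model that returns one {class_label: probability} dict per row."""
    outputs = []
    for row in rows:
        p_yes = 1.0 / (1.0 + np.exp(-float(np.sum(row))))
        outputs.append({"no": 1.0 - p_yes, "yes": p_yes})
    return outputs


class SklearnStyleWrapper:
    """Expose predict / predict_proba in the scikit-learn convention."""

    def __init__(self, score_fn, class_labels):
        self.score_fn = score_fn
        self.classes_ = np.asarray(class_labels)

    def predict_proba(self, X):
        # Shape (n_samples, n_classes); column j holds the probability of classes_[j]
        scores = self.score_fn(np.asarray(X, dtype=float))
        return np.array([[s[label] for label in self.classes_.tolist()] for s in scores])

    def predict(self, X):
        # Most probable class label for each row
        return self.classes_[np.argmax(self.predict_proba(X), axis=1)]


if __name__ == "__main__":
    model = SklearnStyleWrapper(hypothetical_score_fn, ["no", "yes"])
    X = [[2.0, 1.0], [-3.0, 0.5]]
    print(model.predict_proba(X))
    print(model.predict(X))
```

Because the column order is fixed by classes_, predict and predict_proba stay consistent with each other, which is what scikit-learn-style consumers expect.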

If a pipeline script is provided, the explanation function assumes that running the pipeline script returns a prediction. The repository also supports models trained with the PyTorch, TensorFlow, and Keras deep learning frameworks.

Other Use Cases

Tools within the Responsible AI Toolbox can also be used with AI models offered as APIs by providers such as Azure Cognitive Services. For example use cases, see the folders below:

- Cognitive Services Speech to Text Fairness testing

- Cognitive Services Face Verification Fairness testing