Add files via upload

This commit is contained in:

Parent

4be159a331

Commit

1c1501738e

Binary file not shown.

|

|

@ -0,0 +1,13 @@

|

|||

Single Shot Seamless Object Pose Estimation

Copyright (c) Microsoft Corporation

All rights reserved.

MIT License

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the Software), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED *AS IS*, WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

|

||||

|

|

@ -0,0 +1,53 @@

|

|||

# Class to read a PLY mesh file (ASCII format)

class MeshPly:
    def __init__(self, filename, color=(0., 0., 0.)):

        self.vertices = []
        self.colors = []
        self.indices = []
        self.normals = []

        # Parser state: which section of the file is currently being read
        vertex_mode = False
        face_mode = False

        # Element counts declared in the header
        nb_vertices = 0
        nb_faces = 0

        # Number of elements read so far in the current section
        idx = 0

        with open(filename, 'r') as open_file_object:
            for line in open_file_object:
                elements = line.split()
                if vertex_mode:
                    # x y z nx ny nz [r g b]
                    self.vertices.append([float(i) for i in elements[:3]])
                    self.normals.append([float(i) for i in elements[3:6]])

                    if elements[6:9]:
                        self.colors.append([float(i) / 255. for i in elements[6:9]])
                    else:
                        # No per-vertex color in the file: use the default color
                        self.colors.append([float(i) / 255. for i in color])

                    idx += 1
                    if idx == nb_vertices:
                        vertex_mode = False
                        face_mode = True
                        idx = 0
                elif face_mode:
                    # The first element is the vertex count of the face; the
                    # next three are integer vertex indices
                    self.indices.append([int(i) for i in elements[1:4]])
                    idx += 1
                    if idx == nb_faces:
                        face_mode = False
                elif elements[0] == 'element':
                    if elements[1] == 'vertex':
                        nb_vertices = int(elements[2])
                    elif elements[1] == 'face':
                        nb_faces = int(elements[2])
                elif elements[0] == 'end_header':
                    vertex_mode = True


if __name__ == '__main__':
    path_model = ''
    mesh = MeshPly(path_model)

|

||||

142

README.md


|

|

@ -1,14 +1,136 @@

|

|||

# SINGLESHOTPOSE

|

||||

|

||||

# Contributing

|

||||

This is the code for the following paper:

|

||||

|

||||

This project welcomes contributions and suggestions. Most contributions require you to agree to a

|

||||

Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us

|

||||

the rights to use your contribution. For details, visit https://cla.microsoft.com.

|

||||

Bugra Tekin, Sudipta N. Sinha and Pascal Fua, "Real-Time Seamless Single Shot 6D Object Pose Prediction", CVPR 2018.

|

||||

|

||||

When you submit a pull request, a CLA-bot will automatically determine whether you need to provide

|

||||

a CLA and decorate the PR appropriately (e.g., label, comment). Simply follow the instructions

|

||||

provided by the bot. You will only need to do this once across all repos using our CLA.

|

||||

### Introduction

|

||||

|

||||

This project has adopted the [Microsoft Open Source Code of Conduct](https://opensource.microsoft.com/codeofconduct/).

|

||||

For more information see the [Code of Conduct FAQ](https://opensource.microsoft.com/codeofconduct/faq/) or

|

||||

contact [opencode@microsoft.com](mailto:opencode@microsoft.com) with any additional questions or comments.

|

||||

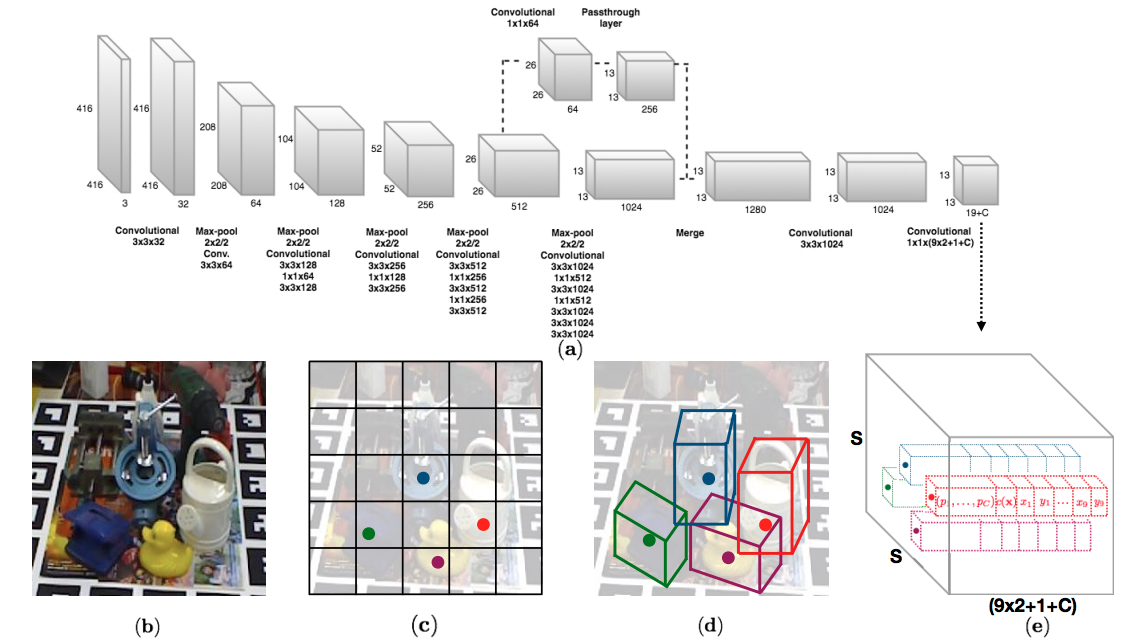

We propose a single-shot approach for simultaneously detecting an object in an RGB image and predicting its 6D pose without requiring multiple stages or having to examine multiple hypotheses. The key component of our method is a new CNN architecture inspired by the YOLO network design that directly predicts the 2D image locations of the projected vertices of the object's 3D bounding box. The object's 6D pose is then estimated using a PnP algorithm. [Paper](http://openaccess.thecvf.com/content_cvpr_2018/papers/Tekin_Real-Time_Seamless_Single_CVPR_2018_paper.pdf), [arXiv](https://arxiv.org/abs/1711.08848)
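
The 2D-3D correspondences that the PnP step consumes can be illustrated with a small sketch. It builds nine 3D keypoints from a set of model vertices and projects them with a pinhole camera. The function names, the use of the box centre as a stand-in for the centroid, and the intrinsics `fx, fy, cx, cy` are assumptions made for illustration; they are not taken from the repository.

```python
from itertools import product


def bounding_box_keypoints(vertices):
    """Return 9 3D keypoints: the box centre followed by the 8 box corners."""
    mins = [min(v[i] for v in vertices) for i in range(3)]
    maxs = [max(v[i] for v in vertices) for i in range(3)]
    centre = [(lo + hi) / 2.0 for lo, hi in zip(mins, maxs)]
    # Every combination of (min or max) along x, y and z gives one corner.
    corners = [list(c) for c in product(*zip(mins, maxs))]
    return [centre] + corners


def project(points_3d, fx, fy, cx, cy):
    """Project camera-frame 3D points with a pinhole model (no distortion)."""
    return [(fx * x / z + cx, fy * y / z + cy) for x, y, z in points_3d]


# Hypothetical object already expressed in the camera frame.
vertices = [(-1.0, -1.0, 4.0), (1.0, 1.0, 6.0)]
keypoints_3d = bounding_box_keypoints(vertices)
keypoints_2d = project(keypoints_3d, fx=100.0, fy=100.0, cx=50.0, cy=40.0)
```

The network predicts the 2D points; pairing them with the known 3D keypoints is what allows a PnP solver to recover rotation and translation.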

|

||||

|

||||

|

||||

|

||||

#### Citation

|

||||

If you use this code, please cite the following

|

||||

> @article{varol17a,

|

||||

TITLE = {{Real-Time Seamless Single Shot 6D Object Pose Prediction}},

|

||||

AUTHOR = {Tekin, Bugra and Sinha, Sudipta N. and Fua, Pascal},

|

||||

JOURNAL = {CVPR},

|

||||

YEAR = {2018}

|

||||

}

|

||||

|

||||

### License

|

||||

|

||||

SingleShotPose is released under the MIT License (refer to the LICENSE file for details).

|

||||

|

||||

#### Environment and dependencies

|

||||

|

||||

The code is tested on Linux with CUDA v8 and cuDNN v5.1. The implementation is based on PyTorch and tested on Python 2.7. The code requires the following dependencies, which can be installed with conda or pip: numpy, scipy, PIL (distributed on pip as Pillow), opencv-python.

|

||||

|

||||

#### Downloading and preparing the data

|

||||

|

||||

Inside the main code directory, run the following to download and extract (1) the preprocessed LINEMOD dataset, (2) trained models for the LINEMOD dataset, (3) the trained model for the OCCLUSION dataset, (4) background images from the VOC2012 dataset respectively.

|

||||

```

|

||||

wget -O LINEMOD.tar --no-check-certificate "https://onedrive.live.com/download?cid=05750EBEE1537631&resid=5750EBEE1537631%21135&authkey=AJRHFmZbcjXxTmI"

|

||||

wget -O backup.tar --no-check-certificate "https://onedrive.live.com/download?cid=0C78B7DE6C569D7B&resid=C78B7DE6C569D7B%21191&authkey=AP183o4PlczZR78"

|

||||

wget -O multi_obj_pose_estimation/backup_multi.tar --no-check-certificate "https://onedrive.live.com/download?cid=05750EBEE1537631&resid=5750EBEE1537631%21136&authkey=AFQv01OSbvhGnoM"

|

||||

wget https://pjreddie.com/media/files/VOCtrainval_11-May-2012.tar

|

||||

wget https://pjreddie.com/media/files/darknet19_448.conv.23 -P cfg/

|

||||

tar xf LINEMOD.tar

|

||||

tar xf backup.tar

|

||||

tar xf multi_obj_pose_estimation/backup_multi.tar -C multi_obj_pose_estimation/

|

||||

tar xf VOCtrainval_11-May-2012.tar

|

||||

```

|

||||

Alternatively, you can directly go to the links above and manually download and extract the files at the corresponding directories. The whole download process might take a long while (~60 minutes).

|

||||

|

||||

#### Training the model

|

||||

|

||||

To train the model run,

|

||||

|

||||

```

|

||||

python train.py datafile cfgfile initweightfile

|

||||

```

|

||||

e.g.

|

||||

```

|

||||

python train.py cfg/ape.data cfg/yolo-pose.cfg backup/ape/init.weights

|

||||

```

|

||||

|

||||

[datafile] contains information about the training/test splits and 3D object models

|

||||

|

||||

[cfgfile] contains information about the network structure

|

||||

|

||||

[initweightfile] contains initialization weights. The weights "backup/[OBJECT_NAME]/init.weights" are pretrained on LINEMOD for faster convergence. We found it effective to pretrain the model without confidence estimation first and then fine-tune the network with confidence estimation. "init.weights" contains the weights of these pretrained networks. However, you can also train the network from a cruder initialization (with weights trained on ImageNet). This usually results in slower and sometimes slightly worse convergence. The file "darknet19_448.conv.23" in the cfg/ folder contains the network weights pretrained on ImageNet. Alternatively, you can pretrain your own weights by setting the regularization parameter for the confidence loss to 0, as explained in the "Pretraining the model" section.
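
To make the role of [cfgfile] concrete, here is a minimal sketch of how a Darknet-style configuration is read into a list of blocks. It mirrors the logic of `parse_cfg` in cfg.py but works on a string; the function name `parse_cfg_text` and the example values are illustrative assumptions, not the contents of cfg/yolo-pose.cfg.

```python
def parse_cfg_text(text):
    """Parse Darknet-style cfg text into a list of dicts, one per [section]."""
    blocks = []
    block = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith('#'):
            continue  # skip blank lines and comments
        if line.startswith('['):
            if block is not None:
                blocks.append(block)
            block = {'type': line.strip('[]')}
        else:
            # key=value line belonging to the current section
            key, value = line.split('=', 1)
            block[key.strip()] = value.strip()
    if block is not None:
        blocks.append(block)
    return blocks


# Hypothetical two-section configuration.
example = """
[net]
width=416
height=416
channels=3

# first layer
[convolutional]
batch_normalize=1
filters=32
size=3
stride=1
pad=1
activation=leaky
"""
blocks = parse_cfg_text(example)
```

All values stay strings at this stage; the network builder converts them with `int(...)` or `float(...)` when it needs them.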

|

||||

|

||||

At the start of the training you will see an output like this:

|

||||

|

||||

```

|

||||

layer filters size input output

|

||||

0 conv 32 3 x 3 / 1 416 x 416 x 3 -> 416 x 416 x 32

|

||||

1 max 2 x 2 / 2 416 x 416 x 32 -> 208 x 208 x 32

|

||||

2 conv 64 3 x 3 / 1 208 x 208 x 32 -> 208 x 208 x 64

|

||||

3 max 2 x 2 / 2 208 x 208 x 64 -> 104 x 104 x 64

|

||||

...

|

||||

30 conv 20 1 x 1 / 1 13 x 13 x1024 -> 13 x 13 x 20

|

||||

31 detection

|

||||

```

|

||||

|

||||

This defines the network structure. During training, the best network model is saved into the "model.weights" file. To train networks for other objects, just change the object name while calling the train function, e.g., "python train.py cfg/duck.data cfg/yolo-pose.cfg backup/duck/init.weights"
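
The shapes in this listing follow from standard convolution and pooling arithmetic. The sketch below reproduces the first four rows, assuming a padding of `(kernel - 1) // 2` for padded convolutions, as `print_cfg` in cfg.py does. The helper names and the tuple-based layer description are illustrative only.

```python
def conv_out(size, kernel, stride, pad):
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * pad - kernel) // stride + 1


def trace_shapes(width, height, layers, channels=3):
    """Return the (width, height, channels) output of each layer in turn."""
    shapes = []
    for layer in layers:
        if layer[0] == 'conv':
            _, filters, kernel, stride = layer
            pad = (kernel - 1) // 2
            width = conv_out(width, kernel, stride, pad)
            height = conv_out(height, kernel, stride, pad)
            channels = filters
        elif layer[0] == 'max':
            _, _size, stride = layer
            width //= stride
            height //= stride
        shapes.append((width, height, channels))
    return shapes


# ('conv', filters, kernel, stride) or ('max', size, stride)
layers = [('conv', 32, 3, 1), ('max', 2, 2), ('conv', 64, 3, 1), ('max', 2, 2)]
shapes = trace_shapes(416, 416, layers)
```

Five stride-2 poolings reduce a 416 x 416 input to the 13 x 13 grid seen in the last convolutional row.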

|

||||

|

||||

#### Testing the model

|

||||

|

||||

To test the model run

|

||||

|

||||

```

|

||||

python valid.py datafile cfgfile weightfile

|

||||

# e.g.,

|

||||

python valid.py cfg/ape.data cfg/yolo-pose.cfg backup/ape/model_backup.weights

|

||||

```

|

||||

|

||||

[weightfile] contains our trained models.

|

||||

|

||||

#### Pretraining the model (Optional)

|

||||

|

||||

Models are already pretrained but if you would like to pretrain the network from scratch and get the initialization weights yourself, you can run the following:

|

||||

|

||||

python train.py cfg/ape.data cfg/yolo-pose-pre.cfg cfg/darknet19_448.conv.23

|

||||

cp backup/ape/model.weights backup/ape/init.weights

|

||||

|

||||

During pretraining the regularization parameter for the confidence term is set to "0" in the config file "cfg/yolo-pose-pre.cfg". "darknet19_448.conv.23" includes the weights of YOLOv2 trained on ImageNet.
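
For orientation, the weight files are plain binary: as `load_weights` in darknet.py reads them, a header of four 32-bit integers (the fourth is the number of images seen) is followed by a flat array of 32-bit floats. The sketch below writes and reads a tiny file in that layout using only the standard library. The function name, the little-endian byte order and the demo values are assumptions for illustration.

```python
import os
import struct
import tempfile


def read_weight_header(path):
    """Return the 4-int header and the number of float32 values that follow."""
    with open(path, 'rb') as fp:
        header = struct.unpack('<4i', fp.read(16))
        payload = fp.read()
    return header, len(payload) // 4


# Write a tiny demo file: 4 header ints followed by 3 floats.
fd, path = tempfile.mkstemp(suffix='.weights')
with os.fdopen(fd, 'wb') as fp:
    fp.write(struct.pack('<4i', 0, 1, 0, 1234))
    fp.write(struct.pack('<3f', 0.5, -1.0, 2.0))

header, num_floats = read_weight_header(path)
os.remove(path)
```

Copying `model.weights` to `init.weights` therefore carries over both the parameters and the `seen` counter stored in the header.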

|

||||

|

||||

#### Multi-object pose estimation on the OCCLUSION dataset

|

||||

|

||||

Inside multi_obj_pose_estimation/ folder

|

||||

|

||||

Testing:

|

||||

|

||||

```

|

||||

python valid_multi.py cfgfile weightfile

|

||||

# e.g.,

|

||||

python valid_multi.py cfg/yolo-pose-multi.cfg backup_multi/model_backup.weights

|

||||

```

|

||||

|

||||

Training:

|

||||

|

||||

```

|

||||

python train_multi.py datafile cfgfile weightfile

|

||||

```

|

||||

e.g.,

|

||||

```

|

||||

python train_multi.py cfg/occlusion.data cfg/yolo-pose-multi.cfg backup_multi/init.weights

|

||||

```

|

||||

|

||||

#### Output Representation

|

||||

|

||||

Our output target representation consists of 21 values. We predict 9 points corresponding to the centroid and corners of the 3D object model. Additionally, we predict the class in each cell. That makes 9x2+1 = 19 numbers. In multi-object training, we assign whichever anchor box has the most similar size to the current object as the one responsible for predicting the 2D coordinates of that object. To encode the size of the objects, we have 2 additional numbers for the range in the x dimension and the y dimension. Therefore, we have 9x2+1+2 = 21 numbers.

|

||||

|

||||

Respectively, 21 numbers correspond to the following: 1st number: class label, 2nd number: x0 (x-coordinate of the centroid), 3rd number: y0 (y-coordinate of the centroid), 4th number: x1 (x-coordinate of the first corner), 5th number: y1 (y-coordinate of the first corner), ..., 18th number: x8 (x-coordinate of the eighth corner), 19th number: y8 (y-coordinate of the eighth corner), 20th number: x range, 21st number: y range.

|

||||

|

||||

The coordinates are normalized by the image width and height: x / image_width and y / image_height. This is useful to have similar output ranges for the coordinate regression and object classification tasks.
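
The layout described above can be sketched as a small helper that assembles the 21 numbers from pixel coordinates. The function name is hypothetical, and computing the x and y ranges as max minus min over the nine normalized points is an assumption about what "range" means here.

```python
def make_label(class_id, keypoints_px, image_width, image_height):
    """Build the 21-number label: class, 9 normalized (x, y) pairs, x/y range.

    keypoints_px holds the centroid first, then the 8 corners, in pixels.
    """
    if len(keypoints_px) != 9:
        raise ValueError('expected 9 keypoints (centroid + 8 corners)')
    xs = [x / float(image_width) for x, _ in keypoints_px]
    ys = [y / float(image_height) for _, y in keypoints_px]
    label = [float(class_id)]
    for x, y in zip(xs, ys):
        label.extend([x, y])
    # Assumed definition of the range: extent of the projected points.
    label.append(max(xs) - min(xs))
    label.append(max(ys) - min(ys))
    return label


# Hypothetical projections in a 640 x 480 image.
keypoints = [(320, 240),
             (160, 120), (480, 120), (160, 360), (480, 360),
             (200, 150), (440, 150), (200, 330), (440, 330)]
label = make_label(0, keypoints, 640, 480)
```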

|

||||

|

||||

#### Acknowledgments

|

||||

|

||||

The code is written by [Bugra Tekin](http://bugratekin.info) and is built on the YOLOv2 implementation of the github user [@marvis](https://github.com/marvis)

|

||||

|

||||

#### Contact

|

||||

|

||||

For any questions or bug reports, please contact [Bugra Tekin](http://bugratekin.info)

|

||||

|

|

@ -0,0 +1,208 @@

|

|||

import torch
from utils import convert2cpu

def parse_cfg(cfgfile):
    blocks = []
    fp = open(cfgfile, 'r')
    block = None
    line = fp.readline()
    while line != '':
        line = line.rstrip()
        if line == '' or line[0] == '#':
            line = fp.readline()
            continue
        elif line[0] == '[':
            if block:
                blocks.append(block)
            block = dict()
            block['type'] = line.lstrip('[').rstrip(']')
            # set default value
            if block['type'] == 'convolutional':
                block['batch_normalize'] = 0
        else:
            key,value = line.split('=')
            key = key.strip()
            if key == 'type':
                key = '_type'
            value = value.strip()
            block[key] = value
        line = fp.readline()

    if block:
        blocks.append(block)
    fp.close()
    return blocks

|

||||

|

||||

def print_cfg(blocks):
    print('layer filters size input output')
    prev_width = 416
    prev_height = 416
    prev_filters = 3
    out_filters =[]
    out_widths =[]
    out_heights =[]
    ind = -2
    for block in blocks:
        ind = ind + 1
        if block['type'] == 'net':
            prev_width = int(block['width'])
            prev_height = int(block['height'])
            continue
        elif block['type'] == 'convolutional':
            filters = int(block['filters'])
            kernel_size = int(block['size'])
            stride = int(block['stride'])
            is_pad = int(block['pad'])
            # Floor division keeps sizes integral under Python 2 and Python 3
            pad = (kernel_size-1)//2 if is_pad else 0
            width = (prev_width + 2*pad - kernel_size)//stride + 1
            height = (prev_height + 2*pad - kernel_size)//stride + 1
            print('%5d %-6s %4d %d x %d / %d %3d x %3d x%4d -> %3d x %3d x%4d' % (ind, 'conv', filters, kernel_size, kernel_size, stride, prev_width, prev_height, prev_filters, width, height, filters))
            prev_width = width
            prev_height = height
            prev_filters = filters
            out_widths.append(prev_width)
            out_heights.append(prev_height)
            out_filters.append(prev_filters)
        elif block['type'] == 'maxpool':
            pool_size = int(block['size'])
            stride = int(block['stride'])
            width = prev_width//stride
            height = prev_height//stride
            # Pooling leaves the channel count (prev_filters) unchanged
            print('%5d %-6s %d x %d / %d %3d x %3d x%4d -> %3d x %3d x%4d' % (ind, 'max', pool_size, pool_size, stride, prev_width, prev_height, prev_filters, width, height, prev_filters))
            prev_width = width
            prev_height = height
            out_widths.append(prev_width)
            out_heights.append(prev_height)
            out_filters.append(prev_filters)
        elif block['type'] == 'avgpool':
            width = 1
            height = 1
            # Global average pooling also keeps the channel count
            print('%5d %-6s %3d x %3d x%4d -> %3d' % (ind, 'avg', prev_width, prev_height, prev_filters, prev_filters))
            prev_width = width
            prev_height = height
            out_widths.append(prev_width)
            out_heights.append(prev_height)
            out_filters.append(prev_filters)
        elif block['type'] == 'softmax':
            print('%5d %-6s -> %3d' % (ind, 'softmax', prev_filters))
            out_widths.append(prev_width)
            out_heights.append(prev_height)
            out_filters.append(prev_filters)
        elif block['type'] == 'cost':
            print('%5d %-6s -> %3d' % (ind, 'cost', prev_filters))
            out_widths.append(prev_width)
            out_heights.append(prev_height)
            out_filters.append(prev_filters)
        elif block['type'] == 'reorg':
            stride = int(block['stride'])
            filters = stride * stride * prev_filters
            width = prev_width//stride
            height = prev_height//stride
            print('%5d %-6s / %d %3d x %3d x%4d -> %3d x %3d x%4d' % (ind, 'reorg', stride, prev_width, prev_height, prev_filters, width, height, filters))
            prev_width = width
            prev_height = height
            prev_filters = filters
            out_widths.append(prev_width)
            out_heights.append(prev_height)
            out_filters.append(prev_filters)
        elif block['type'] == 'route':
            layers = block['layers'].split(',')
            # Non-positive layer ids are relative to the current layer
            layers = [int(i) if int(i) > 0 else int(i)+ind for i in layers]
            if len(layers) == 1:
                print('%5d %-6s %d' % (ind, 'route', layers[0]))
                prev_width = out_widths[layers[0]]
                prev_height = out_heights[layers[0]]
                prev_filters = out_filters[layers[0]]
            elif len(layers) == 2:
                print('%5d %-6s %d %d' % (ind, 'route', layers[0], layers[1]))
                prev_width = out_widths[layers[0]]
                prev_height = out_heights[layers[0]]
                assert(prev_width == out_widths[layers[1]])
                assert(prev_height == out_heights[layers[1]])
                prev_filters = out_filters[layers[0]] + out_filters[layers[1]]
            out_widths.append(prev_width)
            out_heights.append(prev_height)
            out_filters.append(prev_filters)
        elif block['type'] == 'region':
            print('%5d %-6s' % (ind, 'detection'))
            out_widths.append(prev_width)
            out_heights.append(prev_height)
            out_filters.append(prev_filters)
        elif block['type'] == 'shortcut':
            from_id = int(block['from'])
            from_id = from_id if from_id > 0 else from_id+ind
            print('%5d %-6s %d' % (ind, 'shortcut', from_id))
            prev_width = out_widths[from_id]
            prev_height = out_heights[from_id]
            prev_filters = out_filters[from_id]
            out_widths.append(prev_width)
            out_heights.append(prev_height)
            out_filters.append(prev_filters)
        elif block['type'] == 'connected':
            filters = int(block['output'])
            print('%5d %-6s %d -> %3d' % (ind, 'connected', prev_filters, filters))
            prev_filters = filters
            out_widths.append(1)
            out_heights.append(1)
            out_filters.append(prev_filters)
        else:
            print('unknown type %s' % (block['type']))

|

||||

|

||||

def load_conv(buf, start, conv_model):
    num_w = conv_model.weight.numel()
    num_b = conv_model.bias.numel()
    conv_model.bias.data.copy_(torch.from_numpy(buf[start:start+num_b]))
    start = start + num_b
    conv_model.weight.data.copy_(torch.from_numpy(buf[start:start+num_w]))
    start = start + num_w
    return start

def save_conv(fp, conv_model):
    if conv_model.bias.is_cuda:
        convert2cpu(conv_model.bias.data).numpy().tofile(fp)
        convert2cpu(conv_model.weight.data).numpy().tofile(fp)
    else:
        conv_model.bias.data.numpy().tofile(fp)
        conv_model.weight.data.numpy().tofile(fp)

def load_conv_bn(buf, start, conv_model, bn_model):
    num_w = conv_model.weight.numel()
    num_b = bn_model.bias.numel()
    bn_model.bias.data.copy_(torch.from_numpy(buf[start:start+num_b]))
    start = start + num_b
    bn_model.weight.data.copy_(torch.from_numpy(buf[start:start+num_b]))
    start = start + num_b
    bn_model.running_mean.copy_(torch.from_numpy(buf[start:start+num_b]))
    start = start + num_b
    bn_model.running_var.copy_(torch.from_numpy(buf[start:start+num_b]))
    start = start + num_b
    conv_model.weight.data.copy_(torch.from_numpy(buf[start:start+num_w]))
    start = start + num_w
    return start

def save_conv_bn(fp, conv_model, bn_model):
    if bn_model.bias.is_cuda:
        convert2cpu(bn_model.bias.data).numpy().tofile(fp)
        convert2cpu(bn_model.weight.data).numpy().tofile(fp)
        convert2cpu(bn_model.running_mean).numpy().tofile(fp)
        convert2cpu(bn_model.running_var).numpy().tofile(fp)
        convert2cpu(conv_model.weight.data).numpy().tofile(fp)
    else:
        bn_model.bias.data.numpy().tofile(fp)
        bn_model.weight.data.numpy().tofile(fp)
        bn_model.running_mean.numpy().tofile(fp)
        bn_model.running_var.numpy().tofile(fp)
        conv_model.weight.data.numpy().tofile(fp)

def load_fc(buf, start, fc_model):
    num_w = fc_model.weight.numel()
    num_b = fc_model.bias.numel()
    fc_model.bias.data.copy_(torch.from_numpy(buf[start:start+num_b]))
    start = start + num_b
    fc_model.weight.data.copy_(torch.from_numpy(buf[start:start+num_w]))
    start = start + num_w
    return start

def save_fc(fp, fc_model):
    fc_model.bias.data.numpy().tofile(fp)
    fc_model.weight.data.numpy().tofile(fp)

if __name__ == '__main__':
    import sys
    blocks = parse_cfg('cfg/yolo.cfg')
    if len(sys.argv) == 2:
        blocks = parse_cfg(sys.argv[1])
    print_cfg(blocks)

|

||||

|

|

@ -0,0 +1,388 @@

|

|||

import torch

|

||||

import torch.nn as nn

|

||||

import torch.nn.functional as F

|

||||

import numpy as np

|

||||

from region_loss import RegionLoss

|

||||

from cfg import *

|

||||

|

||||

class MaxPoolStride1(nn.Module):

|

||||

def __init__(self):

|

||||

super(MaxPoolStride1, self).__init__()

|

||||

|

||||

def forward(self, x):

|

||||

x = F.max_pool2d(F.pad(x, (0,1,0,1), mode='replicate'), 2, stride=1)

|

||||

return x

|

||||

|

||||

class Reorg(nn.Module):

|

||||

def __init__(self, stride=2):

|

||||

super(Reorg, self).__init__()

|

||||

self.stride = stride

|

||||

def forward(self, x):

|

||||

stride = self.stride

|

||||

assert(x.data.dim() == 4)

|

||||

B = x.data.size(0)

|

||||

C = x.data.size(1)

|

||||

H = x.data.size(2)

|

||||

W = x.data.size(3)

|

||||

assert(H % stride == 0)

|

||||

assert(W % stride == 0)

|

||||

ws = stride

|

||||

hs = stride

|

||||

x = x.view(B, C, H/hs, hs, W/ws, ws).transpose(3,4).contiguous()

|

||||

x = x.view(B, C, H/hs*W/ws, hs*ws).transpose(2,3).contiguous()

|

||||

x = x.view(B, C, hs*ws, H/hs, W/ws).transpose(1,2).contiguous()

|

||||

x = x.view(B, hs*ws*C, H/hs, W/ws)

|

||||

return x

|

||||

|

||||

class GlobalAvgPool2d(nn.Module):

|

||||

def __init__(self):

|

||||

super(GlobalAvgPool2d, self).__init__()

|

||||

|

||||

def forward(self, x):

|

||||

N = x.data.size(0)

|

||||

C = x.data.size(1)

|

||||

H = x.data.size(2)

|

||||

W = x.data.size(3)

|

||||

x = F.avg_pool2d(x, (H, W))

|

||||

x = x.view(N, C)

|

||||

return x

|

||||

|

||||

# for route and shortcut

|

||||

class EmptyModule(nn.Module):

|

||||

def __init__(self):

|

||||

super(EmptyModule, self).__init__()

|

||||

|

||||

def forward(self, x):

|

||||

return x

|

||||

|

||||

# support route shortcut and reorg

|

||||

class Darknet(nn.Module):

|

||||

def __init__(self, cfgfile):

|

||||

super(Darknet, self).__init__()

|

||||

self.blocks = parse_cfg(cfgfile)

|

||||

self.models = self.create_network(self.blocks) # merge conv, bn,leaky

|

||||

self.loss = self.models[len(self.models)-1]

|

||||

|

||||

self.width = int(self.blocks[0]['width'])

|

||||

self.height = int(self.blocks[0]['height'])

|

||||

|

||||

if self.blocks[(len(self.blocks)-1)]['type'] == 'region':

|

||||

self.anchors = self.loss.anchors

|

||||

self.num_anchors = self.loss.num_anchors

|

||||

self.anchor_step = self.loss.anchor_step

|

||||

self.num_classes = self.loss.num_classes

|

||||

|

||||

self.header = torch.IntTensor([0,0,0,0])

|

||||

self.seen = 0

|

||||

self.iter = 0

|

||||

|

||||

def forward(self, x):

|

||||

ind = -2

|

||||

self.loss = None

|

||||

outputs = dict()

|

||||

for block in self.blocks:

|

||||

ind = ind + 1

|

||||

#if ind > 0:

|

||||

# return x

|

||||

|

||||

if block['type'] == 'net':

|

||||

continue

|

||||

elif block['type'] == 'convolutional' or block['type'] == 'maxpool' or block['type'] == 'reorg' or block['type'] == 'avgpool' or block['type'] == 'softmax' or block['type'] == 'connected':

|

||||

x = self.models[ind](x)

|

||||

outputs[ind] = x

|

||||

elif block['type'] == 'route':

|

||||

layers = block['layers'].split(',')

|

||||

layers = [int(i) if int(i) > 0 else int(i)+ind for i in layers]

|

||||

if len(layers) == 1:

|

||||

x = outputs[layers[0]]

|

||||

outputs[ind] = x

|

||||

elif len(layers) == 2:

|

||||

x1 = outputs[layers[0]]

|

||||

x2 = outputs[layers[1]]

|

||||

x = torch.cat((x1,x2),1)

|

||||

outputs[ind] = x

|

||||

elif block['type'] == 'shortcut':

|

||||

from_layer = int(block['from'])

|

||||

activation = block['activation']

|

||||

from_layer = from_layer if from_layer > 0 else from_layer + ind

|

||||

x1 = outputs[from_layer]

|

||||

x2 = outputs[ind-1]

|

||||

x = x1 + x2

|

||||

if activation == 'leaky':

|

||||

x = F.leaky_relu(x, 0.1, inplace=True)

|

||||

elif activation == 'relu':

|

||||

x = F.relu(x, inplace=True)

|

||||

outputs[ind] = x

|

||||

elif block['type'] == 'region':

|

||||

continue

|

||||

if self.loss:

|

||||

self.loss = self.loss + self.models[ind](x)

|

||||

else:

|

||||

self.loss = self.models[ind](x)

|

||||

outputs[ind] = None

|

||||

elif block['type'] == 'cost':

|

||||

continue

|

||||

else:

|

||||

print('unknown type %s' % (block['type']))

|

||||

return x

|

||||

|

||||

def print_network(self):

|

||||

print_cfg(self.blocks)

|

||||

|

||||

def create_network(self, blocks):

|

||||

models = nn.ModuleList()

|

||||

|

||||

prev_filters = 3

|

||||

out_filters =[]

|

||||

conv_id = 0

|

||||

for block in blocks:

|

||||

if block['type'] == 'net':

|

||||

prev_filters = int(block['channels'])

|

||||

continue

|

||||

elif block['type'] == 'convolutional':

|

||||

conv_id = conv_id + 1

|

||||

batch_normalize = int(block['batch_normalize'])

|

||||

filters = int(block['filters'])

|

||||

kernel_size = int(block['size'])

|

||||

stride = int(block['stride'])

|

||||

is_pad = int(block['pad'])

|

||||

pad = (kernel_size-1)/2 if is_pad else 0

|

||||

activation = block['activation']

|

||||

model = nn.Sequential()

|

||||

if batch_normalize:

|

||||

model.add_module('conv{0}'.format(conv_id), nn.Conv2d(prev_filters, filters, kernel_size, stride, pad, bias=False))

|

||||

model.add_module('bn{0}'.format(conv_id), nn.BatchNorm2d(filters, eps=1e-4))

|

||||

#model.add_module('bn{0}'.format(conv_id), BN2d(filters))

|

||||

else:

|

||||

model.add_module('conv{0}'.format(conv_id), nn.Conv2d(prev_filters, filters, kernel_size, stride, pad))

|

||||

if activation == 'leaky':

|

||||

model.add_module('leaky{0}'.format(conv_id), nn.LeakyReLU(0.1, inplace=True))

|

||||

elif activation == 'relu':

|

||||

model.add_module('relu{0}'.format(conv_id), nn.ReLU(inplace=True))

|

||||

prev_filters = filters

|

||||

out_filters.append(prev_filters)

|

||||

models.append(model)

|

||||

elif block['type'] == 'maxpool':

|

||||

pool_size = int(block['size'])

|

||||

stride = int(block['stride'])

|

||||

if stride > 1:

|

||||

model = nn.MaxPool2d(pool_size, stride)

|

||||

else:

|

||||

model = MaxPoolStride1()

|

||||

out_filters.append(prev_filters)

|

||||

models.append(model)

|

||||

elif block['type'] == 'avgpool':

|

||||

model = GlobalAvgPool2d()

|

||||

out_filters.append(prev_filters)

|

||||

models.append(model)

|

||||

elif block['type'] == 'softmax':

|

||||

model = nn.Softmax()

|

||||

out_filters.append(prev_filters)

|

||||

models.append(model)

|

||||

elif block['type'] == 'cost':

|

||||

if block['_type'] == 'sse':

|

||||

model = nn.MSELoss(size_average=True)

|

||||

elif block['_type'] == 'L1':

|

||||

model = nn.L1Loss(size_average=True)

|

||||

elif block['_type'] == 'smooth':

|

||||

model = nn.SmoothL1Loss(size_average=True)

|

||||

out_filters.append(1)

|

||||

models.append(model)

|

||||

elif block['type'] == 'reorg':

|

||||

stride = int(block['stride'])

|

||||

prev_filters = stride * stride * prev_filters

|

||||

out_filters.append(prev_filters)

|

||||

models.append(Reorg(stride))

|

||||

elif block['type'] == 'route':

|

||||

layers = block['layers'].split(',')

|

||||

ind = len(models)

|

||||

layers = [int(i) if int(i) > 0 else int(i)+ind for i in layers]

|

||||

if len(layers) == 1:

|

||||

prev_filters = out_filters[layers[0]]

|

||||

elif len(layers) == 2:

|

||||

assert(layers[0] == ind - 1)

|

||||

prev_filters = out_filters[layers[0]] + out_filters[layers[1]]

|

||||

out_filters.append(prev_filters)

|

||||

models.append(EmptyModule())

|

||||

elif block['type'] == 'shortcut':

|

||||

ind = len(models)

|

||||

prev_filters = out_filters[ind-1]

|

||||

out_filters.append(prev_filters)

|

||||

models.append(EmptyModule())

|

||||

elif block['type'] == 'connected':

|

||||

filters = int(block['output'])

|

||||

if block['activation'] == 'linear':

|

||||

model = nn.Linear(prev_filters, filters)

|

||||

elif block['activation'] == 'leaky':

|

||||

model = nn.Sequential(

|

||||

nn.Linear(prev_filters, filters),

|

||||

nn.LeakyReLU(0.1, inplace=True))

|

||||

elif block['activation'] == 'relu':

|

||||

model = nn.Sequential(

|

||||

nn.Linear(prev_filters, filters),

|

||||

nn.ReLU(inplace=True))

|

||||

prev_filters = filters

|

||||

out_filters.append(prev_filters)

|

||||

models.append(model)

|

||||

elif block['type'] == 'region':

|

||||

loss = RegionLoss()

|

||||

anchors = block['anchors'].split(',')

|

||||

loss.anchors = [float(i) for i in anchors]

|

||||

loss.num_classes = int(block['classes'])

|

||||

loss.num_anchors = int(block['num'])

|

||||

loss.anchor_step = len(loss.anchors)/loss.num_anchors

|

||||

loss.object_scale = float(block['object_scale'])

|

||||

loss.noobject_scale = float(block['noobject_scale'])

|

||||

loss.class_scale = float(block['class_scale'])

|

||||

loss.coord_scale = float(block['coord_scale'])

|

||||

out_filters.append(prev_filters)

|

||||

models.append(loss)

|

||||

else:

|

||||

print('unknown type %s' % (block['type']))

|

||||

|

||||

return models

|

||||

|

||||

def load_weights(self, weightfile):

|

||||

fp = open(weightfile, 'rb')

|

||||

header = np.fromfile(fp, count=4, dtype=np.int32)

|

||||

self.header = torch.from_numpy(header)

|

||||

self.seen = self.header[3]

|

||||

buf = np.fromfile(fp, dtype = np.float32)

|

||||

fp.close()

|

||||

|

||||

start = 0

|

||||

ind = -2

|

||||

for block in self.blocks:

|

||||

if start >= buf.size:

|

||||

break

|

||||

ind = ind + 1

|

||||

if block['type'] == 'net':

|

||||

continue

|

||||

elif block['type'] == 'convolutional':

|

||||

model = self.models[ind]

|

||||

batch_normalize = int(block['batch_normalize'])

|

||||

if batch_normalize:

|

||||

start = load_conv_bn(buf, start, model[0], model[1])

|

||||

else:

|

||||

start = load_conv(buf, start, model[0])

|

||||

elif block['type'] == 'connected':

|

||||

model = self.models[ind]

|

||||

if block['activation'] != 'linear':

|

||||

start = load_fc(buf, start, model[0])

|

||||

else:

|

||||

start = load_fc(buf, start, model)

|

||||

elif block['type'] == 'maxpool':

|

||||

pass

|

||||

elif block['type'] == 'reorg':

|

||||

pass

|

||||

elif block['type'] == 'route':

|

||||

pass

|

||||

elif block['type'] == 'shortcut':

|

||||

pass

|

||||

elif block['type'] == 'region':

|

||||

pass

|

||||

elif block['type'] == 'avgpool':

|

||||

pass

|

||||

elif block['type'] == 'softmax':

|

||||

pass

|

||||

elif block['type'] == 'cost':

|

||||

pass

|

||||

else:

|

||||

print('unknown type %s' % (block['type']))

|

||||

|

||||

def load_weights_until_last(self, weightfile):

|

||||

fp = open(weightfile, 'rb')

|

||||

header = np.fromfile(fp, count=4, dtype=np.int32)

|

||||

self.header = torch.from_numpy(header)

|

||||

self.seen = self.header[3]

|

||||

buf = np.fromfile(fp, dtype = np.float32)

|

||||

fp.close()

|

||||

|

||||

start = 0

|

||||

ind = -2

|

||||

blocklen = len(self.blocks)

|

||||

for i in range(blocklen-2):

|

||||

block = self.blocks[i]

|

||||

if start >= buf.size:

|

||||

break

|

||||

ind = ind + 1

|

||||

if block['type'] == 'net':

|

||||

continue

|

||||

elif block['type'] == 'convolutional':

|

||||

model = self.models[ind]

|

||||

batch_normalize = int(block['batch_normalize'])

|

||||

if batch_normalize:

|

||||

start = load_conv_bn(buf, start, model[0], model[1])

|

||||

else:

|

||||

start = load_conv(buf, start, model[0])

|

||||

elif block['type'] == 'connected':

|

||||

model = self.models[ind]

|

||||

if block['activation'] != 'linear':

|

||||

start = load_fc(buf, start, model[0])

|

||||

else:

|

||||

start = load_fc(buf, start, model)

|

||||

elif block['type'] == 'maxpool':

|

||||

pass

|

||||

elif block['type'] == 'reorg':

|

||||

pass

|

||||

elif block['type'] == 'route':

|

||||

pass

|

||||

elif block['type'] == 'shortcut':

|

||||

pass

|

||||

elif block['type'] == 'region':

|

||||

pass

|

||||

elif block['type'] == 'avgpool':

|

||||

pass

|

||||

elif block['type'] == 'softmax':

|

||||

pass

|

||||

elif block['type'] == 'cost':

|

||||

pass

|

||||

else:

|

||||

print('unknown type %s' % (block['type']))

|

||||

|

||||

|

||||

def save_weights(self, outfile, cutoff=0):

|

||||

if cutoff <= 0:

|

||||

cutoff = len(self.blocks)-1

|

||||

|

||||

fp = open(outfile, 'wb')

|

||||

self.header[3] = self.seen

|

||||

header = self.header

|

||||

header.numpy().tofile(fp)

|

||||

|

||||

ind = -1

|

||||

for blockId in range(1, cutoff+1):

|

||||

ind = ind + 1

|

||||

block = self.blocks[blockId]

|

||||

if block['type'] == 'convolutional':

|

||||

model = self.models[ind]

|

||||

batch_normalize = int(block['batch_normalize'])

|

||||

if batch_normalize:

|

||||

save_conv_bn(fp, model[0], model[1])

|

||||

else:

|

||||

save_conv(fp, model[0])

|

||||

elif block['type'] == 'connected':

|

||||

model = self.models[ind]

|

||||

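# A non-linear activation wraps the layer in nn.Sequential, so the
# Linear module is model[0]; this mirrors the branch in load_weights.
if block['activation'] != 'linear':
    save_fc(fp, model[0])
else:
    save_fc(fp, model)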

|

||||

elif block['type'] == 'maxpool':

|

||||

pass

|

||||

elif block['type'] == 'reorg':

|

||||

pass

|

||||

elif block['type'] == 'route':

|

||||

pass

|

||||

elif block['type'] == 'shortcut':

|

||||

pass

|

||||

elif block['type'] == 'region':

|

||||

pass

|

||||

elif block['type'] == 'avgpool':

|

||||

pass

|

||||

elif block['type'] == 'softmax':

|

||||

pass

|

||||

elif block['type'] == 'cost':

|

||||

pass

|

||||

else:

|

||||

print('unknown type %s' % (block['type']))

|

||||

fp.close()

|

||||

|

|
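The `load_weights` and `save_weights` methods above treat a weight file as four `int32` header values (the fourth is stored as `seen`) followed by one flat `float32` buffer. The sketch below illustrates only that file layout and assumes nothing about the per-layer ordering inside the buffer; `write_weight_file` and `read_weight_file` are hypothetical helper names that are not part of this commit.

```python
import os
import tempfile

import numpy as np


def write_weight_file(path, header, values):
    """Write a 4-value int32 header followed by float32 values (hypothetical helper)."""
    with open(path, 'wb') as fp:
        np.asarray(header, dtype=np.int32).tofile(fp)
        np.asarray(values, dtype=np.float32).tofile(fp)


def read_weight_file(path):
    """Read the header, then the remaining bytes as one flat float32 buffer."""
    with open(path, 'rb') as fp:
        header = np.fromfile(fp, count=4, dtype=np.int32)
        buf = np.fromfile(fp, dtype=np.float32)
    return header, buf
```

A loader such as `load_weights` then walks `buf` with a running `start` offset, consuming as many values as each layer needs.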

@ -0,0 +1,101 @@

|

|||

#!/usr/bin/python
# encoding: utf-8

import os
import random
from PIL import Image
import numpy as np
from image import *
import torch

from torch.utils.data import Dataset
from utils import read_truths_args, read_truths, get_all_files

|

||||

|

||||

class listDataset(Dataset):

    def __init__(self, root, shape=None, shuffle=True, transform=None, target_transform=None, train=False, seen=0, batch_size=64, num_workers=4, bg_file_names=None):
        with open(root, 'r') as file:
            self.lines = file.readlines()

        if shuffle:
            random.shuffle(self.lines)

        self.nSamples = len(self.lines)
        self.transform = transform
        self.target_transform = target_transform
        self.train = train
        self.shape = shape
        self.seen = seen
        self.batch_size = batch_size
        self.num_workers = num_workers
        self.bg_file_names = bg_file_names

    def __len__(self):
        return self.nSamples

|

||||

|

||||

    def __getitem__(self, index):
        # Valid indices are 0 .. len(self) - 1.
        assert index < len(self), 'index range error'
        imgpath = self.lines[index].rstrip()

        # Multi-scale training: every 32nd index picks a new square input size
        # (a multiple of 32) whose range widens as more samples are seen.
        if self.train and index % 32 == 0:
            if self.seen < 400*32:
                width = 13*32
                self.shape = (width, width)
            elif self.seen < 800*32:
                width = (random.randint(0,7) + 13)*32
                self.shape = (width, width)
            elif self.seen < 1200*32:
                width = (random.randint(0,9) + 12)*32
                self.shape = (width, width)
            elif self.seen < 1600*32:
                width = (random.randint(0,11) + 11)*32
                self.shape = (width, width)
            elif self.seen < 2000*32:
                width = (random.randint(0,13) + 10)*32
                self.shape = (width, width)
            elif self.seen < 2400*32:
                width = (random.randint(0,15) + 9)*32
                self.shape = (width, width)
            elif self.seen < 3000*32:
                width = (random.randint(0,17) + 8)*32
                self.shape = (width, width)
            else: # self.seen < 20000*64:
                width = (random.randint(0,19) + 7)*32
                self.shape = (width, width)
        if self.train:
            jitter = 0.2
            hue = 0.1
            saturation = 1.5
            exposure = 1.5

            # Get background image path
            random_bg_index = random.randint(0, len(self.bg_file_names) - 1)
            bgpath = self.bg_file_names[random_bg_index]

            img, label = load_data_detection(imgpath, self.shape, jitter, hue, saturation, exposure, bgpath)
            label = torch.from_numpy(label)
        else:
            img = Image.open(imgpath).convert('RGB')
            if self.shape:
                img = img.resize(self.shape)

            labpath = imgpath.replace('images', 'labels').replace('JPEGImages', 'labels').replace('.jpg', '.txt').replace('.png','.txt')
            # Up to 50 objects per image, 21 values per object.
            label = torch.zeros(50*21)
            if os.path.getsize(labpath):
                ow, oh = img.size
                tmp = torch.from_numpy(read_truths_args(labpath, 8.0/ow))
                tmp = tmp.view(-1)
                tsz = tmp.numel()
                if tsz > 50*21:
                    label = tmp[0:50*21]
                elif tsz > 0:
                    label[0:tsz] = tmp

        if self.transform is not None:
            img = self.transform(img)

        if self.target_transform is not None:
            label = self.target_transform(label)

        self.seen = self.seen + self.num_workers
        return (img, label)

|

||||

|

|
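The resolution schedule in `__getitem__` above can also be read as a lookup table: each threshold on `seen` maps to a range of multipliers of 32. The sketch below restates it that way. `SCHEDULE`, `FALLBACK` and `pick_width` are illustrative names, not part of this commit, and unlike the original the first stage also draws from the random generator (over a one-value range), so the random stream is not identical.

```python
import random

# (exclusive upper bound on `seen`, smallest multiplier, largest multiplier),
# transcribed from the if/elif chain in listDataset.__getitem__.
SCHEDULE = [
    (400 * 32, 13, 13),
    (800 * 32, 13, 20),
    (1200 * 32, 12, 21),
    (1600 * 32, 11, 22),
    (2000 * 32, 10, 23),
    (2400 * 32, 9, 24),
    (3000 * 32, 8, 25),
]
FALLBACK = (7, 26)  # used once `seen` passes the last threshold


def pick_width(seen, rng=random):
    """Return a square input width (a multiple of 32) for the given `seen` count."""
    for limit, lo, hi in SCHEDULE:
        if seen < limit:
            return rng.randint(lo, hi) * 32
    lo, hi = FALLBACK
    return rng.randint(lo, hi) * 32
```

Early in training the width is fixed at 416; later the range widens to 224 through 832.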

@ -0,0 +1,184 @@

|

|||

#!/usr/bin/python
# encoding: utf-8
import random
import os
from PIL import Image, ImageChops, ImageMath
import numpy as np

|

||||

|

||||

def scale_image_channel(im, c, v):
    cs = list(im.split())
    cs[c] = cs[c].point(lambda i: i * v)
    out = Image.merge(im.mode, tuple(cs))
    return out

|

||||

|

||||

def distort_image(im, hue, sat, val):
    im = im.convert('HSV')
    cs = list(im.split())
    cs[1] = cs[1].point(lambda i: i * sat)
    cs[2] = cs[2].point(lambda i: i * val)

    def change_hue(x):
        x += hue*255
        if x > 255:
            x -= 255
        if x < 0:
            x += 255
        return x
    cs[0] = cs[0].point(change_hue)
    im = Image.merge(im.mode, tuple(cs))

    im = im.convert('RGB')
    return im

|

||||

|

||||

def rand_scale(s):
    scale = random.uniform(1, s)
    if random.randint(1, 10000) % 2:
        return scale
    return 1./scale

|

||||

|

||||

def random_distort_image(im, hue, saturation, exposure):
    dhue = random.uniform(-hue, hue)
    dsat = rand_scale(saturation)
    dexp = rand_scale(exposure)
    res = distort_image(im, dhue, dsat, dexp)
    return res

|

||||

|

||||

def data_augmentation(img, shape, jitter, hue, saturation, exposure):

    ow, oh = img.size

    dw = int(ow*jitter)
    dh = int(oh*jitter)

    pleft = random.randint(-dw, dw)
    pright = random.randint(-dw, dw)
    ptop = random.randint(-dh, dh)
    pbot = random.randint(-dh, dh)

    swidth = ow - pleft - pright
    sheight = oh - ptop - pbot

    sx = float(swidth) / ow
    sy = float(sheight) / oh

    flip = random.randint(1,10000)%2
    cropped = img.crop( (pleft, ptop, pleft + swidth - 1, ptop + sheight - 1))

    dx = (float(pleft)/ow)/sx
    dy = (float(ptop) /oh)/sy

    sized = cropped.resize(shape)

    img = random_distort_image(sized, hue, saturation, exposure)

    return img, flip, dx,dy,sx,sy

|

||||

|

||||

def fill_truth_detection(labpath, w, h, flip, dx, dy, sx, sy):
    max_boxes = 50
    label = np.zeros((max_boxes,21))
    if os.path.getsize(labpath):
        bs = np.loadtxt(labpath)
        if bs is None:
            return label
        bs = np.reshape(bs, (-1, 21))
        cc = 0
        for i in range(bs.shape[0]):
            x0 = bs[i][1]
            y0 = bs[i][2]
            x1 = bs[i][3]
            y1 = bs[i][4]
            x2 = bs[i][5]
            y2 = bs[i][6]
            x3 = bs[i][7]
            y3 = bs[i][8]
            x4 = bs[i][9]
            y4 = bs[i][10]
            x5 = bs[i][11]
            y5 = bs[i][12]
            x6 = bs[i][13]
            y6 = bs[i][14]
            x7 = bs[i][15]
            y7 = bs[i][16]
            x8 = bs[i][17]
            y8 = bs[i][18]

            x0 = min(0.999, max(0, x0 * sx - dx))
            y0 = min(0.999, max(0, y0 * sy - dy))
            x1 = min(0.999, max(0, x1 * sx - dx))
            y1 = min(0.999, max(0, y1 * sy - dy))
            x2 = min(0.999, max(0, x2 * sx - dx))
            y2 = min(0.999, max(0, y2 * sy - dy))
            x3 = min(0.999, max(0, x3 * sx - dx))
            y3 = min(0.999, max(0, y3 * sy - dy))
            x4 = min(0.999, max(0, x4 * sx - dx))
            y4 = min(0.999, max(0, y4 * sy - dy))
            x5 = min(0.999, max(0, x5 * sx - dx))
            y5 = min(0.999, max(0, y5 * sy - dy))
            x6 = min(0.999, max(0, x6 * sx - dx))
            y6 = min(0.999, max(0, y6 * sy - dy))
            x7 = min(0.999, max(0, x7 * sx - dx))
            y7 = min(0.999, max(0, y7 * sy - dy))
            x8 = min(0.999, max(0, x8 * sx - dx))
            y8 = min(0.999, max(0, y8 * sy - dy))

            bs[i][1] = x0
            bs[i][2] = y0
            bs[i][3] = x1
            bs[i][4] = y1
            bs[i][5] = x2
            bs[i][6] = y2
            bs[i][7] = x3
            bs[i][8] = y3
            bs[i][9] = x4
            bs[i][10] = y4
            bs[i][11] = x5
            bs[i][12] = y5
            bs[i][13] = x6
            bs[i][14] = y6
            bs[i][15] = x7
            bs[i][16] = y7
            bs[i][17] = x8
            bs[i][18] = y8

            label[cc] = bs[i]
            cc += 1
            if cc >= 50:
                break

    label = np.reshape(label, (-1))
    return label

|

||||

|

||||

def change_background(img, mask, bg):
    # oh = img.height
    # ow = img.width
    ow, oh = img.size
    bg = bg.resize((ow, oh)).convert('RGB')

    imcs = list(img.split())
    bgcs = list(bg.split())
    maskcs = list(mask.split())
    fics = list(Image.new(img.mode, img.size).split())

    for c in range(len(imcs)):
        negmask = maskcs[c].point(lambda i: 1 - i / 255)
        posmask = maskcs[c].point(lambda i: i / 255)
        fics[c] = ImageMath.eval("a * c + b * d", a=imcs[c], b=bgcs[c], c=posmask, d=negmask).convert('L')
    out = Image.merge(img.mode, tuple(fics))

    return out

|

||||

|

||||

def load_data_detection(imgpath, shape, jitter, hue, saturation, exposure, bgpath):
    labpath = imgpath.replace('images', 'labels').replace('JPEGImages', 'labels').replace('.jpg', '.txt').replace('.png','.txt')
    maskpath = imgpath.replace('JPEGImages', 'mask').replace('/00', '/').replace('.jpg', '.png')

    ## data augmentation
    img = Image.open(imgpath).convert('RGB')
    mask = Image.open(maskpath).convert('RGB')
    bg = Image.open(bgpath).convert('RGB')

    img = change_background(img, mask, bg)
    img,flip,dx,dy,sx,sy = data_augmentation(img, shape, jitter, hue, saturation, exposure)
    ow, oh = img.size
    label = fill_truth_detection(labpath, ow, oh, flip, dx, dy, 1./sx, 1./sy)
    return img,label

|

||||

|

||||

|
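`change_background` above keeps object pixels from `img` where the mask is set and takes pixels from `bg` elsewhere, computing `img * mask + bg * (1 - mask)` per channel. The sketch below shows the same blend on NumPy arrays. It assumes a mask in which 255 marks the object and 0 the background, and it treats intermediate mask values as fractional weights, which may differ from the Pillow-based original; `composite` is a hypothetical name that is not part of this commit.

```python
import numpy as np


def composite(img, mask, bg):
    """Blend `img` over `bg` using `mask` (0..255) as a per-pixel weight."""
    img = np.asarray(img, dtype=np.float32)
    bg = np.asarray(bg, dtype=np.float32)
    # Assumption: 255 means "keep img", 0 means "use bg".
    alpha = np.asarray(mask, dtype=np.float32) / 255.0
    out = img * alpha + bg * (1.0 - alpha)
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)
```

All three inputs are expected to have the same shape, for example `(H, W, 3)` arrays obtained with `np.asarray` from RGB images of equal size.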

|
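`fill_truth_detection` above applies one map, `x * sx - dx` and `y * sy - dy`, followed by a clamp to `[0, 0.999]`, to each of the nine (x, y) pairs stored in columns 1 to 18 of a 21-value label row. A vectorised NumPy sketch of that step is given below. `transform_keypoints` is a hypothetical name; unlike the original it returns a copy instead of modifying the array in place, and it does not enforce the 50-row limit.

```python
import numpy as np


def transform_keypoints(bs, dx, dy, sx, sy):
    """Scale, shift and clamp the x/y columns of (N, 21) label rows."""
    out = np.array(bs, dtype=np.float64).reshape(-1, 21)  # np.array copies the input
    # x coordinates live in columns 1, 3, ..., 17; y in columns 2, 4, ..., 18.
    out[:, 1:19:2] = np.clip(out[:, 1:19:2] * sx - dx, 0, 0.999)
    out[:, 2:19:2] = np.clip(out[:, 2:19:2] * sy - dy, 0, 0.999)
    return out
```

Column 0 (the class id) and the last two columns pass through unchanged, as in the loop-based version.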

@ -0,0 +1,301 @@

|

|||

import time
import torch
import math
import torch.nn as nn
import torch.nn.functional as F
from torch.autograd import Variable
from utils import *

|

||||

|

||||

def build_targets(pred_corners, target, anchors, num_anchors, num_classes, nH, nW, noobject_scale, object_scale, sil_thresh, seen):

|

||||

nB = target.size(0)

|

||||

nA = num_anchors

|

||||

nC = num_classes

|

||||

anchor_step = len(anchors)//num_anchors

|

||||

conf_mask = torch.ones(nB, nA, nH, nW) * noobject_scale

|

||||

coord_mask = torch.zeros(nB, nA, nH, nW)

|

||||

cls_mask = torch.zeros(nB, nA, nH, nW)

|

||||

tx0 = torch.zeros(nB, nA, nH, nW)

|

||||

ty0 = torch.zeros(nB, nA, nH, nW)

|

||||

tx1 = torch.zeros(nB, nA, nH, nW)

|

||||

ty1 = torch.zeros(nB, nA, nH, nW)

|

||||

tx2 = torch.zeros(nB, nA, nH, nW)

|

||||

ty2 = torch.zeros(nB, nA, nH, nW)

|

||||

tx3 = torch.zeros(nB, nA, nH, nW)

|

||||

ty3 = torch.zeros(nB, nA, nH, nW)

|

||||

tx4 = torch.zeros(nB, nA, nH, nW)

|

||||

ty4 = torch.zeros(nB, nA, nH, nW)

|

||||

tx5 = torch.zeros(nB, nA, nH, nW)

|

||||

ty5 = torch.zeros(nB, nA, nH, nW)

|

||||

tx6 = torch.zeros(nB, nA, nH, nW)

|

||||

ty6 = torch.zeros(nB, nA, nH, nW)

|

||||

tx7 = torch.zeros(nB, nA, nH, nW)

|

||||

ty7 = torch.zeros(nB, nA, nH, nW)

|

||||

tx8 = torch.zeros(nB, nA, nH, nW)

|

||||

ty8 = torch.zeros(nB, nA, nH, nW)

|

||||

tconf = torch.zeros(nB, nA, nH, nW)

|

||||

tcls = torch.zeros(nB, nA, nH, nW)

|

||||

|

||||

nAnchors = nA*nH*nW

|

||||

nPixels = nH*nW

|

||||

for b in range(nB):

|

||||

cur_pred_corners = pred_corners[b*nAnchors:(b+1)*nAnchors].t()

|

||||

cur_confs = torch.zeros(nAnchors)

|

||||

for t in range(50):

|

||||

if target[b][t*21+1] == 0:

|

||||

break

|

||||

gx0 = target[b][t*21+1]*nW

|

||||

gy0 = target[b][t*21+2]*nH

|

||||

gx1 = target[b][t*21+3]*nW

|

||||

gy1 = target[b][t*21+4]*nH

|

||||

gx2 = target[b][t*21+5]*nW

|

||||

gy2 = target[b][t*21+6]*nH

|

||||

gx3 = target[b][t*21+7]*nW

|

||||

gy3 = target[b][t*21+8]*nH

|

||||

gx4 = target[b][t*21+9]*nW

|

||||

gy4 = target[b][t*21+10]*nH

|

||||

gx5 = target[b][t*21+11]*nW

|

||||

gy5 = target[b][t*21+12]*nH

|

||||

gx6 = target[b][t*21+13]*nW

|

||||

gy6 = target[b][t*21+14]*nH

|

||||

gx7 = target[b][t*21+15]*nW

|

||||

gy7 = target[b][t*21+16]*nH

|

||||

gx8 = target[b][t*21+17]*nW

|

||||

gy8 = target[b][t*21+18]*nH

|

||||

|

||||

cur_gt_corners = torch.FloatTensor([gx0/nW,gy0/nH,gx1/nW,gy1/nH,gx2/nW,gy2/nH,gx3/nW,gy3/nH,gx4/nW,gy4/nH,gx5/nW,gy5/nH,gx6/nW,gy6/nH,gx7/nW,gy7/nH,gx8/nW,gy8/nH]).repeat(nAnchors,1).t() # 18 x nAnchors

|

||||

cur_confs = torch.max(cur_confs, corner_confidences9(cur_pred_corners, cur_gt_corners)) # some irrelevant areas are filtered, in the same grid multiple anchor boxes might exceed the threshold

|

||||

conf_mask[b][cur_confs>sil_thresh] = 0

|

||||

if seen < -1:#6400:

|

||||

tx0.fill_(0.5)

|

||||

ty0.fill_(0.5)

|

||||

tx1.fill_(0.5)

|

||||

ty1.fill_(0.5)

|

||||

tx2.fill_(0.5)

|

||||

ty2.fill_(0.5)

|

||||

tx3.fill_(0.5)

|

||||

ty3.fill_(0.5)

|

||||

tx4.fill_(0.5)

|

||||

ty4.fill_(0.5)

|

||||

tx5.fill_(0.5)

|

||||

ty5.fill_(0.5)

|

||||

tx6.fill_(0.5)

|

||||

ty6.fill_(0.5)

|

||||

tx7.fill_(0.5)

|

||||

ty7.fill_(0.5)

|

||||

tx8.fill_(0.5)

|

||||

ty8.fill_(0.5)

|

||||

coord_mask.fill_(1)

|

||||

|

||||

nGT = 0

|

||||

nCorrect = 0

|

||||

for b in range(nB):

|

||||

for t in range(50):

|

||||

if target[b][t*21+1] == 0:

|

||||

break

|

||||

nGT = nGT + 1

|

||||

best_iou = 0.0

|

||||

best_n = -1

|

||||

min_dist = 10000

|

||||

gx0 = target[b][t*21+1] * nW

|

||||

gy0 = target[b][t*21+2] * nH

|

||||

gi0 = int(gx0)

|

||||

gj0 = int(gy0)

|

||||

gx1 = target[b][t*21+3] * nW

|

||||

gy1 = target[b][t*21+4] * nH

|

||||

gx2 = target[b][t*21+5] * nW

|

||||

gy2 = target[b][t*21+6] * nH

|

||||

gx3 = target[b][t*21+7] * nW

|

||||

gy3 = target[b][t*21+8] * nH

|

||||

gx4 = target[b][t*21+9] * nW

|

||||

gy4 = target[b][t*21+10] * nH

|

||||

gx5 = target[b][t*21+11] * nW

|

||||

gy5 = target[b][t*21+12] * nH

|

||||

gx6 = target[b][t*21+13] * nW

|

||||

gy6 = target[b][t*21+14] * nH

|

||||

gx7 = target[b][t*21+15] * nW

|

||||

gy7 = target[b][t*21+16] * nH

|

||||

gx8 = target[b][t*21+17] * nW

|

||||

gy8 = target[b][t*21+18] * nH

|

||||

|

||||

best_n = 0 # 1 anchor box

|

||||

gt_box = [gx0/nW,gy0/nH,gx1/nW,gy1/nH,gx2/nW,gy2/nH,gx3/nW,gy3/nH,gx4/nW,gy4/nH,gx5/nW,gy5/nH,gx6/nW,gy6/nH,gx7/nW,gy7/nH,gx8/nW,gy8/nH]

|

||||

pred_box = pred_corners[b*nAnchors+best_n*nPixels+gj0*nW+gi0]

|

||||

conf = corner_confidence9(gt_box, pred_box)

|

||||

coord_mask[b][best_n][gj0][gi0] = 1

|

||||

cls_mask[b][best_n][gj0][gi0] = 1

|

||||

conf_mask[b][best_n][gj0][gi0] = object_scale

|

||||

tx0[b][best_n][gj0][gi0] = target[b][t*21+1] * nW - gi0

|

||||

ty0[b][best_n][gj0][gi0] = target[b][t*21+2] * nH - gj0

|

||||

tx1[b][best_n][gj0][gi0] = target[b][t*21+3] * nW - gi0

|

||||

ty1[b][best_n][gj0][gi0] = target[b][t*21+4] * nH - gj0

|

||||

tx2[b][best_n][gj0][gi0] = target[b][t*21+5] * nW - gi0

|

||||

ty2[b][best_n][gj0][gi0] = target[b][t*21+6] * nH - gj0

|

||||

tx3[b][best_n][gj0][gi0] = target[b][t*21+7] * nW - gi0

|

||||

ty3[b][best_n][gj0][gi0] = target[b][t*21+8] * nH - gj0

|

||||

tx4[b][best_n][gj0][gi0] = target[b][t*21+9] * nW - gi0

|

||||

ty4[b][best_n][gj0][gi0] = target[b][t*21+10] * nH - gj0

|

||||

tx5[b][best_n][gj0][gi0] = target[b][t*21+11] * nW - gi0

|

||||

ty5[b][best_n][gj0][gi0] = target[b][t*21+12] * nH - gj0

|

||||

tx6[b][best_n][gj0][gi0] = target[b][t*21+13] * nW - gi0

|

||||

ty6[b][best_n][gj0][gi0] = target[b][t*21+14] * nH - gj0

|

||||

tx7[b][best_n][gj0][gi0] = target[b][t*21+15] * nW - gi0

|

||||

ty7[b][best_n][gj0][gi0] = target[b][t*21+16] * nH - gj0

|

||||

tx8[b][best_n][gj0][gi0] = target[b][t*21+17] * nW - gi0

|

||||

ty8[b][best_n][gj0][gi0] = target[b][t*21+18] * nH - gj0

|

||||

tconf[b][best_n][gj0][gi0] = conf

|

||||

tcls[b][best_n][gj0][gi0] = target[b][t*21]

|

||||

|

||||

if conf > 0.5:

|

||||

nCorrect = nCorrect + 1

|

||||

|

||||

return nGT, nCorrect, coord_mask, conf_mask, cls_mask, tx0, tx1, tx2, tx3, tx4, tx5, tx6, tx7, tx8, ty0, ty1, ty2, ty3, ty4, ty5, ty6, ty7, ty8, tconf, tcls

|

||||

|

||||

class RegionLoss(nn.Module):

|

||||

def __init__(self, num_classes=0, anchors=[], num_anchors=1):

|

||||

super(RegionLoss, self).__init__()

|

||||

self.num_classes = num_classes

|

||||

self.anchors = anchors

|

||||

self.num_anchors = num_anchors

|

||||

self.anchor_step = len(anchors)//num_anchors

|

||||

self.coord_scale = 1

|

||||

self.noobject_scale = 1

|

||||

self.object_scale = 5

|

||||

self.class_scale = 1

|

||||

self.thresh = 0.6

|

||||

self.seen = 0

|

||||

|

||||

def forward(self, output, target):

|

||||

# Parameters

|

||||

t0 = time.time()

|

||||

nB = output.data.size(0)

|

||||

nA = self.num_anchors

|

||||

nC = self.num_classes

|

||||

nH = output.data.size(2)

|

||||

nW = output.data.size(3)

|

||||

|

||||

# Activation

|

||||

output = output.view(nB, nA, (19+nC), nH, nW)

|

||||

x0 = F.sigmoid(output.index_select(2, Variable(torch.cuda.LongTensor([0]))).view(nB, nA, nH, nW))

|

||||

y0 = F.sigmoid(output.index_select(2, Variable(torch.cuda.LongTensor([1]))).view(nB, nA, nH, nW))

|

||||

x1 = output.index_select(2, Variable(torch.cuda.LongTensor([2]))).view(nB, nA, nH, nW)

|

||||

y1 = output.index_select(2, Variable(torch.cuda.LongTensor([3]))).view(nB, nA, nH, nW)

|

||||

x2 = output.index_select(2, Variable(torch.cuda.LongTensor([4]))).view(nB, nA, nH, nW)

|

||||

y2 = output.index_select(2, Variable(torch.cuda.LongTensor([5]))).view(nB, nA, nH, nW)

|

||||

x3 = output.index_select(2, Variable(torch.cuda.LongTensor([6]))).view(nB, nA, nH, nW)

|

||||

y3 = output.index_select(2, Variable(torch.cuda.LongTensor([7]))).view(nB, nA, nH, nW)

|

||||

x4 = output.index_select(2, Variable(torch.cuda.LongTensor([8]))).view(nB, nA, nH, nW)

|

||||

y4 = output.index_select(2, Variable(torch.cuda.LongTensor([9]))).view(nB, nA, nH, nW)

|

||||

x5 = output.index_select(2, Variable(torch.cuda.LongTensor([10]))).view(nB, nA, nH, nW)

|

||||

y5 = output.index_select(2, Variable(torch.cuda.LongTensor([11]))).view(nB, nA, nH, nW)

|

||||

x6 = output.index_select(2, Variable(torch.cuda.LongTensor([12]))).view(nB, nA, nH, nW)

|

||||

y6 = output.index_select(2, Variable(torch.cuda.LongTensor([13]))).view(nB, nA, nH, nW)

|

||||

x7 = output.index_select(2, Variable(torch.cuda.LongTensor([14]))).view(nB, nA, nH, nW)

|

||||

y7 = output.index_select(2, Variable(torch.cuda.LongTensor([15]))).view(nB, nA, nH, nW)

|

||||

x8 = output.index_select(2, Variable(torch.cuda.LongTensor([16]))).view(nB, nA, nH, nW)

|

||||

y8 = output.index_select(2, Variable(torch.cuda.LongTensor([17]))).view(nB, nA, nH, nW)

|

||||

conf = F.sigmoid(output.index_select(2, Variable(torch.cuda.LongTensor([18]))).view(nB, nA, nH, nW))

|

||||

cls = output.index_select(2, Variable(torch.linspace(19,19+nC-1,nC).long().cuda()))

|

||||

cls = cls.view(nB*nA, nC, nH*nW).transpose(1,2).contiguous().view(nB*nA*nH*nW, nC)

|

||||

t1 = time.time()

|

||||

|

||||

# Create pred boxes

|

||||

pred_corners = torch.cuda.FloatTensor(18, nB*nA*nH*nW)

|

||||

grid_x = torch.linspace(0, nW-1, nW).repeat(nH,1).repeat(nB*nA, 1, 1).view(nB*nA*nH*nW).cuda()

|

||||

grid_y = torch.linspace(0, nH-1, nH).repeat(nW,1).t().repeat(nB*nA, 1, 1).view(nB*nA*nH*nW).cuda()

|

||||

pred_corners[0] = (x0.data + grid_x) / nW

|

||||

pred_corners[1] = (y0.data + grid_y) / nH

|

||||

pred_corners[2] = (x1.data + grid_x) / nW

|

||||

pred_corners[3] = (y1.data + grid_y) / nH

|

||||

pred_corners[4] = (x2.data + grid_x) / nW

|

||||

pred_corners[5] = (y2.data + grid_y) / nH

|

||||

pred_corners[6] = (x3.data + grid_x) / nW

|

||||

pred_corners[7] = (y3.data + grid_y) / nH

|

||||

pred_corners[8] = (x4.data + grid_x) / nW

|

||||

pred_corners[9] = (y4.data + grid_y) / nH

|

||||

pred_corners[10] = (x5.data + grid_x) / nW

|

||||

pred_corners[11] = (y5.data + grid_y) / nH

|

||||

pred_corners[12] = (x6.data + grid_x) / nW

|

||||

pred_corners[13] = (y6.data + grid_y) / nH

|

||||

pred_corners[14] = (x7.data + grid_x) / nW

|

||||

pred_corners[15] = (y7.data + grid_y) / nH

|

||||

pred_corners[16] = (x8.data + grid_x) / nW

|

||||

pred_corners[17] = (y8.data + grid_y) / nH

|

||||

gpu_matrix = pred_corners.transpose(0,1).contiguous().view(-1,18)

|

||||

pred_corners = convert2cpu(gpu_matrix)

|

||||

t2 = time.time()

|

||||

|

||||

# Build targets

|

||||

nGT, nCorrect, coord_mask, conf_mask, cls_mask, tx0, tx1, tx2, tx3, tx4, tx5, tx6, tx7, tx8, ty0, ty1, ty2, ty3, ty4, ty5, ty6, ty7, ty8, tconf, tcls = \

|

||||

build_targets(pred_corners, target.data, self.anchors, nA, nC, nH, nW, self.noobject_scale, self.object_scale, self.thresh, self.seen)

|

||||

cls_mask = (cls_mask == 1)

|

||||

nProposals = int((conf > 0.25).sum().data[0])

|

||||

tx0 = Variable(tx0.cuda())

|

||||

ty0 = Variable(ty0.cuda())

|

||||

tx1 = Variable(tx1.cuda())

|

||||

ty1 = Variable(ty1.cuda())

|

||||

tx2 = Variable(tx2.cuda())

|

||||

ty2 = Variable(ty2.cuda())

|

||||

tx3 = Variable(tx3.cuda())

|

||||

ty3 = Variable(ty3.cuda())

|

||||

tx4 = Variable(tx4.cuda())

|

||||

ty4 = Variable(ty4.cuda())

|

||||

tx5 = Variable(tx5.cuda())

|

||||

ty5 = Variable(ty5.cuda())

|

||||

tx6 = Variable(tx6.cuda())

|

||||

ty6 = Variable(ty6.cuda())

|

||||

tx7 = Variable(tx7.cuda())