
SuperBenchmark

| Azure Pipelines | Build Status |
|---|---|
| cpu-unit-test | |
| gpu-unit-test | |

SuperBench is a validation and profiling tool for AI infrastructure, which supports:

- AI infrastructure validation and diagnosis
  - Distributed validation tools to validate hundreds or thousands of servers automatically
  - Consider both raw hardware and E2E model performance with ML workload patterns
  - Build a contract to identify hardware issues
  - Provide infrastructural-oriented criteria as Performance/Quality Gates for hardware and system release
  - Provide detailed performance report and advanced analysis tool

- AI workload benchmarking and profiling
  - Provide comprehensive performance comparison between different existing hardware
  - Provide insights for hardware and software co-design

It includes micro-benchmarks for primitive computation and communication benchmarking, and model-benchmarks to measure domain-aware end-to-end deep learning workloads.

🔴 Note: SuperBench is in the early pre-alpha stage for open source and is not yet ready for the general public. If you want to jump in early, you can try building the latest code yourself.

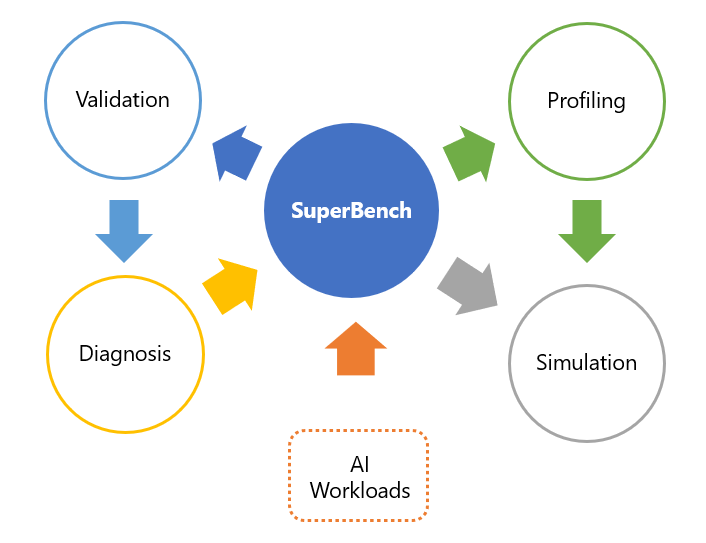

SuperBench capabilities, workflow and benchmarking metrics

The following graphic shows the capabilities provide by SuperBench core framework and its extension.

Benchmarking metrics provided by SuperBench are listed as below.

| | Micro Benchmark | Model Benchmark |
|---|---|---|
| Metrics | | |

Installation

Using Docker (Preferred)

System Requirements

- Platform: Ubuntu 18.04 or later (64-bit)

- Docker: Docker CE 19.03 or later

Install SuperBench

- Using Pre-Built Images

  docker pull superbench/superbench:dev-cuda11.1.1
  docker run -it --rm \
    --privileged --net=host --ipc=host --gpus=all \
    superbench/superbench:dev-cuda11.1.1 bash

- Building the Image

  docker build -f dockerfile/cuda11.1.1.dockerfile -t superbench/superbench:dev .

Using Python

System Requirements

- Platform: Ubuntu 18.04 or later (64-bit); Windows 10 (64-bit) with WSL2

- Python: Python 3.6 or later, pip 18.0 or later

Check whether the Python environment is already configured:

# check Python version
python3 --version
# check pip version
python3 -m pip --version

If not, install Python and pip before continuing.

It's recommended to use a virtual environment (optional):

# create a new virtual environment
python3 -m venv --system-site-packages ./venv
# activate the virtual environment
source ./venv/bin/activate
# exit the virtual environment later
# after you finish running superbench
deactivate
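The version requirements above can also be checked from a script. The following is a minimal sketch and not part of SuperBench: `parse_version` and `meets_minimum` are hypothetical helper names, and the minimums (Python 3.6, pip 18.0) are taken from the requirements listed above.

```python
import re
import sys


def parse_version(text):
    """Return the first dotted number in text as a tuple, e.g. 'Python 3.8.10' -> (3, 8, 10)."""
    match = re.search(r"\d+(?:\.\d+)*", text)
    if match is None:
        raise ValueError("no version number found in {!r}".format(text))
    return tuple(int(part) for part in match.group(0).split("."))


def _pad(version, width):
    """Right-pad a version tuple with zeros so tuples of different length compare fairly."""
    return tuple(version) + (0,) * (width - len(version))


def meets_minimum(version_text, minimum):
    """Check whether the version found in version_text is at least `minimum`."""
    found = parse_version(version_text)
    width = max(len(found), len(minimum))
    return _pad(found, width) >= _pad(minimum, width)


if __name__ == "__main__":
    # sys.version starts with the interpreter version, e.g. "3.8.10 (default, ...)".
    python_ok = meets_minimum(sys.version.split()[0], (3, 6))
    print("Python version OK" if python_ok else "Python 3.6 or later is required")
```

Passing the output of `python3 -m pip --version` to `meets_minimum` together with `(18, 0)` checks pip in the same way.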

Install SuperBench

- PyPI Binary

  # not available yet

- From Source

  # get source code
  git clone https://github.com/microsoft/superbenchmark
  cd superbenchmark
  # install superbench
  python3 -m pip install .
  make postinstall

Usage

Run SuperBench

# run benchmarks in default settings

sb exec

# use a custom config

sb exec --config-file ./superbench/config/default.yaml
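When SuperBench is launched from another script, the two invocations above can be assembled as an argument list. This is a minimal sketch that relies only on the `sb exec` command and the `--config-file` option shown above; `build_sb_exec_command` is a hypothetical helper, not part of the SuperBench API.

```python
import shlex


def build_sb_exec_command(config_file=None):
    """Build the argument list for `sb exec`, adding --config-file only when a path is given."""
    command = ["sb", "exec"]
    if config_file is not None:
        command += ["--config-file", str(config_file)]
    return command


if __name__ == "__main__":
    # Print the command instead of running it, so the sketch works without SuperBench installed.
    args = build_sb_exec_command("./superbench/config/default.yaml")
    print(" ".join(shlex.quote(arg) for arg in args))
```

The returned list can be handed to `subprocess.run(command, check=True)` to actually start the run.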

Benchmark Gallery

Please find more benchmark examples in examples/benchmarks.

Developer Guide

If you want to develop a new feature, please follow the steps below to set up the development environment.

Check Environment

Follow the System Requirements listed under Using Python.

Set Up

# get latest code

git clone https://github.com/microsoft/superbenchmark

cd superbenchmark

# install superbench

python3 -m pip install -e .[dev,test]

Lint and Test

# format code using yapf

python3 setup.py format

# check code style with mypy and flake8

python3 setup.py lint

# run all unit tests

python3 setup.py test
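The three commands above are often run together before a commit. The sketch below chains them and stops at the first failure. It is an illustration under assumptions: `CHECKS` and `run_checks` are hypothetical names, and the script is assumed to run from the repository root with the current interpreter standing in for `python3`.

```python
import subprocess
import sys

# The setup.py subcommands listed above, in the order they are run.
CHECKS = [
    ("format", [sys.executable, "setup.py", "format"]),
    ("lint", [sys.executable, "setup.py", "lint"]),
    ("test", [sys.executable, "setup.py", "test"]),
]


def run_checks(checks=CHECKS, runner=subprocess.call):
    """Run each check in order; return the name of the first failing step, or None if all pass.

    `runner` takes an argument list and returns an exit code, which makes it easy to
    substitute a fake runner when trying the function out.
    """
    for name, command in checks:
        if runner(command) != 0:
            return name
    return None
```

Calling `run_checks()` from the repository root runs the real commands; a non-`None` result names the step to fix before committing.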

Submit a Pull Request

Please install the pre-commit hooks before running git commit so that all pre-checks run.

pre-commit install

Open a pull request to the main branch on GitHub.

Contributing

Contributor License Agreement

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

When you submit a pull request, a CLA bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.

Contributing principles

SuperBenchmark is an open-source project. Your participation and contribution are highly appreciated. There are several important things you need to know before contributing to this project:

What content can be added to SuperBenchmark

- Bug fixes for existing features.

- New features for benchmark module (micro-benchmark, model-benchmark, etc.)

If you would like to contribute a new feature to SuperBenchmark, please submit your proposal first. In the GitHub Issues module, choose Enhancement Request to finish the submission. If the proposal is accepted, you can submit pull requests to the origin main branch.

Contribution steps

If you would like to contribute to the project, please follow the steps below for joint development on GitHub.

- Fork the repo first to your personal GitHub account.

- Check out from the main branch for feature development.

- When you finish the feature, please fetch the latest code from the origin repo, merge it into your branch, and resolve any conflicts.

- Submit pull requests to the origin main branch.

- Please note that there might be comments or questions from reviewers. Your help will be needed to update the pull request.

Trademarks

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft trademarks or logos is subject to and must follow Microsoft's Trademark & Brand Guidelines. Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship. Any use of third-party trademarks or logos are subject to those third-party's policies.