Add consumption to the README |

||

|---|---|---|

| Solution Overview | ||

| Technical Guides | ||

| User Guides | ||

| armTemplates | ||

| img | ||

| scripts | ||

| .gitignore | ||

| LICENSE | ||

| LICENSE-CODE | ||

| README.md | ||

README.md

Technical Reference Implementation for Enterprise BI and Reporting

Summary

This solution creates a reference implementation for an end-to-end BI and Reporting platform built using SQL Data Warehouse, SQL Server Analysis Services, SQL Server Reporting Services, Power BI, and custom solutions for job management and coordination. Customers who are planning to build such a system for their enterprise applications, or onboarding their on-prem solution can use this TRI to get a jumpstart on the infrastructure implementation, and customize it for their needs. Systems Integrators can partner with us to use this reference implementation to accelerate their customer deployments.Description

Estimated Provisioning Time: 2 Hours

Azure offers a rich data and analytics platform for customers and ISVs seeking to build scalable BI and reporting solutions. However, customers face pragmatic challenges in building the right infrastructure for enterprise-grade production systems. They have to evaluate the various products for security, scale, performance and geo-availability requirements. They have to understand service features and their interoperability, and they must plan to address any perceived gaps using custom software. This takes time, effort, and many times, the end-to-end system architecture they design is sub-optimal. Consequently, the promise and expectations set during proof-of-concept (POC) stages do not translate to robust production systems in the expected time-to-market.This TRI addresses this customer pain by providing a reference implementation that is:

- pre-built based on selected and stable Azure components proven to work in enterprise BI and reporting scenarios

- easily configured and deployed to an Azure subscription within a few hours

- bundled with software to handle all the operational essentials for a full-fledged production system

- tested end-to-end against large workloads

Once deployed, the TRI can be used as-is, or customized to fit the application needs using the technical documentation that is provided with the TRI. This enables the customer to build the solution that delivers the business goals based on a robust and functional infrastructure.

Audience

It is recommended that the TRI is reviewed and deployed by a person who is familiar with operational concepts of data warehousing, business intelligence, and analytics. Knowledge of Azure is a plus, but not mandatory. The technical guides provide pointers to Azure documentation for all the resources employed in this TRI.

Note: Connect with one of our Advanced Analytics partners to arrange a proof of concept in your environment: Artis Consulting

Architecture

How the TRI works, in a Nutshell

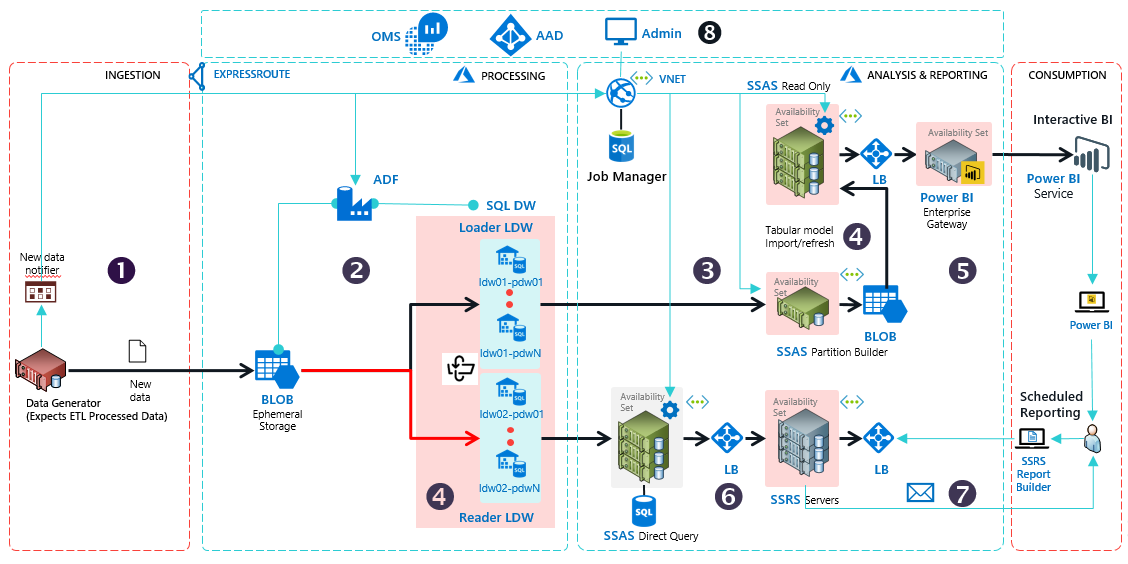

TRI has 4 segments – Ingestion, Processing, Analysis & Reporting, and Consumption

- A data generator, provided in place of the customer's data source, queries the job manager for a staging Azure Blob storage. The job manager returns the handle to an ephemeral BLOB, and the data generator pushes data files into this storage. The TRI is designed with a key assumption that the data that is to be ingested into the system has been ETL-processed for reporting and analytics.

- When the job manager detects fresh data in the Azure Blob, it creates a dynamic one-time Azure data factory pipeline to load the data from the Blob into a logical Loader SQL Data Warehouse (DW) using Polybase. The logical SQL DW is a design provided by the TRI for scalability and performance, to allow multiple physical SQL Data Warehouse replicas to handle large-scale concurrent queries.

- Interactive BI is best served by cached analytical models to enable fast drilldowns for summaries aggregated over various dimensions or pivots. As data lands in DW tables, a SSAS partition builder starts refreshing the tabular models that are dependent on these tables.

- After a preconfigured duration, the job manager flips the Loader DW to become the Reader DW, ready to serve queries for report generation. The current Reader flips to become the Loader, and the job manager starts data load on the Loader. This Loader-Reader pairing is another design provided by the TRI for scalability and performance of queries against the data warehouse. When this flip happens, the Partition Builder commits the tabular models into files in a Blob. This Blob is then loaded into an availability-set of SSAS read-only servers.

- You can set up a Power BI gateway to the SSAS Read-Only nodes, enabling Power BI dashboards to access the tabular models.

- For reporting, SSRS generates the reports from data in the SQL DW via SSAS Direct Query. SSAS also offers row-level security for the data fetched from SQL DW.

- You can schedule report generation with SSRS using the Report Builder client tool. The generated reports are stored in SSRS servers. You can enable email-based delivery of reports to users.

- The end-to-end system can be managed via an Administrative web app. The TRI is configured with Azure Active Directory, which you can use to control access to the apps (Admin UI and Control Server) and underlying Azure resources for the users of your system, and OMS, which enables you to monitor the individual components in the Azure resource group.

User's Guide

Please follow the step by step instructions in the User's Guide to deploy and operationalize the TRI.

Technical Guides

The design and operational details of the main data components of the TRI are provided in the Technical Guide.

Resource Consumption

In preparation for a deployment, please be aware that the default configuration will use the following resources. The solution may be configured to use a different number of resources during deployment. Please adjust your quotas accordingly.

- 10 Virtual Machines

- 20 Cores

- 8 Storage Accounts

- 3 Sql Servers

- 2 Sql Data Warehouses

- 1 Sql Database

- 6 OMS Solutions

- 6 Network Security Groups

- 10 Network Interfaces

- 1 Log Analytics

- 6 Load Balancers

- 1 Data Factory

- 1 Batch Account

- 6 Availability Sets

- 1 Automation Account

- 1 Application Insight

- 1 App Service Plan

- 1 App Service