
| title | description | keywords |

|---|---|---|

| Deploy Docker for Azure with OMS | Learn how to deploy Docker for Azure with one click, along with Operational Management Suite (OMS) container monitoring, using an ARM (Azure Resource Manager) template, and how to deploy service stacks | docker, docker for azure, install, orchestration, management, azure, swarm, OMS, monitoring, scaling |

This template adds two simple steps on top of the Docker for Azure Stable Channel 17.03.1 CE template (release date 03/30/2017) that push the dockerized OMS agent to each node, managers and workers alike. See the Docker for Azure Release Notes for the base release.

azure-docker4azureoms

Docker for Azure with OMS and some more stacks

Table of Contents

- Docker for Azure with OMS

- Prerequisites

- Deploy and Visualize

- MSFT OSCC

- Tips

- Some Samples

- Spring Cloud Netflix Samples

- Monitor dashboard

- Simplest Topology Specs

- Multi Manager with scaled worker

- Raft HA

- Reporting Bugs

- Patches and pull requests

- Usage of Operational Management Suite

- Scaling

- Note on Docker EE and Docker CE for Azure

Prerequisites

- Create the Service Principal with the containerized helper script and note the App ID and App Secret it prints (replace sp-name, rg-name and rg-region with your own values):

$ docker run -ti docker4x/create-sp-azure sp-name rg-name rg-region

- Obtain the Workspace ID and Key for the OMS solutions:

- Deploy the OMS solutions listed under Usage of Operational Management Suite.

- Obtain the OMS Workspace ID.

- Obtain the OMS Workspace Key.
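If you prefer the command line to the portal, the Workspace ID and Key can also be read with the Azure CLI. This is a sketch that assumes a current, logged-in Azure CLI (`az`), in which OMS workspaces appear as Log Analytics workspaces; the function name and the `AZ` override (set `AZ=echo` for a dry run) are hypothetical.

```shell
#!/bin/sh
# Sketch: print the OMS (Log Analytics) Workspace ID and primary key.
# Assumes a logged-in Azure CLI; AZ is a hypothetical dry-run override.
get_oms_credentials() {
  rg="$1"   # resource group holding the workspace, e.g. "mms-weu"
  ws="$2"   # OMS workspace name
  if [ -z "$rg" ] || [ -z "$ws" ]; then
    echo "usage: get_oms_credentials <resource-group> <workspace-name>" >&2
    return 1
  fi
  # The Workspace ID (a GUID) is exposed as customerId
  ${AZ:-az} monitor log-analytics workspace show \
    --resource-group "$rg" --workspace-name "$ws" \
    --query customerId -o tsv
  # The primary key, as shown under "Connected Sources" in the portal
  ${AZ:-az} monitor log-analytics workspace get-shared-keys \
    --resource-group "$rg" --workspace-name "$ws" \
    --query primarySharedKey -o tsv
}
```

For example, `get_oms_credentials mms-weu myworkspace` prints the ID on the first line and the key on the second, ready to paste into the template parameters.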

Deploy and Visualize

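Besides the portal, the template can be deployed from the Azure CLI. The sketch below assumes a current Azure CLI and that azuredeploy.json from this repository is in the working directory. The template's parameter names are not reproduced here; `az` normally prompts for any required parameter it was not given. The function name and the `AZ` dry-run override are hypothetical.

```shell
#!/bin/sh
# Sketch: create a resource group and deploy azuredeploy.json into it.
# Required template parameters (App ID/Secret, OMS Workspace ID/Key, ...)
# are left for az to prompt for. AZ is a hypothetical dry-run override.
deploy_docker4azure_oms() {
  rg="$1"                            # resource group to create
  location="$2"                      # Azure region, e.g. westeurope
  template="${3:-azuredeploy.json}"  # path to the ARM template
  if [ -z "$rg" ] || [ -z "$location" ]; then
    echo "usage: deploy_docker4azure_oms <resource-group> <location> [template]" >&2
    return 1
  fi
  ${AZ:-az} group create --name "$rg" --location "$location" &&
  ${AZ:-az} deployment group create --resource-group "$rg" --template-file "$template"
}
```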

MSFT OSCC

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.

Tips

- After deployment, SSH to a swarm manager using the key pair whose public key (id_rsa.pub) you supplied when creating the swarm; sshlbrip is a placeholder for the public IP of the SSH load balancer:

ssh docker@sshlbrip -p 50000

- Copy the key pair to the swarm manager so you can use it as a jumpbox to the workers:

scp -P 50000 ~/.ssh/id_rsa ~/.ssh/id_rsa.pub docker@sshlbrip:/home/docker/.ssh

- To deploy a stack from a version 3 docker-compose file:

docker stack deploy -c path/to/docker-compose.yml stack-name

- To update a stack, run the same command again after changing the compose file (docker stack up is an alias of docker stack deploy):

docker stack deploy -c path/to/docker-compose.yml stack-name
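The tips above can be wrapped in small shell functions, as a sketch. `ssh_worker` is an alternative to copying the private key onto the manager: it uses OpenSSH's ProxyJump (`-J`, available since OpenSSH 7.3) and assumes the manager's sshd permits TCP forwarding. The function names and the `DOCKER`/`SSH` overrides (e.g. `DOCKER=echo` for a dry run) are hypothetical.

```shell
#!/bin/sh
# Sketch of the tips above. DOCKER and SSH are hypothetical dry-run overrides.

# Deploy a v3 compose file as a stack; re-running it updates the stack in place.
deploy_stack() {
  compose_file="$1"
  stack_name="$2"
  if [ -z "$compose_file" ] || [ -z "$stack_name" ]; then
    echo "usage: deploy_stack <compose-file> <stack-name>" >&2
    return 1
  fi
  ${DOCKER:-docker} stack deploy -c "$compose_file" "$stack_name"
}

# Reach a worker through the manager without copying the private key there.
# Assumes the manager's sshd allows TCP forwarding.
ssh_worker() {
  lb_ip="$1"    # public IP of the SSH load balancer (sshlbrip)
  worker="$2"   # worker hostname or private IP, reachable from the manager
  if [ -z "$lb_ip" ] || [ -z "$worker" ]; then
    echo "usage: ssh_worker <ssh-lb-ip> <worker>" >&2
    return 1
  fi
  ${SSH:-ssh} -J "docker@${lb_ip}:50000" "docker@${worker}"
}
```

For example, running `deploy_stack docker-compose-votingappv3.yml votingapp` on a manager deploys the voting app, and running it again after editing the file updates it.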

Some Samples

In the URLs below, replace Docker4AzureRGExternalLoadBalancer with the address of the external load balancer in your Docker for Azure resource group.

- Elasticsearch and Kibana:

wget https://raw.githubusercontent.com/Azure/azure-docker4azureoms/master/docker-compose-ekv3.yml && docker stack deploy -c docker-compose-ekv3.yml elasticsearchkibana

- Elasticsearch Service: http://Docker4AzureRGExternalLoadBalancer:9200/

- Kibana Service: http://Docker4AzureRGExternalLoadBalancer:5601/

- Voting app:

wget https://raw.githubusercontent.com/Azure/azure-docker4azureoms/master/docker-compose-votingappv3.yml && docker stack deploy -c docker-compose-votingappv3.yml votingapp

- Vote: http://Docker4AzureRGExternalLoadBalancer:5002/

- Voting Results: http://Docker4AzureRGExternalLoadBalancer:5003

- @manomarks Swarm Visualizer: http://Docker4AzureRGExternalLoadBalancer:8080

- The visualizer can also run as a standalone service on a manager node, published on port 8087:

docker service create --name=viz --publish=8087:8080/tcp --constraint=node.role==manager --mount=type=bind,src=/var/run/docker.sock,dst=/var/run/docker.sock manomarks/visualizer

- Swarm monitoring and logging stacks from robinong79/docker-swarm-monitoring (the monitoring and logging overlay networks are created first):

wget https://raw.githubusercontent.com/robinong79/docker-swarm-monitoring/master/composefiles/docker-compose-monitoring.yml && wget https://raw.githubusercontent.com/robinong79/docker-swarm-monitoring/master/composefiles/docker-compose-logging.yml && docker network create --driver overlay monitoring && docker network create --driver overlay logging && docker stack deploy -c docker-compose-logging.yml elk && docker stack deploy -c docker-compose-monitoring.yml prommon
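The `wget ... && docker stack deploy ...` one-liners above all follow the same pattern, sketched here as one hypothetical function. It does not create overlay networks, so the robinong79 stacks still need their `docker network create` steps first. `WGET` and `DOCKER` are hypothetical dry-run overrides.

```shell
#!/bin/sh
# Sketch: download a compose file and deploy it as a stack.
# WGET and DOCKER are hypothetical dry-run overrides.
deploy_remote_stack() {
  url="$1"
  stack_name="$2"
  if [ -z "$url" ] || [ -z "$stack_name" ]; then
    echo "usage: deploy_remote_stack <compose-url> <stack-name>" >&2
    return 1
  fi
  # Save under the URL's file name; -O overwrites an older copy
  # instead of creating name.1 as plain wget would.
  file="${url##*/}"
  ${WGET:-wget} -q -O "$file" "$url" &&
  ${DOCKER:-docker} stack deploy -c "$file" "$stack_name"
}
```

For example: `deploy_remote_stack https://raw.githubusercontent.com/Azure/azure-docker4azureoms/master/docker-compose-ekv3.yml elasticsearchkibana`.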

Spring Cloud Netflix Samples

Forked from https://github.com/sqshq/PiggyMetrics, this example demonstrates the use of the Netflix OSS API with Spring. The docker-compose file has been updated to use the latest features of Compose file format 3.0; this is still a work in progress. The service container logs are drained into OMS.

wget https://raw.githubusercontent.com/Azure/azure-docker4azureoms/master/docker-compose-piggymetricsv3.yml && docker stack deploy -c docker-compose-piggymetricsv3.yml piggymetrics

- RabbitMQ Service: http://Docker4AzureRGExternalLoadBalancer:15672/ (guest/guest)

- Eureka Service: http://Docker4AzureRGExternalLoadBalancer:8761/

- Echo Test Service: http://Docker4AzureRGExternalLoadBalancer:8989/

- PiggyMetrics Spring Boot Service: http://Docker4AzureRGExternalLoadBalancer:8081/

- Hystrix: http://Docker4AzureRGExternalLoadBalancer:9000/hystrix

- Turbine Stream for Hystrix Dashboard: http://Docker4AzureRGExternalLoadBalancer:8989/turbine/turbine.stream
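After deploying the stack, a quick reachability check of the endpoints listed above can be sketched with curl. Without `-f`, curl succeeds on any HTTP response (even a 404), so this only tests that each port answers, not that the service is healthy. The Turbine stream is skipped because it is a long-lived stream. The function name and the `CURL` override are hypothetical.

```shell
#!/bin/sh
# Sketch: report whether each PiggyMetrics endpoint answers HTTP.
# CURL is a hypothetical dry-run override.
check_piggymetrics() {
  lb="$1"   # address of the external load balancer
  for endpoint in 15672/ 8761/ 8989/ 8081/ 9000/hystrix; do
    url="http://${lb}:${endpoint}"
    # -sS: quiet but show errors; --max-time avoids hanging on a dead port
    if ${CURL:-curl} -sS -o /dev/null --max-time 5 "$url"; then
      echo "up   $url"
    else
      echo "down $url"
    fi
  done
}
```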

Monitor dashboard

In this project's configuration, each microservice with Hystrix on board pushes metrics to Turbine via Spring Cloud Bus (with an AMQP broker). The Monitoring project is just a small Spring Boot application with Turbine and the Hystrix Dashboard.

Let's look at the system's behavior under load: the Account service calls the Statistics service, which responds after an artificial delay that is varied between runs. The response timeout threshold is set to 1 second.

| 0 ms delay | 500 ms delay | 800 ms delay | 1100 ms delay |
|---|---|---|---|
| Well-behaved system. Throughput is about 22 requests/second, with a small number of active threads in the Statistics service. The median service time is about 50 ms. | The number of active threads grows. The purple number counts thread-pool rejections, so about 30-40% of requests fail, but the circuit is still closed. | Half-open state: the ratio of failed commands exceeds 50%, so the circuit breaker kicks in. After the sleep window has elapsed, the next request is let through. | 100% of the requests fail. The circuit is now permanently open: a retry after the sleep window won't close the circuit again, because the single trial request is too slow. |
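The breaker behavior in the table can be sketched as two decisions. This is not Hystrix's code: the thresholds below are Hystrix's documented defaults (20 requests per rolling window, 50% errors, 5000 ms sleep window), and the 1000 ms timeout is the 1-second threshold used in this test. The function names are hypothetical.

```shell
#!/bin/sh
# Sketch of Hystrix-style circuit-breaker decisions (not Hystrix's code).
REQUEST_VOLUME_THRESHOLD=${REQUEST_VOLUME_THRESHOLD:-20}
ERROR_THRESHOLD_PERCENT=${ERROR_THRESHOLD_PERCENT:-50}
SLEEP_WINDOW_MS=${SLEEP_WINDOW_MS:-5000}
TIMEOUT_MS=${TIMEOUT_MS:-1000}

# breaker_decision TOTAL FAILED
# Closed circuit: trip ("open") once enough requests were seen and the
# integer error percentage reaches the threshold; otherwise stay "closed".
breaker_decision() {
  total=$1
  failed=$2
  if [ "$total" -eq 0 ] || [ "$total" -lt "$REQUEST_VOLUME_THRESHOLD" ]; then
    echo closed   # too little traffic to judge
    return
  fi
  error_pct=$(( failed * 100 / total ))
  if [ "$error_pct" -ge "$ERROR_THRESHOLD_PERCENT" ]; then
    echo open
  else
    echo closed
  fi
}

# after_open MS_SINCE_OPENED TRIAL_LATENCY_MS
# Open circuit: short-circuit until the sleep window passes, then let one
# trial request through (half-open); close only if it beats the timeout.
after_open() {
  elapsed=$1
  trial_latency=$2
  if [ "$elapsed" -lt "$SLEEP_WINDOW_MS" ]; then
    echo open
  elif [ "$trial_latency" -le "$TIMEOUT_MS" ]; then
    echo closed
  else
    echo open
  fi
}
```

For example, `breaker_decision 100 35` prints `closed` (the 500 ms column), while `after_open 6000 1100` prints `open`: as in the 1100 ms column, the single trial request is slower than the timeout, so the circuit never closes.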

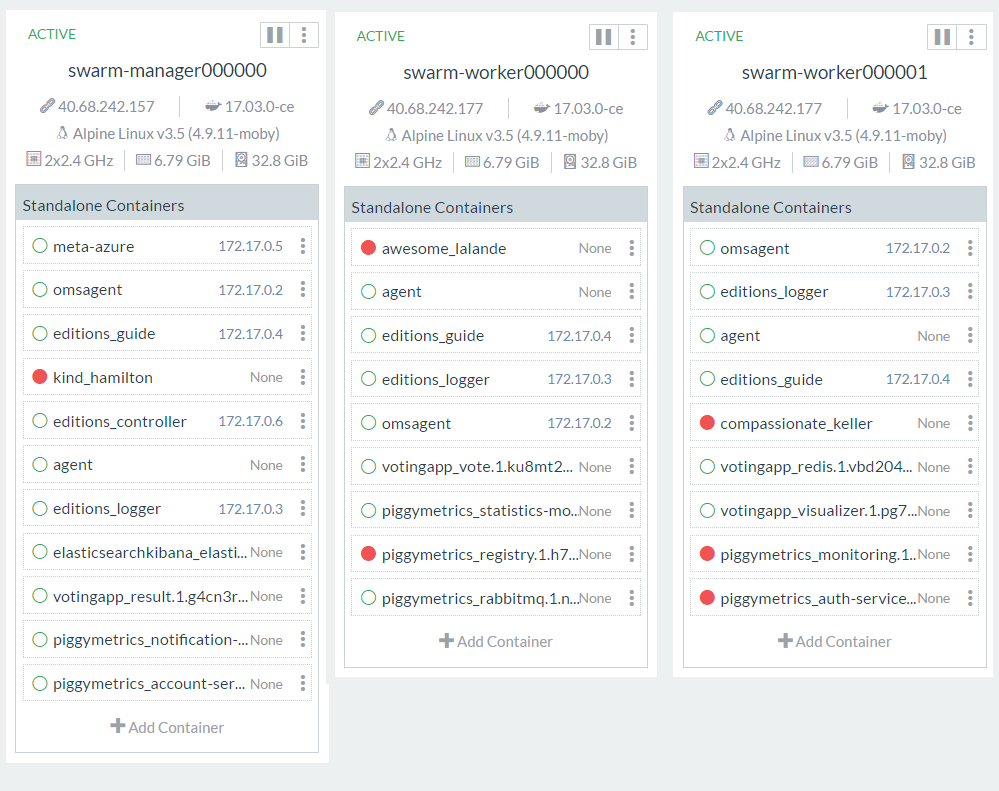

Simplest Topology Specs

The simplest topology, 1 manager and 2 worker nodes, is shown below for Docker for Azure Stable Channel 17.03.1 CE (release date 03/30/2017):

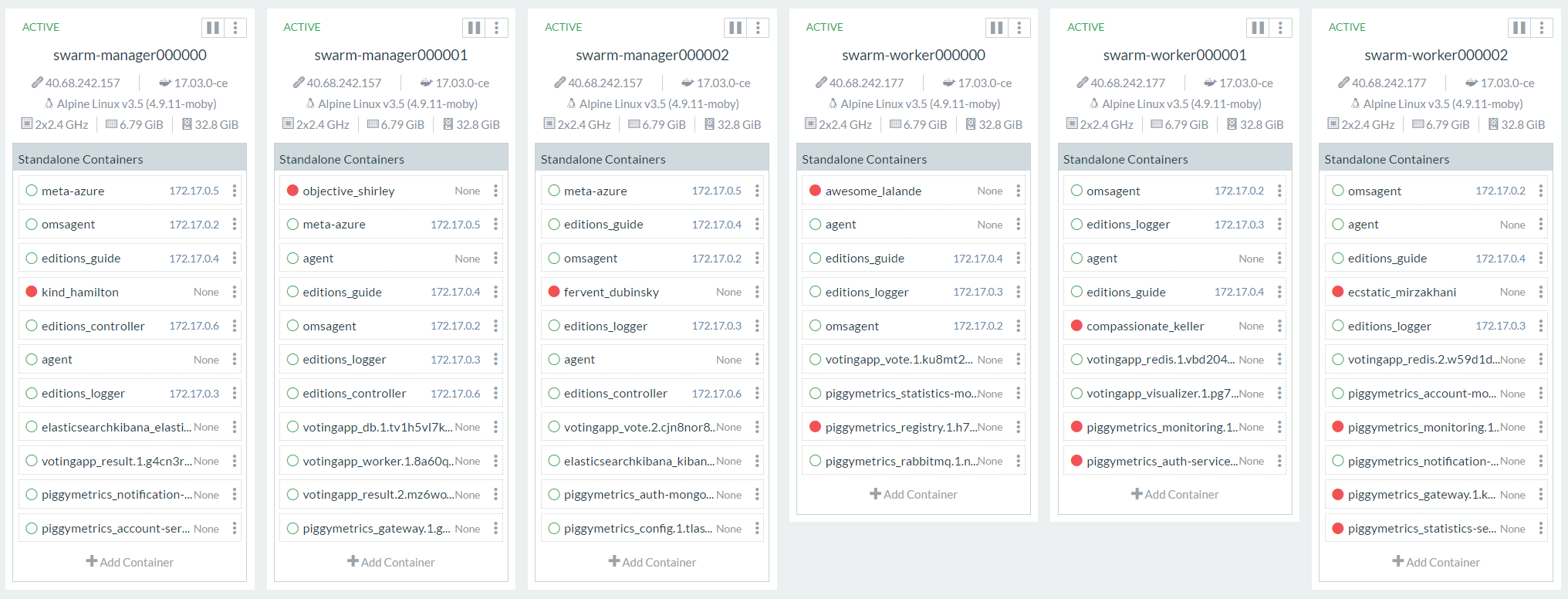

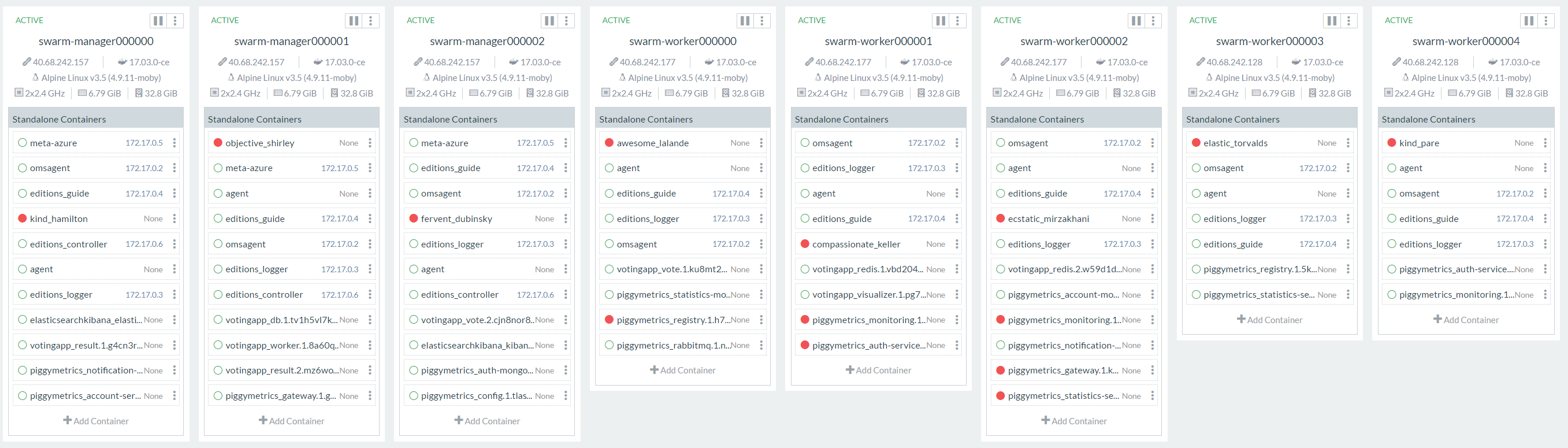

Multi Manager with scaled worker

3 swarm managers with a scaled-out 3rd worker, as shown below:

Raft HA

The consensus algorithm must ensure that if any state machine applies "set x to 3" as the nth command, no other state machine will ever apply a different nth command. The RaftScope visualization below shows this for 5 swarm managers.
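Raft only commits an entry once a majority of managers has it, which determines how many manager failures a swarm survives. The sketch below computes the majority (quorum) and the tolerated failures, (N - 1) / 2 rounded down, for N managers; the function names are hypothetical.

```shell
#!/bin/sh
# Sketch: Raft quorum arithmetic for N swarm managers.
# Majority (quorum) = floor(N/2) + 1; tolerated failures = floor((N-1)/2).
quorum() {
  echo $(( $1 / 2 + 1 ))
}

tolerated_failures() {
  echo $(( ($1 - 1) / 2 ))
}

# Print one line per manager count from 1 up to the given maximum.
print_quorum_table() {
  n=1
  while [ "$n" -le "$1" ]; do
    echo "managers=$n quorum=$(quorum "$n") tolerated_failures=$(tolerated_failures "$n")"
    n=$((n + 1))
  done
}
```

With 5 managers the quorum is 3, so the swarm keeps working with 2 managers down. A 4th manager adds no tolerance over 3 (both tolerate 1 failure), which is why odd manager counts are preferred.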

Reporting bugs

Please report bugs by opening an issue in the GitHub Issue Tracker.

Patches and pull requests

Patches can be submitted as GitHub pull requests. If you use GitHub, please make sure your branch applies to the current master as a fast-forward merge (that is, without creating a merge commit). Use the git rebase command to update your branch to the current master if necessary.
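The rebase step can be sketched as a small function. It assumes the remote pointing at Azure/azure-docker4azureoms is `origin` unless another name is passed (when working from a fork it is often called `upstream`); the function name and the `GIT` dry-run override are hypothetical.

```shell
#!/bin/sh
# Sketch: rebase a branch onto the current master so the pull request
# merges as a fast forward. GIT is a hypothetical dry-run override.
rebase_on_master() {
  branch="$1"
  remote="${2:-origin}"   # remote that tracks Azure/azure-docker4azureoms
  if [ -z "$branch" ]; then
    echo "usage: rebase_on_master <branch> [remote]" >&2
    return 1
  fi
  ${GIT:-git} fetch "$remote" &&
  ${GIT:-git} checkout "$branch" &&
  ${GIT:-git} rebase "$remote/master"
}
```

If the branch was already pushed, the rebased history has to be pushed with `git push --force-with-lease`, which refuses to overwrite remote commits you have not seen.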

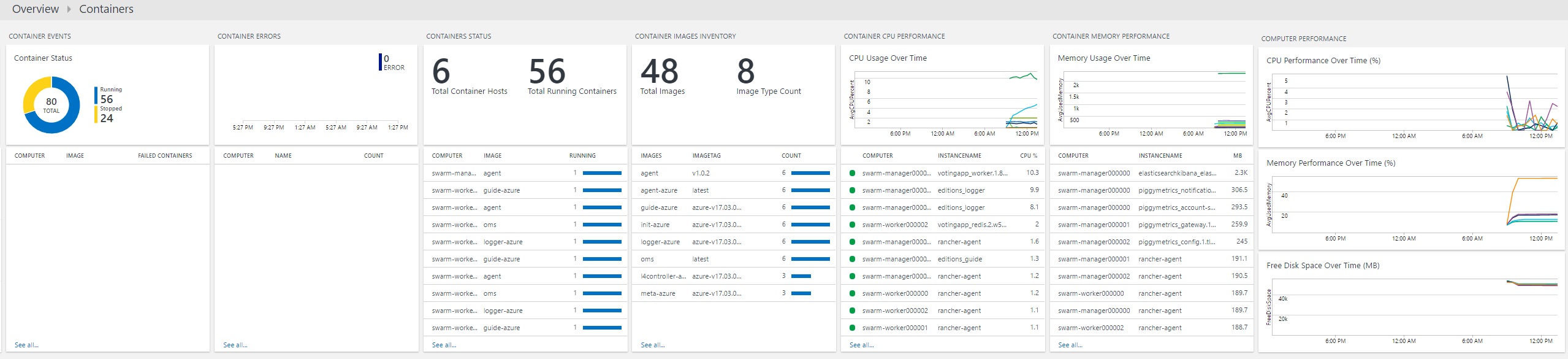

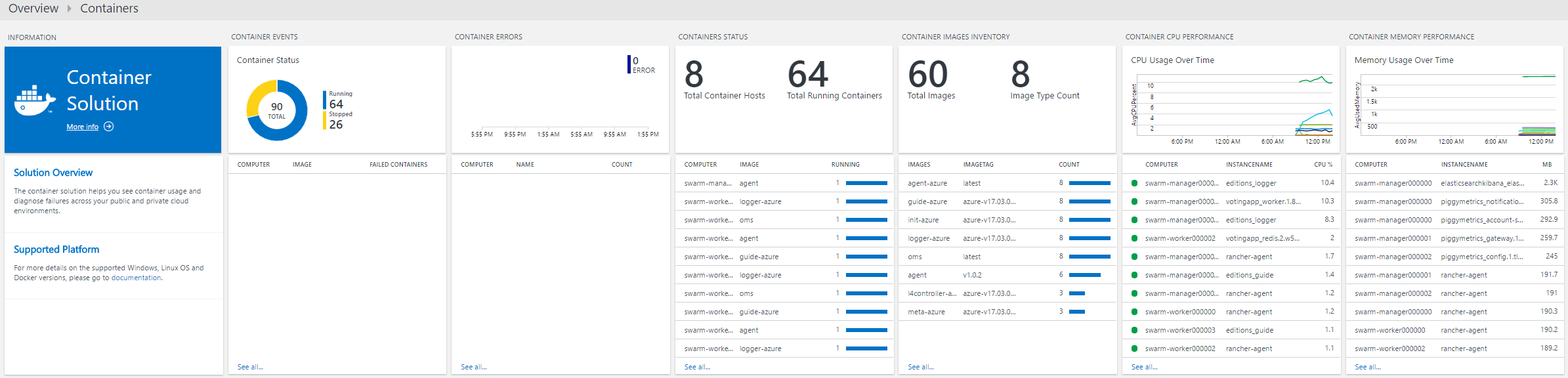

Usage of Operational Management Suite

OMS is set up with the OMS Workspace ID and OMS Workspace Key, obtained as follows.

Create a free Microsoft Azure Operational Management Suite (OMS) account with a workspace:

- Provide a name for the OMS workspace.

- Link your subscription to the OMS portal.

- Depending on the region, a resource group such as "mms-weu" (for "West Europe") is created in the subscription, and the named OMS workspace, with its portal details, is created in that resource group.

- Log on to the OMS workspace and go to Settings -> "Connected Sources" -> "Linux Servers" to obtain the Workspace ID, for example ba1e3f33-648d-40a1-9c70-3d8920834669, and the "Primary and/or Secondary Key", for example xkifyDr2s4L964a/Skq58ItA/M1aMnmumxmgdYliYcC2IPHBPphJgmPQrKsukSXGWtbrgkV2j1nHmU0j8I8vVQ==.

- Add the "Agent Health", "Activity Log Analytics" and "Container" solutions from the "Solutions Gallery" of the workspace's OMS portal.

- When deploying the Docker for Azure template, you only need to supply the Workspace ID and Key; every node of the Docker for Azure cluster, including all containers running on it, is then registered automatically.

- You can then log in to https://OMSWorkspaceName.portal.mms.microsoft.com/#Workspace/overview/solutions/details/index?solutionId=Containers to check all containers running in Docker for Azure, use Log Analytics and, if required, perform automated backups of the APK Based sys using the corresponding OMS solutions.

- OMS monitoring 3 swarm managers and 3 workers with all their containers.
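The template itself installs the agent on each node; the sketch below is only a manual equivalent and is not necessarily how the template does it. It runs the dockerized OMS agent as a global swarm service, so every node, including workers added later, gets one task. The image name (microsoft/oms), the WSID/KEY variables and the ports follow Microsoft's Container Monitoring solution instructions of that period; treat them as assumptions. The function name and `DOCKER` override are hypothetical.

```shell
#!/bin/sh
# Sketch: run the OMS agent on every swarm node as a global service.
# Image, variables and ports are assumptions based on the Container
# Monitoring solution docs of that era. DOCKER is a dry-run override.
deploy_oms_agent() {
  wsid="$1"   # OMS Workspace ID
  key="$2"    # OMS Primary or Secondary Key
  if [ -z "$wsid" ] || [ -z "$key" ]; then
    echo "usage: deploy_oms_agent <workspace-id> <workspace-key>" >&2
    return 1
  fi
  # --mode global: one task per node; the socket mount lets the agent
  # see every container on that node.
  ${DOCKER:-docker} service create --name omsagent --mode global \
    --mount type=bind,source=/var/run/docker.sock,destination=/var/run/docker.sock \
    -e WSID="$wsid" -e KEY="$key" \
    -p 25225:25225 -p 25224:25224/udp \
    --restart-condition on-failure \
    microsoft/oms
}
```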

Scaling

VMSS autoscaling is off by default; it will be addressed in a later version.

- For now, scale manually as shown in the picture below (the existing stack must then be redeployed):

docker stack deploy -c path/to/docker-compose.yml stack-name

- New workers are registered in OMS automatically, as shown in the last picture. (Two workers were added manually, as shown in the next picture, so 3 managers + 3 workers initially deployed via this template + 2 manually scaled worker nodes = 8 nodes in total.)

- Rancher overlay for the manually "scaled" workers and topology
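The manual scaling steps can be sketched as two functions: grow the worker VM scale set with the Azure CLI, then, on a manager, confirm the new nodes joined and redeploy the stack so its services can be placed on them. The resource group, scale-set and stack names are placeholders (look up the worker scale set in the Azure portal); the function names and the `AZ`/`DOCKER` overrides are hypothetical.

```shell
#!/bin/sh
# Sketch of manual scaling. AZ and DOCKER are hypothetical dry-run overrides.

# Run from a workstation with the Azure CLI.
scale_workers() {
  rg="$1"         # resource group of the Docker for Azure deployment
  vmss="$2"       # name of the worker VM scale set
  capacity="$3"   # desired total number of worker VMs
  ${AZ:-az} vmss scale --resource-group "$rg" --name "$vmss" --new-capacity "$capacity"
}

# Run on a swarm manager once the new VMs are up.
redeploy_after_scaling() {
  compose_file="$1"
  stack_name="$2"
  # The new workers should appear with STATUS Ready
  ${DOCKER:-docker} node ls &&
  ${DOCKER:-docker} stack deploy -c "$compose_file" "$stack_name"
}
```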

Note on Docker EE and Docker CE for Azure

Docker currently uses the docker4x repository for entirely private images: small-footprint, dockerized Go apps that implement the standard design of Docker CE and Docker EE for public clouds. The latest ones for Azure, including those used for Docker EE for Azure (DDC) and Docker CE, are listed below. The base system service stack of Docker CE and EE can easily be observed with any standard monitoring tool such as OMS, or the image names can be obtained with:

docker search docker4x --limit 100 | grep azure

- docker4x/upgrademon-azure

- docker4x/requp-azure

- docker4x/upgrade-azure

- docker4x/upg-azure

- docker4x/ddc-init-azure

- docker4x/l4controller-azure

- docker4x/create-sp-azure

- docker4x/logger-azure

- docker4x/azure-vhd-utils

- docker4x/l4azure

- docker4x/waalinuxagent (dated)

- docker4x/meta-azure

- docker4x/cloud-azure

- docker4x/guide-azure

- docker4x/init-azure

- docker4x/agent-azure
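`grep azure` on the default `docker search` table also matches the description column. A stricter sketch restricts the output to image names with `--format`; note that an image such as docker4x/waalinuxagent, whose name does not contain "azure", is then omitted. The function name and the `DOCKER` override are hypothetical.

```shell
#!/bin/sh
# Sketch: list docker4x images whose name contains "azure", sorted.
# DOCKER is a hypothetical override (e.g. a stub for testing).
list_docker4x_azure_images() {
  # --format '{{.Name}}' prints only the repository name column
  ${DOCKER:-docker} search docker4x --limit 100 --format '{{.Name}}' |
    grep -i azure |
    sort
}
```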