
Deploying an HDP 2.4 Multi-Node Hortonworks Hadoop Cluster with Ambari on Azure

This tutorial walks through setting up a three-node Hortonworks HDP 2.4 cluster using Azure virtual machines from the Azure Marketplace.

IMPORTANT NOTE:

This is adapted from the Hortonworks instructional video on HDP 2.4 multi-node Hadoop installation using Ambari.

Prerequisites

- An active Azure subscription.

Deploy Virtual Machines

Create three machines that will serve as resource manager, yarn and two nodes.

-

Login to Azure Portal

-

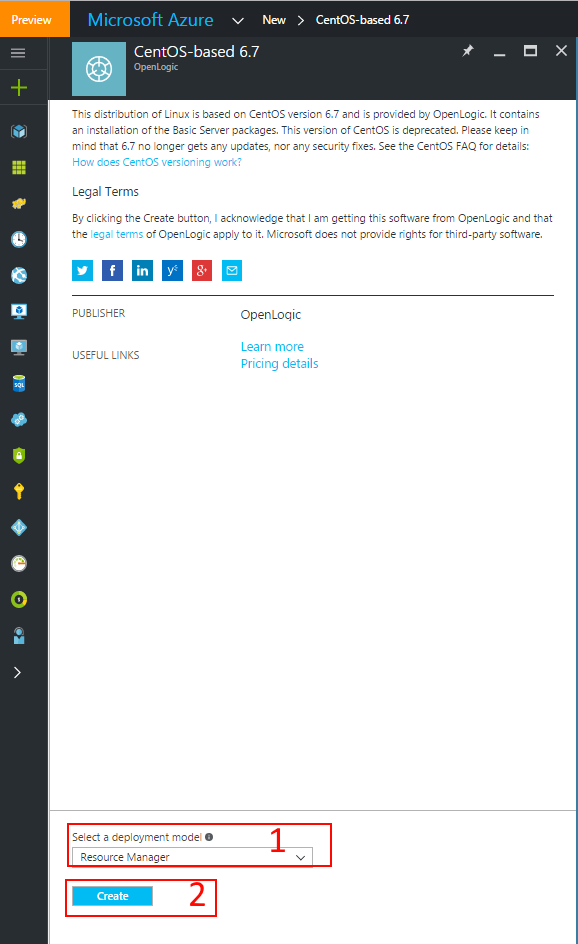

Navigate to Azure Market Place and search for "CentOS-based 6.7".

-

Select the Open Logic Version:

IMPORTANT NOTE CentOS 6.7 is used here to match the deployment environment of the native HDP 2.4 Sandbox from HortonWorks.

-

Deploy virtual machine deploy using the Resource Manager model:

-

Set network and storage settings:

Create a new storage account and network security group. Under the network security group, create various inbound rules. Port 8080 allows access to Ambari web view (for cluster installation and adminstration).

This is done by following the highlighted boxes in the visual below.

IMPORTANT NOTE:

It is advised to add this machine to the same virtual network as your SQL Server 2016 (IaaS) VM. This makes accessibility and connectivity between nodes easier.

Instead of creating a new storage account, you can attach an existing storage account at this point. Please make a note of your newly created Network Security Group name (highlighted box #3), as it will be reused on the other two machines that will be deployed.

- Add more inbound security rules.

Follow the highlighted boxes (#5 - #8) in the imagery of step 7 above to open the additional TCP service ports that HDP needs on the virtual machine.

Other big data applications can be installed on HDP-deployed VMs. On the security group, allow the following ports: 8000, 8888, 50070, 50095, 50075, 15000, 60010, 60030, 10000, 10001, 19888, 8443, 11000, 8050, 8993, 8744, 60080, 50111, 6080, 8088, 8080, 42080, 8020, 111, 8040, 4200, 21000, 9000, 8983, 4040, 18080, 9995, 9090.
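As an alternative to adding the rules one at a time in the portal, the ports above could be opened with a single Azure CLI rule. This is a minimal sketch, not part of the original walkthrough: it assumes the Azure CLI (`az`) is installed and logged in, and the resource group name, NSG name, rule name (`Allow-HDP-Ports`) and priority (1010) are hypothetical placeholders. By default (`DRY_RUN=1`) it only prints the command so you can review it first.

```shell
#!/usr/bin/env bash
# Sketch: open the HDP ports listed above on an existing NSG with the Azure CLI.
# RESOURCE_GROUP, NSG_NAME, the rule name and the priority are hypothetical placeholders.
# DRY_RUN=1 (default) prints the command instead of running it.

RESOURCE_GROUP="${RESOURCE_GROUP:-hdp-rg}"   # hypothetical resource group
NSG_NAME="${NSG_NAME:-hdp-nsg}"              # hypothetical NSG (highlighted box #3)
DRY_RUN="${DRY_RUN:-1}"

# The service ports from the list above.
HDP_PORTS=(8000 8888 50070 50095 50075 15000 60010 60030 10000 10001 19888
           8443 11000 8050 8993 8744 60080 50111 6080 8088 8080 42080 8020
           111 8040 4200 21000 9000 8983 4040 18080 9995 9090)

# One inbound TCP rule; --destination-port-ranges takes a space-separated list.
cmd=(az network nsg rule create
     --resource-group "$RESOURCE_GROUP"
     --nsg-name "$NSG_NAME"
     --name Allow-HDP-Ports
     --priority 1010
     --direction Inbound
     --access Allow
     --protocol Tcp
     --destination-port-ranges "${HDP_PORTS[@]}")

if [ "$DRY_RUN" = "1" ]; then
  echo "${cmd[*]}"   # review, then rerun with DRY_RUN=0
else
  "${cmd[@]}"
fi
```

Keeping every port in one rule means the NSG stays readable; if you later need to close a single service, splitting the list into several rules with distinct priorities is the more flexible layout.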

When you are done, your final inbound security rules should match the image below.

- Validate your configuration.

Make sure validation succeeds before continuing.

- Create two more virtual machines following the steps above. These machines will serve as DataNodes. Ensure that they join the same virtual network and use the same Network Security Group (step 7, highlighted boxes #1 and #3 respectively). You do not need to repeat the port-opening steps, as the ports are already open in the NSG.

This completes our deployment.

Attach extra storage disks to virtual machines

The local SSD attached to the virtual machine is not large enough to process big data.

- Follow the steps below to attach an extra data disk to all three virtual machines.

Format and mount the new data disk

- Go to the Azure Portal.

- Click Virtual Machines > Choose your machine > Overview

- Copy the Public IP address

- Open an SSH tool such as PuTTY or mRemoteNG

- SSH into the first virtual machine:

$ ssh <created_user>@<host>

The new disk on a newly created Azure virtual machine running CentOS will typically be /dev/sdc.

- List the disk devices:

$ ls /dev/sd?

- Change user to root:

sudo su -

Partition the new disk with fdisk, entering these options in this order: n, p, 1, default settings, w

$ fdisk /dev/sdc

- Enter n to create a new partition

- Enter p to make it a primary partition

- Enter 1 to make it the first partition

- Use the default cylinder settings

- Select 1 for First cylinder

- Select the default (133544) for Last cylinder

- Enter w to write the partition table to disk

- Format the new partition as ext4 (this will take a while):

$ mkfs -t ext4 /dev/sdc1

- Create a directory for the mount point:

$ mkdir /data

- Mount the new disk at the mount point:

$ mount /dev/sdc1 /data
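The interactive fdisk dialogue and the format and mount steps above can also be scripted. This sketch swaps in `parted` (in script mode) for fdisk to create one primary partition spanning the whole disk; the device (`/dev/sdc`) and mount point (`/data`) are the values assumed in this tutorial, so confirm the device name with `ls /dev/sd?` first. It only prints the commands by default (`DRY_RUN=1`), because running it for real erases the disk.

```shell
#!/usr/bin/env bash
# Sketch: non-interactive version of the partition / format / mount steps above.
# WARNING: with DRY_RUN=0 this erases everything on $DISK.
# parted replaces the interactive fdisk dialogue; DISK and MOUNT_POINT are the
# values assumed in this tutorial. Run as root.

DISK="${DISK:-/dev/sdc}"
MOUNT_POINT="${MOUNT_POINT:-/data}"
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# New MBR (msdos) label plus one primary partition covering the whole disk,
# the same layout as answering n, p, 1, defaults, w in fdisk.
run parted -s "$DISK" mklabel msdos mkpart primary ext4 0% 100%
run mkfs -t ext4 "${DISK}1"          # format the new partition, e.g. /dev/sdc1
run mkdir -p "$MOUNT_POINT"
run mount "${DISK}1" "$MOUNT_POINT"
```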

Persist the new disk across reboots

- Change user to root:

sudo su -

- Open /etc/fstab.

- Add this entry:

/dev/sdc1 /data ext4 defaults 0 0

- Save and exit the file.
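Editing /etc/fstab by hand is easy to get wrong when the step is repeated on three machines. The helper below (`add_fstab_entry`, a hypothetical name) appends the same entry only if no non-comment line already mounts something on `/data`. It is a sketch that takes the fstab path as an argument, so it can be tried on a copy first.

```shell
#!/usr/bin/env bash
# Sketch: append the /data entry to an fstab file only if it is not already there,
# so re-running the step does not create duplicate lines.
# add_fstab_entry is a hypothetical helper; pass /etc/fstab as root on the real VM.

add_fstab_entry() {
  local fstab="$1"
  local entry="/dev/sdc1 /data ext4 defaults 0 0"
  # Field 2 is the mount point; comment lines are ignored.
  if awk '$1 !~ /^#/ && $2 == "/data" { found = 1 } END { exit !found }' "$fstab"; then
    echo "fstab already has a /data entry"
  else
    echo "$entry" >> "$fstab"
    echo "added: $entry"
  fi
}
```

On the VM, run `add_fstab_entry /etc/fstab` as root and then `mount -a`, which mounts everything listed in fstab and so surfaces a bad entry now rather than at the next reboot.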

Create and Set the Fully Qualified Domain Name (FQDN) for the Azure VMs

On each virtual machine, we will update the /etc/hosts file, because every machine in the cluster needs the hostname-to-IP-address mappings of all nodes.

Create a hostname FQDN for all machines

- Log in to the Azure Portal.

- Go to Virtual Machines and select your machine.

- Click Overview > Public IP address/DNS name label > Configuration, create a DNS name label and click Save. Please make a note of the name you provide here (for example new1), as it will be used in later steps. Also copy the FQDN; for instance, in the imagery below your FQDN would be new1.eastus2.cloudapp.azure.com.

- Make a note of the private IP address (Virtual Machines > Machine Name > Network Interfaces).

- Repeat the above steps for the other two machines.

Add Hostnames on all machines

- SSH into the first host.

- Check and change the hostname on the node.

- Switch to root:

sudo su -

Update the hosts file

- Open /etc/hosts on the machine.

- Append the hostname-to-IP-address mapping for all three nodes to /etc/hosts.

Assuming the private IP addresses are 10.0.0.1, 10.0.0.2 and 10.0.0.3 and the machine names (from the FQDN creation step above) are node1, node2 and node3, the entries are:

10.0.0.1 node1 node1.eastus2.cloudapp.azure.com
10.0.0.2 node2 node2.eastus2.cloudapp.azure.com
10.0.0.3 node3 node3.eastus2.cloudapp.azure.com
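Because the same three lines must be appended on every node, they can be generated rather than typed. This sketch (`hosts_entries` is a hypothetical helper) prints one `IP shortname FQDN` line per node, in the same order as the entries above; `DOMAIN` defaults to the example's `eastus2.cloudapp.azure.com` suffix and should match your own FQDNs.

```shell
#!/usr/bin/env bash
# Sketch: build the /etc/hosts lines from pairs of private IP and DNS name label.
# DOMAIN and the sample values are the example's assumptions; use your own.

DOMAIN="${DOMAIN:-eastus2.cloudapp.azure.com}"

# hosts_entries IP1 NAME1 IP2 NAME2 ... prints "IP NAME NAME.DOMAIN" per node.
hosts_entries() {
  while [ "$#" -ge 2 ]; do
    printf '%s %s %s.%s\n' "$1" "$2" "$2" "$DOMAIN"
    shift 2
  done
}
```

For example, `hosts_entries 10.0.0.1 node1 10.0.0.2 node2 10.0.0.3 node3 >> /etc/hosts`, run as root, appends the three example entries.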

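- Set the new hostname:

sudo hostname your_new_hostname

This takes effect immediately, but on CentOS 6 it is not kept after a reboot unless HOSTNAME=your_new_hostname is also set in /etc/sysconfig/network.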
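On CentOS 6 the boot-time hostname is read from the `HOSTNAME=` line of `/etc/sysconfig/network`. The sketch below (`set_persistent_hostname` is a hypothetical helper) replaces an existing `HOSTNAME=` line or appends one if none exists. The file path is an argument so the helper can be tested on a copy; on the real VM, pass `/etc/sysconfig/network` as root.

```shell
#!/usr/bin/env bash
# Sketch: persist a hostname in a CentOS 6 style network file.
# set_persistent_hostname is a hypothetical helper; use /etc/sysconfig/network as root.

set_persistent_hostname() {
  local file="$1" name="$2"
  if grep -q '^HOSTNAME=' "$file"; then
    # Replace the existing HOSTNAME= line in place (GNU sed).
    sed -i "s/^HOSTNAME=.*/HOSTNAME=${name}/" "$file"
  else
    echo "HOSTNAME=${name}" >> "$file"
  fi
}
```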

Repeat the above steps on the other two machines so that all machines in the cluster can communicate with each other.

Shut down iptables on the hosts

On each machine, shut down iptables. This allows the Hadoop daemons to communicate across nodes. SELinux remains enabled on the machines.

- Check the service status:

service iptables status

If iptables is running, output similar to the image below will be shown.
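To actually shut iptables down on CentOS 6, which uses SysV init scripts, the usual commands are `service iptables stop` for the running system and `chkconfig iptables off` so the firewall stays off after a reboot. This sketch prints them by default (`DRY_RUN=1`); run it as root with `DRY_RUN=0` on each node.

```shell
#!/usr/bin/env bash
# Sketch: stop iptables now and keep it from starting at boot (CentOS 6).
# DRY_RUN=1 (default) only prints the commands; run as root with DRY_RUN=0.

DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi
}

run service iptables stop      # stop the firewall for the running system
run chkconfig iptables off     # disable it in all runlevels so it stays off after reboot
run service iptables status    # should now report that the firewall is not running
```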

Set SELinux on the hosts to permissive mode

- Check the status:

$ sestatus

- Change the current mode to permissive if it is enforcing:

$ setenforce 0

In permissive mode, SELinux does not deny access to the Hadoop daemons or the Ambari installation; it only logs the accesses its policy would have denied. The setenforce change lasts until the next reboot.
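Because `setenforce 0` does not survive a reboot, the permissive mode can also be written to `/etc/selinux/config`, whose `SELINUX=` line sets the mode applied at boot. The helper below (`set_selinux_mode`, a hypothetical name) rewrites only that line and leaves `SELINUXTYPE=` untouched. It takes the file path as an argument; use `/etc/selinux/config` as root on each node.

```shell
#!/usr/bin/env bash
# Sketch: set the boot-time SELinux mode in a config file (GNU sed).
# set_selinux_mode is a hypothetical helper; use /etc/selinux/config as root.

set_selinux_mode() {
  local file="$1" mode="$2"   # mode: enforcing, permissive or disabled
  # ^SELINUX= matches only the mode line, not SELINUXTYPE=.
  sed -i "s/^SELINUX=.*/SELINUX=${mode}/" "$file"
}
```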

Restart and Update NTP Service on the hosts

- Restart the service:

/etc/init.d/ntpd restart

- Update the runlevel settings so ntpd starts at boot:

chkconfig ntpd on

Set Up Passwordless SSH

Perform these steps on all nodes.

- Change the root password:

sudo passwd root

IMPORTANT NOTE:

You need to know the root password of the remote machine in order to copy over your SSH public key. Setting a new root password on every node makes it easy to copy the SSH keys.

Now, starting from machine #1:

- Switch to the root user:

sudo su -

- Create SSH keys, accepting all default prompts:

ssh-keygen

- Copy the public SSH key to the other two nodes, for example to node new2:

$ ssh-copy-id root@new2

- Add the machine's own public key to its authorized_keys file:

$ cd ~/.ssh
$ cat id_rsa.pub >> authorized_keys

You should now be able to log in to localhost without a password. Confirm by running:

ssh localhost

Repeat the passwordless SSH setup on machines #2 and #3 using the steps above.
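The key steps above can be run non-interactively on each machine. This is a sketch under stated assumptions: `PEERS` holds hypothetical names for the other two nodes (adjust it per machine), `ssh-keygen -N ""` gives the same empty passphrase as accepting the defaults, and `authorize_own_key` (a hypothetical helper) appends the machine's own public key only once and tightens permissions, since sshd may ignore an authorized_keys file that is group- or world-writable. Commands are only printed by default (`DRY_RUN=1`).

```shell
#!/usr/bin/env bash
# Sketch: passwordless SSH for one machine, run as root.
# PEERS is a hypothetical list of the other two nodes; change it on each machine.
# DRY_RUN=1 (default) prints the commands instead of running them.

DRY_RUN="${DRY_RUN:-1}"
PEERS=(node2 node3)          # hypothetical: the other nodes, as named in /etc/hosts
SSH_DIR="${HOME}/.ssh"

run() {
  if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# Append this machine's public key to its own authorized_keys once, with strict
# permissions (sshd's StrictModes check can reject overly permissive files).
authorize_own_key() {
  local ssh_dir="$1"
  touch "$ssh_dir/authorized_keys"
  if ! grep -qxF -f "$ssh_dir/id_rsa.pub" "$ssh_dir/authorized_keys"; then
    cat "$ssh_dir/id_rsa.pub" >> "$ssh_dir/authorized_keys"
  fi
  chmod 700 "$ssh_dir"
  chmod 600 "$ssh_dir/authorized_keys"
}

run mkdir -p "$SSH_DIR"
# Empty passphrase at the default RSA key path, as when accepting every prompt.
[ -f "$SSH_DIR/id_rsa" ] || run ssh-keygen -t rsa -N "" -f "$SSH_DIR/id_rsa"
for peer in "${PEERS[@]}"; do
  run ssh-copy-id "root@${peer}"   # asks once for that node's root password
done
run authorize_own_key "$SSH_DIR"
run ssh localhost true             # should succeed without a password prompt
```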

Set Up the Ambari Repository

Download the following repo files, contained in the zip folder here:

- ambari.repo

- epel.repo

- epel-testing.repo

- HDP.repo

- HDP-UTILS.repo

- puppetlabs.repo

- sandbox.repo

Using an SCP client, such as WinSCP for Windows or Fugu for Mac, copy them to /etc/yum.repos.d/ on your virtual machines.

IMPORTANT NOTE

Remember to log in as root when using scp, in order to copy files to /etc/yum.repos.d/.
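On a Linux or Mac machine, the command-line `scp` client can replace WinSCP or Fugu. This sketch copies the seven repo files listed above to `/etc/yum.repos.d/` on each node as root; the node names in `NODES` and the local folder `REPO_DIR` are hypothetical placeholders, and by default (`DRY_RUN=1`) it only prints the `scp` commands.

```shell
#!/usr/bin/env bash
# Sketch: copy the repo files to /etc/yum.repos.d/ on every node as root.
# NODES and REPO_DIR are hypothetical; DRY_RUN=1 (default) only prints commands.

DRY_RUN="${DRY_RUN:-1}"
NODES=(node1 node2 node3)    # hypothetical host names or public FQDNs
REPO_DIR="${REPO_DIR:-.}"    # hypothetical: folder holding the extracted .repo files
REPO_FILES=(ambari.repo epel.repo epel-testing.repo HDP.repo HDP-UTILS.repo
            puppetlabs.repo sandbox.repo)

run() {
  if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# Full local paths of the files to send.
paths=()
for f in "${REPO_FILES[@]}"; do
  paths+=("$REPO_DIR/$f")
done

# One scp per node, sending all repo files at once.
for node in "${NODES[@]}"; do
  run scp "${paths[@]}" "root@${node}:/etc/yum.repos.d/"
done
```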

Get Java and JDK directory

Java is pre-installed on the Azure virtual machines.

- Confirm the Java installation and version:

java -version

IMPORTANT NOTE: The Java version should be 1.7.x or later. If Java is not installed, follow the Digital Ocean instructions on installing Java on your machine, using yum rather than apt-get on CentOS.

- Get the Java home directory as follows:

- Find the Java binary path:

which java

IMPORTANT NOTE: For example, /usr/bin/java on CentOS 6.7.

- Find where that path links to:

ls -ltr <path_returned_by_which_java>

- Copy the path on the right-hand side of the symlink returned above (for example /usr/bin/java -> /etc/alternatives/java) and use it for this final step:

ls -ltr <path_to_symlink_copied>

- Make a note of the final JDK path returned. You will need it in later steps so that Ambari knows the JAVA_HOME.

IMPORTANT NOTE

You do not need to repeat these steps (Java and JDK directory) on all machines, as the path will be the same on all three machines.

The path should look like /usr/lib/jvm/jre-1.7.0-openjdk.x86_64/bin/java. You only need the JDK directory, like /usr/lib/jvm/jre-1.7.0-openjdk.x86_64/
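The chain of `which java` and `ls -ltr` lookups can be collapsed into one step: GNU `readlink -f` follows every symlink to the real file, and removing the trailing `bin/java` leaves the directory to enter as JAVA_HOME. This is a sketch; `java_home_from` is a hypothetical helper and assumes GNU coreutils, as shipped with CentOS.

```shell
#!/usr/bin/env bash
# Sketch: resolve the java symlink chain and print the JAVA_HOME directory.
# java_home_from is a hypothetical helper; readlink -f is GNU coreutils.

java_home_from() {
  local java_bin="${1:-$(command -v java)}"
  local real
  real="$(readlink -f "$java_bin")" || return 1
  # e.g. /usr/lib/jvm/jre-1.7.0-openjdk.x86_64/bin/java -> /usr/lib/jvm/jre-1.7.0-openjdk.x86_64
  dirname "$(dirname "$real")"
}
```

Called with no argument, `java_home_from` resolves whichever `java` is first on the PATH; on the machines described above it should print something like /usr/lib/jvm/jre-1.7.0-openjdk.x86_64.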

Set Up the Ambari Server and Agent

We choose the first node to be the Ambari server and follow the steps below.

- SSH into the VM and change user to root:

sudo su -

- Clean the yum cache:

yum clean all

- Install the Ambari server:

yum install ambari-server -y

- Set up the server with the following command:

ambari-server setup

- Choose all the defaults until you reach "Customize user account for ambari-server daemon", and then choose n.

- Choose Custom JDK, option (3), for the Java JDK.

- Enter the JDK path copied above as the Path to JAVA_HOME.

- Enter n for advanced database configuration and let Ambari install.

- Start the server:

ambari-server start
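If you prefer to avoid the interactive prompts, the install, setup and start sequence can be scripted. This sketch assumes that `ambari-server setup` accepts `-s` (silent mode, taking the defaults, including the embedded database) and `-j` (path to JAVA_HOME); confirm with `ambari-server setup --help` on your Ambari version. `JAVA_HOME_PATH` is a placeholder for the JDK path noted earlier, and commands are only printed by default (`DRY_RUN=1`).

```shell
#!/usr/bin/env bash
# Sketch: non-interactive Ambari server install, assuming setup's -s and -j options.
# JAVA_HOME_PATH is a placeholder; DRY_RUN=1 (default) only prints the commands.

DRY_RUN="${DRY_RUN:-1}"
JAVA_HOME_PATH="${JAVA_HOME_PATH:-/usr/lib/jvm/jre-1.7.0-openjdk.x86_64}"

run() {
  if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi
}

run yum clean all
run yum install -y ambari-server
run ambari-server setup -s -j "$JAVA_HOME_PATH"   # silent: accept defaults, custom JDK path
run ambari-server start
```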

Install HDP Ambari Server View

- Point a browser on your local machine to the FQDN of the first machine (Ambari server):

http://<FQDN_of_first_machine>:8080/

- Log in with username admin and password admin.

- Click Get Started > Launch Install Wizard.

- Name your cluster and click Next.

- Select Stack > click HDP 2.4.

- Expand the Advanced Repository Options menu.

- Uncheck every operating system except Redhat6.

- Keep Skip Repository Base URL validation (Advanced) unchecked.

Click Next to continue.

Set Up FQDN for Target Hosts and Allow SSH Access

- SSH into the machine and display the hosts file:

cat /etc/hosts

- Copy the FQDN entries of all three machines, for instance:

node1.eastus2.cloudapp.azure.com
node2.eastus2.cloudapp.azure.com
node3.eastus2.cloudapp.azure.com

- Enter the FQDN entries into the Target Hosts textbox in Ambari.

- Copy the first machine's SSH private key:

cat ~/.ssh/id_rsa

- Paste the private key into the Host Registration Information textbox.

- Make sure the SSH User Account is root. Click Register and Confirm, and then continue.

- Confirm Hosts should succeed: each host will register and install onto the cluster.

- The hosts will be checked for potential problems; ignore any warnings and continue.

Choose Services

- HDFS

- YARN + MapReduce2

- ZooKeeper

- Ambari Metrics

Click Next

Assign Masters

Use the dropdowns to change which node each component is installed on. Ensure the following are installed on the main machine:

- NameNode

- Metrics Collector

Tweak the others as desired; the default settings are fine.

Assign Slaves and Clients

- Client: Set to the main (master node) machine, i.e. the machine set up to be the ResourceManager.

- NodeManager: Assign to all nodes. This is YARN's agent that handles the compute needs of each individual machine (node).

- DataNode: Assign to the other machines (worker nodes), so that all resources on the main node are left for resource management.

- NFSGateway: This is not relevant for this tutorial. However, feel free to assign it to the main (master node) machine. It gives clients the ability to mount HDFS and interact with it using NFS.

Click Next

Customize Services

- Make sure the NameNode and DataNode directories point to /data/hadoop/hdfs/namenode and /data/hadoop/hdfs/data. This ensures the cluster nodes use the data disk attached earlier.

Fix warning on Ambari Metrics

- From the top menu tabs (between ZooKeeper and Misc), select Ambari Metrics

- Click on Ambari Metrics

- Expand General (under Ambari Metrics). This should have a red warning flag.

- Scroll down to Grafana Admin Password (if present)

- Create a new password

- Scroll to the end of the page and click Next

Click Deploy

This step takes a while to install components on all three nodes, for instance node1.example.com, node2.example.com and node3.example.com in the example below.