
Enterprise Scale Analytics and AI - Data Domain: Stream Processing

General disclaimer: Please be aware that this template is in private preview. Therefore, expect smaller bugs and issues when working with the solution. Please submit an Issue in GitHub if you come across any issues that you would like us to fix.

DO NOT COPY - UNDER DEVELOPMENT - MS INTERNAL ONLY - Please be aware that this template is in private preview without any SLA.

Description

Enterprise Scale Analytics and AI solution pattern emphasizes self-service and follows the concept of creating landing zones for cross-functional teams. Operation and responsibility of these landing zones is handed over to the responsible teams inside the data node. The teams are free to deploy their own services within the guardrails set by Azure Policy. To scale across the landing zones more quickly and allow a shorter time to market, we use the concept of Data Domain and Data Product templates. Data Domain and Data Product templates are blueprints, which can be used to quickly spin up environments for these cross-functional teams. The teams can fork these repositories to quickly spin up environments based on their requirements. This Data Domain template deploys a set of services, which can be used for data stream processing. The template includes a set of different services for processing data streams, which allows the teams to choose their tools based on their requirements and preferences.

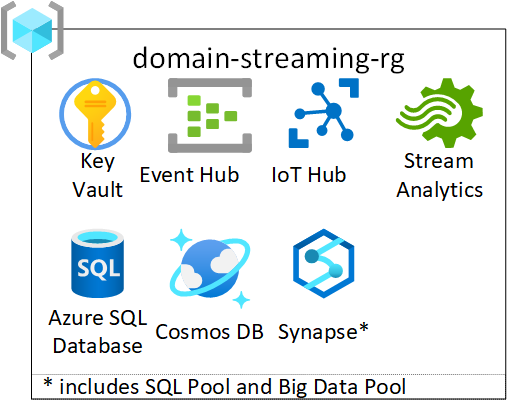

What will be deployed?

By default, all the services which come under Data Domain Streaming are enabled, and you must explicitly disable services that you don't want to be deployed.

Note: Before deploying the resources, we recommend checking the registration status of the required resource providers in your subscription. For more information, see Resource providers for Azure services.

For each Data Domain Streaming template, the following services are created:

- Key Vault

- Event Hub

- IoT Hub

- Stream Analytics

- Cosmos DB

- Synapse Workspace

- Azure SQL Database

- SQL Pool

- SQL Server

- SQL Elastic Pool

- BigData Pool

For more details regarding the services that will be deployed, please read the Domains guide in the Enterprise Scale Analytics documentation.

You have two options for deploying this reference architecture:

- Use the Deploy to Azure button for an immediate deployment

- Use GitHub Actions or Azure DevOps Pipelines for an automated, repeatable deployment

Prerequisites

Note: Please make sure you have successfully deployed a Data Management Landing Zone and a Data Landing Zone. The Data Domain relies on the Private DNS Zones that are deployed in the Data Management Template. If you have Private DNS Zones deployed elsewhere, you can also point to these. If you do not have the Private DNS Zones deployed for the respective services, this template deployment will fail. Also, this template requires subnets as specified in the prerequisites. The Data Landing Zone already creates a few subnets, which can be used for this Data Domain.

The following prerequisites are required to make this repository work:

- A Data Management Landing Zone deployed. For more information, check the Data Management Landing Zone repo.

- A Data Landing Zone deployed. For more information, check the Data Landing Zone repo.

- A resource group within an Azure subscription

- User Access Administrator or Owner access to a resource group to be able to create a service principal and role assignments for it.

- Access to a subnet with `privateEndpointNetworkPolicies` and `privateLinkServiceNetworkPolicies` set to disabled. The Data Landing Zone deployment already creates a few subnets with this configuration (subnets with suffix `-privatelink-subnet`).

- For deployment, please choose one of the regions in the Supported Regions list below.

Supported Regions:

- Asia Southeast

- Europe North

- Europe West

- France Central

- Japan East

- South Africa North

- UK South

- US Central

- US East

- US East 2

- US West 2

If you don't have an Azure subscription, create your Azure free account today.
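The subnet prerequisite above can be checked from the command line before deploying. The following is a minimal sketch, assuming the Azure CLI is installed and you are already logged in. The function names `subnet_policy` and `subnet_ready_for_private_endpoints` are hypothetical helpers and not part of this repository.

```shell
# Sketch: check that a subnet has both network policies disabled.
# Assumes the Azure CLI is installed and `az login` has already been run.

# Print one policy property of a subnet (for example "Disabled").
subnet_policy() {
  # $1 = resource group, $2 = virtual network, $3 = subnet, $4 = property name
  az network vnet subnet show \
    --resource-group "$1" \
    --vnet-name "$2" \
    --name "$3" \
    --query "$4" \
    --output tsv
}

# Return 0 if both policies are reported as "Disabled", non-zero otherwise.
subnet_ready_for_private_endpoints() {
  local pe pls
  pe="$(subnet_policy "$1" "$2" "$3" privateEndpointNetworkPolicies)"
  pls="$(subnet_policy "$1" "$2" "$3" privateLinkServiceNetworkPolicies)"
  [ "$pe" = "Disabled" ] && [ "$pls" = "Disabled" ]
}
```

For example, `subnet_ready_for_private_endpoints "{resourceGroupName}" "{virtualNetworkName}" "{subnetName}" && echo "subnet OK"` prints a confirmation only when both properties are disabled.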

Option 1: Deploy to Azure - Quickstart

| Data Domain Streaming |

|---|

Option 2: GitHub Actions or Azure DevOps Pipelines

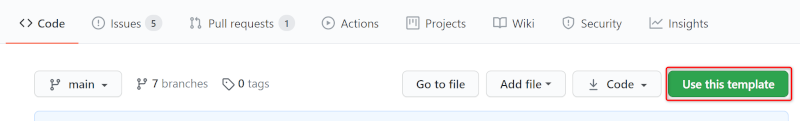

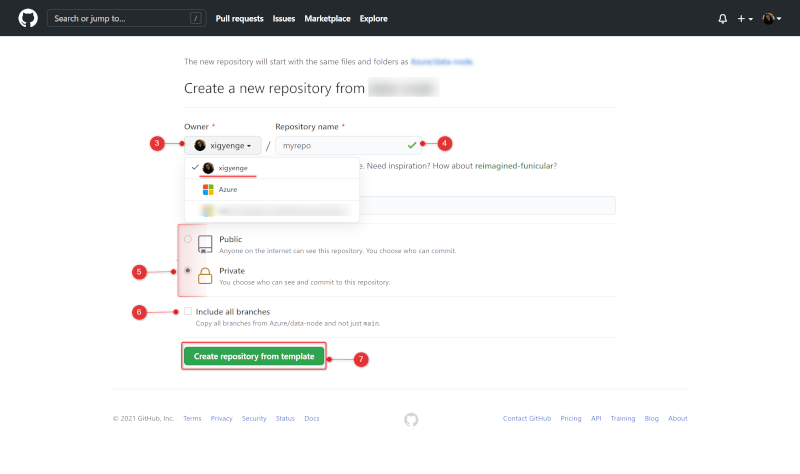

1. Create repository from a template

- On GitHub, navigate to the main page of this repository.

- Above the file list, click Use this template

- Use the Owner drop-down menu and select the account you want to own the repository.

- Type a name for your repository and an optional description.

- Choose a repository visibility. For more information, see "About repository visibility."

- Optionally, to include the directory structure and files from all branches in the template and not just the default branch, select Include all branches.

- Click Create repository from template.

2. Setting up the required Service Principal and access

A service principal with the Contributor role needs to be generated for authentication and authorization from GitHub or Azure DevOps to your Azure Data Landing Zone subscription, where the data-domain-streaming services will be deployed. Go to the Azure Portal to find the ID of your subscription. Then start the Cloud Shell or Azure CLI, log in to Azure, set the Azure context and execute the following commands to generate the required credentials:

Note: The purpose of this new Service Principal is to assign least-privilege rights. Therefore, it requires the Contributor role at a resource group scope in order to deploy the resources inside the resource group dedicated to a specific data domain. The Network Contributor role assignment is required as well in this repository in order to assign the resources to the dedicated subnet.

Azure CLI

```shell
# Replace {service-principal-name} with any name for your service principal.
az ad sp create-for-rbac \
  --name "{service-principal-name}" \
  --skip-assignment \
  --sdk-auth
```

This will generate the following JSON output:

```json
{
  "clientId": "<GUID>",
  "clientSecret": "<GUID>",
  "subscriptionId": "<GUID>",
  "tenantId": "<GUID>",
  (...)
}
```

Note: Take note of the output. It will be required for the next steps.

Now that the new Service Principal is created, it needs role assignments in order to successfully deploy all services. The required role assignments, which will be added in a later step, include:

| Role Name | Description | Scope |

|---|---|---|

| Private DNS Zone Contributor | We expect you to deploy all Private DNS Zones for all data services into a single subscription and resource group. Therefore, the service principal needs to be Private DNS Zone Contributor on the global DNS resource group which was created during the Data Management Zone deployment. This is required to deploy A-records for the respective private endpoints. | (Resource Group Scope) /subscriptions/{subscriptionId}/resourceGroups/{resourceGroupName} |

| Contributor | We expect you to deploy all data-domain-streaming services into a single resource group within the Data Landing Zone subscription. The service principal requires a Contributor role assignment on that resource group. | (Resource Group Scope) /subscriptions/{subscriptionId}/resourceGroups/{resourceGroupName} |

| Network Contributor | In order to deploy Private Endpoints to the specified privatelink-subnet which was created during the Data Landing Zone deployment, the service principal requires Network Contributor access on that specific subnet. | (Child-Resource Scope) /subscriptions/{subscriptionId}/resourceGroups/{resourceGroupName}/providers/Microsoft.Network/virtualNetworks/{virtualNetworkName}/subnets/{subnetName} |

To add these role assignments, you can use the Azure Portal or run the following commands using Azure CLI/Azure PowerShell:

Azure CLI - Add role assignments

```shell
# Get Service Principal Object ID
az ad sp list --display-name "{servicePrincipalName}" --query "[].{objectId:objectId}" --output tsv

# Add role assignment
# Resource Scope level assignment
az role assignment create \
  --assignee "{servicePrincipalObjectId}" \
  --role "{roleName}" \
  --scope "{scope}"

# Resource group scope level assignment
az role assignment create \
  --assignee "{servicePrincipalObjectId}" \
  --role "{roleName}" \
  --resource-group "{resourceGroupName}"

# For Child-Resource Scope level assignment
# TBD
```
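The Azure CLI commands above leave the child-resource scope open. One possible approach, shown here only as a sketch and not as the method this repository prescribes, is to pass the full subnet resource ID from the role table to the `--scope` parameter. The helper names `subnet_scope` and `assign_role_on_subnet` are hypothetical.

```shell
# Sketch: assign a role on a single subnet by using its resource ID as scope.
# Assumes the Azure CLI is installed and `az login` has already been run.

# Build the resource ID of a subnet (Child-Resource Scope in the table above).
subnet_scope() {
  # $1 = subscription ID, $2 = resource group, $3 = virtual network, $4 = subnet
  printf '/subscriptions/%s/resourceGroups/%s/providers/Microsoft.Network/virtualNetworks/%s/subnets/%s' \
    "$1" "$2" "$3" "$4"
}

# Assign a role to the service principal on exactly one subnet.
assign_role_on_subnet() {
  # $1 = service principal object ID, $2 = role name, $3 = subnet resource ID
  az role assignment create \
    --assignee "$1" \
    --role "$2" \
    --scope "$3"
}
```

A call could look like `assign_role_on_subnet "{servicePrincipalObjectId}" "Network Contributor" "$(subnet_scope "{subscriptionId}" "{resourceGroupName}" "{virtualNetworkName}" "{subnetName}")"`.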

Azure PowerShell - Add role assignments

```powershell
# Get Service Principal Object ID
$spObjectId = (Get-AzADServicePrincipal -DisplayName "{servicePrincipalName}").id

# Add role assignment
# For Resource Scope level assignment
New-AzRoleAssignment `
  -ObjectId $spObjectId `
  -RoleDefinitionName "{roleName}" `
  -Scope "{scope}"

# For Resource group scope level assignment
New-AzRoleAssignment `
  -ObjectId $spObjectId `
  -RoleDefinitionName "{roleName}" `
  -ResourceGroupName "{resourceGroupName}"

# For Child-Resource Scope level assignment
New-AzRoleAssignment `
  -ObjectId $spObjectId `
  -RoleDefinitionName "{roleName}" `
  -ResourceName "{subnetName}" `
  -ResourceType "Microsoft.Network/virtualNetworks/subnets" `
  -ParentResource "virtualNetworks/{virtualNetworkName}" `
  -ResourceGroupName "{resourceGroupName}"
```

3. Resource Deployment

Now that you have set up the Service Principal, you need to choose how you would like to deploy the resources. Deployment options:

GitHub Actions

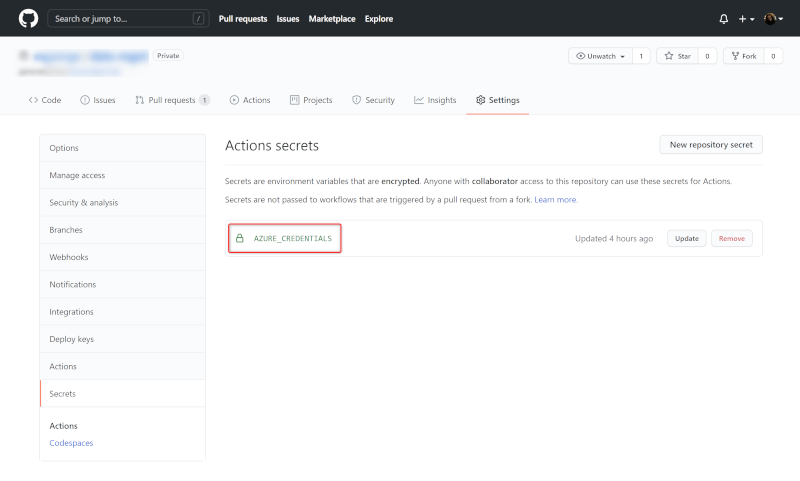

If you want to use GitHub Actions for deploying the resources, add the previous JSON output as a repository secret with the name AZURE_CREDENTIALS in your GitHub repository:

To do so, execute the following steps:

- On GitHub, navigate to the main page of the repository.

- Under your repository name, click on the Settings tab.

- In the left sidebar, click Secrets.

- Click New repository secret.

- Type the name `AZURE_CREDENTIALS` for your secret in the Name input box.

- Enter the JSON output from above as value for your secret.

- Click Add secret.
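As an alternative to the steps above, the secret can also be created from a terminal. The following sketch assumes the GitHub CLI (`gh`) is installed and authenticated, and that the JSON output was saved to a local file. The function name `set_azure_credentials_secret` and the file path are hypothetical.

```shell
# Sketch: store the service principal JSON as the AZURE_CREDENTIALS secret.
# Assumes the GitHub CLI is installed and `gh auth login` has been run.

set_azure_credentials_secret() {
  # $1 = path to a file holding the JSON output, $2 = repository as OWNER/REPO
  # The secret value is read from standard input.
  gh secret set AZURE_CREDENTIALS --repo "$2" < "$1"
}
```

For example: `set_azure_credentials_secret ./credentials.json "GitHubUserName/RepositoryName"`. Delete the local file afterwards, since it contains the client secret.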

Azure DevOps

If you want to use Azure DevOps Pipelines for deploying the resources, you need to create an Azure Resource Manager service connection. To do so, execute the following steps:

- First, you need to create an Azure DevOps Project. Instructions can be found here.

- In Azure DevOps, open the Project settings.

- Now, select the Service connections page from the project settings page.

- Choose New service connection and select Azure Resource Manager.

- On the next page select Service principal (manual).

- Select the appropriate environment to which you would like to deploy the templates. Only the default option Azure Cloud is currently supported.

- For the Scope Level, select Subscription and enter your `subscription Id` and `name`.

- Enter the details of the service principal that we generated in step 2 (Service Principal Id = clientId, Service Principal Key = clientSecret, Tenant ID = tenantId) and click on Verify to make sure that the connection works.

- Enter a user-friendly Connection name to use when referring to this service connection. Take note of the name because this will be required in the parameter update process.

- Optionally, enter a Description.

- Click on Verify and save.

More information can be found here.

4. Parameter Update Process

Note: This section applies for both Azure DevOps and GitHub Deployment

In order to deploy the ARM templates in this repository to the desired Azure subscription, you will need to modify some parameters in the forked repository. These parameters are used to update the files that are used during the deployment. Therefore, this step must not be skipped for either the Azure DevOps or the GitHub option. As updating each parameter file manually is time-consuming and error-prone, we have simplified the task with a GitHub Action workflow. You can update your deployment parameters by completing three steps:

Configure the updateParameters workflow

Note: There is only one 'updateParameters.yml', which can be found under the '.github' folder and this one will be used also for setting up the Azure DevOps Deployment

To begin, please open the .github/workflows/updateParameters.yml. In this file you need to update the environment variables. Just click on .github/workflows/updateParameters.yml and edit the following section:

```yaml
env:
  GLOBAL_DNS_RESOURCE_GROUP_ID: '/subscriptions/{subscriptionId}/resourceGroups/{resourceGroupName}'
  DATA_LANDING_ZONE_SUBSCRIPTION_ID: '{dataLandingZoneSubscriptionId}'
  DATA_DOMAIN_NAME: '{dataDomainName}' # Choose max. 11 characters. They will be used as a prefix for all services. If not unique, deployment can fail for some services.
  LOCATION: '{regionName}' # Specifies the region for all services (e.g. 'northeurope', 'eastus', etc.)
  SUBNET_ID: '/subscriptions/{subscriptionId}/resourceGroups/{resourceGroupName}/providers/Microsoft.Network/virtualNetworks/{vnetName}/subnets/{subnetName}' # Resource ID of the dedicated privatelink-subnet which was created during the Data Landing Zone deployment. Choose one which has the suffix **private-link**.
  SYNAPSE_STORAGE_ACCOUNT_NAME: '{synapseStorageAccountName}' # Choose a storage account which was previously deployed in the Data Landing Zone.
  SYNAPSE_STORAGE_ACCOUNT_FILE_SYSTEM_NAME: '{synapseStorageAccountFileSystemName}' # Choose the name of the container inside the Storage Account which was referenced in the above SYNAPSE_STORAGE_ACCOUNT_NAME variable.
  PURVIEW_ID: '/subscriptions/{subscriptionId}/resourceGroups/{resourceGroupName}/providers/Microsoft.Purview/accounts/{purviewName}' # If no Purview account is deployed, leave it empty string.
  AZURE_RESOURCE_MANAGER_CONNECTION_NAME: '{resourceManagerConnectionName}' # This is needed just for ADO Deployments.
```

The following table explains each of the parameters:

| Parameter | Description | Sample value |

|---|---|---|

| GLOBAL_DNS_RESOURCE_GROUP_ID | Specifies the global DNS resource group resource ID which gets deployed with the Data Management Landing Zone | /subscriptions/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourceGroups/my-resource-group |

| DATA_LANDING_ZONE_SUBSCRIPTION_ID | Specifies the subscription ID of the Data Landing Zone where all the resources will be deployed | xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx |

| DATA_DOMAIN_NAME | Specifies the name of your Data Domain. The value should consist of alphanumeric characters (A-Z, a-z, 0-9) and should not contain any special characters like -, _, ., etc. Special characters will be removed in the renaming process. | mydomain01 |

| LOCATION | Specifies the region where you want the resources to be deployed. Please use the same region as for your Data Landing Zone. Otherwise the deployment will fail, since the Vnet and the Private Endpoints have to be in the same region. Also check Supported Regions. | northeurope |

| SUBNET_ID | Specifies the resource ID of the dedicated privatelink-subnet which was created during the Data Landing Zone deployment. Choose one which has the suffix private-link. The subnet is already configured with privateEndpointNetworkPolicies and privateLinkServiceNetworkPolicies set to Disabled, as mentioned in the Prerequisites. | /subscriptions/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourceGroups/my-network-rg/providers/Microsoft.Network/virtualNetworks/my-vnet/subnets/{my}-privatelink-subnet |

| SYNAPSE_STORAGE_ACCOUNT_NAME | Specifies the name of the Azure Synapse Storage Account, which was previously deployed in the Data Landing Zone. Go to the {DataLandingZoneName}-storage resource group in your Data Landing Zone and copy the resource name ({DataLandingZoneName}worksa). | mydlzworksa |

| SYNAPSE_STORAGE_ACCOUNT_FILE_SYSTEM_NAME | Specifies the name of the Synapse Account filesystem, which is the name of the container inside the Storage Account that was referenced in the above SYNAPSE_STORAGE_ACCOUNT_NAME variable. | data |

| PURVIEW_ID | Specifies the resource ID of the Purview account to which the Synapse workspaces and Data Factories should connect to share data lineage and other metadata. In case you do not have a Purview account deployed at this stage, leave it empty string. | /subscriptions/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourceGroups/my-governance-rg/providers/Microsoft.Purview/accounts/my-purview |

| AZURE_RESOURCE_MANAGER_CONNECTION_NAME | Specifies the resource manager connection name in Azure DevOps. You can leave the default value if you want to use GitHub Actions for your deployment. More details on how to create the resource manager connection in Azure DevOps can be found in the Azure DevOps part of step 3 or here. | my-connection-name |
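The constraints on `DATA_DOMAIN_NAME` (alphanumeric characters only, at most 11 characters) can be checked locally before committing the workflow file. The sketch below is an illustration only: the helper names are hypothetical, and it is an assumption that the repository's renaming script strips special characters in the same way.

```shell
# Sketch: local checks for the DATA_DOMAIN_NAME value.
# These helpers are illustrative and not part of the repository's scripts.

# Remove every character that is not A-Z, a-z, or 0-9.
sanitize_domain_name() {
  printf '%s' "$1" | tr -cd 'A-Za-z0-9'
}

# Return 0 if the name is non-empty, alphanumeric, and at most 11 characters.
is_valid_domain_name() {
  case "$1" in
    ''|*[!A-Za-z0-9]*) return 1 ;;
  esac
  [ "${#1}" -le 11 ]
}
```

For example, `is_valid_domain_name "mydomain01"` succeeds, while a name containing `-` or a name longer than 11 characters is rejected.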

Execute the updateParameters workflow

After updating the values, please commit the updated version to the main branch of your repository. This will kick off a GitHub Action workflow, which will appear under the Actions tab of the main page of the repository. The Update Parameter Files workflow will update all parameters in your repository according to a pre-defined naming convention.

Configure the deployment pipeline

The workflow above will make changes to all of the ARM config files. These changes will be stored in a new branch. Once the process has finished, it will open a new pull request in your repository where you can review the changes made by the workflow. The pull request will also provide the values you need to use to configure the deployment pipeline. Please follow the instructions in the pull request to complete the parameter update process.

The instructions will guide you through the following steps:

- Create a new resource group where all the resources specific to this Data Domain Streaming will be deployed.

- Add the required role assignments for the Service Principal created in step 2 (Setting up the required Service Principal and access).

- Change the environment variables in the deployment workflow file.

Note: We are not renaming the environment variables in the workflow files because this could lead to an infinite loop of workflow runs being started.
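The resource group step listed above could be scripted roughly as follows. This is a sketch under the assumption that the Azure CLI is installed and the context is set to the Data Landing Zone subscription. The function name `create_domain_resource_group` is hypothetical, and the actual resource group name to use is given in the pull request instructions.

```shell
# Sketch: create the resource group for the data domain and print its ID.
# Assumes `az login` and `az account set` have already been run.

create_domain_resource_group() {
  # $1 = resource group name, $2 = region (use the same value as LOCATION)
  az group create \
    --name "$1" \
    --location "$2" \
    --query id \
    --output tsv
}
```

The printed resource ID has the form `/subscriptions/{subscriptionId}/resourceGroups/{resourceGroupName}` and can be used as the scope for the Contributor role assignment.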

Merge these changes back to the main branch of your repo

After following the instructions in the pull request, you can merge the pull request back into the main branch of your repository by clicking on Merge pull request. Finally, you can click on Delete branch to clean up your repository.
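The merge and branch cleanup can also be done from a terminal. The following is a minimal sketch, assuming the GitHub CLI (`gh`) is installed and authenticated. The function name `merge_parameter_update_pr` is hypothetical.

```shell
# Sketch: merge the parameter update pull request and delete its branch.
# Assumes the GitHub CLI is installed and `gh auth login` has been run.

merge_parameter_update_pr() {
  # $1 = pull request number, URL, or branch name
  # $2 = repository as OWNER/REPO
  gh pr merge "$1" --repo "$2" --merge --delete-branch
}
```

Review the changes in the pull request first, then run for example `merge_parameter_update_pr 1 "GitHubUserName/RepositoryName"` with the number of your pull request.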

5. (not applicable for GH Actions) Reference pipeline from GitHub repository in Azure DevOps Pipelines

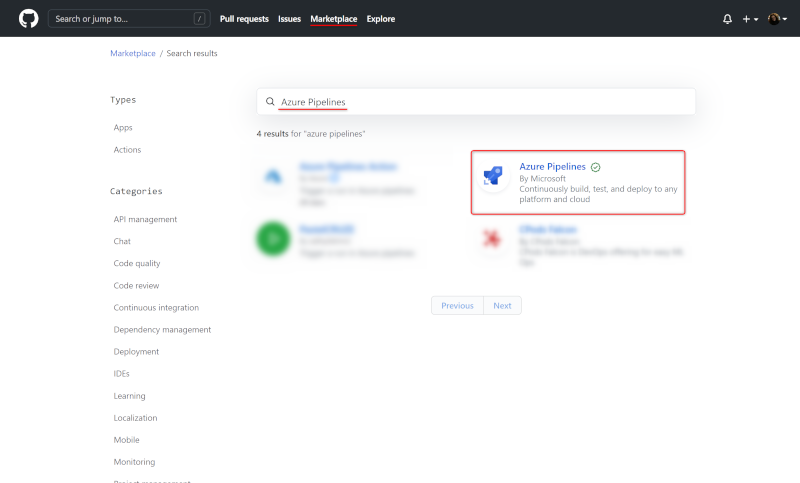

Install Azure DevOps Pipelines GitHub Application

First you need to add and install the Azure Pipelines GitHub App to your GitHub account. To do so, execute the following steps:

- Click on Marketplace in the top navigation bar on GitHub.

- In the Marketplace, search for Azure Pipelines. The Azure Pipelines offering is free for anyone to use for public repositories and free for a single build queue if you're using a private repository.

- Select it and click on Install it for free.

- If you are part of multiple GitHub organizations, you may need to use the Switch billing account dropdown to select the one into which you forked this repository.

- You may be prompted to confirm your GitHub password to continue.

- You may be prompted to log in to your Microsoft account. Make sure you log in with the one that is associated with your Azure DevOps account.

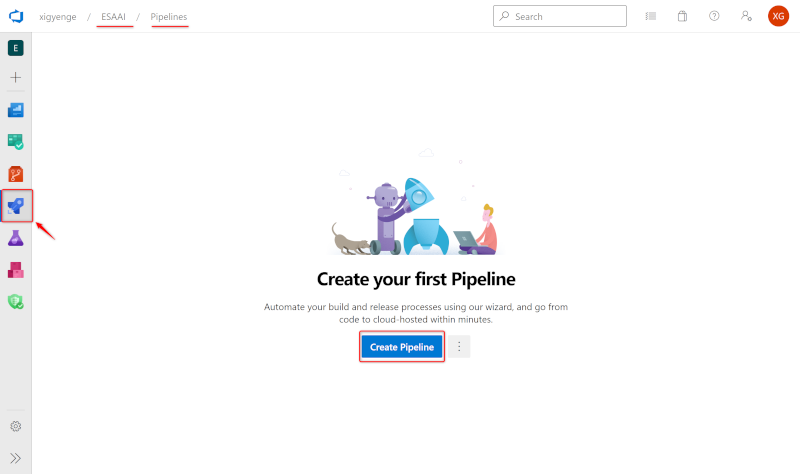

Configuring the Azure Pipelines project

As a last step, you need to create an Azure DevOps pipeline in your project based on the pipeline definition YAML file that is stored in your GitHub repository. To do so, execute the following steps:

-

Select the Azure DevOps project where you have setup your

Resource Manager Connection. -

Select Pipelines and then New Pipeline in order to create a new pipeline.

-

Choose GitHub YAML and search for your repository (e.g. "

GitHubUserName/RepositoryName"). -

Select your repository.

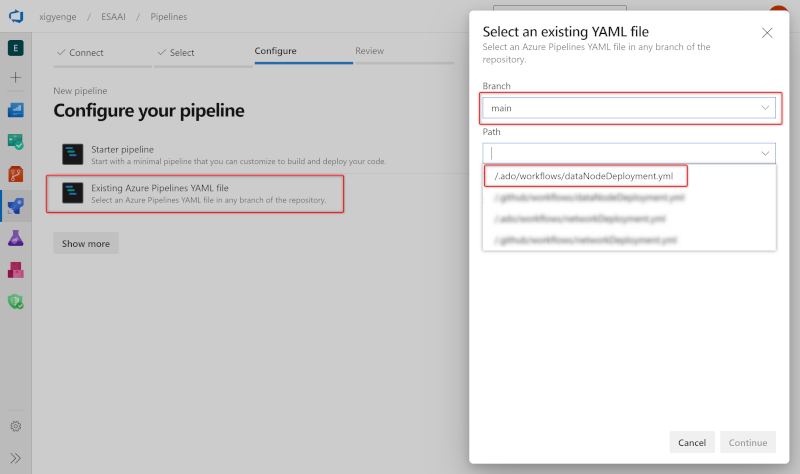

-

Click on Existing Azure Pipelines in YAML file

-

Select

mainas branch and/.ado/workflows/dataDomainDeployment.ymlas path. -

Click on Continue and then on Run.

6. Follow the workflow deployment

Congratulations! You have successfully executed all steps to deploy the template into your environment through GitHub Actions or Azure DevOps.

If you are using GitHub Actions, you can navigate to the Actions tab of the main page of the repository where you will see a workflow with the name Data Domain Deployment running. Click on it to see how it deploys one service after another. If you run into any issues, please open an issue here.

If you are using Azure DevOps Pipelines, you can navigate to the pipeline that you created as part of step 5 and monitor it as each service is deployed. If you run into any issues, please open an issue here.
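For GitHub Actions, the run can also be followed from a terminal. The sketch below assumes the GitHub CLI (`gh`) is installed and authenticated and that it is executed inside a clone of your repository. The helper names are hypothetical. The workflow name `Data Domain Deployment` is taken from the text above.

```shell
# Sketch: follow the most recent run of a GitHub Actions workflow.
# Assumes the GitHub CLI is installed and the current directory is the repo.

# Print the ID of the latest run of the given workflow.
latest_run_id() {
  # $1 = workflow name or workflow file name
  gh run list --workflow "$1" --limit 1 --json databaseId --jq '.[0].databaseId'
}

# Watch the latest run until it finishes; fails if the run fails.
watch_latest_run() {
  local id
  id="$(latest_run_id "$1")" || return 1
  [ -n "$id" ] || { echo "no runs found for workflow: $1" >&2; return 1; }
  gh run watch "$id" --exit-status
}
```

For example: `watch_latest_run "Data Domain Deployment"`.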

Documentation

Code Structure

| File/folder | Description |
|---|---|
| `.ado/workflows` | Folder for ADO workflows. The dataDomainDeployment.yml workflow shows the steps for an end-to-end deployment of the architecture. |
| `.github/workflows` | Folder for GitHub workflows. The updateParameters.yml workflow is used for the parameter update process, while the dataDomainDeployment.yml workflow shows the steps for an end-to-end deployment of the architecture. |
| `code` | Sample password generation script that will be run in the deployment workflow for resources that require a password during the deployment. |
| `configs` | Folder containing a script and configuration file that is used for the parameter update process. |
| `docs` | Resources for this README. |
| `infra` | Folder containing all the ARM templates for each of the resources that will be deployed (deploy.{resource}.json) together with their parameter files (params.{resource}.json). |
| `CODE_OF_CONDUCT.md` | Microsoft Open Source Code of Conduct. |
| `LICENSE` | The license for the sample. |
| `README.md` | This README file. |
| `SECURITY.md` | Microsoft Security README. |

Enterprise Scale Analytics and AI - Documentation and Implementation

- Documentation

- Implementation - Data Management

- Implementation - Data Landing Zone

- Implementation - Data Domain - Batch

- Implementation - Data Domain - Streaming

- Implementation - Data Product - Reporting

- Implementation - Data Product - Analytics & Data Science

Known issues

Error: MissingSubscriptionRegistration

Error Message:

```
ERROR: Deployment failed. Correlation ID: ***
  "error": ***
    "code": "MissingSubscriptionRegistration",
    "message": "The subscription is not registered to use namespace 'Microsoft.DocumentDB'. See https://aka.ms/rps-not-found for how to register subscriptions.",
    "details": [
      ***
        "code": "MissingSubscriptionRegistration",
        "target": "Microsoft.DocumentDB",
        "message": "The subscription is not registered to use namespace 'Microsoft.DocumentDB'. See https://aka.ms/rps-not-found for how to register subscriptions."
```

Solution:

This error appears when the deployment tries to create a resource type that has never been deployed in the subscription before. Before deploying, we recommend checking whether the required resource providers are registered for your subscription and, if needed, registering them through the Azure Portal, Azure PowerShell, or Azure CLI as mentioned here.
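With the Azure CLI, the check and registration could look like the following sketch. It assumes the Azure CLI is installed and the context is set to the target subscription. The function names are hypothetical, and the list of namespaces to pass is up to you. Only `Microsoft.DocumentDB` is taken from the error message above.

```shell
# Sketch: check and register resource providers for the current subscription.
# Assumes `az login` and `az account set` have already been run.

# Print the registration state of a resource provider (e.g. "Registered").
provider_state() {
  az provider show --namespace "$1" --query registrationState --output tsv
}

# Register each given provider namespace unless it is already registered.
ensure_providers_registered() {
  local ns
  for ns in "$@"; do
    if [ "$(provider_state "$ns")" = "Registered" ]; then
      echo "$ns: already registered"
    else
      echo "$ns: registering"
      az provider register --namespace "$ns"
    fi
  done
}
```

For example: `ensure_providers_registered Microsoft.DocumentDB`. Registration can take a few minutes to complete, so rerun `provider_state` before starting the deployment.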

Contributing

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

When you submit a pull request, a CLA bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repositories using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.