
Robot control through Microsoft Bot Framework and Cognitive Services

Introduction

In this lab, you will learn how to use the Microsoft Bot Framework and Microsoft Cognitive Services to develop an intelligent chat bot that controls a manipulator robot powered by ROS (Robot Operating System) and Gazebo, the robot simulator. This lab will give you the confidence to start your journey with intelligent collaborative robotics.

The lab will focus on the development of a chat bot using the Bot Framework to control a robot that performs complex tasks (such as sorting cubes). The Bot Framework allows us to develop chat bots in different programming languages and, by adding cognitive services to it, we can make our bot intelligent and include capabilities like natural language understanding, image recognition, text recognition, language translation and more. In this lab we will create a simple bot to allow users to communicate with a physical robot using natural language and computer vision.

No previous knowledge of robotics or ROS is required, and you do not need any robot hardware to complete it.

In the simulation we will use the Sawyer robot, an integrated collaborative robot (aka cobot) solution designed with embedded vision, smart swappable grippers, and high-resolution force control. The robot's purpose is to automate specific repetitive industrial tasks. It comes with an arm whose gripper can easily be replaced by one of the options available in the ClickSmart Gripper Kit.

For the simulation, we will use Gazebo, a 3D robot simulator often used in ROS (see image below).

Images: Gazebo simulation; Microsoft Bot Framework. Video: sorting cubes.

Table of Contents

- Introduction

- Requirements and Setup

- Host Machine Requirements

- Setup Lab Repository & ROS

- Setup Language Understanding

- Setup Computer Vision

- Hands on Lab

- Run Robot Simulation

- Test the 'move your arm' command

- Make the grippers move

- Show robot statistics

- Test the 'move cube' command

- Contributing

Requirements and Setup

Host Machine Requirements

This lab requires a computer with a GPU running Ubuntu Linux 16.04. The main technologies used locally are ROS and the Bot Framework. The rest of the cognitive functionality runs in the cloud.

The setup stage has three main steps that will be described in more detail in the following sections:

- Setup Lab Repository & ROS

- Setup Language Understanding

- Setup Computer Vision

Setup Lab Repository and ROS

Before downloading the repository, we need to install Python and Git. Open a terminal and type the following command:

sudo apt-get install -y git python

Navigate to your home folder and clone the Lab repository with its submodule dependencies by running the following commands on the terminal:

cd $HOME

git clone https://github.com/Microsoft/AI-Robot-Challenge-Lab.git --recurse-submodules

Now it is time to install the rest of the dependencies and build the solution. We have prepared a bundled setup script that installs them (ROS, Bot Framework) and builds the code. Open a terminal and type the following commands:

cd ~/AI-Robot-Challenge-Lab/setup

./robot-challenge-setup.sh

During the installation you might be asked for your sudo password. Please be patient; the installation will take around 30 minutes. Once it has completed, close the terminal, because the environment variables set by the installation need to be refreshed.

Setup Language Understanding

The Language Understanding (LUIS) technology enables your bot to understand a user's intent from their own words. For example, we could ask the robot to move just by typing: move arm.

LUIS uses machine learning to allow developers to build applications that can receive user input in natural language and extract meaning from it.

For this lab, we have already created a bot configuration and you just need to configure it in Azure. If you want to know more about its internals, have a look at this link to check out the contents.

Create Azure resources

First, we will create the required Azure resources for LUIS. While LUIS has a standalone portal for creating and configuring the model, it uses Azure for subscription management. Here we provide an Azure template that creates the required Azure resources without you having to enter the Azure Portal.

Alternatively, you can create these resources manually for this lab following the steps described in this link.

Configure LUIS Service

In the next steps we will show you how to configure the LUIS service that will be used in this lab:

- Log in to the LUIS portal. Use the same credentials you used to log in to Azure. If this is your first login to this portal, you will receive a welcome message. Now:

- Scroll down to the bottom of the welcome page.

- Click Create LUIS app.

- Select United States from the country list.

- Check the I agree checkbox.

- Click the Continue button.

- After that, we will get into the My Apps dashboard. Now we will import the LUIS app we have provided for this lab.

- Select your subscription from the subscription combo box.

- Also select the authoring resource you created in the previous steps. If you used the Azure template, select robotics-luis-Authoring.

- Click Import new app to open the import dialog.

- Now we are in the Import dialog.

- Select the base model from ~/AI-Robot-Challenge-Lab/resources/robotics-bot-luis-app.json.

- Click the Done button to complete the import process.

- Wait for the import to complete. When the app has been imported successfully, you should see a screen like the one shown below.

- Click the Train button and wait for it to finish. After the training, you will see a Training evaluation report, where the prediction success rate should be 100%.

- Now let's do a quick functionality check of the LUIS service directly from the dashboard page.

- Click the Test button to open the test panel.

- Type move arm and press Enter.

It should return the MoveArm intent, as shown in the image below.

- Now we will handle the application keys.

- Click the Manage option.

- Copy the LUIS Application ID (see image below) to a text editor. We'll need this App ID later on.

- Finally, let's create a prediction resource and publish it.

- Click the Azure Resources option.

- Click on Add prediction resource. You might need to scroll down to find the option.

- Select the only tenant.

- Select your subscription.

- Select the key of your LUIS resource.

- Click on Assign resource.

- Publish your application:

- Click the Publish button at the top right corner.

- Click on the Publish button next to the Production slot.

- Wait for the process to finish.

Congratulations! Your LUIS service is ready to be used. We will use it in the Hands on Lab section.

Setup Computer Vision

The cloud-based Computer Vision service provides developers with access to advanced machine learning models for analyzing images. Computer Vision algorithms can analyze the content of an image in different ways, depending on the visual features you're interested in. In this lab, we will use the Computer Vision cognitive service to identify the dominant color of an image, which tells our robot which cube color to pick.

The Computer Vision API requires a subscription key from the Azure portal. This key must be passed either as a query string parameter or in the request header. Here we provide an Azure template to create the required resources.

Alternatively, you can create these resources manually for this lab following the steps described in this link.
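The following minimal sketch illustrates the two ways of passing the key. It builds an Analyze Image request but does not send it, and it uses only Python's standard library. The regional endpoint URL, the v2.0 path and the subscription-key query parameter name are assumptions based on the public Cognitive Services REST conventions. build_analyze_request is a hypothetical helper. Use the endpoint shown for your own resource in the Azure portal.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Assumption: a regional v2.0 endpoint; replace it with the endpoint of your own resource.
ANALYZE_URL = 'https://westus.api.cognitive.microsoft.com/vision/v2.0/analyze'


def build_analyze_request(key, image_bytes, key_in_header=True):
    """Build (but do not send) an Analyze Image request that asks only for the Color feature."""
    params = {'visualFeatures': 'Color'}
    headers = {'Content-Type': 'application/octet-stream'}
    if key_in_header:
        # Option 1: pass the key in the request header.
        headers['Ocp-Apim-Subscription-Key'] = key
    else:
        # Option 2: pass the key as a query string parameter (assumed name: subscription-key).
        params['subscription-key'] = key
    url = ANALYZE_URL + '?' + urlencode(params)
    return Request(url, data=image_bytes, headers=headers, method='POST')


req = build_analyze_request('MY_KEY', b'raw image bytes', key_in_header=False)
print(req.full_url)
# https://westus.api.cognitive.microsoft.com/vision/v2.0/analyze?visualFeatures=Color&subscription-key=MY_KEY
```

Passing the request to urllib.request.urlopen would send it. The lab's own bot code, which you will edit in the Hands on Lab section, uses the header option together with requests.post.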

Hands on Lab

Run Robot Simulation

Now that the repository is downloaded and built, we can launch the simulator. First, enter the Intera SDK command line by typing the following commands:

cd ~/AI-Robot-Challenge-Lab

./intera.sh sim

Then launch the robot simulation by typing the following in the Intera command line:

source devel/setup.bash

roslaunch sorting_demo sorting_demo.launch

Wait until the Sawyer robot simulation starts. If this is the first time you open Gazebo, it may need to download some models, which can take a while. Eventually, two windows should open:

- Gazebo simulator

- Rviz visualizer

Rviz is an open-source 3D visualizer for the Robot Operating System (ROS) framework. It uses sensor data and custom visualization markers to develop robot capabilities in a 3D environment. In this lab, we will use it to see the estimated robot pose based on the information received from the joint sensors.

Sorting Cubes with Sawyer

The provided code implements a complete ROS robot application (from perception to arm motion planning and control) for the Sawyer robot. Feel free to have a look at the code in src/sorting_demo.

The main robot controller also starts a REST server that listens for action requests. For example, to watch the robot sort all the cubes into the trays, open a web browser and go to the following URL:

http://localhost:5000/start

The robot should start sorting the cubes into the three trays. You can see this in the following video:

To stop the simulation press Ctrl+C in the terminal and wait for the entire process to terminate.
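While the simulation is running, you can also drive the same REST server from a script instead of a browser. The minimal sketch below assumes that the server listens on http://localhost:5000, as in the URL above. It reuses two routes that appear in this lab: /start and /put_block_into_tray/{color}/1, which the bot code calls later. The helper names sim_url and call_sim are hypothetical.

```python
from urllib.parse import quote
from urllib.request import urlopen

# Assumption: same host and port as the browser URL used above.
SIM_API_HOST = 'http://localhost:5000'


def sim_url(*segments, host=SIM_API_HOST):
    """Join path segments onto the simulator host, URL-escaping each segment."""
    return host.rstrip('/') + '/' + '/'.join(quote(str(s), safe='') for s in segments)


def call_sim(*segments, timeout=10):
    """Send a GET request to the simulator REST server and return the response body as text."""
    with urlopen(sim_url(*segments), timeout=timeout) as resp:
        return resp.read().decode('utf-8')
```

For example, call_sim('start') is equivalent to opening http://localhost:5000/start in the browser. call_sim('put_block_into_tray', 'blue', 1) requests the same route that the bot's move-cube command uses. Escaping each segment keeps an unexpected color name from breaking the path.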

Add support for Language Understanding

Now that the LUIS service is configured in Azure and our robot is running, we can start programming our bot client in Python using the Bot Builder SDK v4.

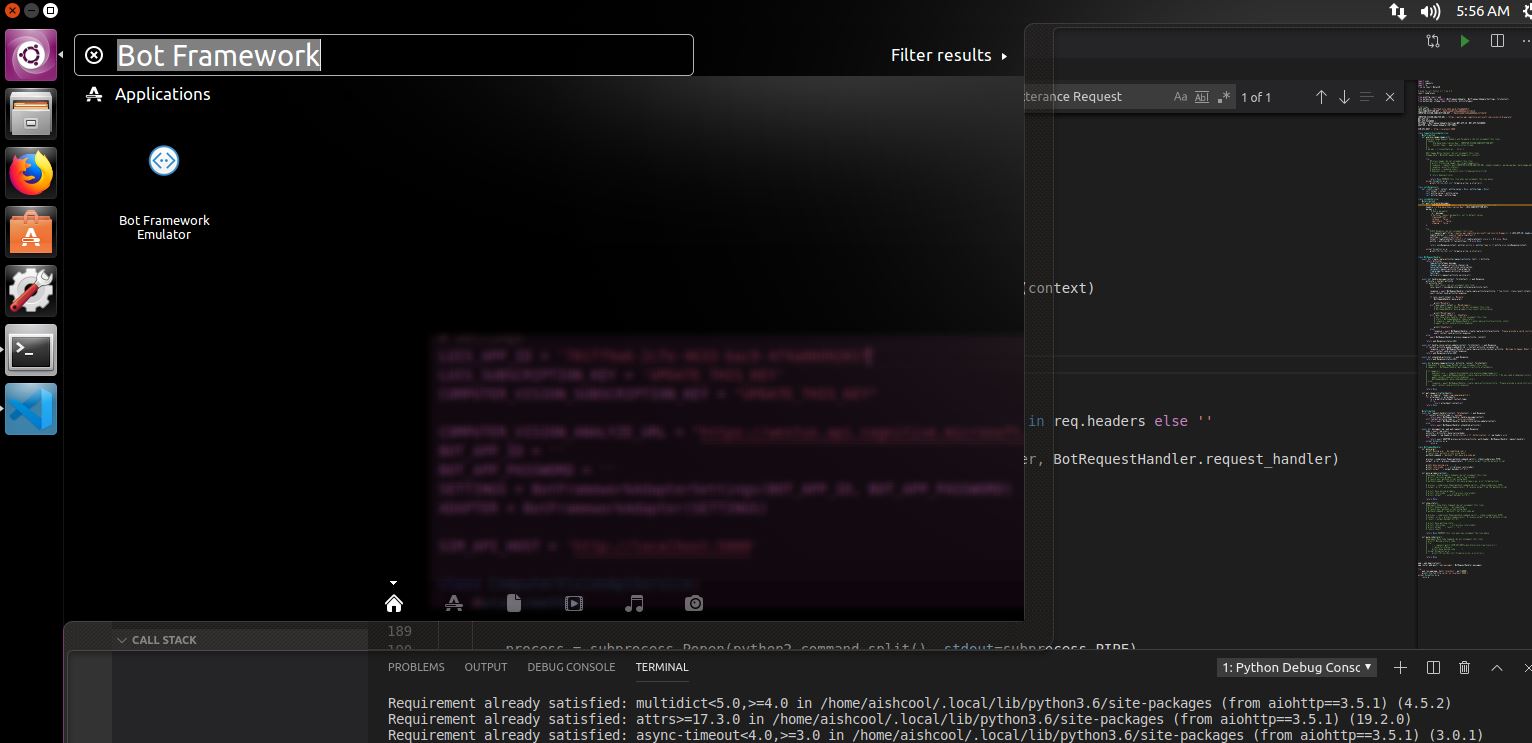

We'll run it locally using the Bot Framework Emulator and extend its functionality by using LUIS service to enable different operations for the robot.

The code base includes a method to make the physical robot move/wave the arm. This method invokes a script that uses the Intera SDK to send commands to the robot.

Let's add language understanding support to the bot.

- Open Visual Studio Code.

- Click Open Folder and select the ~/AI-Robot-Challenge-Lab/src/chatbot folder of the repository you cloned earlier.

- Click talk-to-my-robot.py to open the bot Python script.

- If prompted to install the Python extension, select Install and, once it is installed, select Reload to activate the extension.

- Click View -> Command Palette from the top menu and type Python: Select Interpreter. You should see Python 3.6 among the options; make sure to select this version.

- Search for the #Settings comment and update the LUIS App ID and Key you previously obtained:

LUIS_APP_ID = 'UPDATE_THIS_KEY'
LUIS_SUBSCRIPTION_KEY = 'UPDATE_THIS_KEY'

- Go to the BotRequestHandler class and modify the handle_message method:

- Hint: Search for the #Get LUIS result comment and uncomment the following line:

luis_result = LuisApiService.post_utterance(activity.text)

NOTE: This method is the entry point for bot messages. Here we can see how the incoming request is received and sent to LUIS, and how the resulting intent triggers specific operations. In this case it already provides support for the MoveArm intent.

- Go to the LuisApiService class and modify the post_utterance method:

- Hint: Search for the #Post Utterance Request Headers and Params comment and then uncomment the following lines:

headers = {'Ocp-Apim-Subscription-Key': LUIS_SUBSCRIPTION_KEY}
params = {
    # Query parameter
    'q': message,
    # Optional request parameters, set to default values
    'timezoneOffset': '0',
    'verbose': 'false',
    'spellCheck': 'false',
    'staging': 'false',
}

- Search for the #LUIS Response comment and then uncomment the following lines:

r = requests.get('https://westus.api.cognitive.microsoft.com/luis/v2.0/apps/%s' % LUIS_APP_ID, headers=headers, params=params)
topScoreIntent = r.json()['topScoringIntent']
entities = r.json()['entities']
intent = topScoreIntent['intent'] if topScoreIntent['score'] > 0.5 else 'None'
entity = entities[0] if len(entities) > 0 else None
return LuisResponse(intent, entity['entity'], entity['type']) if entity else LuisResponse(intent)

- Delete the line containing return None below the code above.

NOTE: Check your indentation to avoid Python compilation errors.

- Save the talk-to-my-robot.py file.
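To see what the uncommented post_utterance code does with the LUIS reply, here is a standalone sketch of the same parsing rules applied to a hand-written LUIS v2 payload. LuisResult is a hypothetical stand-in for the lab's LuisResponse class, and the sample values are illustrative. Only the field names topScoringIntent, score, entities, entity and type, plus the 0.5 threshold, come from the code above.

```python
from collections import namedtuple

# Hypothetical stand-in for the lab's LuisResponse class (intent, entity value, entity type).
LuisResult = namedtuple('LuisResult', ['intent', 'entity_value', 'entity_type'])


def parse_luis_v2(payload, threshold=0.5):
    """Apply the same rules as post_utterance to an already-decoded LUIS v2 JSON reply."""
    top = payload['topScoringIntent']
    # Low-confidence predictions fall back to the 'None' intent, as in the lab code.
    intent = top['intent'] if top['score'] > threshold else 'None'
    entities = payload.get('entities', [])
    if entities:
        # Only the first entity is kept, matching entities[0] in the lab code.
        first = entities[0]
        return LuisResult(intent, first['entity'], first['type'])
    return LuisResult(intent, None, None)


# Hand-written example reply for the utterance 'move arm' (illustrative values).
sample = {
    'query': 'move arm',
    'topScoringIntent': {'intent': 'MoveArm', 'score': 0.97},
    'entities': [],
}
print(parse_luis_v2(sample))  # LuisResult(intent='MoveArm', entity_value=None, entity_type=None)
```

Keeping the parsing in a pure function like this makes it easy to check the threshold and entity handling without calling the LUIS endpoint.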

Test the 'move your arm' command

The bot emulator provides a convenient way to interact and debug your bot locally. Let's use the emulator to send requests to our bot:

- In VS Code, open the Explorer in the left pane, find the CHATBOT folder and expand it.

- Right-click the talk-to-my-robot.py file.

- Select Run Python File in Terminal to execute the bot script. Install the VS Code Python extension if that option is not available.

NOTE: Dismiss the "Linter pylint is not installed" alert if prompted. If you get compilation errors, make sure you selected the Python 3.6 interpreter in the previous section and that your indentation is correct.

- Open the Bot Framework Emulator app.

- Click Open Bot and select the SawyerBot.bot file from your ~/AI-Robot-Challenge-Lab/src/chatbot directory.

NOTE: The V4 Bot Emulator gives us the ability to create bot configuration files for simpler connectivity when debugging.

- Relaunch the simulator if it is closed:

cd ~/AI-Robot-Challenge-Lab
./intera.sh sim

Then launch the robot simulation by typing the following in the Intera command line:

source devel/setup.bash
roslaunch sorting_demo sorting_demo.launch

- In the Bot Framework Emulator, type move your arm and press Enter.

- Return to Gazebo and wait for the simulator to move the arm.

- Stop the bot by pressing CTRL+C in VS Code Terminal.

Make the grippers move

- Go to the BotRequestHandler class.

- Modify the handle_message method:

- Hint: Search for the #Set Move Grippers Handler comment and then uncomment the following line:

BotCommandHandler.move_grippers(luis_result.entity_value)

- Go to the BotCommandHandler class.

- Hint: Search for the #Implement Move Grippers Command comment and uncomment the following lines. Make sure that Popen receives the command string itself, as below, rather than python2_command.split(). With shell=True, only the first item of a list (bash) is run as the shell command, so the script would never start.

print(f'{action} grippers... wait a few seconds')
# launch your python2 script using bash
python2_command = "bash -c 'source ~/AI-Robot-Challenge-Lab/devel/setup.bash; python2.7 bot-move-grippers.py -a {}'".format(action)
process = subprocess.Popen(python2_command, stdout=subprocess.PIPE, shell=True)
output, error = process.communicate()  # receive output from the python2 script
print('done moving grippers . . .')
print('returncode: ' + str(process.returncode))
print('output: ' + output.decode("utf-8"))

NOTE: Check your indentation to avoid Python compilation errors.

- Save the talk-to-my-robot.py file.
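The bot runs on Python 3.6, while bot-move-grippers.py and bot-stats-node.py are run with python2.7 after sourcing the ROS workspace. That is why the lab launches them through bash -c. The sketch below shows the same idea without shell=True. The hypothetical helpers build the argument list explicitly and quote the script arguments with shlex.quote. The timeout value is an arbitrary assumption.

```python
import shlex
import subprocess

# Workspace setup script used throughout this lab.
SETUP_BASH = '~/AI-Robot-Challenge-Lab/devel/setup.bash'


def build_python2_command(script, *args, setup=SETUP_BASH):
    """Return an argv list that sources the ROS workspace and then runs a Python 2.7 script."""
    quoted = ' '.join(shlex.quote(str(a)) for a in (script,) + args)
    # The setup path is left unquoted on purpose so that bash expands the leading '~'.
    return ['bash', '-c', 'source {}; python2.7 {}'.format(setup, quoted)]


def run_python2_script(script, *args, timeout=120):
    """Run the script, wait for it to finish and return (returncode, output text)."""
    completed = subprocess.run(
        build_python2_command(script, *args),
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,   # fold error messages into the same output stream
        universal_newlines=True,    # decode bytes to str (works on Python 3.6)
        timeout=timeout,
    )
    return completed.returncode, completed.stdout
```

For example, run_python2_script('bot-move-grippers.py', '-a', 'open') would return the exit code and the combined output. This assumes the working directory contains the script, as the lab's relative path implies. Because the argument list is passed directly, no outer shell splits the command, which avoids the pitfall described in the hint above.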

Test 'make the grippers move' command

- Right-click the talk-to-my-robot.py file from the Explorer in VS Code.

- Select Run Python File in Terminal to execute the bot script.

- Go back to the Bot Framework Emulator app.

- Click Start Over to start a new conversation.

- Type close grippers and press Enter.

- Return to Gazebo and wait for the simulator to move the grippers.

- Go back to the Bot Framework Emulator app.

- Type open grippers and press Enter.

- Return to Gazebo and wait for the simulator to move the grippers.

- Stop the bot by pressing CTRL+C in VS Code Terminal.

Show robot statistics

- Go to the BotRequestHandler class.

- Modify the handle_message method:

- Search for the #Set Show Stats Handler comment and then uncomment the following lines:

stats = BotCommandHandler.show_stats()
response = await BotRequestHandler.create_reply_activity(activity, stats)
await context.send_activity(response)

- Go to the BotCommandHandler class.

- Search for the #Set Show Stats Command comment and then uncomment the following lines:

print('Showing stats... do something')
# launch your python2 script using bash
python2_command = "bash -c 'source ~/AI-Robot-Challenge-Lab/devel/setup.bash; python2.7 bot-stats-node.py'"
process = subprocess.Popen(python2_command, stdout=subprocess.PIPE, shell=True)
output, error = process.communicate()  # receive output from the python2 script
result = output.decode("utf-8")
print('done getting state . . .')
print('returncode: ' + str(process.returncode))
print('output: ' + result + '\n')
return result

- Delete the line containing return None below the code above.

NOTE: Check your indentation to avoid Python compilation errors.

- Save the talk-to-my-robot.py file.
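The show_stats code above returns the script output even when the script exits with an error. The hypothetical helper below sketches one way to turn the exit code and the raw stdout bytes from process.communicate() into the reply text. It falls back to an error message on a non-zero exit code. The wording of the messages is our own.

```python
def format_stats_reply(returncode, output):
    """Build the chat reply from the stats script's exit code and its raw stdout bytes."""
    # Tolerate a missing stdout (None) and undecodable bytes.
    text = (output or b'').decode('utf-8', errors='replace').strip()
    if returncode != 0:
        return 'Sorry, I could not read the robot statistics (exit code {}).'.format(returncode)
    return text or 'The stats script finished but printed nothing.'
```

Inside show_stats, the final line could then become return format_stats_reply(process.returncode, output), so the emulator shows a clear message instead of partial output.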

Test 'show robot statistics' command

- Right-click the talk-to-my-robot.py file from the Explorer in VS Code.

- Select Run Python File in Terminal to execute the bot script.

- Return to the Bot Framework Emulator app.

- Click Start Over to start a new conversation.

- Type show stats and press Enter.

- Wait a few seconds for your bot's response; it will display the stats in the emulator.

- Stop the bot by pressing CTRL+C in the VS Code terminal.

Making Your Robot Intelligent with Microsoft AI

We will use Computer Vision to extract information from an image and the Intera SDK to send commands to our robot. For this scenario we'll extract the dominant color from an image and the robot will pick up a cube of the color specified.

Add Computer Vision to your script

- Return to Visual Studio Code.

- Open the talk-to-my-robot.py file.

- Search for the #Settings comment and update the Computer Vision key you previously obtained:

COMPUTER_VISION_SUBSCRIPTION_KEY = 'UPDATE_THIS_KEY'

- Go to the BotRequestHandler class.

- Search for the #Implement Process Image Method comment and then uncomment the following lines:

image_url = BotRequestHandler.get_image_url(activity.attachments)
if image_url:
    dominant_color = ComputerVisionApiService.analyze_image(image_url)
    response = await BotRequestHandler.create_reply_activity(activity, f'Do you need a {dominant_color} cube? Let me find one for you!')
    await context.send_activity(response)
    BotCommandHandler.move_cube(dominant_color)
else:
    response = await BotRequestHandler.create_reply_activity(activity, 'Please provide a valid instruction or image.')
    await context.send_activity(response)

- Go to the ComputerVisionApiService class.

- Modify the analyze_image method:

- Search for the #Analyze Image Request Headers and Parameters comment and then uncomment the following lines:

headers = {
    'Ocp-Apim-Subscription-Key': COMPUTER_VISION_SUBSCRIPTION_KEY,
    'Content-Type': 'application/octet-stream'
}
params = {'visualFeatures': 'Color'}

- Search for the #Get Image Bytes Content comment and then uncomment the following line:

image_data = BytesIO(requests.get(image_url).content)

- Search for the #Process Image comment and then uncomment the following lines:

print(f'Processing image: {image_url}')
response = requests.post(COMPUTER_VISION_ANALYZE_URL, headers=headers, params=params, data=image_data)
response.raise_for_status()
analysis = response.json()
dominant_color = analysis["color"]["dominantColors"][0]
return dominant_color

- Delete the line containing return None below the code above.

- Go to the BotCommandHandler class.

- Search for the #Move Cube Command comment and uncomment the following lines. Make the except clause catch requests errors and print their message, as below. For these exceptions errno and strerror are typically None, and other exception types may not define them at all.

print(f'Moving {color} cube...')
try:
    r = requests.get(f'{SIM_API_HOST}/put_block_into_tray/{color}/1')
    r.raise_for_status()
    print('done moving cube . . .')
except requests.exceptions.RequestException as e:
    print(f'Error moving cube: {e}')

NOTE: Check your indentation to avoid Python compilation errors.

- Save the talk-to-my-robot.py file.
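With visualFeatures=Color, the Analyze Image response contains a color object, and the code above takes the first entry of its dominantColors list. The sketch below applies that step to a hand-written response. It adds a guard for an empty list, where the uncommented code would raise IndexError. The sample values and the optional known_colors filter are illustrative assumptions, and pick_dominant_color is a hypothetical helper. Check which cube colors your simulation actually contains before filtering.

```python
def pick_dominant_color(analysis, known_colors=None):
    """Return the first dominant color, optionally restricted to known_colors, or None."""
    colors = analysis.get('color', {}).get('dominantColors', [])
    if known_colors is not None:
        # Skip colors that do not correspond to any cube in the scene (e.g. a white background).
        colors = [c for c in colors if c in known_colors]
    return colors[0] if colors else None


# Hand-written response for a picture of a blue cube on a white background (illustrative values).
sample_analysis = {
    'color': {
        'dominantColorForeground': 'Blue',
        'dominantColorBackground': 'White',
        'dominantColors': ['Blue', 'White'],
        'accentColor': '1A3DB4',
        'isBwImg': False,
    }
}
print(pick_dominant_color(sample_analysis))  # Blue
```

Returning None instead of raising an exception lets the bot fall back to its "Please provide a valid instruction or image." reply rather than failing on an unexpected image.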

Test the 'move cube' command

- Right-click the talk-to-my-robot.py file from the Explorer in VS Code.

- Select Run Python File in Terminal to execute the bot script.

- Go back to the Bot Framework Emulator app.

- Click Start Over to start a new conversation.

- Click the upload button in the bottom-left corner to upload an image.

- Select the file ~/AI-Robot-Challenge-Lab/resources/Images/cube-blue.png.

- Return to Gazebo and wait for the simulator to move the requested cube.

- Go back to the Bot Framework Emulator app.

- Select another image of a different color and check the simulator to verify which cube it moved.

Contributing

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.microsoft.com.

When you submit a pull request, a CLA-bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., label, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.