
AI Reference Architectures

This repository contains the recommended ways to train and deploy machine learning models on Azure. The tutorials range from running massively parallel hyperparameter tuning with Hyperdrive to deploying deep learning models on Kubernetes. Each tutorial takes you step by step through training or deploying your model. If you are unsure which service to use and when, see the FAQ below.

For further documentation on the reference architectures, please look here.

Getting Started

This repository is organized as a set of Git submodules, so you can pull either all of the tutorials or only the ones you want. To pull all of the tutorials, run:

git clone --recurse-submodules https://github.com/Microsoft/AIReferenceArchitectures.git

If you have a Git version older than 2.13, run:

git clone --recursive https://github.com/Microsoft/AIReferenceArchitectures.git
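If you only want some of the tutorials, or are not sure which of the two clone flags your Git version supports, both choices can be wrapped in a small helper script. This is a minimal sketch, not part of the repository: the function names `git_at_least_2_13`, `recurse_flag` and `clone_one_tutorial` are hypothetical, and the argument to `clone_one_tutorial` is assumed to be one of the tutorial submodule directories at the repository root (for example `DeployMLModelKubernetes`).

```shell
#!/bin/sh
# Hypothetical helpers for cloning the AI Reference Architectures repository.

REPO_URL="https://github.com/Microsoft/AIReferenceArchitectures.git"

# Succeeds if the version string in $1 (e.g. "2.39.2") is 2.13 or newer.
git_at_least_2_13() {
  major="${1%%.*}"     # text before the first dot
  rest="${1#*.}"       # text after the first dot
  minor="${rest%%.*}"  # text between the first and second dots
  [ "$major" -gt 2 ] || { [ "$major" -eq 2 ] && [ "$minor" -ge 13 ]; }
}

# Prints the clone flag that pulls all submodules for the given Git version.
recurse_flag() {
  if git_at_least_2_13 "$1"; then
    echo "--recurse-submodules"
  else
    echo "--recursive"
  fi
}

# Clones the parent repository, then initializes only the submodule named in $1.
# The body runs in a subshell so the caller's working directory is unchanged.
clone_one_tutorial() (
  git clone "$REPO_URL" &&
    cd AIReferenceArchitectures &&
    git submodule update --init "$1"
)
```

For example, `git clone "$(recurse_flag "$(git --version | awk '{print $3}')")" "$REPO_URL"` clones everything with the flag that matches the installed Git, and `clone_one_tutorial DeployMLModelKubernetes` pulls a single tutorial. Both commands need network access.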

Tutorials

| Tutorial | Environment | Description | Status |
|---|---|---|---|
| Deploy Deep Learning Model on Kubernetes | Python GPU | Deploy image classification model on Kubernetes or IoT Edge for real-time scoring using Azure ML | |
| Deploy Classic ML Model on Kubernetes | Python CPU | Train LightGBM model locally using Azure ML, deploy on Kubernetes or IoT Edge for real-time scoring | |
| Hyperparameter Tuning of Classical ML Models | Python CPU | Train LightGBM model locally and run hyperparameter tuning using Hyperdrive in Azure ML | |
| Deploy Deep Learning Model on Pipelines | Python GPU | Deploy PyTorch style transfer model for batch scoring using Azure ML Pipelines | |
| Deploy Classic ML Model on Pipelines | Python CPU | Deploy one-class SVM for anomaly detection with batch scoring using Azure ML Pipelines | |
| Deploy R ML Model on Kubernetes | R CPU | Deploy ML model for real-time scoring on Kubernetes | |
| Deploy R ML Model on Batch | R CPU | Deploy forecasting model for batch scoring using Azure Batch and doAzureParallel | |
| Deploy Spark ML Model on Databricks | Spark CPU | Deploy a classification model for batch scoring using Databricks | |
| Train Distributed Deep Learning Model | Python GPU | Distributed training of ResNet50 model using Batch AI | |

Requirements

The tutorials have mainly been tested on Linux VMs in Azure. Each tutorial may have slightly different requirements; for example, some of the deep learning tutorials require a GPU. For more details, please consult the README in each tutorial.

Reporting Issues

Please report issues with each tutorial on that tutorial's own GitHub page.

FAQ

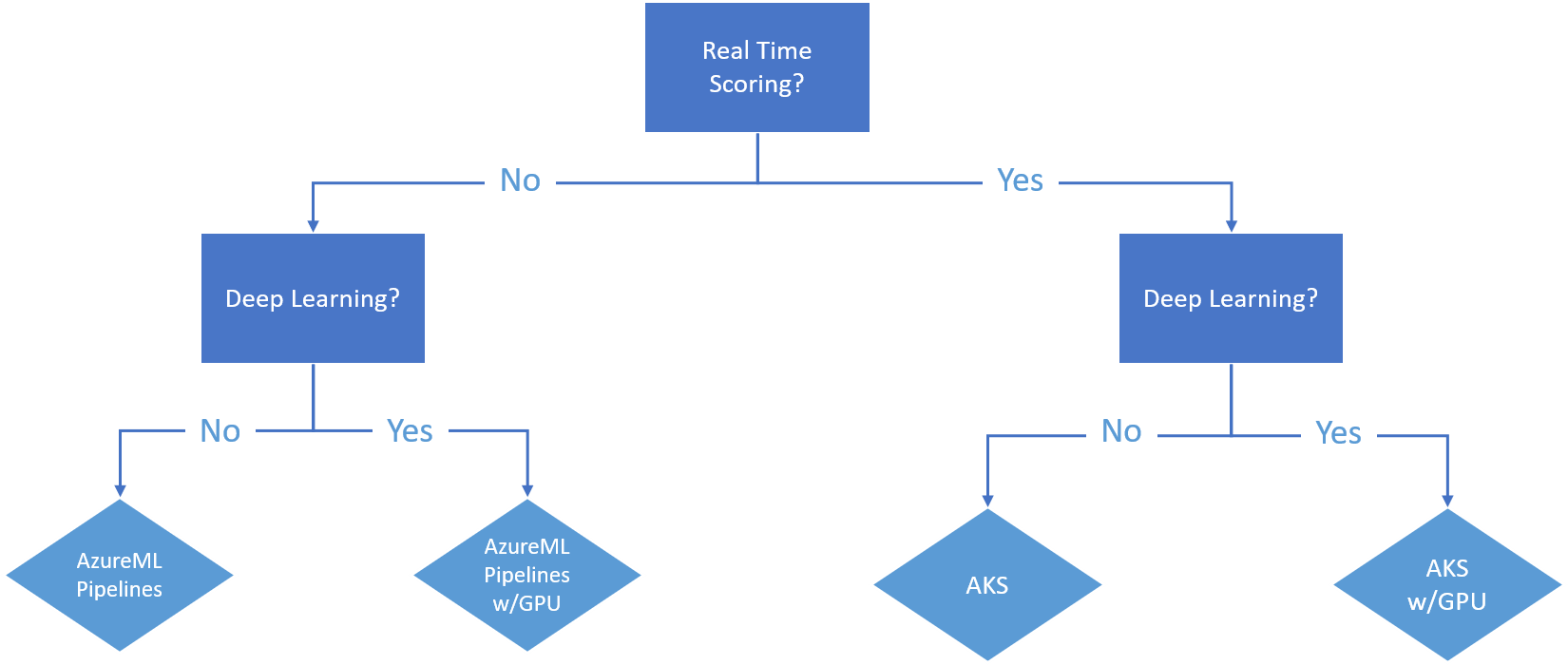

What service should I use for deploying models in Python?

When deploying ML models in Python, there are two core questions: whether scoring must happen in real time, and whether the model is a deep learning model. For deep learning models that require real-time scoring, we recommend Azure Kubernetes Service (AKS) with GPUs; for a tutorial, see AKS w/GPU. For batch scoring of deep learning models, we recommend AzureML Pipelines with GPUs; for a tutorial, see AzureML Pipelines w/GPU. For models that are not deep learning models, we recommend the same services without GPUs. For tutorials on deploying classical ML models, see AKS for real-time scoring and AzureML Pipelines for batch scoring.
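The two questions above form a small decision table. The sketch below encodes it as a hypothetical shell function, `recommend_deploy_service`, which is not part of any tutorial. It assumes the scoring mode is passed as `realtime` or `batch` and the model type as `deep` or `classic`; those argument values are made up for illustration, and the printed text simply restates this FAQ's recommendation.

```shell
#!/bin/sh
# Hypothetical helper that encodes the deployment FAQ as a lookup table.
# $1: scoring mode, "realtime" or "batch"
# $2: model type, "deep" (deep learning) or "classic" (classical ML)
recommend_deploy_service() {
  case "$1:$2" in
    realtime:deep)    echo "Azure Kubernetes Service (AKS) with GPUs" ;;
    batch:deep)       echo "AzureML Pipelines with GPUs" ;;
    realtime:classic) echo "Azure Kubernetes Service (AKS) without GPUs" ;;
    batch:classic)    echo "AzureML Pipelines without GPUs" ;;
    *)
      # Any other combination is outside the FAQ, so report usage and fail.
      echo "usage: recommend_deploy_service realtime|batch deep|classic" >&2
      return 1
      ;;
  esac
}
```

For example, `recommend_deploy_service batch deep` prints `AzureML Pipelines with GPUs`, which corresponds to the AzureML Pipelines w/GPU tutorial.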

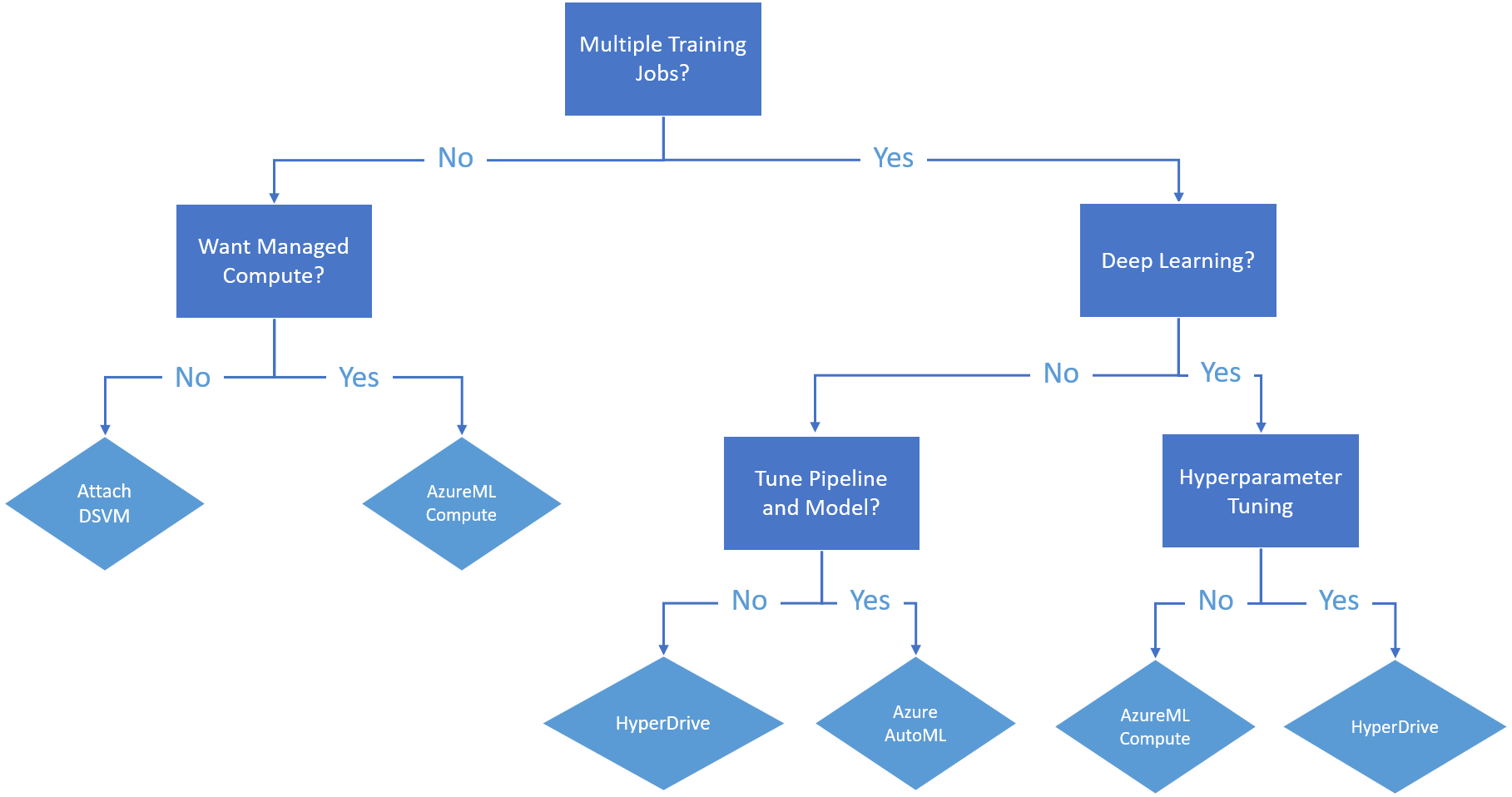

What service should I use to train a model in Python?

There are many options for training ML models in Python on Azure. The most straightforward is to train your model on a Data Science Virtual Machine (DSVM), either in local mode directly on the VM or by attaching the VM to AzureML as a compute target. If you want AzureML to manage the compute for you and scale it up and down depending on whether jobs are waiting in the queue, you should use AzureML Compute.

If you are going to run multiple jobs, for hyperparameter tuning or other purposes, we recommend using Hyperdrive, Azure automated ML or AzureML Compute, depending on your requirements. For a tutorial on how to use Hyperdrive, go here.
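The training guidance can be summarized the same way. The sketch below is a hypothetical shell function, `recommend_training_compute`, taking two assumed `yes`/`no` answers: whether you will run many jobs (such as a hyperparameter sweep), and whether AzureML should manage and autoscale the compute. Checking the multi-job case first is our reading of the FAQ, and because the choice among Hyperdrive, automated ML and AzureML Compute depends on your requirements, the function deliberately does not narrow it further.

```shell
#!/bin/sh
# Hypothetical helper that encodes the training FAQ.
# $1: "yes" if you will run many jobs (e.g. hyperparameter tuning), else "no"
# $2: "yes" if AzureML should manage and autoscale the compute, else "no"
recommend_training_compute() {
  if [ "$1" = "yes" ]; then
    # Many jobs: the FAQ leaves the final choice to your requirements.
    echo "Hyperdrive, Azure automated ML, or AzureML Compute"
  elif [ "$2" = "yes" ]; then
    # Single job, but you want managed compute that scales with the queue.
    echo "AzureML Compute"
  else
    # Simplest option: a DSVM, used directly or as an AzureML compute target.
    echo "DSVM (local mode, or attached to AzureML as a compute target)"
  fi
}
```

For example, `recommend_training_compute no yes` prints `AzureML Compute`.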

Recommend a Scenario

If there is a particular scenario you would like to see a tutorial for, please fill in a scenario suggestion.

Ongoing Work

We are constantly developing new AI reference architectures using the Microsoft AI Platform. Ongoing projects include IoT Edge scenarios and model scoring on mobile devices. To follow the progress and any new reference architectures, please go to the AI section of this link.

Contributing

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.microsoft.com.

When you submit a pull request, a CLA-bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., label, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.