|

|

||

|---|---|---|

| .github/workflows | ||

| Deployment | ||

| images/readMe | ||

| .gitattributes | ||

| .gitignore | ||

| CODE_OF_CONDUCT.md | ||

| ConversationalDataFormat.md | ||

| Extensibility.md | ||

| LICENSE | ||

| README.md | ||

| SECURITY.md | ||

| SolutionArchitecture.md | ||

| Troubleshooting.md | ||

| global.json | ||

README.md

Conversation knowledge mining solution accelerator

MENU: USER STORY | SIMPLE DEPLOY | SUPPORTING DOCUMENTATION | CUSTOMER TRUTH

User story

Overview

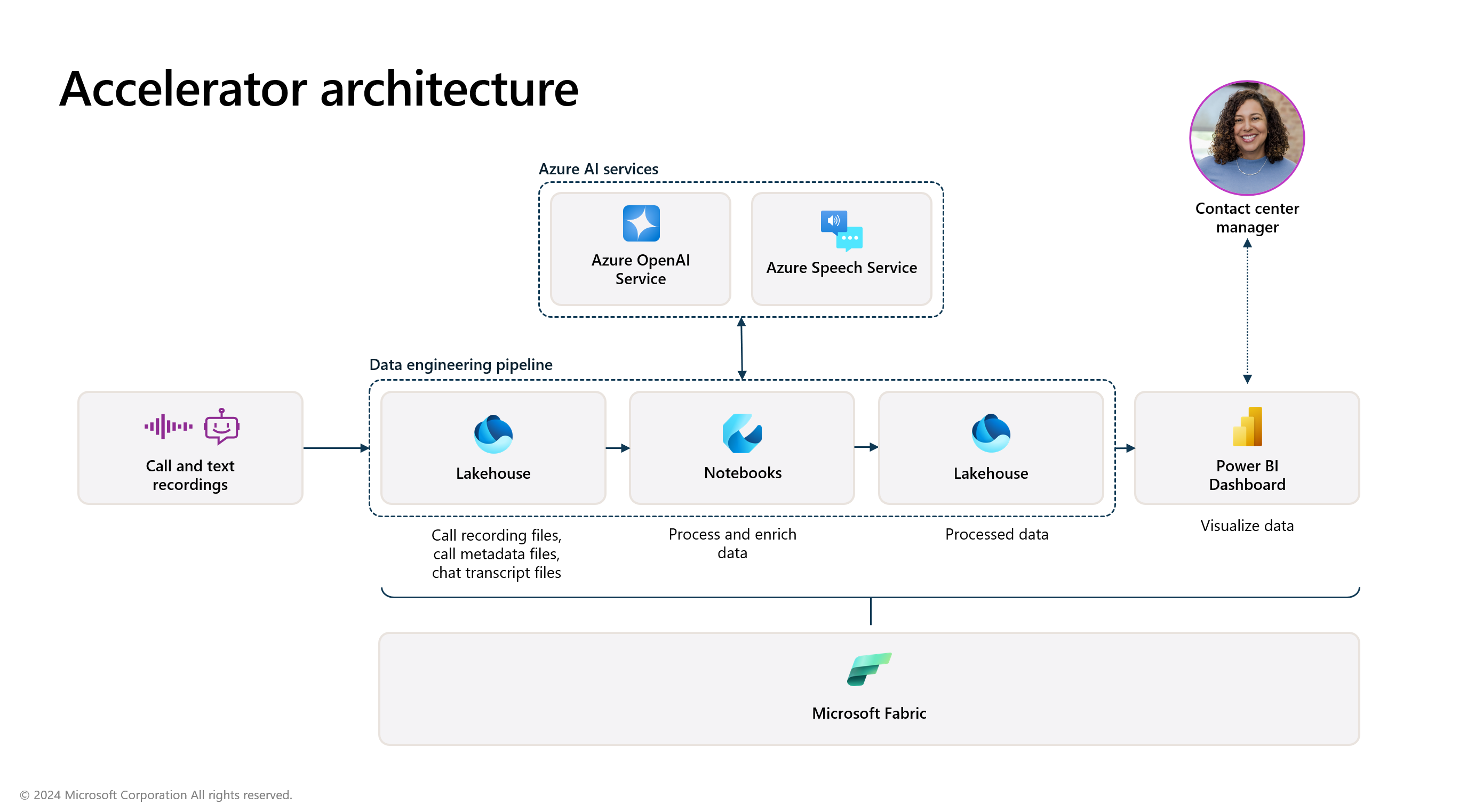

This solution accelerator enables customers with large amounts of conversational data to use generative AI to surface key phrases alongside operational metrics. This enables users to discover valuable insights for targeted business impact.

Version history: An updated version of the Conversation Knowledge Mining solution accelerator was published on 08/15/2024. If you deployed the accelerator prior to that date, please see “Version history” in the Supporting documentation section.

Technical key features

- Data processing: Microsoft Fabric processes both audio and conversation files at scale, leveraging its benefits for efficient and scalable data handling

- Summarization and key phrase extraction: Azure OpenAI is used to summarize long conversations into concise paragraphs and extract relevant key phrases

- Speech transcription and diarization: Azure Speech is used to transcribe audio files, including speaker diarization for post-call analytics. Diarization is the process of recognizing and separating individual speakers into mono-channel audio data

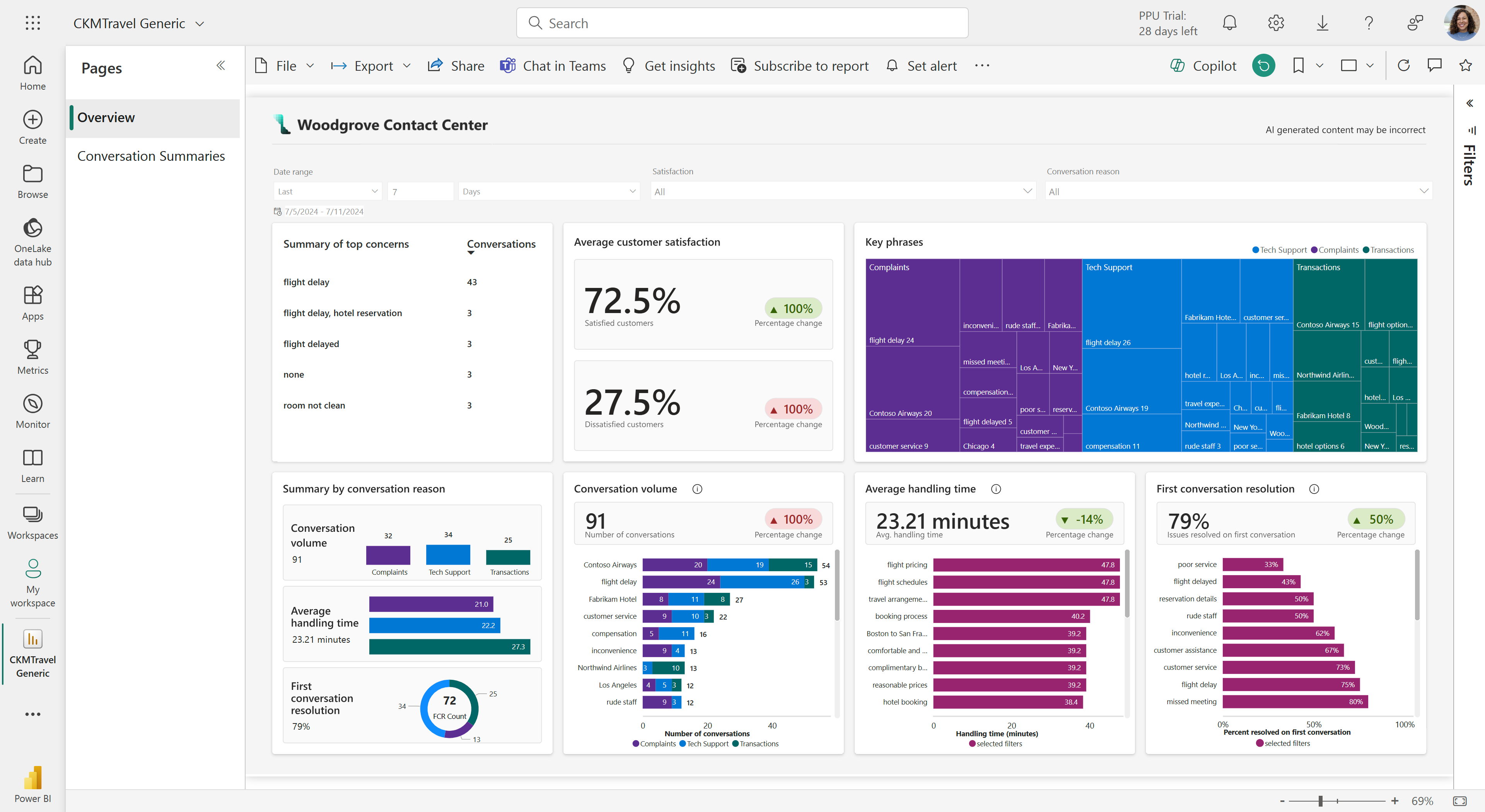

- Analytics dashboard: Power BI is used to visualize the correlation between operational metrics and AI-generated conversational data

Use case / scenario

A contact center manager reviews contact center performance to ensure resources are being used efficiently. To identify areas for improvement, they need to understand the correlation between conversational and operational data.

The contact center manager uses their dashboard to identify how LLM-generated conversational analytics and insights are impacting operations to make an informed decision about how to improve their center’s performance.

Target end users

Company personnel (employees, executives) looking to gain conversational insights in correlation with operational Contact Center metrics would leverage this accelerator to find what they need quickly.

Business value

- Conversation analysis: Generative AI analyzes call transcripts, summarizes content, identifies and aggregates key phrases for data visualization

- Automated customer satisfaction: Generative AI determines the post-call satisfaction rating of a customer’s experience with their agent

- Operational clarity: Relevant metrics such as call volume, handling time, and call resolution are pulled from the same call logs for operational data visualization

- Unified data: Unstructured (call transcripts) and structured (operational metrics) data are both analyzed and visualized within the same application

- Targeted decision enablement: Enable agents and managers to achieve glanceable insight recognition, corollary information analysis, and accelerated decision making

Products used/licenses required

-

Azure Speech Service

-

Azure OpenAI

-

Microsoft Fabric Capacity

-

The user deploying the template must have permission to create resources and resource groups.

Solution accelerator architecture

Simple deploy

How to install/deploy

-

Deploy Azure resources

Click the following deployment button to create the required resources for this accelerator directly in your Azure Subscription.-

Most fields will have a default name set already. You will need to update the following Azure OpenAI settings:

-

Region - the region where the resources will be created in

-

Solution Prefix - provide a 6 alphanumeric value that will be used to prefix resources

-

Location - location of resources, by default it will use the resource group's location

-

-

-

Create Fabric workspace

- Navigate to (Fabric Workspace)

- Click on Workspaces from left Navigation

- Click on + New Workspace

- Provide Name of Workspace

- Provide Description of Workspace (optional)

- Click Apply

- Open Workspace

- Retrieve Workspace ID from URL, refer to documentation additional assistance (here)

-

Deploy Fabric resources and artifacts

- Navigate to (Azure Portal)

- Click on Azure Cloud Shell in the top right of navigation Menu (add image)

- Run the run the following commands:

az login***Follow instructions in Azure Cloud Shell for login instructionsrm -rf ./Customer-Service-Conversational-Insights-with-Azure-OpenAI-Servicesgit clone https://github.com/microsoft/Customer-Service-Conversational-Insights-with-Azure-OpenAI-Servicescd ./Customer-Service-Conversational-Insights-with-Azure-OpenAI-Services/Deployment/scripts/fabric_scriptssh ./run_fabric_items_scripts.sh keyvault_param workspaceid_param solutionprefix_param- keyvault_param - the name of the keyvault that was created in Step 1

- workspaceid_param - the workspaceid created in Step 2

- solutionprefix_param - prefix used to append to lakehouse upon creation

- Get Fabric Lakehouse connection details:

- Once deployment is complete, navigate to Fabric Workspace

- Find Lakehouse in workspace (ex.lakehouse_solutionprefix_param)

- Click on the

...next to the SQL Analytics Endpoint - Click on

Copy SQL connection string - Click Copy button in popup window.

- Wait 10-15 minutes to allow the data pipelines to finish processing then proceed to next step.

-

Open Power BI report

- Download the .pbix file from the Reports folder.

- Open Power BI report in Power BI Dashboard

- Click on

Transform Datamenu option from the Task Bar - Click

Data source settings - Click

Change Source... - Input the Server link (from Fabric Workspace)

- Input Database name (the lakehouse name from Fabric Workspace)

- Click

OK - Click

Edit Permissions - If not signed in, sign in your credentials and proceed to click OK

- Click

Close - Report should refresh with new connection.

-

Publish Power BI

- Click

Publish(from PBI report in Power BI Desktop application) - Select Fabric Workspace

- Click

Select - After publish is complete, navigate to Fabric Workspace

- Click

...next to the Semantic model for Power BI report - Click on

Settings - Click on

Edit credentials(under Data source credentials) - Select

OAuth2for the Authentication method - Select option for

Privacy level setting for this data source - Click

Sign in - Navigate back to Fabric workspace and click on Power BI report

- Click

-

Schedule Post-Processing Notebook

It is essential to update dates daily as they advance based on the current day at the time of deployment. Since the Power BI report relies on the current date, we highly recommend scheduling or running the 03_post_processing notebook daily in the workspace. Please note that this process modifies the original date of the processed data. If you do not wish to run this, do not execute the 03_post_processing notebook.To schedule the notebook, follow these steps:

- Navigate to the Workspace

- Click on the "..." next to the 03_post_processing notebook

- Select "Schedule"

- Configure the schedule settings (we recommend running the notebook at least daily)

Process audio files

Currently, audio files are not processed during deployment. To manually process audio files, follow these steps:

- Open the

pipeline_notebook - Comment out cell 2 (only if there are zero files in the

conversation_inputdata folder waiting for JSON processing) - Uncomment cells 3 and 4

- Run

pipeline_notebook

Upload additional files

All files JSON and WAV files can be uploaded in the corresponding Lakehouse in the data/Files folder:

-

Conversation (JSON files): Upload JSON files in the conversation_input folder.

-

Audio (WAV files): Upload Audio files in the audio_input folder.

Post-deployment

- To process additional files, manually execute the pipeline_notebook after uploading new files.

- The OpenAI prompt can be modified within the Fabric notebooks.

Supporting documentation

How to customize

If you'd like to customize the accelerator, here are some ways you might do that:

- Modify the Power BI report to use a custom logo

- Ingest your own JSON conversation files by uploading them into the

conversation_inputlakehouse folder and run the data pipeline - Ingest your own audio conversation files by uploading them into the

audio_inputlakehouse folder and run the data pipeline

Troubleshooting

Additional resources

- Microsoft Fabric documentation - Microsoft Fabric | Microsoft Learn

- Azure OpenAI Service - Documentation, quickstarts, API reference - Azure AI services | Microsoft Learn

- Speech service documentation - Tutorials, API Reference - Azure AI services - Azure AI services | Microsoft Learn

More info

Version history

An updated version of the Conversation Knowledge Mining (CKM) solution accelerator was published on 08/15/2024. If you deployed the accelerator prior to that date, please note that CKM v2 cannot be deployed over CKM v1. Please also note that the CKM v2 .json conversation file format has been revised to include additional metadata, therefore CKM v1 files are no longer compatible. For resources related to CKM v1, please visit our archive (link-to-archive).

Customer truth

Customer stories coming soon.

Disclaimers

To the extent that the Software includes components or code used in or derived from Microsoft products or services, including without limitation Microsoft Azure Services (collectively, “Microsoft Products and Services”), you must also comply with the Product Terms applicable to such Microsoft Products and Services. You acknowledge and agree that the license governing the Software does not grant you a license or other right to use Microsoft Products and Services. Nothing in the license or this ReadMe file will serve to supersede, amend, terminate or modify any terms in the Product Terms for any Microsoft Products and Services.

You must also comply with all domestic and international export laws and regulations that apply to the Software, which include restrictions on destinations, end users, and end use. For further information on export restrictions, visit https://aka.ms/exporting.

You acknowledge that the Software and Microsoft Products and Services (1) are not designed, intended or made available as a medical device(s), and (2) are not designed or intended to be a substitute for professional medical advice, diagnosis, treatment, or judgment and should not be used to replace or as a substitute for professional medical advice, diagnosis, treatment, or judgment. Customer is solely responsible for displaying and/or obtaining appropriate consents, warnings, disclaimers, and acknowledgements to end users of Customer’s implementation of the Online Services.

You acknowledge the Software is not subject to SOC 1 and SOC 2 compliance audits. No Microsoft technology, nor any of its component technologies, including the Software, is intended or made available as a substitute for the professional advice, opinion, or judgement of a certified financial services professional. Do not use the Software to replace, substitute, or provide professional financial advice or judgment.

BY ACCESSING OR USING THE SOFTWARE, YOU ACKNOWLEDGE THAT THE SOFTWARE IS NOT DESIGNED OR INTENDED TO SUPPORT ANY USE IN WHICH A SERVICE INTERRUPTION, DEFECT, ERROR, OR OTHER FAILURE OF THE SOFTWARE COULD RESULT IN THE DEATH OR SERIOUS BODILY INJURY OF ANY PERSON OR IN PHYSICAL OR ENVIRONMENTAL DAMAGE (COLLECTIVELY, “HIGH-RISK USE”), AND THAT YOU WILL ENSURE THAT, IN THE EVENT OF ANY INTERRUPTION, DEFECT, ERROR, OR OTHER FAILURE OF THE SOFTWARE, THE SAFETY OF PEOPLE, PROPERTY, AND THE ENVIRONMENT ARE NOT REDUCED BELOW A LEVEL THAT IS REASONABLY, APPROPRIATE, AND LEGAL, WHETHER IN GENERAL OR IN A SPECIFIC INDUSTRY. BY ACCESSING THE SOFTWARE, YOU FURTHER ACKNOWLEDGE THAT YOUR HIGH-RISK USE OF THE SOFTWARE IS AT YOUR OWN RISK.