
This lesson loads and draws models in 3D.

Setup

First create a new project using the instructions from the earlier lessons: Using DeviceResources and Adding the DirectX Tool Kit which we will use for this lesson.

Creating a model

Source assets for models are often stored in Autodesk FBX, Wavefront OBJ, or similar formats. A build process is used to convert them to a more run-time friendly format that is easier to load and render.

For this tutorial, we will make use of the DirectXMesh meshconvert command-line tool. Start by saving cup._obj, cup.mtl, and cup.jpg into your new project's directory, and then from the top menu select Project / Add Existing Item.... Select "cup.jpg" and click "OK".

- Download Meshconvert.exe from the DirectXMesh site and save the EXE into your project's folder.

- Open a Command Prompt and then change to your project's folder.

Run the following command line:

meshconvert cup._obj -sdkmesh -nodds -y -flipu

Then from the top menu in Visual Studio select Project / Add Existing Item.... Select cup.sdkmesh and click "OK".

If you are using a Universal Windows Platform app or Xbox project rather than a Windows desktop app, you need to manually edit the Visual Studio project properties on the cup.sdkmesh file and make sure "Content" is set to "Yes" so the data file will be included in your packaged build.

Technical notes

- The switch -sdkmesh selects the output type. The meshconvert command-line tool also supports -sdkmesh2, -cmo, and -vbo. See Geometry formats for more information.

- The switch -nodds causes any texture file name references in the material information of the source file to stay in their original file format (such as .png or .jpg). Otherwise, it assumes you will be converting all the needed texture files to a .dds instead.

- The switch -flipu flips the direction of the texture coordinates. Since SimpleMath and these tutorials assume we are using right-handed viewing coordinates and the model was created using left-handed viewing coordinates, we have to flip them to get the text in the texture to appear correctly.

- The switch -y indicates that it is ok to overwrite the output file in case you run it multiple times.
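To make the effect of -flipu concrete, here is a minimal sketch of what flipping the U direction means for a set of texture coordinates. It assumes the flip is the conventional mirror u' = 1 - u; the TexCoord struct and both function names are hypothetical stand-ins for illustration and are not part of DirectXMesh or meshconvert.

```cpp
#include <vector>

// Hypothetical stand-in for one vertex's texture coordinate (not a DirectXMesh type).
struct TexCoord
{
    float u;
    float v;
};

// Assumed convention: mirror U across the vertical center line of the texture.
constexpr float FlipU(float u)
{
    return 1.0f - u;
}

// Apply the mirror to every coordinate. V is left untouched, so the image
// is reversed left-to-right only.
inline void FlipAllU(std::vector<TexCoord>& coords)
{
    for (auto& tc : coords)
    {
        tc.u = FlipU(tc.u);
    }
}
```

A coordinate at the left edge (u = 0) ends up at the right edge (u = 1), which is why mirrored text in a texture reads correctly again after the flip.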

DirectX Tool Kit for DirectX 12 does not support .cmo models.

Drawing a model

In the Game.h file, add the following variables to the bottom of the Game class's private declarations (right after where you added m_graphicsMemory as part of setup):

DirectX::SimpleMath::Matrix m_world;

DirectX::SimpleMath::Matrix m_view;

DirectX::SimpleMath::Matrix m_proj;

std::unique_ptr<DirectX::CommonStates> m_states;

std::unique_ptr<DirectX::EffectFactory> m_fxFactory;

std::unique_ptr<DirectX::EffectTextureFactory> m_modelResources;

std::unique_ptr<DirectX::Model> m_model;

DirectX::Model::EffectCollection m_modelNormal;

In DirectX Tool Kit, a Collection type is an alias for a std::vector<>. In this case, it's a vector of shared pointers to IEffect interface instances (i.e. std::vector<std::shared_ptr<IEffect>>).
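The alias can be pictured with a small standalone sketch. The IEffect and Model types below are hypothetical stand-ins declared only so the example compiles without the library; the point is that the collection behaves like any other std::vector.

```cpp
#include <memory>
#include <type_traits>
#include <vector>

// Hypothetical stand-in for the library's IEffect interface.
struct IEffect
{
    virtual ~IEffect() = default;
};

// Hypothetical stand-in for Model, showing only the alias.
struct Model
{
    using EffectCollection = std::vector<std::shared_ptr<IEffect>>;
};

// The alias introduces no new type; it is exactly the vector it names.
static_assert(
    std::is_same<Model::EffectCollection,
                 std::vector<std::shared_ptr<IEffect>>>::value,
    "EffectCollection is a plain std::vector of shared pointers");

// Because it is a vector, the usual container operations apply.
inline bool AllEffectsValid(const Model::EffectCollection& effects)
{
    for (const auto& effect : effects)
    {
        if (!effect)
        {
            return false;
        }
    }
    return true;
}
```

This is also why the tutorial can call clear() on m_modelNormal in OnDeviceLost and pass cbegin() to Draw: both are ordinary std::vector members.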

In Game.cpp, add to the TODO of CreateDeviceDependentResources right after you create m_graphicsMemory:

m_states = std::make_unique<CommonStates>(device);

m_model = Model::CreateFromSDKMESH(device, L"cup.sdkmesh");

ResourceUploadBatch resourceUpload(device);

resourceUpload.Begin();

m_modelResources = m_model->LoadTextures(device, resourceUpload);

m_fxFactory = std::make_unique<EffectFactory>(m_modelResources->Heap(), m_states->Heap());

auto uploadResourcesFinished = resourceUpload.End(

m_deviceResources->GetCommandQueue());

uploadResourcesFinished.wait();

RenderTargetState rtState(m_deviceResources->GetBackBufferFormat(),

m_deviceResources->GetDepthBufferFormat());

EffectPipelineStateDescription pd(

nullptr,

CommonStates::Opaque,

CommonStates::DepthDefault,

CommonStates::CullClockwise,

rtState);

m_modelNormal = m_model->CreateEffects(*m_fxFactory, pd, pd);

m_world = Matrix::Identity;

Our model doesn't use alpha-blending, which is why we have used pd twice in the call to CreateEffects. See below for more details.

In Game.cpp, add to the TODO of CreateWindowSizeDependentResources:

auto size = m_deviceResources->GetOutputSize();

m_view = Matrix::CreateLookAt(Vector3(2.f, 2.f, 2.f),

Vector3::Zero, Vector3::UnitY);

m_proj = Matrix::CreatePerspectiveFieldOfView(XM_PI / 4.f,

float(size.right) / float(size.bottom), 0.1f, 10.f);
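The aspect ratio passed to CreatePerspectiveFieldOfView is simply width divided by height. The sketch below isolates that calculation, assuming the output rectangle starts at (0, 0) so that right and bottom are the width and height. OutputRect and AspectRatio are hypothetical names used for illustration, not library types.

```cpp
#include <cassert>

// Hypothetical stand-in for the rectangle returned by GetOutputSize().
struct OutputRect
{
    long left;
    long top;
    long right;
    long bottom;
};

// Width over height. Assumes left and top are zero, so right and bottom
// are the output width and height in pixels.
inline float AspectRatio(const OutputRect& size)
{
    assert(size.bottom > 0); // guard against dividing by zero
    return float(size.right) / float(size.bottom);
}
```

For a 1600 x 800 output this yields 2.0, which keeps the cup from appearing stretched when the window is not square.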

In Game.cpp, add to the TODO of OnDeviceLost where you added m_graphicsMemory.reset():

m_states.reset();

m_fxFactory.reset();

m_modelResources.reset();

m_model.reset();

m_modelNormal.clear();

In Game.cpp, add to the TODO of Render:

ID3D12DescriptorHeap* heaps[] = { m_modelResources->Heap(), m_states->Heap() };

commandList->SetDescriptorHeaps(static_cast<UINT>(std::size(heaps)), heaps);

Model::UpdateEffectMatrices(m_modelNormal, m_world, m_view, m_proj);

m_model->Draw(commandList, m_modelNormal.cbegin());

In Game.cpp, add to the TODO of Update:

auto time = static_cast<float>(timer.GetTotalSeconds());

m_world = Matrix::CreateRotationZ(cosf(time) * 2.f);

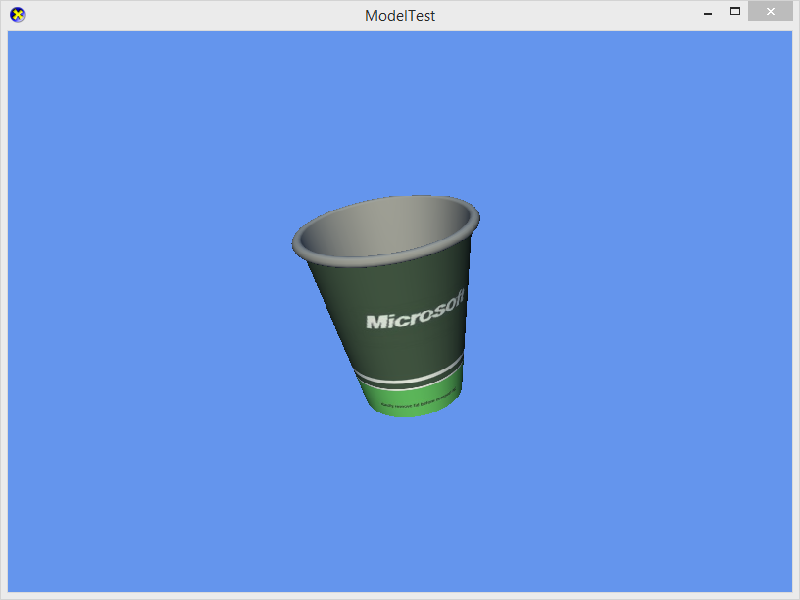

Build and run and you will see our cup model rendered with default lighting:

Troubleshooting advice

If you get a runtime exception, then you may have the "cup.jpg" or "cup.sdkmesh" in the wrong folder, have modified the "Working Directory" in the "Debugging" configuration settings, or otherwise changed the expected paths at runtime of the application. You should set a break-point on Model::CreateFromSDKMESH and step into the code to find the exact problem.

The render function must set descriptor heaps using SetDescriptorHeaps. This allows the application to use its own heaps, instead of relying on the EffectTextureFactory class, for where the textures are in memory. You can freely mix and match heaps in the application, but remember that you can have only a single texture descriptor heap and a single sampler descriptor heap active at any given time.
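The "one heap of each kind" rule can be illustrated with a toy model. Nothing below is Direct3D 12 code: HeapType, Heap, and BoundHeaps are hypothetical types, and throwing an exception is just this sketch's way of flagging an invalid combination. It assumes that each call replaces the previously bound set.

```cpp
#include <stdexcept>
#include <vector>

// Hypothetical stand-ins; these are not Direct3D 12 types.
enum class HeapType { Resource, Sampler };

struct Heap
{
    HeapType type;
    int id;
};

class BoundHeaps
{
public:
    // Replaces the currently bound set. Rejects a request that names two
    // heaps of the same type, leaving the previous binding unchanged.
    void Set(const std::vector<Heap>& heaps)
    {
        bool seenResource = false;
        bool seenSampler = false;
        for (const auto& heap : heaps)
        {
            bool& seen = (heap.type == HeapType::Resource) ? seenResource : seenSampler;
            if (seen)
            {
                throw std::invalid_argument("only one heap of each type can be active");
            }
            seen = true;
        }
        m_bound = heaps;
    }

    bool IsBound(int id) const
    {
        for (const auto& heap : m_bound)
        {
            if (heap.id == id)
            {
                return true;
            }
        }
        return false;
    }

private:
    std::vector<Heap> m_bound;
};
```

In the tutorial's terms, m_modelResources->Heap() plays the role of the resource heap and m_states->Heap() the sampler heap. Switching to a different texture heap means calling SetDescriptorHeaps again before drawing with it.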

Optimizing vertex & index buffers

By default, the Model loaders create the vertex and index buffers using GraphicsMemory which is "upload heap" memory. You can render directly from this, but it has the same performance as the 'dynamic' VBs/IBs. We can improve rendering performance by transferring the VBs/IBs to dedicated video memory with a call to LoadStaticBuffers.

In Game.cpp modify the TODO of CreateDeviceDependentResources:

...

m_model = Model::CreateFromSDKMESH(device, L"cup.sdkmesh");

ResourceUploadBatch resourceUpload(device);

resourceUpload.Begin();

m_model->LoadStaticBuffers(device, resourceUpload);

m_modelResources = m_model->LoadTextures(device, resourceUpload);

...

Rendering with alpha-blending

To support rendering with proper blending, the Model class maintains two lists of 'parts' for each mesh in the model. One list contains all the opaque parts, which use the first EffectPipelineStateDescription instance and are drawn first. The other list contains all the parts that require alpha-blending, which use the second instance and are drawn afterward.
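A toy model of that ordering is sketched below. Part, Mesh, DrawCall, and DrawOrder are hypothetical names for illustration only, and the sketch assumes every opaque part across all meshes is submitted before any alpha-blended part.

```cpp
#include <string>
#include <vector>

// Hypothetical stand-ins for a mesh and its parts; not the library's types.
struct Part
{
    std::string name;
};

struct Mesh
{
    std::vector<Part> opaqueParts;
    std::vector<Part> alphaParts;
};

struct DrawCall
{
    std::string part;
    bool usesAlphaPipeline; // false: first description, true: second description
};

// Build the submission order: opaque parts first, then alpha-blended parts.
inline std::vector<DrawCall> DrawOrder(const std::vector<Mesh>& meshes)
{
    std::vector<DrawCall> calls;
    for (const auto& mesh : meshes)
    {
        for (const auto& part : mesh.opaqueParts)
        {
            calls.push_back(DrawCall{ part.name, false });
        }
    }
    for (const auto& mesh : meshes)
    {
        for (const auto& part : mesh.alphaParts)
        {
            calls.push_back(DrawCall{ part.name, true });
        }
    }
    return calls;
}
```

Drawing opaque geometry first lets the depth buffer fill in before translucent surfaces are blended on top.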

The model we are using does not have alpha-blended materials, so we can use the same EffectPipelineStateDescription twice above. The more general pattern is to provide one description with opaque settings and one with alpha settings. Assuming you are using non-premultiplied alpha for your alpha-blended textures, you'd use:

EffectPipelineStateDescription pdAlpha(

nullptr,

CommonStates::NonPremultiplied,

CommonStates::DepthDefault,

CommonStates::CullClockwise,

rtState);

m_modelNormal = m_model->CreateEffects(*m_fxFactory, pd, pdAlpha);
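The difference between non-premultiplied and premultiplied alpha comes down to the blend equation. The sketch below works through both on the CPU, assuming the conventional blend factors (source alpha / inverse source alpha for non-premultiplied, one / inverse source alpha for premultiplied). Rgb and both functions are hypothetical helpers, not DirectX Tool Kit APIs, and only the color channels are modeled.

```cpp
// Hypothetical color type for illustration; not a DirectX Tool Kit type.
struct Rgb
{
    float r;
    float g;
    float b;
};

// Non-premultiplied ("straight") alpha: the blend stage scales the source
// color by its alpha, so result = src * a + dst * (1 - a).
inline Rgb BlendNonPremultiplied(Rgb src, float srcAlpha, Rgb dst)
{
    const float inv = 1.0f - srcAlpha;
    return Rgb{ src.r * srcAlpha + dst.r * inv,
                src.g * srcAlpha + dst.g * inv,
                src.b * srcAlpha + dst.b * inv };
}

// Premultiplied alpha: the source color was already multiplied by alpha
// when the texture was authored, so result = src + dst * (1 - a).
inline Rgb BlendPremultiplied(Rgb src, float srcAlpha, Rgb dst)
{
    const float inv = 1.0f - srcAlpha;
    return Rgb{ src.r + dst.r * inv,
                src.g + dst.g * inv,
                src.b + dst.b * inv };
}
```

Half-transparent red over blue gives (0.5, 0, 0.5) in both cases, provided the premultiplied source is stored as (0.5, 0, 0). Feeding straight-alpha colors into the premultiplied equation produces results that are too bright, which is why the blend state has to match how the textures were authored.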

Rendering with different states

In DirectX Tool Kit for DX12, materials/effects are created in a loading phase that is distinct from the geometry. This is because in DirectX 12 the Pipeline State Objects (PSOs) capture not only the shaders but also all rendering state, including the render target format and configuration. In practice, that means you will need multiple sets of effect instances for a single model, even if you are using the same shaders over again.
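One way to picture this is to treat the full state combination as the identity of a pipeline object. The sketch below is a toy model only: PipelineKey and PipelineCache are hypothetical, and it does not describe how Direct3D 12 or DirectX Tool Kit manage PSOs internally. It shows that the same shader paired with a different fill mode counts as a different pipeline.

```cpp
#include <cstddef>
#include <map>
#include <string>
#include <tuple>

// Hypothetical state enums for illustration; not library types.
enum class Fill { Solid, Wireframe };
enum class Blend { Opaque, NonPremultiplied };

// Everything that identifies a pipeline in this toy model.
struct PipelineKey
{
    std::string shader;
    Fill fill;
    Blend blend;

    bool operator<(const PipelineKey& other) const
    {
        return std::tie(shader, fill, blend)
             < std::tie(other.shader, other.fill, other.blend);
    }
};

class PipelineCache
{
public:
    // Returns the id for this exact combination, creating a new one if needed.
    int GetOrCreate(const PipelineKey& key)
    {
        auto it = m_pipelines.find(key);
        if (it != m_pipelines.end())
        {
            return it->second;
        }
        const int id = static_cast<int>(m_pipelines.size());
        m_pipelines.emplace(key, id);
        return id;
    }

    std::size_t Count() const { return m_pipelines.size(); }

private:
    std::map<PipelineKey, int> m_pipelines;
};
```

Requesting the same shader with Fill::Solid and then Fill::Wireframe yields two distinct entries, which mirrors why the tutorial keeps separate effect collections for normal and wireframe rendering.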

In the Game.h file, add the following variable to the bottom of the Game class's private declarations:

DirectX::Model::EffectCollection m_modelWireframe;

In Game.cpp, add to the TODO of CreateDeviceDependentResources after you create the m_modelNormal effect array:

EffectPipelineStateDescription pdWire(

nullptr,

CommonStates::Opaque,

CommonStates::DepthDefault,

CommonStates::Wireframe,

rtState);

m_modelWireframe = m_model->CreateEffects(*m_fxFactory, pdWire, pdWire);

In Game.cpp, add to the TODO of OnDeviceLost:

m_modelWireframe.clear();

In Game.cpp, modify the TODO of Render:

ID3D12DescriptorHeap* heaps[] = { m_modelResources->Heap(), m_states->Heap() };

commandList->SetDescriptorHeaps(static_cast<UINT>(std::size(heaps)), heaps);

Model::UpdateEffectMatrices(m_modelWireframe, m_world, m_view, m_proj);

m_model->Draw(commandList, m_modelWireframe.cbegin());

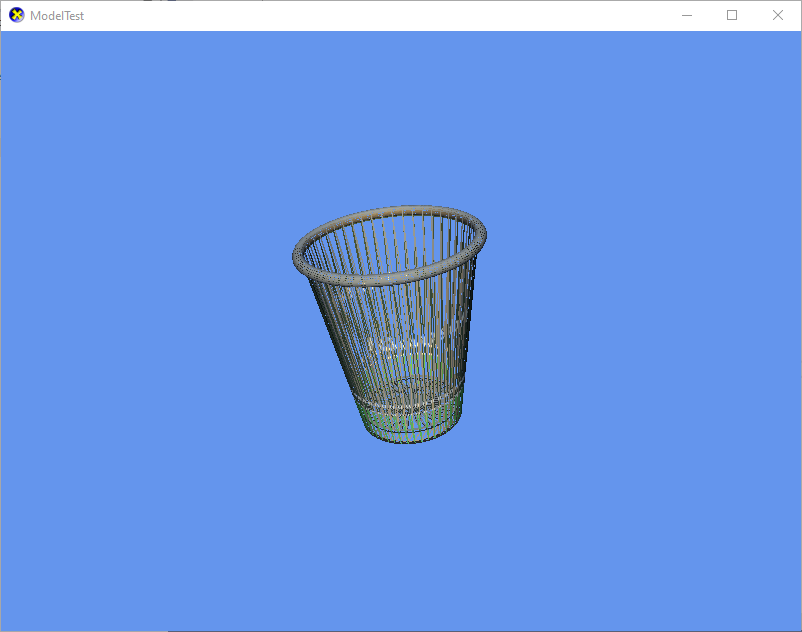

Build and run and you will see our cup model rendered with default lighting in wireframe mode:

Updating effects settings in a model

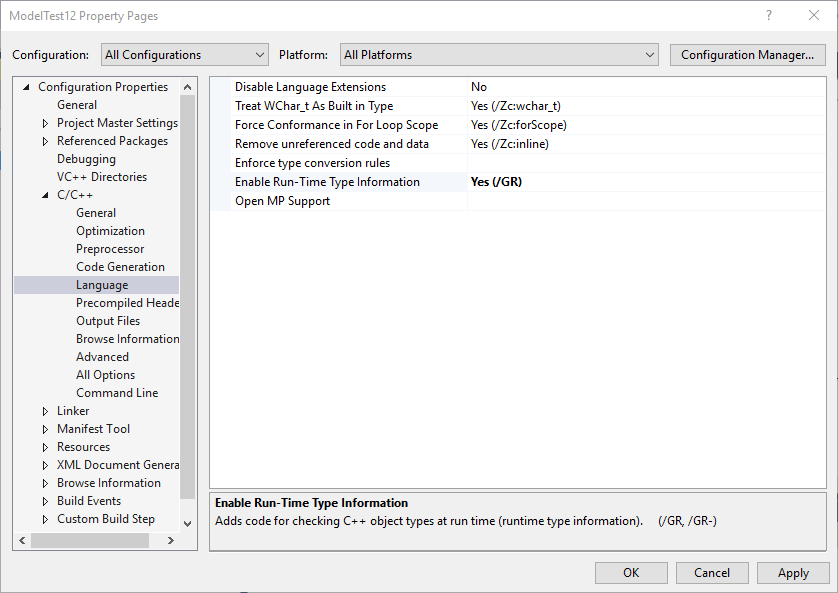

The Model class CreateEffects method creates effects for the loaded materials which are set to default lighting parameters. Because the effects system is flexible, we must first enable C++ Run-Time Type Information (RTTI) in order to safely discover the various interfaces supported at runtime. From the drop-down menu, select Project / Properties. Set to "All Configurations" / "All Platforms". On the left-hand tree view select C/C++ / Language. Then set "Enable Run-Time Type Information" to "Yes". Click "OK".

In the Game.h file, add the following variable to the bottom of the Game class's private declarations:

DirectX::Model::EffectCollection m_modelFog;

In Game.cpp, add to the TODO of CreateDeviceDependentResources after you create the m_modelNormal / m_modelWireframe effect arrays:

m_fxFactory->EnableFogging(true);

m_fxFactory->EnablePerPixelLighting(true);

m_modelFog = m_model->CreateEffects(*m_fxFactory, pd, pd);

for (auto& effect : m_modelFog)

{

auto lights = dynamic_cast<IEffectLights*>(effect.get());

if (lights)

{

lights->SetLightEnabled(0, true);

lights->SetLightDiffuseColor(0, Colors::Gold);

lights->SetLightEnabled(1, false);

lights->SetLightEnabled(2, false);

}

auto fog = dynamic_cast<IEffectFog*>(effect.get());

if (fog)

{

fog->SetFogColor(Colors::CornflowerBlue);

fog->SetFogStart(3.f);

fog->SetFogEnd(4.f);

}

}

In Game.cpp, add to the TODO of OnDeviceLost:

m_modelFog.clear();

In Game.cpp, modify the TODO of Render:

ID3D12DescriptorHeap* heaps[] = { m_modelResources->Heap(), m_states->Heap() };

commandList->SetDescriptorHeaps(static_cast<UINT>(std::size(heaps)), heaps);

Model::UpdateEffectMatrices(m_modelFog, m_world, m_view, m_proj);

m_model->Draw(commandList, m_modelFog.cbegin());

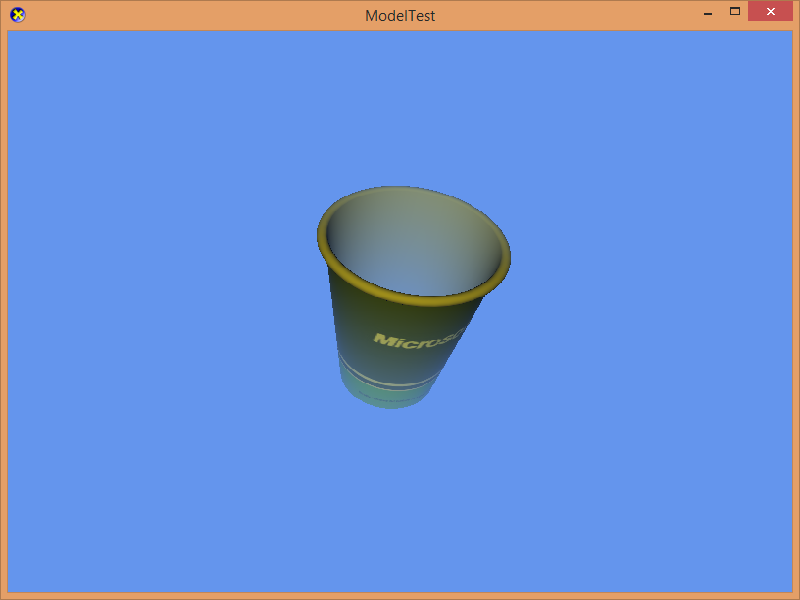

Build and run to get our cup with a colored light, per-pixel rather than vertex lighting, and fogging enabled.
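To get a feel for the fog start and end values, here is a small sketch of a linear fog ramp. It assumes the conventional formula in which the fog factor rises from 0 at the start distance to 1 at the end distance, measured as depth along the view direction. The library's shaders may differ in detail, and both helper functions are hypothetical.

```cpp
#include <algorithm>
#include <cmath>

// Assumed linear fog ramp: 0 at fogStart, 1 at fogEnd, clamped outside that range.
inline float LinearFogFactor(float depth, float fogStart, float fogEnd)
{
    const float t = (depth - fogStart) / (fogEnd - fogStart);
    return std::min(1.0f, std::max(0.0f, t));
}

// Straight-line distance from a camera position to the origin.
inline float DistanceToOrigin(float x, float y, float z)
{
    return std::sqrt(x * x + y * y + z * z);
}
```

With the tutorial's camera at (2, 2, 2) looking at the origin, the center of the cup is about 3.46 units away. That falls between the fog start of 3 and the fog end of 4, giving a fog factor of roughly 0.46, so the cup is partly tinted toward the fog color rather than fully clear or fully fogged.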

Technical notes

Here we've made use of two C++ concepts:

- The dynamic_cast operator allows us to safely determine if an effect in the model supports lighting and/or fog using C++ run-time type checking. If the IEffect instance does not support the desired interface, the cast returns nullptr and our code will skip those settings.

- We used a range-based for loop to run through the fog effects array.
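Both ideas can be tried in isolation with a standalone sketch. Every type below is a hypothetical stand-in with only the members needed for the example; they are not the DirectX Tool Kit interfaces. The sketch shows dynamic_cast returning nullptr for an effect that lacks an interface, inside a range-based for loop.

```cpp
#include <memory>
#include <vector>

// Hypothetical stand-ins for the effect interfaces.
struct IEffect
{
    virtual ~IEffect() = default;
};

struct IEffectLights
{
    virtual ~IEffectLights() = default;
    virtual void SetLightEnabled(int whichLight, bool value) = 0;
};

struct IEffectFog
{
    virtual ~IEffectFog() = default;
    virtual void SetFogStart(float value) = 0;
};

// An effect that supports both optional interfaces.
struct LitFoggedEffect : IEffect, IEffectLights, IEffectFog
{
    bool light0 = false;
    float fogStart = 0.0f;

    void SetLightEnabled(int whichLight, bool value) override
    {
        if (whichLight == 0) { light0 = value; }
    }
    void SetFogStart(float value) override { fogStart = value; }
};

// An effect that supports neither.
struct UnlitEffect : IEffect
{
};

struct Counts
{
    int withLights;
    int withFog;
};

// Configure whichever interfaces each effect supports and count them.
inline Counts Configure(const std::vector<std::shared_ptr<IEffect>>& effects)
{
    Counts counts{ 0, 0 };
    for (const auto& effect : effects)
    {
        // The cast yields nullptr when the interface is not implemented.
        if (auto lights = dynamic_cast<IEffectLights*>(effect.get()))
        {
            lights->SetLightEnabled(0, true);
            ++counts.withLights;
        }
        if (auto fog = dynamic_cast<IEffectFog*>(effect.get()))
        {
            fog->SetFogStart(3.0f);
            ++counts.withFog;
        }
    }
    return counts;
}
```

Passing a collection with one LitFoggedEffect and one UnlitEffect configures only the first and silently skips the second. The same thing happens in the tutorial code when a material's effect does not support lighting or fog.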

In DirectX Tool Kit for DirectX 11 the effect would select the proper shaders to use when you called Apply. With the Direct3D 12 Pipeline State Object, the shader configuration must be chosen when the effect is created. Therefore, we used EnableFogging and EnablePerPixelLighting on the effect factory to ensure the effects for m_modelFog were created with EffectFlags::PerPixelLighting | EffectFlags::Fog.

In DirectX Tool Kit for DirectX 11, the model loading is all done in a single function and is then ready to render. Because of the design of DirectX 12, model loading is actually done in distinct phases:

1. Load the geometry into upload buffers, and load the texture/material information into system memory (CreateFromSDKMESH, CreateFromVBO).

2. Upload the geometry into video memory. This is optional, but is recommended for performance (LoadStaticBuffers).

3. Load required textures for the material information and upload them to video memory based on the texture information from step 1 (LoadTextures).

4. Create one or more arrays of effect instances to generate the required PSOs based on the material information from step 1 (CreateEffects).

This design also allows you to use Model just for the geometry and then render using your own materials/textures systems.

More to explore

- The Model class can also be used with a Physically-Based Rendering pipeline via PBREffectFactory. See Physically-based rendering for more information.

Next lesson: Animating using model bones

Further reading

DirectX Tool Kit docs EffectFactory, EffectTextureFactory, Effects, EffectPipelineStateDescription, Model, RenderTargetState



All content and source code for this package are subject to the terms of the MIT License.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.