
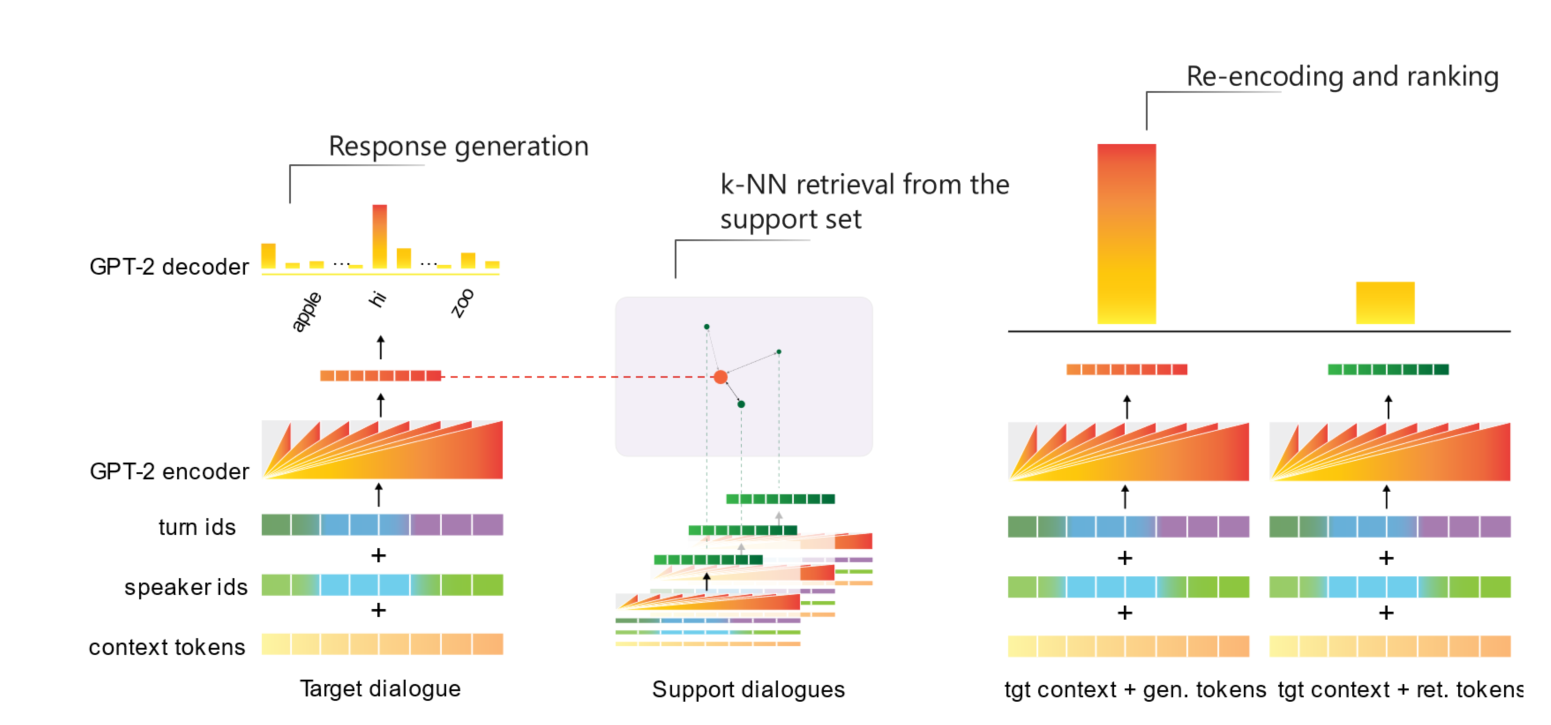

GRTr: Generative-Retrieval Transformers

Codebase for the papers:

Hybrid Generative-Retrieval Transformers for Dialogue Domain Adaptation, DSTC8@AAAI 2020

Fast Domain Adaptation for Goal-Oriented Dialogue Using a Hybrid Generative-Retrieval Transformer, ICASSP 2020 [virtual presentation]

By Igor Shalyminov, Alessandro Sordoni, Adam Atkinson, Hannes Schulz.

Installation

$ cd code-directory

$ conda create -n hybrid_retgen python=3.7

$ conda activate hybrid_retgen

$ conda install cython

$ pip install -e .

For mixed precision training:

$ pip install git+https://github.com/nvidia/apex

Datasets and experimental setup

Training a base GPT-2 model on MetaLWOz

$ python scripts/train <MetaLWOz zipfile> metalwoz_dataspec.json --dataset_cache cache exp/grtr --train_batch_size 4 --valid_batch_size 4 --early_stopping_after -1 --n_epochs 25

Add --fp16 O1 to use mixed precision training (this requires apex, see Installation).
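When launching several training runs, it can help to assemble the command above programmatically. The following is a minimal sketch, not part of the repository: the helper name `build_train_command`, its parameter names, and the placeholder path `path/to/metalwoz.zip` are assumptions made for illustration. The arguments and their order are copied from the training command above.

```python
import shlex


def build_train_command(metalwoz_zip, exp_dir="exp/grtr", fp16_level=None):
    """Return the README training command as an argument list.

    `exp_dir` and `fp16_level` are illustrative parameter names,
    not names used by scripts/train itself.
    """
    cmd = [
        "python", "scripts/train",
        metalwoz_zip, "metalwoz_dataspec.json",
        "--dataset_cache", "cache",
        exp_dir,
        "--train_batch_size", "4",
        "--valid_batch_size", "4",
        "--early_stopping_after", "-1",
        "--n_epochs", "25",
    ]
    # Mixed precision is optional and needs apex to be installed.
    if fp16_level is not None:
        cmd += ["--fp16", fp16_level]
    return cmd


# Placeholder path: replace it with the location of your MetaLWOz zipfile.
cmd = build_train_command("path/to/metalwoz.zip", fp16_level="O1")
print(" ".join(shlex.quote(arg) for arg in cmd))
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` from the repository root to start training.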

Predictions

generate-and-rank

$ python scripts/predict_generate_and_rank <MetaLWOz/MultiWoz zipfile> <testspec json> <output dir> <base GPT-2 model dir> --fine-tune --dataset_cache cache exp/grtr --train_batch_size 4 --valid_batch_size 4

generate only

$ python scripts/predict <MetaLWOz/MultiWoz zipfile> <testspec json> <output dir> <base GPT-2 model dir> --fine-tune --dataset_cache cache exp/grtr --train_batch_size 4 --valid_batch_size 4
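The two prediction modes take the same arguments and differ only in the script that is called. The sketch below makes that explicit. It is an illustration under assumptions: `build_predict_command`, its parameter names, and the example paths are hypothetical. The trailing options are reproduced from the commands above as they appear.

```python
import shlex


def build_predict_command(dataset_zip, testspec_json, output_dir,
                          base_model_dir, rank=True):
    """Return a prediction command as an argument list.

    rank=True selects generate-and-rank, rank=False selects generate only.
    """
    script = "scripts/predict_generate_and_rank" if rank else "scripts/predict"
    return [
        "python", script,
        dataset_zip, testspec_json, output_dir, base_model_dir,
        # Options below are copied from the README commands unchanged.
        "--fine-tune",
        "--dataset_cache", "cache",
        "exp/grtr",
        "--train_batch_size", "4",
        "--valid_batch_size", "4",
    ]


# Placeholder paths: substitute your own files and directories.
example_args = ("path/to/data.zip", "path/to/testspec.json",
                "path/to/output", "path/to/base_model")
for use_ranking in (True, False):
    cmd = build_predict_command(*example_args, rank=use_ranking)
    print(" ".join(shlex.quote(arg) for arg in cmd))
```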

Convenience bash scripts are provided in scripts/ to produce predictions for each of the three test specs.

Evaluation can be done using the evaluate script in the competition baseline repository.

Contributing

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

When you submit a pull request, a CLA bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information, see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.