
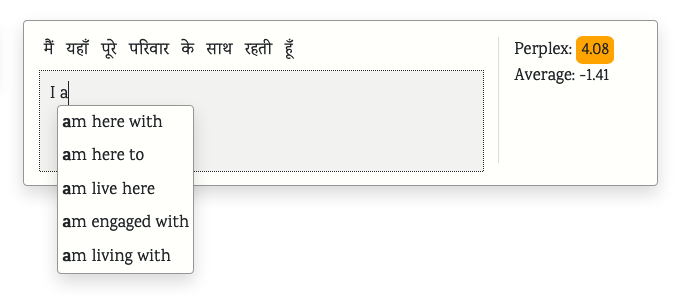

Interactive Neural Machine Translation

Assisting Translators with on-the-fly Translation Suggestions

Introduction

The Interactive Neural Machine Translation (INMT) app is built with Django and jQuery. Refer to their documentation if you have questions about either.

Installation Instructions

I. Prerequisites

- Clone INMT and prepare MT models:

  `git clone https://github.com/microsoft/inmt`

- Make a new folder named `model` (`mkdir model`) where the models need to be placed. Models can be downloaded from here. These contain English-Hindi translation models in both directions.

- If you want to train your own models, refer to Training MT Models.
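Before moving on, it can help to confirm that the `model` folder exists and actually contains checkpoints. The sketch below assumes the downloaded models are OpenNMT-py checkpoints saved with a `.pt` extension; `list_model_checkpoints` is a hypothetical helper, not part of INMT.

```python
from pathlib import Path
from typing import List


def list_model_checkpoints(model_dir: str = "model") -> List[str]:
    """Return the sorted names of *.pt files in model_dir.

    Assumption: models are OpenNMT-py checkpoints saved as .pt files.
    Raises FileNotFoundError if the folder has not been created yet.
    """
    folder = Path(model_dir)
    if not folder.is_dir():
        raise FileNotFoundError(
            f"Model folder '{model_dir}' not found; create it with `mkdir {model_dir}`"
        )
    # Only look at the top level of the folder, matching `mkdir model` above.
    return sorted(p.name for p in folder.glob("*.pt"))
```

Run from the repository root, `list_model_checkpoints()` returns an empty list if the folder exists but no models have been downloaded yet.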

The rest of the installation can be done either directly on your machine (bare) or with Docker. Docker is preferable because it is easier to set up.

II. Docker Installation

Assuming Docker is set up on your system, run `docker-compose up -d`. The application requires at least 4GB of memory to run, so allocate Docker's memory accordingly.
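To check the memory requirement before starting the containers, the sketch below reads the total memory the Docker daemon reports through `docker info --format '{{.MemTotal}}'` (on Docker Desktop this is the memory allotted to Docker's VM, not the host's) and compares it with the 4GB minimum. It assumes the Docker CLI is on your `PATH` and interprets 4GB as 4 GiB; both function names are hypothetical helpers, not part of INMT.

```python
import subprocess

# README requirement: at least 4GB of memory, interpreted here as 4 GiB (assumption).
REQUIRED_GIB = 4


def docker_mem_total_bytes() -> int:
    """Total memory in bytes reported by the Docker daemon (assumes `docker` is on PATH)."""
    result = subprocess.run(
        ["docker", "info", "--format", "{{.MemTotal}}"],
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if the daemon is not reachable
    )
    return int(result.stdout.strip())


def has_enough_memory(total_bytes: int, required_gib: float = REQUIRED_GIB) -> bool:
    """True if total_bytes is at least required_gib GiB."""
    return total_bytes >= required_gib * 1024 ** 3
```

For example, `has_enough_memory(docker_mem_total_bytes())` returning `False` suggests raising the memory limit in Docker's settings before running `docker-compose up -d`.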

III. Bare Installation

- Install dependencies using:

  `python -m pip install -r requirements.txt`

  Be sure to check your Python version first: this tool is compatible with Python 3.

- Install OpenNMT dependencies using:

  `cd opennmt && python setup.py install && cd -`

- Run the migrations and start the server:

  `python manage.py makemigrations && python manage.py migrate && python manage.py runserver`

  The server runs on port 8000 by default. Open `localhost:8000/simple` for the simple interface.
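The `&&` chains above run each command only if the previous one exited successfully. If you would rather drive the setup from a Python script, a minimal sketch of the same stop-on-first-failure behaviour follows. `run_chain` and `SETUP_STEPS` are hypothetical names, and the step list assumes it is run from the repository root, where `manage.py` lives.

```python
import subprocess
import sys
from typing import List, Sequence

# Hypothetical step list mirroring the bare-install commands above.
SETUP_STEPS: List[List[str]] = [
    [sys.executable, "manage.py", "makemigrations"],
    [sys.executable, "manage.py", "migrate"],
    [sys.executable, "manage.py", "runserver"],  # blocks until the server is stopped
]


def run_chain(steps: Sequence[Sequence[str]]) -> int:
    """Run each command in order and stop at the first non-zero exit code,
    like `a && b && c` in a shell. Returns the exit code of the last step run
    (0 if every step succeeded or there were no steps)."""
    code = 0
    for cmd in steps:
        code = subprocess.run(list(cmd)).returncode
        if code != 0:
            break  # later steps are skipped, exactly as with &&
    return code
```

Calling `run_chain(SETUP_STEPS)` applies the migrations and then starts the development server; if `makemigrations` or `migrate` fails, the server is never started.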

Training MT Models

INMT uses OpenNMT as its translation engine. To train your own models, you need parallel sentences (each source sentence paired with its translation) in your desired language pair. The basic steps are:

- Go to the opennmt folder:

  `cd opennmt`

- Preprocess the parallel (src and tgt) sentences:

  `onmt_preprocess -train_src data/src-train.txt -train_tgt data/tgt-train.txt -valid_src data/src-val.txt -valid_tgt data/tgt-val.txt -save_data data/demo`

- Train your model (with GPUs):

  `onmt_train -data data/demo -save_model demo-model`
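Parallel files are line-aligned: line *i* of `src-train.txt` should be the translation of line *i* of `tgt-train.txt`. A cheap sanity check before preprocessing is to confirm that each source/target pair has the same number of lines. The sketch below does only that; `check_parallel` is a hypothetical helper, and matching line counts are necessary but not sufficient for correct alignment.

```python
def count_lines(path: str) -> int:
    """Number of lines in a UTF-8 text file (a missing final newline still counts)."""
    with open(path, encoding="utf-8") as f:
        return sum(1 for _ in f)


def check_parallel(src_path: str, tgt_path: str) -> int:
    """Check that the source and target files have the same number of lines.

    Returns the number of sentence pairs; raises ValueError on a mismatch.
    """
    n_src = count_lines(src_path)
    n_tgt = count_lines(tgt_path)
    if n_src != n_tgt:
        raise ValueError(f"{src_path} has {n_src} lines but {tgt_path} has {n_tgt}")
    return n_src
```

From inside the `opennmt` folder you might call `check_parallel("data/src-train.txt", "data/tgt-train.txt")`, and likewise for the `-val` files, before running `onmt_preprocess`.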

For advanced instructions on the training process, refer to OpenNMT docs.
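One option worth knowing for the "(with GPUs)" step: as we understand the OpenNMT-py 1.x quickstart, `onmt_train` runs on CPU unless GPUs are requested with `-world_size` and `-gpu_ranks` (for example `-world_size 1 -gpu_ranks 0` for a single GPU). The sketch below builds both commands as argument lists so they can be launched from Python; `preprocess_cmd` and `train_cmd` are hypothetical helpers, and the flags should be confirmed against the docs for the OpenNMT version you installed.

```python
from typing import List, Optional


def preprocess_cmd(data_dir: str = "data", save_name: str = "demo") -> List[str]:
    """Build the onmt_preprocess command from the steps above as an argument list."""
    return [
        "onmt_preprocess",
        "-train_src", f"{data_dir}/src-train.txt",
        "-train_tgt", f"{data_dir}/tgt-train.txt",
        "-valid_src", f"{data_dir}/src-val.txt",
        "-valid_tgt", f"{data_dir}/tgt-val.txt",
        "-save_data", f"{data_dir}/{save_name}",
    ]


def train_cmd(
    data_prefix: str = "data/demo",
    model_name: str = "demo-model",
    gpu_ranks: Optional[List[int]] = None,
) -> List[str]:
    """Build the onmt_train command. If gpu_ranks is given, append -world_size and
    -gpu_ranks (OpenNMT-py 1.x GPU options, per our reading of its docs)."""
    cmd = ["onmt_train", "-data", data_prefix, "-save_model", model_name]
    if gpu_ranks:
        # One process per listed GPU rank.
        cmd += ["-world_size", str(len(gpu_ranks)), "-gpu_ranks", *map(str, gpu_ranks)]
    return cmd
```

For example, `subprocess.run(train_cmd(gpu_ranks=[0]), cwd="opennmt", check=True)` would train on the first GPU, with the relative `data/` paths resolved inside the `opennmt` folder.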

Citation

If you find this work helpful, especially for research, please consider citing us:

@inproceedings{santy-etal-2019-inmt,
  title = "{INMT}: Interactive Neural Machine Translation Prediction",
  author = "Santy, Sebastin and Dandapat, Sandipan and Choudhury, Monojit and Bali, Kalika",
  booktitle = "Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP): System Demonstrations",
  month = nov,
  year = "2019",
  address = "Hong Kong, China",
  publisher = "Association for Computational Linguistics",
  url = "https://www.aclweb.org/anthology/D19-3018",
  doi = "10.18653/v1/D19-3018",
  pages = "103--108",
}

Contributing

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

When you submit a pull request, a CLA bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.