
| File |
|---|
| .ci |
| .gitignore |
| 00_Data_Prep.ipynb |
| 01_Training_Script.ipynb |
| 02_Testing_Script.ipynb |
| 03_Run_Locally.ipynb |
| 04_Hyperparameter_Random_Search.ipynb |
| 05_Train_Best_Model.ipynb |
| 06_Test_Best_Model.ipynb |
| 07_Train_With_AML_Pipeline.ipynb |
| 08_Tear_Down.ipynb |
| Design.png |
| LICENSE |
| README.md |
| azure-pipelines.yml |
| environment.yml |
| get_auth.py |
| text_utilities.py |

README.md

Author: Mario Bourgoin

Training of Python scikit-learn models on Azure

Overview

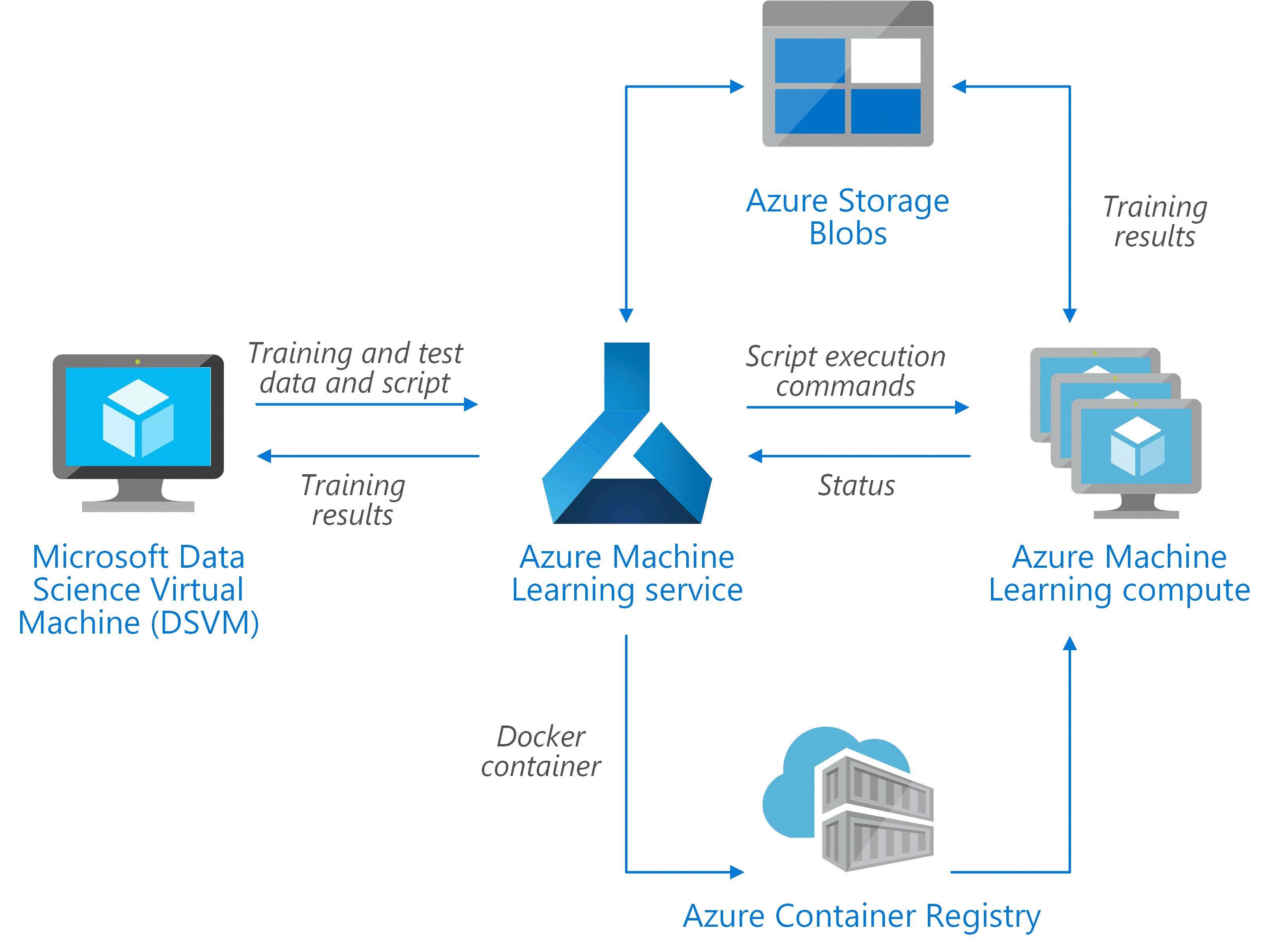

This scenario shows how to tune a Frequently Asked Questions (FAQ) matching model that can be deployed as a web service to provide predictions for user questions. For this scenario, "Input Data" in the architecture diagram refers to text strings containing the user questions to match with a list of FAQs. The scenario is designed for the scikit-learn machine learning library for Python but can be generalized to any scenario that uses Python models to make real-time predictions.
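The matching step works like this: score the user question against every FAQ, then rank the FAQs by that score. The sketch below illustrates the idea. It uses a hypothetical `jaccard_score` word-overlap scorer as a stand-in. It is not the tuned scikit-learn pipeline this scenario produces. In the real scenario, the pipeline's predicted match probability would take the scorer's place.

```python
from typing import Callable, List, Tuple


def tokenize(text: str) -> set:
    """Lowercase the text and split it on whitespace into a set of words."""
    return set(text.lower().split())


def jaccard_score(question: str, faq: str) -> float:
    """Hypothetical stand-in scorer: word-overlap (Jaccard) similarity in [0, 1]."""
    a, b = tokenize(question), tokenize(faq)
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def rank_faqs(
    question: str,
    faqs: List[str],
    score: Callable[[str, str], float] = jaccard_score,
    top_k: int = 3,
) -> List[Tuple[str, float]]:
    """Score the question against every FAQ and return the top_k by descending score."""
    scored = [(faq, score(question, faq)) for faq in faqs]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    faqs = [
        "How do I remove an element from an array in JavaScript",
        "How do I check if a string contains a substring",
        "What does use strict do in JavaScript",
    ]
    for faq, s in rank_faqs("remove element from JavaScript array", faqs):
        print(f"{s:.2f}  {faq}")
```

The scorer is passed in as a parameter. That way the ranking logic stays the same when the placeholder is swapped for a trained model's probability output.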

Design

The scenario uses a subset of Stack Overflow question data that includes original questions tagged as JavaScript, their duplicate questions, and their answers. It tunes a scikit-learn pipeline to predict the match probability of a duplicate question with each of the original questions. The application flow for this architecture is as follows:

- Create an Azure ML Service workspace.

- Create an Azure ML Compute cluster.

- Upload training, tuning, and testing data to Azure Storage.

- Configure a HyperDrive random hyperparameter search.

- Submit the search.

- Monitor until complete.

- Retrieve the best set of hyperparameters.

- Register the best model.
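HyperDrive runs the search on Azure ML Compute, but the idea behind a random hyperparameter search can be sketched locally with the standard library:

- Sample hyperparameter combinations from a search space.
- Evaluate each combination.
- Keep the combination with the best primary metric.

The search space, the parameter names (`alpha`, `ngram_max`), and the `toy_evaluate` function below are hypothetical placeholders. They are not this scenario's actual pipeline or the HyperDrive API.

```python
import math
import random
from typing import Callable, Dict, List, Tuple

Params = Dict[str, object]

# Hypothetical search space: each parameter name maps to a function that samples a value.
SEARCH_SPACE: Dict[str, Callable[[random.Random], object]] = {
    "alpha": lambda rng: 10 ** rng.uniform(-4, 0),   # log-uniform between 1e-4 and 1
    "ngram_max": lambda rng: rng.choice([1, 2, 3]),  # discrete choice
}


def sample_params(rng: random.Random) -> Params:
    """Draw one random combination of hyperparameter values from SEARCH_SPACE."""
    return {name: sampler(rng) for name, sampler in SEARCH_SPACE.items()}


def random_search(
    evaluate: Callable[[Params], float], n_runs: int = 20, seed: int = 0
) -> Tuple[Params, float, List[Tuple[Params, float]]]:
    """Evaluate n_runs random combinations and return the best (higher metric wins)."""
    rng = random.Random(seed)
    history: List[Tuple[Params, float]] = []
    for _ in range(n_runs):
        params = sample_params(rng)
        history.append((params, evaluate(params)))
    best_params, best_metric = max(history, key=lambda run: run[1])
    return best_params, best_metric, history


def toy_evaluate(params: Params) -> float:
    """Hypothetical metric that peaks at alpha = 0.01 and ngram_max = 2."""
    return -abs(math.log10(params["alpha"]) + 2) - abs(params["ngram_max"] - 2)


if __name__ == "__main__":
    best, metric, runs = random_search(toy_evaluate, n_runs=50)
    print(f"best of {len(runs)} runs: {best} (metric {metric:.3f})")
```

Each sample is drawn independently of the others. This is why a random search parallelizes easily across a compute cluster. In the hosted setting, each evaluation roughly corresponds to one training run that logs the primary metric.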

Prerequisites

- Linux (Ubuntu).

- Anaconda Python installed.

- Azure account.

The tutorial was developed on an Azure Ubuntu Data Science Virtual Machine (DSVM), which satisfies the first two prerequisites. You can allocate such a VM in the Azure portal by creating a "Data Science Virtual Machine for Linux (Ubuntu)" resource.

Setup

To set up your environment to run these notebooks, follow these steps. They configure the notebooks to use Azure seamlessly.

- Create a Linux Ubuntu VM.

- Log in to your VM. We recommend that you use a graphical client such as X2Go to access your VM. The remaining steps are to be done on the VM.

- Open a terminal emulator.

- Clone, fork, or download the zip file for this repository:

  `git clone https://github.com/Microsoft/MLHyperparameterTuning.git`

- Enter the local repository:

  `cd MLHyperparameterTuning`

- Create the Python MLHyperparameterTuning virtual environment using the environment.yml file:

  `conda env create -f environment.yml`

- Activate the virtual environment. The remaining steps should be done in this virtual environment.

  `source activate MLHyperparameterTuning`

- Log in to Azure:

  `az login`

  You can verify that you are logged in to your subscription by executing the command:

  `az account show -o table`

- If you have more than one Azure subscription, select it:

  `az account set --subscription <Your Azure Subscription>`

- Start the Jupyter notebook server:

  `jupyter notebook`
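Before opening the notebooks, you may want to confirm that the setup steps took effect. The sketch below is a hypothetical helper, not part of this repository, that uses only the standard library. It checks two things:

- The `MLHyperparameterTuning` conda environment is active. Conda sets the `CONDA_DEFAULT_ENV` environment variable on activation.
- The `conda`, `az`, and `jupyter` commands can be found on the `PATH`.

```python
import os
import shutil
from typing import Dict, Mapping, Optional

EXPECTED_ENV = "MLHyperparameterTuning"
REQUIRED_COMMANDS = ("conda", "az", "jupyter")


def check_setup(environ: Optional[Mapping[str, str]] = None) -> Dict[str, bool]:
    """Return a mapping from each check's name to whether it passed."""
    environ = os.environ if environ is None else environ
    results = {"conda env active": environ.get("CONDA_DEFAULT_ENV") == EXPECTED_ENV}
    search_path = environ.get("PATH", "")
    for command in REQUIRED_COMMANDS:
        # shutil.which returns None when the command is not found on the given PATH.
        results[f"{command} on PATH"] = shutil.which(command, path=search_path) is not None
    return results


if __name__ == "__main__":
    for check, passed in check_setup().items():
        print(f"[{'ok' if passed else 'MISSING'}] {check}")
```

The function accepts an explicit environment mapping so it can be exercised without touching the real shell environment.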

Steps

After following the setup instructions above, run the Jupyter notebooks in order starting with 00_Data_Prep.ipynb.
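The notebooks are numbered, so their run order follows from their file names. The sketch below assumes you want to run them non-interactively rather than from the Jupyter UI. It sorts the numbered notebooks by prefix and, only when `run_all` is called, executes each one with papermill's `execute_notebook`. Papermill is not part of the setup steps above and may need to be installed separately. The `output` directory name is a hypothetical choice. Because `08_Tear_Down.ipynb` is the clean-up step, the sketch stops before it by default.

```python
import re
from pathlib import Path
from typing import Iterable, List, Optional

# Notebook names start with a two-digit step number, e.g. "00_Data_Prep.ipynb".
NOTEBOOK_PATTERN = re.compile(r"^(\d{2})_.+\.ipynb$")


def ordered_notebooks(names: Iterable[str]) -> List[str]:
    """Keep only numbered notebooks and sort them by their numeric prefix."""
    numbered = [n for n in names if NOTEBOOK_PATTERN.match(n)]
    return sorted(numbered, key=lambda n: int(NOTEBOOK_PATTERN.match(n).group(1)))


def run_all(repo_dir: str = ".", output_dir: str = "output",
            stop_before: Optional[str] = "08") -> None:
    """Execute the notebooks in order with papermill, stopping at `stop_before`."""
    import papermill as pm  # imported lazily: only needed when actually executing

    out = Path(output_dir)
    out.mkdir(exist_ok=True)
    names = [p.name for p in Path(repo_dir).glob("*.ipynb")]
    for name in ordered_notebooks(names):
        if stop_before is not None and name.startswith(stop_before):
            break
        pm.execute_notebook(str(Path(repo_dir) / name), str(out / name))
```

Writing each executed copy to a separate directory leaves the original notebooks unchanged.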

Cleaning up

The last Jupyter notebook, 08_Tear_Down.ipynb, describes how to delete the Azure resources created for running the tutorial. Consult the conda documentation for information on how to remove the conda environment created during setup. If you created a VM, you may also delete it.
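Two local clean-up steps remain, and each has a standard command:

- Remove the conda environment with `conda env remove --name MLHyperparameterTuning`.
- Delete a VM with the Azure CLI's `az vm delete`.

The sketch below assembles those commands and, by default, only prints them as a dry run. The resource group and VM names are hypothetical placeholders to replace with your own.

```python
import shlex
import subprocess
from typing import List

CONDA_ENV = "MLHyperparameterTuning"


def cleanup_commands(resource_group: str, vm_name: str, delete_vm: bool = True) -> List[List[str]]:
    """Build the clean-up commands as argument lists (nothing is executed here)."""
    commands = [["conda", "env", "remove", "--name", CONDA_ENV]]
    if delete_vm:
        commands.append(
            ["az", "vm", "delete", "--resource-group", resource_group, "--name", vm_name, "--yes"]
        )
    return commands


def run_cleanup(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(("DRY RUN: " if dry_run else "RUN: ") + shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical names: replace with the resource group and VM you created.
    run_cleanup(cleanup_commands("my-resource-group", "my-dsvm"))
```

Run this from outside the `MLHyperparameterTuning` environment, because conda will not remove the currently active environment. Also note that `az vm delete` removes the VM itself. Associated resources such as disks or network interfaces may need to be deleted separately.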

Contributing

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.microsoft.com.

When you submit a pull request, a CLA-bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., label, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.